Windows Azure and Cloud Computing Posts for 7/15/2013+

Top Stories This Week:

- Luis Panzano (@luispanzano) reported Remote Desktop Services are now allowed on Windows Azure in a 7/15/2013 blog post (see the Windows Azure Infrastructure and DevOps section below).

A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

‡ Updated 7/20/2013 with new articles marked ‡.

• Updated 7/18/2013 with new articles marked •.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, and Identity

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

- Visual Studio LightSwitch and Entity Framework v4+

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

‡ Ross Gardler (@rgardler) reported MS Open Tech early contributor to open source dash.js community to accelerate advanced video streaming through MPEG-DASH standard in a 7/16/2013 post:

MPEG-DASH is key to the future of online video as it is the latest ISO standard for Internet streaming. Over 75% of surveyed European broadcasters are planning to adopt the standard by the middle of 2014. To accelerate this new media technology, Microsoft Open Technologies, Inc. (MS Open Tech) recently contributed code to a new open source project called dash.js that will deliver an MPEG-DASH video player for Internet Explorer 11 and other browsers.

In June, at the //BUILD/ conference, Netflix demonstrated MPEG-DASH playback in Internet Explorer 11 (IE11) without any browser plugins. IE11 was also shown playing MPEG-DASH content using the dash.js JavaScript player. This marked a significant step forward in facilitating cross-platform content streaming since it removes the need to develop proprietary plugins for each platform. Using the dash.js code, developers can more easily build browser-based MPEG-DASH players, which directly addresses broadcasters' concerns about a lack of MPEG-DASH players.

MS Open Tech contributions

Dash.js is an early stage community project, initiated by members of the DASH-IF, to build a cross-platform video player that is compliant with the DASH-AVC/264 Implementation Guidelines. In the latest release of dash.js, our work focused on features that stabilize the player and its ability to stream content in browsers that support Media Source Extensions (MSE), a W3C specification that allows JavaScript to generate media streams for playback.

As a part of this work, the essential on-demand video streaming operations such as seek, play and pause have been refactored to address potential incompatibilities between different browser implementations. We have also put considerable effort into improving the development process by providing a better test infrastructure. This makes it easier for third parties to participate in the project and further enhance the player.

Building the developer ecosystem

MS Open Tech contributions, when combined with the work of other community members, have enabled plugin-free video streaming in IE11 and other browsers supporting MSE, and we look forward to continuing to play our part in delivering on the promise of MPEG-DASH. To be one of the first to experience the future of Internet video, install the Windows 8.1 preview and take a look at the dash.js project. Your feedback is important to us, and as part of the dash.js project community we welcome all forms of input.

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

Craig Kitterman (@craigkitterman) posted What do cloud and mobile have in common? by Olivier Bloch (@obloch, pictured below) on 7/15/2013:

Editor's Note: This post comes from Olivier Bloch, Senior Technical Evangelist for the MS Open Tech Team.

Besides being two of the most heavily used buzzwords of 2013, cloud and mobile also have Microsoft Open Technologies, Inc. (MS Open Tech) in common. MS Open Tech is a wholly owned subsidiary of Microsoft that strives to bridge open source technologies with Microsoft technologies, including Windows Azure. Over the last several months, MS Open Tech has partnered with Windows Azure Mobile Services to enable developers utilizing open source technologies to deliver new cloud-based experiences in mobile and web apps across devices.

In March, MS Open Tech built the Android SDK for Windows Azure Mobile Services to complement the support for Windows Store, Windows Phone, iOS and HTML5 apps. That SDK gave Android developers access to a range of advanced cloud-based services for storage, authentication and notifications. MS Open Tech also contributed the Android SDK for the Windows Azure Notification Hubs, which lets you broadcast push notifications to millions of devices across platforms from almost any backend hosted in Windows Azure.

Recently, MS Open Tech also created a Backbone adapter for Windows Azure Mobile Data Service, letting you seamlessly sync your data with the cloud using your usual favorite Backbone APIs. With this update, developers using the popular JavaScript framework Backbone.js to structure their web apps need only add a couple of values indicating the table name and location to offer their customers brand new experiences leveraging the cloud.

We’re excited to continue with these projects, but also hope to bring a few more exciting updates shortly. Feel free to email MS Open Tech or leave a comment to suggest other open source technologies supporting Windows Azure.

<Return to section navigation list>

Windows Azure Marketplace DataMarket, Cloud Numerics, Big Data and OData

<Return to section navigation list>

Windows Azure Service Bus, BizTalk Services and Workflow

Abishek Lal announced the availability of Service Bus Durable Task Framework Preview - Samples in a 6/27/2013 post (missed when published):

The Service Bus Durable Task Framework (Preview) provides developers a means to write code orchestrations in C# using the .NET Task framework and the async/await keywords added in .NET 4.5. Samples included here showcase Timer, Sequence, Error Handling and Signals.

Download here.

Introduction

The Service Bus Durable Task Framework provides developers a means to write code orchestrations in C# using the .NET Task framework and the async/await keywords added in .NET 4.5. Here are the key features of the Durable Task Framework:

- Definition of code orchestrations in simple C# code

- Automatic persistence and check-pointing of program state

- Versioning of orchestrations and activities

- Async timers, orchestration composition, user-aided checkpointing

The framework itself is very lightweight and only requires an Azure Service Bus namespace and optionally an Azure Storage account. Running instances of the orchestration and worker nodes are completely hosted by the user. No user code is executing ‘inside’ Service Bus.

Building the Sample

- Create a Service Bus Namespace (steps here)

- Copy the connection string and paste it into the app.config file

- Build and Run the sample

Service Bus Durable Task Framework (Preview)

The Service Bus Durable Task Framework allows users to write C# code and encapsulate it within ‘durable’ .NET Tasks. These durable tasks can then be composed with other durable tasks to build complex task orchestrations.

Core Concepts

There are a few fundamental concepts in the framework.

Task Hub

The Task Hub is a logical container for Service Bus entities within a namespace. These entities are used by the Task Hub Worker to pass messages reliably between the code orchestrations and the activities that they are orchestrating.

Task Activities

Task Activities are pieces of code that perform specific steps of the orchestration. A Task Activity can be ‘scheduled’ from within some Task Orchestration code. This scheduling yields a plain vanilla .NET Task which can be (asynchronously) awaited and composed with other similar Tasks to build complex orchestrations.

Task Orchestrations

Task Orchestrations schedule Task Activities and build code orchestrations around the Tasks that represent the activities.

Task Hub Worker

The worker is the host for Task Orchestrations and Activities. It also contains APIs to perform CRUD operations on the Task Hub itself.

Task Hub Client

The Task Hub Client provides:

- APIs for creating and managing Task Orchestration instances

- APIs for querying the state of Task Orchestration instances from an Azure Table

Both the Task Hub Worker and Task Hub Client are configured with connection strings for Service Bus and optionally with connection strings for a storage account. Service Bus is used for storing the control flow state of the execution and for message passing between the task orchestration instances and task activities. However, Service Bus is not meant to be a database, so when a code orchestration is completed, the state is removed from Service Bus. If an Azure Table storage account is configured, this state remains available for querying for as long as the user keeps it there.

The framework provides TaskOrchestration and TaskActivity base classes which users can derive from to specify their orchestrations and activities. They can then use the TaskHub APIs to load these orchestrations and activities into the process and then start the worker, which starts processing requests for creating new orchestration instances. The TaskHubClient APIs are used to create new orchestration instances, query for existing instances and then terminate those instances if required.

Code Example

Video Encoding Orchestration

Assume that a user wants to build a code orchestration that will encode a video and then send an email to the user when it is done. To implement this using the Service Bus Durable Task Framework, the user will write two Task Activities, one for encoding a video and one for sending email, and one Task Orchestration that orchestrates between these two.

Source code elided for brevity.
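Since the original C# sample is elided, the shape of the orchestration can still be illustrated with a rough analogy. The sketch below uses Python's asyncio rather than the Durable Task Framework, so it has none of the framework's persistence, checkpointing or Service Bus messaging; the function names, file names and email address are hypothetical and do not come from the sample.

```python
import asyncio

# Hypothetical stand-in for the "encode video" Task Activity.
async def encode_video(source: str) -> str:
    await asyncio.sleep(0)  # placeholder for the real encoding work
    return source.rsplit(".", 1)[0] + ".mp4"

# Hypothetical stand-in for the "send email" Task Activity.
async def send_email(recipient: str, body: str) -> str:
    await asyncio.sleep(0)  # placeholder for the real mail call
    return f"to={recipient}: {body}"

# Stand-in for the Task Orchestration: await one activity, then the next.
async def encode_and_notify(source: str, recipient: str) -> str:
    encoded = await encode_video(source)
    return await send_email(recipient, f"Encoded {encoded}")

result = asyncio.run(encode_and_notify("clip.avi", "user@example.com"))
print(result)
```

The point of the analogy is only the composition: each activity yields an awaitable, and the orchestration is ordinary sequential code that awaits them in turn. In the framework described above, the awaited state would additionally survive process restarts.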

<Return to section navigation list>

Windows Azure Access Control, Active Directory, Identity and Workflow

• J. Peter Bruzzese (@JPBruzzese) asserted “Two new Microsoft services make Windows Azure a serious competitor to Amazon Web Services” as a deck for his Active Directory heads to the cloud: What it does and doesn't do article of 2/17/2013 for InfoWorld’s Enterprise Windows blog:

Windows Azure has been evolving steadily since its release in spring 2010, its pricing is now fairer, and useful third-party add-ons can be found in the Windows Azure Store. Today, Windows Azure can be used not only in the development and testing environments for which it was originally geared, but in production enterprise environments as well.

Two new services that make Azure ready for production enterprise environments are Windows Azure Infrastructure Services and Windows Azure Active Directory. They provide a good excuse to take a second look at using Azure instead of Amazon Web Services.

Windows Azure Infrastructure Services helps you move existing apps and infrastructure to the cloud. For example, if you have an on-premises VM on Hyper-V as a .vhd file, you can use this tool to move that .vhd to the cloud. Or if you have a VMware VM, you can convert it for use on Azure and upload it. You can also build your own images or choose from preconfigured ones, such as a SharePoint Server farm or SQL Server support.

To extend your on-premises Active Directory to the cloud, such as when using Azure beyond isolated dev and test instances, you can use Windows Azure Active Directory to connect to servers running on Azure or to bridge the gap to Office 365. You create a hybrid Active Directory forest with domain controllers both on premises and in the cloud, so you can sync identities and authenticate users across them.

IT admins have long extended their Active Directory to external data centers; the ability to extend to Azure is a new development. Just make sure you have DNS server connectivity and VPN connectivity between your on-premises and cloud-based networks.

However, there are on-premises Active Directory features not available to Azure Active Directory, such as the widely used Group Policy. Currently, only Access Control Services is supported to federate identities between Azure Active Directory and on-premises Active Directory, as well as with other established identity management providers like Google and Facebook. The limited features in Azure Active Directory provide room for third-party assistance.


Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

‡ Craig Kitterman (@craigkitterman, pictured below) posted Stefan Schackow’s Windows Azure Web Sites: How Application Strings and Connection Strings Work article on 7/17/2013:

Editor's Note: This post comes from Stefan Schackow, Principal Program Manager Lead for the Windows Azure Web Sites Team.

Windows Azure Web Sites has a handy capability whereby developers can store key-value string pairs in Azure as part of the configuration information associated with a website. At runtime, Windows Azure Web Sites automatically retrieves these values for you and makes them available to code running in your website. Developers can store plain vanilla key-value pairs as well as key-value pairs that will be used as connection strings.

Since the key-value pairs are stored behind the scenes in the Windows Azure Web Sites configuration store, the key-value pairs don’t need to be stored in the file content of your web application. From a security perspective that is a nice side benefit since sensitive information such as SQL connection strings with passwords never shows up as cleartext in a web.config or php.ini file.

You can enter key-value pairs from the “Configure” tab for your website in the Azure portal. The screenshot below shows the two places on this tab where you can enter keys and associated values:

You can enter key-value pairs as either “app settings” or “connection strings”. The only difference is that a connection string includes a little extra metadata telling Windows Azure Web Sites that the string value is a database connection string. That can be useful for downstream code running in a website to special case some behavior for connection strings.

Retrieving Key-Value Pairs as Environment Variables

Once a developer has entered key-value pairs for their website, the data can be retrieved at runtime by code running inside of a website.

The most generic way that Windows Azure Web Sites provides these values to a running website is through environment variables. For example, using the data shown in the earlier screenshot, the following is a code snippet from ASP.NET that dumps out the data using environment variables:

Here is what the example page output looks like from the previous code snippet:

[Note: The interesting parts of the SQL connection string are intentionally blanked out in this post with asterisks. However, at runtime rest assured you will retrieve the full connection string including server name, database name, user name, and password.]

Since the key-value pairs for both “app settings” and “connection strings” are stored in environment variables, developers can easily retrieve these values from any of the web application frameworks supported in Windows Azure Web Sites. For example, the following is a code snippet showing how to retrieve the same settings using PHP:

From the previous examples you will have noticed a naming pattern for referencing the individual keys. For “app settings” the name of the corresponding environment variable is prepended with “APPSETTING_”.

For “connection strings”, there is a naming convention used to prepend the environment variable depending on the type of database you selected in the databases dropdown. The sample code is using “SQLAZURECONNSTR_” since the connection string that was configured had “Sql Databases” selected in the dropdown.

The full list of database connection string types and the prepended string used for naming environment variables is shown below:

- If you select “Sql Databases”, the prepended string is “SQLAZURECONNSTR_”

- If you select “SQL Server”, the prepended string is “SQLCONNSTR_”

- If you select “MySQL”, the prepended string is “MYSQLCONNSTR_”

- If you select “Custom”, the prepended string is “CUSTOMCONNSTR_”
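The naming convention above can be sketched in a few lines of Python, which simply strips the documented prefixes from environment variable names. This is a minimal illustration, not code from the post: the function name read_site_config and the sample values are hypothetical, while the prefixes and the key names “some-key-here” and “example-config_db” are the ones used in the article.

```python
import os

APP_SETTING_PREFIX = "APPSETTING_"
# Prefix -> database type selected in the portal dropdown (from the list above).
CONN_STRING_PREFIXES = {
    "SQLAZURECONNSTR_": "Sql Databases",
    "SQLCONNSTR_": "SQL Server",
    "MYSQLCONNSTR_": "MySQL",
    "CUSTOMCONNSTR_": "Custom",
}

def read_site_config(environ=None):
    """Split environment variables into app settings and connection strings."""
    env = os.environ if environ is None else environ
    app_settings, conn_strings = {}, {}
    for name, value in env.items():
        if name.startswith(APP_SETTING_PREFIX):
            app_settings[name[len(APP_SETTING_PREFIX):]] = value
            continue
        for prefix, db_type in CONN_STRING_PREFIXES.items():
            if name.startswith(prefix):
                conn_strings[name[len(prefix):]] = (db_type, value)
                break
    return app_settings, conn_strings

# Placeholder values; a real site would supply these through the portal.
sample_env = {
    "APPSETTING_some-key-here": "placeholder-value",
    "SQLAZURECONNSTR_example-config_db": "placeholder-connection-string",
    "PATH": "/usr/bin",
}
settings, conns = read_site_config(sample_env)
print(settings)
print(conns)
```

Passing a dictionary makes the sketch easy to try locally; calling read_site_config() with no argument reads the real process environment. Note that Python on Windows upper-cases the keys of os.environ, so key names recovered that way may not match the casing entered in the portal.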

Retrieving Key-Value Pairs in ASP.NET

So far we have shown how the key-value pairs entered in the portal flow through to a web application via environment variables. For ASP.NET web applications, there is some extra runtime magic that is available as well. If the names of the different key-value types look familiar to a .NET developer, that is intentional. “App settings” neatly map to the .NET Framework’s appSettings configuration. Similarly, “connection strings” correspond to the .NET Framework’s connectionStrings collection.

Here is another ASP.NET code snippet showing how an app setting can be referenced using System.Configuration types:

Notice how the value entered into the portal earlier just automatically appears as part of the AppSettings collection. If the application setting(s) happen to already exist in your web.config file, Windows Azure Web Sites will automatically override them at runtime using the values associated with your website.

Connection strings work in a similar fashion, with a small additional requirement. Remember from earlier that there is a connection string called “example-config_db” that has been associated with the website. If the website’s web.config file references the same connection string in the <connectionStrings /> configuration section, then Windows Azure Web Sites will automatically update the connection string at runtime using the value shown in the portal.

However, if Windows Azure Web Sites cannot find a connection string with a matching name from the web.config, then the connection string entered in the portal will only be available as an environment variable (as shown earlier).

As an example, assume a web.config entry like the following:

A website can reference this connection string in an environment-agnostic fashion with the following code snippet:

When this code runs on a developer’s local machine, the value returned will be the one from the web.config file. However when this code runs in Windows Azure Web Sites, the value returned will instead be overridden with the value entered in the portal:

This is a really useful feature since it neatly solves the age-old developer problem of ensuring the correct connection string information is used by an application regardless of where the application is deployed.
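The override rules described in this section can be modeled with plain dictionaries. The sketch below is only a model of the behavior as the post describes it, not how Windows Azure Web Sites implements it; the function name effective_config and the connection string values are hypothetical.

```python
def effective_config(web_config_app_settings, web_config_conn_strings,
                     portal_app_settings, portal_conn_strings):
    """Model what an ASP.NET app would see after portal values are applied."""
    # App settings: portal values appear in the collection and win on conflict.
    app_settings = {**web_config_app_settings, **portal_app_settings}

    # Connection strings: only names already declared in web.config are
    # overridden; the rest stay reachable only as environment variables.
    conn_strings = dict(web_config_conn_strings)
    env_only = {}
    for name, value in portal_conn_strings.items():
        if name in conn_strings:
            conn_strings[name] = value
        else:
            env_only[name] = value
    return app_settings, conn_strings, env_only

app, conn, env_only = effective_config(
    {"some-key-here": "local-value"},
    {"example-config_db": "local-connection-string"},
    {"some-key-here": "changed-this-value", "some-other-key": "a-different-value"},
    {"example-config_db": "portal-connection-string",
     "not-in-web-config": "portal-only-connection-string"},
)
print(app)
print(conn)
print(env_only)
```

The asymmetry is the part worth remembering: an app setting entered in the portal always shows up in the settings collection, while a connection string needs a matching placeholder entry in web.config before it is materialized there.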

Configuring Key-Value Pairs from the Command-line

As an alternative to maintaining app settings and connection strings in the portal, developers can also use either the PowerShell cmdlets or the cross-platform command line tools to retrieve and modify both types of key-value pairs.

For example, the following PowerShell commands define a hashtable of multiple app settings, and then store them in Azure using the Set-AzureWebsite cmdlet, associating them with a website called “example-config”.

Running the following code snippet in ASP.NET shows that the value for the original app setting (“some-key-here”) has been updated, and a second key-value pair (“some-other-key”) has also been added.

Sample HTML output showing the changes taking effect:

some-other-key <--> a-different-value

some-key-here <--> changed-this-value

Updating connection strings works in a similar fashion, though the syntax is slightly different since internally the PowerShell cmdlets handle connection strings as a List<T> of ConnStringInfo objects (Microsoft.WindowsAzure.Management.Utilities.Websites.Services.WebEntities.ConnStringInfo to be precise).

The following PowerShell commands show how to define a new connection string, add it to the list of connection strings associated with the website, and then store all of the connection strings back in Azure.

Note that for the property $cs.Type, you can use any of the following strings to define the type: “Custom”, “SQLAzure”, “SQLServer”, and “MySql”.

Running the following code snippet in ASP.NET lists out all of the connection strings for the website.

Remember though that for Windows Azure Web Sites to override a connection string and materialize it in the .NET Framework’s connection string configuration collection, the connection string must already be defined in the web.config. For this example website, the web.config has been updated as shown below:

Now when the ASP.NET page is run, it shows that both connection string values have been overridden using the values stored via PowerShell (sensitive parts of the first connection string are intentionally blanked out with asterisks):

Summary

You have seen in this post how to easily associate simple key-value pairs of configuration data with your website, and retrieve them at runtime as environment variables. With this functionality web developers can safely and securely store configuration data without having this data show up as clear-text in a website configuration file. And if you happen to be using ASP.NET, there is a little extra configuration “magic” that also automatically materializes the values as values in the .NET AppSettings and ConnectionStrings configuration collections.


<Return to section navigation list>

Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

No significant articles today

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

‡ David Linthicum (@DavidLinthicum) asserted “There's no sign that innovation will accelerate -- but a real risk Azure will lose momentum as reorg chases out employees” in a deck for his Windows Azure users, be afraid of Microsoft's shake-up post of 7/16/2013 for InfoWorld’s Cloud Computing blog:

Microsoft is changing -- or at least reorganizing. In my experience, a company reorganizes when it isn't doing well, so it plays three-card monte in front of the press to distract from those woes. In the end, a reorg typically makes no changes to the culture, solves no problems, and confuses and disenchants both employees and customers. But it's a trick that public companies play all the time.

In Microsoft's reorg, who gets Windows Azure? The winner: Satya Nadella. As CITEworld's Matt Rosoff notes, "Nadella has been running Microsoft's fastest-growing business unit, Server and Tools, since 2011, when longtime veteran Bob Muglia left. Before that, he oversaw Bing engineering and tools and services for small businesses. He now runs back-end development and engineering for services, including Microsoft's data center, and retains control of the Azure business."

If you're a so-called Microsoft shop and/or have moved to Azure, you should be concerned by the reorg for a few reasons.

First, it does not appear that the level of innovation will rise at Microsoft, specifically around its PaaS and IaaS offerings. Microsoft has been playing follow the leader -- specifically, Amazon Web Services, which is right down the street from Microsoft's campus -- when Microsoft really should be innovating to be the leader. However, doing so takes creativity, an attribute Microsoft has been lacking. (Look no further than the Surface for proof.)

Second, it takes months, sometimes years to recover from reorgs, even good ones, in terms of realigning the resources to be most effective. I suspect there will be a ton of resignations at Microsoft three to six months after the reorg is complete, with many Azure staff members finding their way to Amazon Web Services (which is hiring). Product-delivery issues will result, affecting Azure features and updates.

Azure needs some TLC if it wants to hold its position in the market. Many enterprises need Azure to succeed, which means many need Microsoft as a whole to succeed. Will Azure get the innovation and attention it requires? That's not clear. What's clear is that it's not the Microsoft I knew in the 1990s.

Luis Panzano (@luispanzano) reported Remote Desktop Services are now allowed on Windows Azure in a 7/15/2013 post:

I’ve not seen a lot of news about this, so I thought it was worth writing a short post to remind everyone that on July 1st Microsoft officially changed the Windows Azure licensing terms (PUR) to allow the use of Remote Desktop Services (RDS) on Windows Azure Virtual Machines. Previously this scenario was not allowed in Windows Azure. Before July 1st you could only access an Azure Windows Server VM for purposes of server administration or maintenance (up to 2 simultaneous sessions are authorized for this service).

Let’s see some details about this change:

To enable more than 2 simultaneous sessions you will need to purchase RDS Subscriber Access Licenses (SALs) through the Microsoft Services Provider Licensing Agreement (SPLA) for each user or device that will access your solution on Windows Azure. SPLA is separate from an Azure agreement and is contracted through an authorized SPLA reseller. Click here for more information about SPLA benefits and requirements.

RDS Client Access Licenses (CALs) purchased from Microsoft VL programs such as EA do not get license mobility to shared cloud platforms, hence they cannot be used on Azure.

Windows ‘Client’ OS (e.g. Windows 8) virtual desktops, or VDI deployments, will continue to not be allowed on Azure, because Windows client OS product use rights prohibit such use on multi-tenant/shared cloud environments.

Customers can use 3rd party application hosting products that require RDS sessions functionality (e.g. Citrix XenDesktop), subject to product use terms set by those 3rd party providers, and provided these products leverage only RDS session-hosting (Terminal Services) functionality. Note that RDS SALs are still required when using these 3rd party products.

The new licensing terms have been updated in the latest Microsoft Product Use Rights docs and in the Windows Azure Licensing FAQ.

So if you are a service provider with a legacy application that needs RDS to work (e.g., a WinForms-based solution), you can now offer it to your customers on Windows Azure.

The licensing requirements are bizarre, to be generous. CALs are the obvious choice for Windows Azure users. I’ve received price and availability information for RDS SPLA + SALs from Ingram Micro’s SPLA Team after making it clear that I had an “ISV client who’s interested in hosting a legacy Windows desktop app in Windows Azure Virtual Machines.” The terms and conditions in the form letter that the SPLA Team sent me included the following:

SPLA Hosting Model:

Through SPLA, Ingram would be your reseller as you are seen as the End-user. Server hardware MUST be owned, rented, or leased by the SPLA partner (i.e. your company); your customer cannot own the hardware. Server software does not require a purchase in SPLA.

Service providers can provide software services that interact with Microsoft licensed products to their customers. However, the service provider is the licensee, not the customer. This licensing model is for a partner that wishes to set up their own datacenter and host a solution for their customer. [Emphasis added.]

In response to a query, a member of Ingram’s SPLA Team offered the following advice:

You can have the Windows Server outsourced for you. That is addressed in the terms and conditions of the agreement paperwork.

Your ISV customer that will be providing this service needs to be the one who will sign the SPLA agreement. In that case they are renting the server from a third party (Windows Azure), which is allowed.

The cost of SALs is $3.45 per user per month = $41.40 per user per year, which might be subject to sales tax, depending on the user’s location. My client will need to be a Microsoft partner and have a Windows Azure subscription. The additional paperwork consists of a Microsoft Business and Services Agreement and a Microsoft Service Provider License Agreement (SPLA).
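For budgeting, the per-user figure scales linearly with the number of users. The following is a small sketch of that arithmetic using the $3.45 monthly price quoted above; the function name annual_sal_cost and the 25-user example are hypothetical, and sales tax is deliberately left out because it depends on location.

```python
from decimal import Decimal

# Monthly RDS SAL price per user, as quoted above (before any sales tax).
SAL_PRICE_PER_USER_MONTH = Decimal("3.45")

def annual_sal_cost(users: int, months: int = 12) -> Decimal:
    """Return the pre-tax SAL cost for the given number of users and months."""
    return SAL_PRICE_PER_USER_MONTH * users * months

print(annual_sal_cost(1))   # one user for a year
print(annual_sal_cost(25))  # a hypothetical 25-user deployment
```

Decimal is used instead of float so that the cents stay exact when the result is multiplied out across many users.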

My Enabling Remote Desktop Services in a Windows Azure Virtual Machine with Active Directory Installed post of 7/9/2012 described Microsoft’s previous refusal to license use of RDS in virtual environments:

The following Microsoft licensing restrictions, which were conveyed to me in an email message, preclude use of Remote Desktop Services and Remote Web Access with Windows Azure Virtual Machines:

Virtualized Desktop Services fall under the terms of the Windows Server Licensing Agreement. Unless you are an Independent Software Vendor (ISV) using SPLA[*] licensing to provide a SaaS based service, Windows Server does not include License Mobility to Public Clouds, and as a result Virtualized Desktop Services are not licensable on Windows Azure and other Public Clouds because of restrictions under the Windows Server License Agreement. Virtualized Desktop Services include Remote Desktop Services (RDS), Remote Terminal Services, and related third party offerings (example given - Citrix XenDesktop).

<Return to section navigation list>

Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

‡ Mike McKeown (@nwoekcm) described Building Private Clouds with Windows Azure Pack (WAP) in a 7/16/2013 post to the Aditi Technologies blog:

Azure public cloud’s model of elastic self-service deployment of VMs and applications is changing the way IT departments allocate servers. Instead of tying servers to a specific application, IT departments now look to providing a pool of shared and dynamically self-allocated resources.

There are compelling needs to run a private version of the Azure Cloud that provides most of the multi-tenant services and the benefits of the public Cloud, in an on-premise location. There are a lot of hosting partners that want to offer these Azure Cloud OS services to their customers. Microsoft wants to give a consistent platform across hosting providers, private DCs, and Azure Cloud. The Windows Azure Pack (WAP) decouples and brings a few of Azure OS features and a modified portal with a common code base into the private Cloud. It allows enterprises to assume the role of service providers. It removes limitations to allow service providers to try and garner enterprise workloads.

Using WAP, your IT department can install these new features. (WAP was previously called Windows Azure Services for Windows Server, released with System Center at the start of 2013.) The Azure Pack is built on top of Windows Server 2012 and System Center R2 with Service Provider Foundation. An IT Department that builds on Windows Server 2012 and System Center can move to WAP anytime. One of the goals of WAP is to drive a consistent IT operations and developer experience. These technologies will evolve over time. Some features for Azure will be released first in WAP and rolled into Azure Cloud, and vice versa. For datacenters running System Center and Windows Server 2012, WAP comes at no cost.

Here are the services/workloads in the first release of Windows Azure Pack:

1. Web sites

- IIS currently is a server-centric platform but needs to evolve to be Cloud-first. The IIS team built a new hosting PaaS with load balancing and on-demand scaling, optimized for DevOps. Its high-density design supports thousands of users at a lower cost than IIS, with new capabilities. This is a good motivation to move into the on-premise cloud instead of running original IIS.

- Multi-machine PaaS container with data and application tier and load balancing. The platform can talk to many source-code providers. As an IT Operations person you only have to deploy the Web PaaS and do not have to mess with configuration issues.

2. Service Bus

- Earlier the on-premise service bus had restrictions. Now you can get the same messaging architecture as Azure Cloud service bus with no limitations.

- Reliable messaging to build a cloud application that scales and communicates with other applications or across other boundaries. Messaging allows you to pass and receive messages across the platform.

- Supports publish and subscribe messaging patterns across a variety of access points on multiple platforms using standard protocols.

3. Virtual Machines (IaaS)

- Allows you to provision and manage VMs as a consumer and define your networking. Gallery of applications and fully self-serviced experience for provisioning VMs.

- Consistent Azure VM API on-premise and in cloud so you can access VMs the same way regardless of where the datacenter that you are using is.

- Adds a new Azure feature called Virtual Machine Roles (like AMIs in AWS which are Amazon EC2 Virtual Machine Templates). A VM Role provides a way to scale VMs elastically and define metadata for its container and its parameters. They are VM templates the IT Department can define to make available for self-provisioning and scale. Templates can be versioned and take initial container information such as instance count, VM size, and hard disk. Provide admin credentials and OS version, IP address type and allocation method for IP address. You can specify application specific settings as well.

- Virtual Networks allow you to define VMs. Site-to-site connectivity allows customers to connect their cloud networks to their private networks. Good for hosters as well as the enterprise.

4. Service Management Portal and API

- Federated identities, Active Directory integration, and standards-based.

- Takes the same portal as in Azure, decouples it, and runs it in the on-premise datacenter, where it talks to the consistent Service Management API.
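
The Virtual Machine Role templates described under item 3 can be pictured as versioned metadata plus scaling limits. The sketch below is only an illustration of that idea: every field name, the minimum/maximum bounds, and the `scaleInstances` helper are hypothetical and are not taken from the actual WAP VM Role schema or API.

```javascript
// Hypothetical shape only: field names are illustrative,
// not the real Windows Azure Pack VM Role definition.
const vmRoleTemplate = {
  name: "web-frontend",
  version: "1.0.0",                          // templates can be versioned
  instances: { initial: 2, min: 1, max: 5 }, // min/max are assumed limits
  vmSize: "Medium",
  osVersion: "Windows Server 2012",
  ipAddress: { type: "IPv4", allocation: "Dynamic" },
  appSettings: { siteName: "contoso" }       // application-specific settings
};

// Returns the instance count after a scale request,
// clamped to the limits declared in the template.
function scaleInstances(template, current, delta) {
  const min = template.instances.min;
  const max = template.instances.max;
  return Math.min(max, Math.max(min, current + delta));
}
```

A self-service portal could call something like `scaleInstances(vmRoleTemplate, 2, 1)` when a tenant asks for one more instance, so that tenants scale within limits the IT department has set.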

Service Consumers

Service consumers are those who consume applications (developers) and infrastructure (IT Ops) from service providers. They need self-service admin and want to acquire capacity upon demand within limits defined by the IT Department or the hosting provider (have an internal approval process to increase beyond limits). There is a need for predictable costs and infrastructure that is up-and-running quickly.

IT Departments are now moving internally using a charge-back model (internal dollars vs. credit card) where IT Operations are charging back to different departments, almost like internal hosters. Today, some internal IT requests lead internal folks to go out of band to get their job done via external hosting providers or acquire hardware or software without IT approval. WAP helps with simple and quick self-provisioning so that you no longer need to acquire hosting hardware that is outside the IT budget.

Additional Consumer Services

- Integration with AD for the enterprise: ADFS and co-admins, which are critical for the enterprise (not for service providers).

- Integration with SQL Server and MySQL: support for SQL Server AlwaysOn to make databases highly available across the cluster.

- Co-Admins in WAP now allow you to associate an IT group with a co-admin account. This does not exist in Azure Cloud yet.

- Console Connect – Today Remote desktop in Azure Cloud IaaS will only work on a public network (RDP for Windows VM or SSH for Linux). If you cannot get to it publicly you cannot remote into VM. Now, with WAP, you have a new feature called Console Connect through a secure channel that allows you to connect to a machine that is not running on a public network but in an enterprise on-premise network.

Service Providers

Service providers want to provide the most service at the lowest cost to service consumers. Providers want to use hardware efficiently by automating everything. Providers may also want to differentiate on SLAs and profiles for different environments, thereby offering different SLAs per workload, which is not available in the public cloud.

As an enterprise looks to move from capital to operational expenditures, service providers see a window of opportunity to acquire enterprise business in the leased model of a private cloud. WAP allows service providers to easily shift their offerings in this direction to attract this business from the enterprise.

Provider Portal

WAP supplies a provider portal for the cloud services that service providers can offer their tenants (for enterprises or hosters). It can provide different SLAs to customers through the portal and also tailor how those services are offered. The provider portal runs inside the enterprise firewall. It manages a different set of objects than the normal portal. You can manage a high-level PaaS Web hosting container that hosts multiple web sites. You can connect to VM clouds and service bus deployments and view their health. There is an automation tab that integrates with runbooks in System Center; you can edit and schedule runbook jobs and tie them to events coming from System Center.

Additional Provider Services

In the provider portal, there is a Plans service that allows providers to decide what types of plans a customer can access. Providers pick services to make available and then define a set of constraints and quotas for each subscription. Providers can pick the VM templates and Gallery items available. A plan also maps capabilities to backend infrastructure.

- Public plan allows subscribers to try out a plan

- Private plan allows you to manually permit a subscription.

Additionally, in the provider portal there is a User Accounts service allowing providers to manage users and add co-admins or suspend/delete a subscription.
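
One way to picture how a plan's quotas constrain a subscription is the sketch below. It is a simplified illustration under stated assumptions: the `plan` object, its field names, and the `canProvision` helper are hypothetical and do not reflect the real WAP Plans service or its API.

```javascript
// Hypothetical plan definition; names and numbers are made up for illustration.
const plan = {
  name: "Dev/Test",
  visibility: "private",             // a private plan needs manual approval
  quotas: { vmCount: 4, webSites: 10 }
};

// Returns true when the requested amount of a resource still fits
// within the plan's quota, given the subscription's current usage.
function canProvision(plan, usage, resource, amount) {
  const requested = amount === undefined ? 1 : amount;
  if (!Object.prototype.hasOwnProperty.call(plan.quotas, resource)) {
    return false;                    // the plan does not offer this service
  }
  const used = usage[resource] || 0;
  return used + requested <= plan.quotas[resource];
}
```

With this shape, a subscription already running three VMs could add one more, while a fifth VM or a service that the plan does not list would be refused.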

Resources

For additional information on the Windows Azure Pack go to the following site:

http://www.microsoft.com/en-us/server-cloud/windows-azure-pack.aspx


Visual Studio LightSwitch and Entity Framework 4.1+

‡ Jan Van der Haegen (@janvanderhaegen) showed how to Detect if the browser will run your LightSwitch HTML app. in a 7/19/2013 post:

I just got a great tip from Michael Washington (yes, that awesome dude from that awesome website) that I wanted to post while I’m working on a large blog post about threading in LightSwitch (whoops),

If an end-user tries to open a LightSwitch application in a browser that is not supported, they are very likely to get stuck on the loading screen, with a little loading animation leading them to believe that the application is almost ready to rock&roll. Unfortunately, a lot of browsers will silently swallow the JavaScript exceptions (or just show a minor warning icon with a message that makes no sense to end users anyways, at best), making the user wait in vain until the loading icon stops spinning.

One way to reduce the number of support tickets heading towards the internal IT department is to precede the loading of the LightSwitch JavaScript libraries with a check of whether jQuery Mobile is supported.

<script type="text/javascript">
    $(document).ready(function () {
        var supported = $.mobile.gradeA();
        if (!supported) {
            $(".ui-icon-loading").hide();
            $(".ui-bottom-load").append('<div class="msls-header">This browser is not supported. Please upgrade to a more recent, <a href="http://jquerymobile.com/gbs/">supported</a> version.</div>');
        } else {
            msls._run()
                .then(null, function failure(error) {
                    alert(error);
                });
        }
    });
</script>


Small caveat: not all browsers that support jQuery Mobile at A-grade will be able to run LightSwitch HTML apps. My WP7.8, for example, does not run LightSwitch HTML apps correctly, although it slips through the net of this check. This means you can either deal with that small percentage by buying enough hardware to run every possible browser and manually cross-checking every LightSwitch feature so that you can keep a completely bullet-proof browser/version matrix up to date, or keep this maintenance-free check and let the IT crowd handle the rest… (thanks Matthieu for getting me addicted :p)
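
If you do want to catch a few known offenders without maintaining a full browser matrix, one possible middle ground is a small deny list layered on top of the grade-A check. The sketch below is an assumption-laden illustration, not part of the original tip: the `isLikelySupported` helper, the `knownBadAgents` list, and the user-agent pattern for Windows Phone 7.x are all hypothetical and would need to be verified against your own devices.

```javascript
// Assumed deny list: patterns for browsers that pass the grade-A check
// but that your own testing has shown cannot run the app.
var knownBadAgents = [/Windows Phone OS 7\./];

// gradeA is the boolean returned by $.mobile.gradeA() in the page;
// userAgent is normally navigator.userAgent.
function isLikelySupported(gradeA, userAgent, denyList) {
  if (!gradeA) {
    return false; // fails the jQuery Mobile check outright
  }
  // Supported only if no deny-list pattern matches the user agent.
  return !denyList.some(function (pattern) {
    return pattern.test(userAgent);
  });
}
```

In the page itself, the `supported` variable from the snippet above could then be computed as `isLikelySupported($.mobile.gradeA(), navigator.userAgent, knownBadAgents)`. Keeping the helper free of DOM access makes it easy to test outside a browser.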

‡ Beth Massi (@bethmassi) posted Quick Tip: Getting LightSwitch Extensions to Install into Visual Studio 2013 Preview on 7/18/2013:

So you want to try out Visual Studio 2013 Preview and test out the awesome new LightSwitch features on your own real projects, but you can’t because your extension(s) won’t install into the VS2013 preview. Here’s a tip on how you can force the extension to install. But first, some background….

Visual Studio LightSwitch extensions are built as VSIX files, just like any other Visual Studio extension. Some extensions are built for specific versions of Visual Studio. The extension author decides what versions are supported when the VSIX is created. Because of this, when we release a preview of the next version of Visual Studio, extensions that you use are probably not available yet for the new version.

When you upgrade a LightSwitch project that uses extensions from one version of Visual Studio to the next, you really should have all the extensions available to the new version of Visual Studio. Fewer headaches that way :). So you need to install the extensions first, then you can open your LightSwitch Solution in the new version of Visual Studio and it will perform the upgrade smoothly. If you don’t have the extensions installed, you will get a warning and your project will not build correctly until you remove all the references. This can be a major pain if you are using a lot of extensions.

You can force an extension to install into any version of Visual Studio by changing the manifest. However, keep in mind that depending on the extension, it may not work correctly in the new version – ultimately it’s the extension author’s responsibility to upgrade their extension. However, many LightSwitch extensions should migrate just fine, particularly if they are already supporting multiple Visual Studio versions.

Here’s how to do it.

1. Rename the .VSIX extension to .ZIP

2. Extract the contents of the archive and open the .vsixmanifest contained inside with a text editor like notepad

3. Locate the <SupportedProducts> XML element and add version 12.0, i.e.:

<SupportedProducts>
  <VisualStudio Version="10.0">
    <Edition>VSLS</Edition>
  </VisualStudio>
  <VisualStudio Version="11.0">
    <Edition>VSLS</Edition>
  </VisualStudio>
  <VisualStudio Version="12.0">
    <Edition>VSLS</Edition>
  </VisualStudio>
</SupportedProducts>

4. Alternately you may see an <InstallationTarget> element instead. Add “12.0” to the version attribute, i.e.:

<Installation>
  <InstallationTarget Id="Microsoft.VisualStudio.Pro" Version="[11.0,12.0]" />
</Installation>

5. Save the .vsixmanifest, zip up the contents again, and rename the archive with the .vsix extension

6. Double-click the VSIX to install it into all the versions of Visual Studio you have on your system.
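
If you have many extensions to patch, the manifest edit in step 3 could be scripted. The following is a minimal sketch, not a tool from the original post: the `addSupportedVersion` helper is hypothetical, it works on the manifest text with simple string matching rather than an XML parser, it assumes the <SupportedProducts> layout shown in step 3, and it does not handle the <InstallationTarget> variant from step 4. Unzipping and re-zipping the VSIX are still done as described above.

```javascript
// Adds a <VisualStudio Version="..."> entry to the manifest text by copying
// the last existing entry and changing its version. Returns the text
// unchanged if the version is already listed or no entry is found.
function addSupportedVersion(manifestXml, version) {
  if (manifestXml.indexOf('<VisualStudio Version="' + version + '"') !== -1) {
    return manifestXml; // already supported
  }
  var entries = manifestXml.match(
    /<VisualStudio Version="[^"]*">[\s\S]*?<\/VisualStudio>/g
  );
  if (!entries) {
    return manifestXml; // not a SupportedProducts-style manifest
  }
  var last = entries[entries.length - 1];
  var added = last.replace(/Version="[^"]*"/, 'Version="' + version + '"');
  // A function replacement avoids special "$" handling in the new text.
  return manifestXml.replace(last, function () {
    return last + added;
  });
}
```

Calling `addSupportedVersion(text, "12.0")` on the contents of the .vsixmanifest yields the text to save back in step 5. Because the new entry is a copy of the last one, it inherits the same <Edition> values.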

Now you should be ready to upgrade your project by opening it in Visual Studio 2013 Preview. I smoke tested the Filter Control, Office Integration Pack and Bing Map Control (available in the Contoso Construction sample) and they seem to be working so far so good.

I hope this helps you start testing your LightSwitch projects in the Visual Studio 2013 preview. I can’t tell you how important it is for us to find bugs sooner than later (especially upgrade issues) so thank you for helping make LightSwitch the best it can be. Remember if you have any feedback, please visit the LightSwitch forum. If you know you’ve found a bug, enter it directly into our bug database here: https://connect.microsoft.com/visualstudio

‡ Julie Lerman (@julielerman) posted Work on the Entity Framework Team! on 7/17/2013:

Here’s the first part of the job description:

Software Development Engineer in Test II Job

Date: Jul 9, 2013

Job Category: Software Engineering: Test

Location: Redmond, WA, US

Job ID: 842421-117199

Division: Server & Tools Business

Our team, part of Windows Azure Group, is developing an open source ORM technology for .NET called Entity Framework and related tooling for Visual Studio. Our latest runtime and designer preview is recently released with Visual Studio 2013 Preview, and through NuGet. This release includes exciting new features such as great SQL Azure support, async APIs, code based conventions, and many more! On the horizon, we are considering lots of exciting areas ranging from in-memory DB support, big data, mobile local data support and others. [Emphasis added.]

We are looking for incredibly dedicated and passionate engineers who can help us build a great database access technology on .NET across clouds, servers and devices. Come join us at the forefront of database access technologies, cloud computing, services world and more!

Our testing strategy is centered on building the best customer experience. We accomplish this by involving our testers in every stage of the development cycle including heavy investment in the design, prototyping and implementation phases. Because you will know how everything in the product works, you will be actively seeking ways to test it and provide feedback to the team to make it better. We are also a team that believes in leveraging key individual’s strengths and providing many opportunities for career advancement.

While this post is for an SDET position, we are in fact looking for more all-around engineers who are creative around building and ensuring the right customer experience. Our SDETs are expected to write product code as well. This is a fast paced environment (think startup culture) where process, rules and overhead are limited, in order to meet our customers’ requirements.

Alessandro Del Sole (@progalex) wrote Customizing Your LightSwitch HTML Apps with Authentication, JavaScript Code, Custom Styles, and Queries for InformIT.com on 7/15/2013. From the summary and Introduction:

In part 2 of his series about the new HTML client in Visual Studio LightSwitch 2012, Alessandro Del Sole, author of Microsoft Visual Studio LightSwitch Unleashed, details the anatomy of a project, showing how to customize the application by using special styles, implementing authentication, showing computed properties, and more.

Introduction

Part 1 of this series explained what the HTML client in LightSwitch is and how you can use it to build rich mobile data-centric applications that can run on multiple devices and platforms.

You learned that you can be productive quickly, even without knowing anything about HTML and JavaScript, and that LightSwitch allows you to create mobile business applications with the same simplified approach that you already use for desktop applications. This article continues from that structure, showing you some important customizations you can apply to the HTML client of your application. Let's begin by exploring the anatomy of a LightSwitch HTML project.

No significant Entity Framework articles today


Cloud Security, Compliance and Governance

‡ Nuno Filipe Godinho (@NunoGodinho) posted an Introduction to Windows Azure Security on 7/15/2013:

Last Monday I presented a session about “Windows Azure Security & Compliance” at the Windows Azure UK User Group. During that session I spoke about the base security elements that Windows Azure provides from an infrastructure standpoint and how important it is to implement security in our applications as well.

In order to pass this information to a broader audience I decided to create a series of 3 posts about this topic. Those 3 posts will be:

- Introduction to Windows Azure Security

- Introduction to Windows Azure Compliance

- Lessons Learned Building Secure and Compliant solutions in Windows Azure

Introduction to Windows Azure Security

When we talk to someone about Cloud, generally the following Security concerns are raised:

- Where is my Data Located?

- Is my Cloud Provider secure?

- Who can see my Data?

- How can I make sure my data on the Cloud continues to follow “my company policies”?

- Can I have my Data back?

- Can I have compliant applications in the Cloud?

- Can I encrypt my data? Where do I store the keys?

Those are extremely important questions that need to be answered before moving forward. The best way to answer them is generally to work with the Cloud provider and also with a partner that can provide real-world insights about those topics for the application that is being built. For reference, please also check the Windows Azure Standard Response to Request for Information: Security and Privacy from the Cloud Security Alliance – http://bit.ly/WASecurityPrivacy.

Security is Multi-Dimensional

Also important is to understand that Security is Multi-Dimensional, since we shouldn’t only look at how secure the Cloud provider infrastructure is. For example, the Cloud infrastructure can be secure but if our solution isn’t it will allow unsecure access to the data, thus making the complete solution insecure.

In order to have a solution completely secure, we need to think about the following perspectives:

- Human: How do people treat sensitive data?

  - You can have a very secure infrastructure and encryption strategy, but if your users share sensitive data by exporting it to Excel and placing it in insecure locations, or use insecure passwords, the system is still at risk.

  - Windows Azure can’t help here

- Data: DB Hardening, Cryptography, Permissions

  - Hardening the DB, encrypting data, using least-privilege accounts and, for example, changing the default database ports will increase security.

  - Windows Azure can’t help here

- Application: Design and Implement Security Best Practices

  - The application design and implementation is very important to make sure the application is secure. Using, for example, “Partial trust” in .NET development will definitely make the security a lot better. I also recommend checking the Microsoft Security Development Lifecycle.

  - Windows Azure can’t help a lot here, but it lets you run Cloud Services in Partial Trust, which will improve security.

- Host: OS Hardening, Regular Patching

  - Making sure the OS that is being used is correctly configured and is patched regularly is extremely important. Whenever you create Windows environments, I recommend leveraging the Microsoft Best Practices Analyzer.

  - Handled by Windows Azure in Cloud Services (PaaS) but handled by the user in Virtual Machines (IaaS)

- Networking: Firewall, VLANS, Secure Channels, ...

  - From an infrastructure best-practices perspective, it is very important to make sure that Firewalls and VLANs are correctly configured, and that all communications are always correctly configured.

  - Handled by Windows Azure internally. All communications inside Windows Azure are secure, including communications from the Host to the Guest machine at the infrastructure level.

- Physical: Who can access my servers?

  - Who can handle our servers is always important. In Windows Azure, like in most Cloud providers, servers are very secure and access to them is highly restricted. More information can be found here.

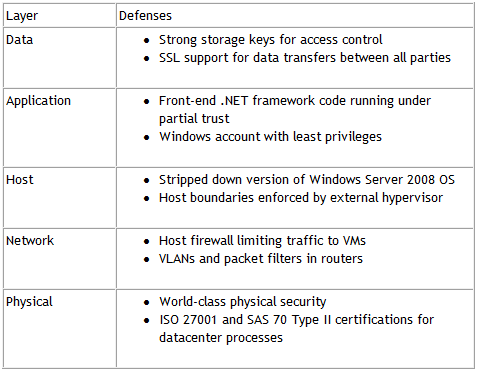

Windows Azure Security Layers

In order to improve security Windows Azure provides the following security defenses for each layer:

If we analyze the security layers in more detail we’ll see the following:

This means that Windows Azure provides several different layers which will improve the security of your application, and by using all the elements in the “onion” like graph, we’ll have a very secure system.

Defenses Inherited by Windows Azure Platform Applications

In addition, when thinking about security one very important analysis to do is how the application handles the STRIDE Model.

This is a quick overview of what is done/enabled by Windows Azure in each area of the STRIDE model.

Penetration Testing in Windows Azure

Microsoft conducts regular penetration testing to improve Windows Azure security controls and processes. Customers can also run penetration tests in Windows Azure, but they are required to obtain prior authorization from Microsoft by filling out a Penetration Testing Approval Form (http://bit.ly/WAPenTesting) and contacting Support.

Summary

Windows Azure is secure, and if we think about most data centers used by companies today, we’ll see that Windows Azure and even other Cloud providers are a lot more secure. Having said that, the infrastructure can be secure but our application is only as secure as the combination of Infrastructure and Application, and so only if the application is built in a very secure way we will be able to say “Our application is Secure”.

I recommend looking at the following resources to understand more about Windows Azure Security:

- Windows Azure Standard Response to Request for Information: Security and Privacy (Cloud Security Alliance) – http://bit.ly/WASecurityPrivacy

- Windows Azure Penetration Testing Approval Form – http://bit.ly/WAPenTesting

- Windows Azure Security – http://bit.ly/WASecurity

- Microsoft Security Development Lifecycle


Cloud Computing Events

Robin Shahan (@RobinDotNet) reported her presentations about Windows Azure at San Diego Code Camp – 27th and 28th of July 2013 in a 7/15/2013 post:

There is a code camp in San Diego on July 27th and 28th that has a lot of really interesting sessions available. If you live in the area, you should check it out – there’s something for everyone. It also gives you an opportunity to talk with other developers, see what they’re doing, and make some connections.

I’m going to be traveling from the San Francisco Bay Area to San Diego to speak, as are some of my friends — Mathias Brandewinder, Theo Jungeblut, and Steve Evans.

For those of you who don’t know me, I’m VP of Technology for GoldMail (soon to be renamed PointAcross), and a Microsoft Windows Azure MVP. I co-run the Windows Azure Meetups in San Francisco and the Bay.NET meetups in Berkeley. I’m speaking about Windows Azure. One session is about Windows Azure Web Sites and Web Roles; the other one is about my experience migrating my company’s infrastructure to Windows Azure, some things I learned, and some keen things we’re using Azure for. Here are my sessions:

Theo works for AppDynamics, a company whose software has some very impressive capabilities to monitor and troubleshoot problems in production applications. He is an entertaining speaker and has a lot of valuable expertise. Here are his sessions:

- Clean Code I – Design Patterns and Best Practices

- Clean Code II – Cut your Dependencies with Dependency Injection

- Clean Code III – Software Craftmanship

- Debugging, Troubleshooting, and Monitoring Distributed Web & Cloud Applications

Mathias is a Microsoft MVP in F# and a consultant. He is crazy smart, and runs the San Francisco Bay.NET Meetups. Here are his sessions:

Steve (also a Microsoft MVP) is giving an interactive talk on IIS for Developers – you get to vote on the content of the talk! As developers, we need to have a better understanding of some of the system components even though we may not support them directly.

Also of interest are David McCarter’s sessions on .NET coding standards and how to handle technical interviews. He’s a Microsoft MVP with a ton of experience, and his sessions are always really popular, so get there early! And yet another Microsoft MVP speaking who is always informative and helpful is Jeremy Clark, who has sessions on Generics, Clean Code, and Design patterns.

In addition to these sessions, there are dozens of other interesting topics, like Estimating Projects, Requirements Gathering, Building cross-platform apps, Ruby on Rails, and Mongo DB (the name of which always makes me think of mangos), and that’s just to name a few.

So register now for the San Diego Code Camp and come check it out. It’s a great opportunity to increase your knowledge of what’s available and some interesting ways to get things done. Hope to see you there!


Other Cloud Computing Platforms and Services

‡ Tom Rizzo (@TheRealTomRizzo) described an AWS Planning and Implementation Guide for Microsoft Exchange Server in a 7/18/2013 post:

Over the last few months we have released some powerful Windows enhancements to AWS including AWS Management Pack for Microsoft System Center and Guidance for Microsoft SQL Server AlwaysOn Availability Groups.

Building on the popularity of our SQL Server guidance, we are releasing the Microsoft Exchange Server 2010 in the AWS Cloud: Planning and Implementation guide. Customers continually ask us how they can move their email to the cloud, and the main product they ask about is Microsoft Exchange Server. In fact, a number of customers, including Choice Logistics and Banro, have already moved their Exchange deployments to AWS from on-premises locations.

The new planning and implementation guide discusses architectural considerations and configuration steps relevant when you launch the services, such as Amazon Elastic Compute Cloud (Amazon EC2) and Amazon Virtual Private Cloud (Amazon VPC), that you need to run a highly available and site-resilient Exchange architecture. It discusses the tools that Exchange administrators and deployment engineers are already familiar with, and walks through the different stages of the planning process and how to use the tools in the context of the AWS environment.

Included with the guide are sample AWS CloudFormation templates for a small business scenario. These sample templates are easy to customize and designed to help you get started quickly so you can deploy the necessary infrastructure with the right configuration.

Download the Implementation Guide and get started today!
