Windows Azure and Cloud Computing Posts for 1/21/2013+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

•• Updated 1/26/2013 with new articles marked ••.

• Updated 1/25/2013 with new articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Caching, Access Control, Active Directory, Identity and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V, System Center and Private/Hybrid Clouds

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, HDInsight and Media Services

•• Nathan Totten (@ntotten) and Nick Harris (@cloudnick) produced CloudCover Episode 99 - Windows Azure Media Services General Availability on 1/25/2013:

In this episode Nick Harris and Nate Totten are joined by Mingfei Yan, Program Manager II on Windows Azure Media Services. With Windows Azure Media Services reaching General Availability, Mingfei joined us to demonstrate how you can use it to build great, extremely scalable, end-to-end media solutions for streaming on-demand video to consumers on any device. This particular demo shows off the portal, encoding, and both a Windows Store app and an iOS device consuming encoded content.

For more information, visit the Windows Azure Media Services page to learn more about the capabilities, and visit the Windows Azure Media Service Dev Center for tutorials, how-to articles, blogs, and more information, and get started building applications with it today.

Craig Kitterman (@craigkitterman) announced SQL Server Backup and Restore to Cloud Simplified in a 1/24/2013 post:

Editor’s Note: Today’s post was written by Guy Bowerman and Karthika Raman from the Microsoft Data Platform team.

SQL Server 2012 SP1 Cumulative Update 2 includes new functionality that simplifies the backup and restore capability of an on-premises SQL Server database to Windows Azure. You can now directly create a backup to Windows Azure Storage using SQL Server Native Backup functionality. Read the information below to get a brief introduction to the new functionality and follow the links for more in-depth information.

To download the update, go to the SQL Release Services Blog.

Overview:

In addition to disk and tape, you can now use SQL Server native backup functionality to back up your SQL Server Database to the Windows Azure Blob storage service. In this release, backup to Windows Azure Blob storage is supported using T-SQL and SMO. SQL Server Databases on an on-premises instance of SQL Server or in a hosted environment, such as an instance of SQL Server running in Windows Azure VMs, can take advantage of this functionality.

Benefits:

- Flexible, reliable, and limitless off-site storage for improved disaster recovery: Storing your backups on Windows Azure Blob service can be a convenient, flexible and easy to access off-site option. Creating off-site storage for your SQL Server backups can be as easy as modifying your existing scripts/jobs. Off-site storage should typically be far enough from the production database location that a single disaster cannot impact both the off-site and production database locations. You can also restore the backup to a SQL Server Instance running in a Windows Azure Virtual Machine for disaster recovery of your on-premises database. By choosing to geo-replicate the Blob storage you have an extra layer of protection in the event of a disaster that could affect the whole region. In addition, backups are available from anywhere and at any time and can easily be accessed for restores.

- Backup Archive: The Windows Azure Blob Storage service offers a better alternative to the often used tape option to archive backups. Tape storage might require physical transportation to an off-site facility and measures to protect the media. Storing your backups in Windows Azure Blob Storage provides an instant, highly available and durable archiving option.

- No overhead of hardware management: There is no overhead of hardware management with Windows Azure storage service. Windows Azure services manage the hardware and provide geo-replication for redundancy and protection against hardware failures.

- Currently for instances of SQL Server running in a Windows Azure Virtual Machine, backing up to Windows Azure Blob storage services can be done by creating attached disks. However, there is a limit to the number of disks you can attach to a Windows Azure Virtual Machine. This limit is 16 disks for an extra-large instance and fewer for smaller instances. By enabling a direct backup to Windows Azure Blob Storage, you can bypass the 16 disk limit.

- In addition, the backup file which now is stored in the Windows Azure Blob storage service is directly available to either an on-premises SQL Server or another SQL Server running in a Windows Azure Virtual Machine, without the need for database attach/detach or downloading and attaching the VHD.

- Cost Benefits: Pay only for the service that is used. Can be cost-effective as an off-site and backup archive option.

The Windows Azure pricing calculator can help estimate your costs.

Storage: Charges are based on the space used and are calculated on a graduated scale and the level of redundancy. For more details, and up-to-date information, see the Data Management section of the Pricing Details article.

Data Transfers: Inbound data transfers to Windows Azure are free. Outbound transfers are charged for the bandwidth use and calculated based on a graduated region-specific scale. For more details, see the Data Transfers section of the Pricing Details article.

How it works:

Backup to Windows Azure Storage is engineered to behave much like a backup device (Disk/Tape). Using the Microsoft Virtual Backup Device Interface (VDI), Windows Azure Blob storage is coded like a “virtual backup device”, and the URL format used to access the Blob storage is treated as a device. The main reason for supporting Azure storage as a destination device is to provide a consistent and seamless backup and restore experience, similar to what we have today with disk and tape.

When the Backup or restore process is invoked, and the Windows Azure Blob storage is specified using the URL “device type”, the engine invokes a VDI client process that is part of this feature. The backup data is sent to the VDI client process, which sends the backup data to Windows Azure Blob storage.

As previously mentioned, the URL is much like a backup device used today, but it is not a physical device, so there are some limitations. For a full list of the supported options, see SQL Server Backup and Restore with Windows Azure Blob Storage Service.

How to use it

To write a backup to Windows Azure Blob storage you must first create a Windows Azure Storage account and then create a SQL Server Credential to store the storage account authentication information. You can then issue backup and restore commands by using Transact-SQL or SMO.

The following Transact-SQL examples illustrate creating a credential, doing a full database backup and restoring the database from the full database backup. For a complete walkthrough of creating a storage account and performing a simple restore, see Tutorial: Getting Started with SQL Server Backup and Restore to Windows Azure Blob Storage Service.

Create a Credential

The following example creates a credential that stores the Windows Azure Storage authentication information.

Backing up a complete database

The following example backs up the AdventureWorks2012 database to the Windows Azure Blob storage service.

Restoring a database

To restore a full database backup, use the following steps.
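As a rough sketch of the three statements described above (create a credential, back up to a URL, restore from a URL), the following JavaScript helper builds the T-SQL as strings so the moving parts are explicit. The credential name, storage account, container, file name, and the `STATS = 5` option are placeholders chosen for illustration, not values from the original post; the helper functions themselves are hypothetical.

```javascript
// Sketch: build the T-SQL statements for backup to Windows Azure Blob storage.
// All names used at the bottom (credential, account, container, key) are placeholders.

// Quote a T-SQL string literal by doubling embedded single quotes.
function sqlString(value) {
  return "'" + String(value).replace(/'/g, "''") + "'";
}

// Quote a T-SQL identifier with brackets, doubling any closing bracket.
function sqlName(name) {
  return '[' + String(name).replace(/\]/g, ']]') + ']';
}

// Backup blob URL: https://<account>.blob.core.windows.net/<container>/<file>
function backupUrl(account, container, fileName) {
  return 'https://' + account + '.blob.core.windows.net/' + container + '/' + fileName;
}

// The credential stores the storage account name and its access key.
function createCredentialSql(credentialName, storageAccount, accessKey) {
  return 'CREATE CREDENTIAL ' + sqlName(credentialName) +
    ' WITH IDENTITY = ' + sqlString(storageAccount) +
    ', SECRET = ' + sqlString(accessKey) + ';';
}

// The URL takes the place of a disk or tape device in BACKUP.
function backupSql(database, url, credentialName) {
  return 'BACKUP DATABASE ' + sqlName(database) +
    ' TO URL = ' + sqlString(url) +
    ' WITH CREDENTIAL = ' + sqlString(credentialName) + ', STATS = 5;';
}

// RESTORE reads from the same URL using the same credential.
function restoreSql(database, url, credentialName) {
  return 'RESTORE DATABASE ' + sqlName(database) +
    ' FROM URL = ' + sqlString(url) +
    ' WITH CREDENTIAL = ' + sqlString(credentialName) + ', STATS = 5;';
}

const url = backupUrl('mystorageaccount', 'mycontainer', 'AdventureWorks2012.bak');
console.log(createCredentialSql('mycredential', 'mystorageaccount', '<storage account access key>'));
console.log(backupSql('AdventureWorks2012', url, 'mycredential'));
console.log(restoreSql('AdventureWorks2012', url, 'mycredential'));
```

The generated statements can be pasted into a query window after substituting real values; see the linked tutorial for the authoritative listings and the full set of supported options.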

Resources:

Please send your feedback on the feature and/or documentation to karaman@microsoft.com or guybo@microsoft.com.

Scott Guthrie (@scottgu) posted Announcing Release of Windows Azure Media Services on 1/22/2013:

I’m excited to announce the general availability (GA) release of Windows Azure Media Services. This release is now live in production, supported by a new media services dev center, backed by an enterprise SLA, and is ready to be used for all media projects.

With today’s release, you now have everything you need to quickly build great, extremely scalable, end-to-end media solutions for streaming on-demand video to consumers on any device. For example, you can easily build a media service for delivering training videos to employees in your company, stream video content for your web-site, or build a premium video-on-demand service like Hulu or Netflix. Last year several broadcasters used Windows Azure Media Services to stream the London 2012 Olympics.

Media Platform as a Service

With Windows Azure Media Services, you can stream video to HTML5, Flash, Silverlight, Windows 8, iPad, iPhone, Android, Xbox, Windows Phone and other clients using a wide variety of streaming formats:

Building a media solution that encodes and streams video to various devices and clients is a complex task. It requires hardware and software that has to be connected, configured, and maintained. Windows Azure Media Services makes this problem much easier by eliminating the need to provision and manage your own custom infrastructure. Windows Azure Media Services accomplishes this by providing you with a Media Platform as a Service (PaaS) that enables you to easily scale your business as it grows, and pay only for what you use.

As a developer, you can control Windows Azure Media Services by using REST APIs or .NET and Java SDKs to build a media workflow that can automatically upload, encode and deliver video. We’ve also developed a broad set of client SDKs and player frameworks which let you build completely custom video clients that integrate in your applications. This allows you to configure and control every aspect of the video playback experience, including inserting pre-roll, mid-roll, post-roll, and overlay advertisement into your content.

Upload, Encode, Deliver, Consume

A typical video workflow involves uploading raw video to storage, encoding & protecting the content, and then streaming that content to users who can consume it on any number of devices. For each of these major steps, we’ve built a number of features that you’ll find useful:

Upload

Windows Azure Media Services supports multiple different options to upload assets into Media Services:

- Using REST APIs, or .NET or Java SDKs you can upload files to the server over HTTP/S with AES 256 encryption. This works well for smaller sets of files and is great for uploading content on a day to day basis.

- Bulk upload an entire media library with thousands of large files. Uploading large asset files can be a bottleneck for asset creation and by using a bulk ingesting approach, you can save a lot of time. For bulk upload, you can use the Bulk Ingest .NET Library or a partner upload solution such as Aspera which uses UDP for transporting files at very rapid speeds.

- If you already have content in Windows Azure blob storage, we also support blob to blob transfers and storage account to storage account transfers.

- We also enable you to upload content through the Windows Azure Portal – which is useful for small jobs or when first getting started.

Encode and then Deliver

Windows Azure Media Services provides built-in support for encoding media into a variety of different file-formats. With Windows Azure Media Services, you don’t need to buy or configure custom media encoding software or infrastructure – instead you can simply send REST calls (or use the .NET or Java SDK) to automate kicking off encoding jobs that Windows Azure Media Services will process and scale for you.

Last month, I announced we added reserved capacity encoding support to Media Service which gives you the ability to scale up the number of encoding tasks you can process in parallel. Using the SCALE page within the Windows Azure Portal, you can add reserved encoding units that let you encode multiple tasks concurrently (giving you faster encode jobs and predictable performance).

Today, we have also added new reserved capacity support for on-demand streaming (giving you more origin server capacity) - which can also now be provisioned on the same SCALE page in the management portal:

In addition to giving your video service more origin streaming capacity to handle a greater number of concurrent users consuming different video content, our on-demand streaming support also now gives you a cool new feature we call dynamic packaging.

Traditionally, once content has been encoded, it needs to be packaged and stored for multiple targeted clients (iOS, XBox, PC, etc.). This traditional packaging process converts multi-bitrate MP4 files into multi-bitrate HLS file-sets or multi-bitrate Smooth Streaming files. This triples the storage requirements and adds significant processing cost and delay.

With dynamic packaging, we now allow users to store a single file format and stream to many adaptive protocol formats automatically. The packaging and conversion happens in real-time on the origin server which results in significant storage cost and time savings:

Today the source formats can be multi-bitrate MP4 or Smooth based, and these can be converted dynamically to either HLS or Smooth. The pluggable nature of this architecture will allow us, over the next few months, to also add DASH Live Profile streaming of fragmented MP4 segments using time-based indexing as well. The support of HLS and the addition of DASH enables an ecosystem-friendly model based on common and standards-based streaming protocols, and ensures that you can target any type of device.
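The storage claim above (pre-packaging "triples" storage, dynamic packaging stores one format) can be checked with simple arithmetic. This is a back-of-the-envelope sketch that assumes each pre-packaged copy is roughly the same size as the source; the function name and the 500 GB library size are made up for illustration.

```javascript
// Sketch: compare storage for pre-packaged vs dynamically packaged content.
// Assumption: each packaged copy is about the same size as the source MP4 set.

function storedGigabytes(sourceGb, prePackagedFormats) {
  // One copy of the source plus one extra copy per pre-packaged format.
  return sourceGb * (1 + prePackagedFormats);
}

const libraryGb = 500; // hypothetical library size

// Traditional: keep the MP4 source and also store HLS and Smooth Streaming copies.
const traditional = storedGigabytes(libraryGb, 2);

// Dynamic packaging: store only the source; other formats are produced on request.
const dynamic = storedGigabytes(libraryGb, 0);

console.log('traditional: ' + traditional + ' GB');
console.log('dynamic:     ' + dynamic + ' GB');
console.log('ratio:       ' + (traditional / dynamic));
```

Under that assumption the ratio is 3, which matches the "triples" figure quoted above; real savings depend on how the packaged sizes compare with the source.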

Consume

Windows Azure Media Services provides a large set of client player SDKs for all major devices and platforms, and they let you not only reach any device with a format that’s best suited for that device - but also build a custom player experience that uniquely integrates into your product or service.

Your users can consume media assets by building rich media applications rapidly on many platforms, such as Windows, iOS, XBox, etc. At this time, we ship SDKs and player frameworks for:

- Windows 8

- iOS

- Xbox

- Flash Player (built using Adobe OSMF)

- Silverlight

- Windows Phone

- Android [Emphasis added.]

- Embedded devices (Connected TV, IPTV)

To get started with developing players, visit the Developer tools for Windows Azure Media Services. The SDKs and player frameworks contain player samples that you can use as-is or customize with very little effort.

Start Today

I’m really excited about today’s general availability (GA) release of Windows Azure Media Services. This release is now live in production, backed by an enterprise SLA, and is ready to be used for all projects. It makes building great media solutions really easy and very cost effective.

Visit the Windows Azure Media Services page to learn more about the capabilities, and visit the Windows Azure Media Service Dev Center for tutorials, how-to articles, blogs, and more information and get started building applications with it today!

Finally! A Windows Azure Service with an SDK that supports Android.

Haddy El-Haggan (@Hhaggan) offered a Blob Storage using .NET (all the predefined functions) white paper on 1/19/2013 (missed when published):

Following a previous blog post on how to develop on Windows Azure, Microsoft Platform of Cloud Computing, I have [written] a document that I hope might help you with your development using all the predefined functions of the blob storage. This type of storage is most likely used for storing unstructured data on the cloud. This is mainly all about the Microsoft.WindowsAzure.StorageClient.


Windows Azure SQL Database, Federations and Reporting, Mobile Services

•• Josh Twist (@joshtwist) described Dispatching to different query functions in Mobile Services in a 1/25/2013 post:

It’s common to want to trigger different behaviors inside your read script based on a parameter. For example, imagine we have a table called ‘foo’ and we want to have a default path and two special operations called ‘op1’ and ‘op2’ that do something slightly different (maybe one loads a summary of the objects to reduce the amount of traffic on the wire whilst the other expands a relationship to load child records).
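A minimal sketch of a read script that dispatches this way is shown here. It is a reconstruction based on the behavior described in this post, not necessarily the author's exact script: `operation1` and `operation2` are illustrative handler names, and the script relies only on `request.parameters`, `request.respond`, and `request.execute` from the Mobile Services server script API.

```javascript
// Sketch of a Mobile Services read script that dispatches on ?operation=...
// operation1 and operation2 are illustrative handler names.

function operation1(query, user, request) {
  request.respond(200, 'this result is from operation1');
}

function operation2(query, user, request) {
  request.respond(200, 'this result is from operation2');
}

// Map of query-string values to handler functions.
var dispatch = {
  op1: operation1,
  op2: operation2
};

function read(query, user, request) {
  var operation = request.parameters && request.parameters.operation;

  // hasOwnProperty guards against inherited keys such as "toString".
  if (operation && Object.prototype.hasOwnProperty.call(dispatch, operation)) {
    dispatch[operation](query, user, request);
    return;
  }

  // Default path: run the query as normal and return the JSON array.
  request.execute();
}
```

Adding a new operation only requires a new handler and one more entry in the `dispatch` map; unknown values fall through to the default path rather than raising an error.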

So now, if I hit the HTTP endpoint for my table

http://todolist.azure-mobile.net/tables/foo

We’ll load the records as normal, returning a JSON array. However, if we add a parameter

http://todolist.azure-mobile.net/tables/foo?operation=op1

Then we’ll get the following response:

"this result is from operation1"

And if we hit ?operation=op2 then we’ll get:

"this result is from operation2"

And, with a dispatching script like this, if we hit some undeclared operation (?operation=nonsense) then we’ll go back to the default path (you may decide to throw an error).

Glenn Gailey (@ggailey777) described New Samples and Videos Featured for Mobile Services in a 1/24/2013 post:

I just wanted to take a few minutes to share some exciting new videos and samples-related activity for Mobile Services—particularly for Windows Store apps. (You can find even more details at http://www.nickharris.net/2013/01/new-windows-azure-mobile-services-getting-started-content/).

New WindowsAzure.com Resources

Mobile Services guru Nick Harris has been busy adding value to Mobile Services content and samples, including creating videos for many of the Mobile Services tutorials. Links to these videos from the Channel 9 series are embedded in the Tutorials and Resources page.

We also have a new Code Samples page in the Mobile Services dev center, featuring (at this point) Windows Store samples.

New Scenario-Based Samples

Nick has also written 5 kickin’ new samples that address cool app-driven scenarios for Mobile Services and Windows Store apps.

I should point out that these samples are documented at least as well as our Mobile Services tutorials on WindowsAzure.com.

5 stars all the way….

Geolocation sample end to end using Windows Azure Mobile Services (New)

This sample provides an end to end location scenario with a Windows Store app using Bing Maps and a Windows Azure Mobile Services backend. It shows how to add places to the Map, store place coordinates in a Mobile Services table, and how to query for places near your location.

Enqueue and Dequeue messages with Windows Azure Mobile Services and Service Bus (New)

My Store - This sample demonstrates how you can enqueue and dequeue messages from your Windows Store apps into a Windows Azure Service Bus Queue via Windows Azure Mobile Services. This code sample builds out an ordering scenario with both a Sales app and a Storeroom app.

Capture, Store and Email app Feedback using Windows Azure Mobile Services (New)

This sample shows how you can implement a Feedback charm option in your Windows Store application and submit the feedback to be both stored in Windows Azure Mobile Services and emailed directly to you.

Upload File to Windows Azure Blob Storage using Windows Azure Mobile Services (New)

This demonstrates how to store your files such as images, videos, docs or any binary data off device in the cloud using Windows Azure Blob Storage. In this example we focus on capturing and uploading images; with the same approach you can upload any binary data to Blob Storage.

Create a Game Leaderboard using Windows Azure Mobile Services (New)

The My Trivia sample demonstrates how you can easily add, update and view a leaderboard from your Windows Store applications using Windows Azure Mobile Services.

Keep it up Nick!

The Windows Azure Mobile Services Team described how to Build Real-time Apps with Mobile Services and Pusher [for iOS] in a 1/23/2013 tutorial:

This topic shows you how you can add real-time functionality to your Windows Azure Mobile Services-based app. When completed, your TodoList data is synchronized, in real-time, across all running instances of your app.

The Push Notifications to Users tutorial shows you how to use push notifications to inform users of new items in the Todo list. Push notifications are a great way to show occasional changes. However, a service like Pusher is much better at delivering frequent and rapid changes to users. In this tutorial, we use Pusher with Mobile Services to keep a Todo list in sync when changes are made in any running instance of the app.

Pusher is a cloud-based service that, like Mobile Services, makes building real-time apps incredibly easy. You can use Pusher to quickly build live polls, chat rooms, multi-player games, collaborative apps, to broadcast live data and content, and that’s just the start! For more information, see http://pusher.com.

This tutorial walks you through these basic steps to add realtime collaboration to the Todo list application:

- Create a Pusher account

- Update your app

- Install server scripts

- Test your app

This tutorial is based on the Mobile Services quickstart. Before you start this tutorial, you must first complete Get started with Mobile Services.

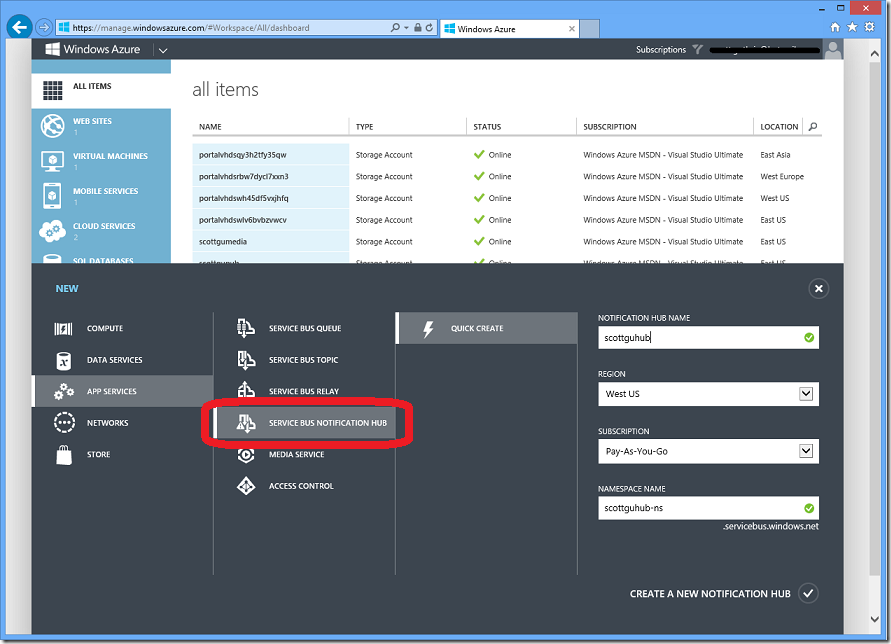

Create a new Pusher account

Your first step is to create a new account to use for the tutorial. You can use the FREE Sandbox plan; it's perfect for this tutorial.

To sign up for a Pusher account

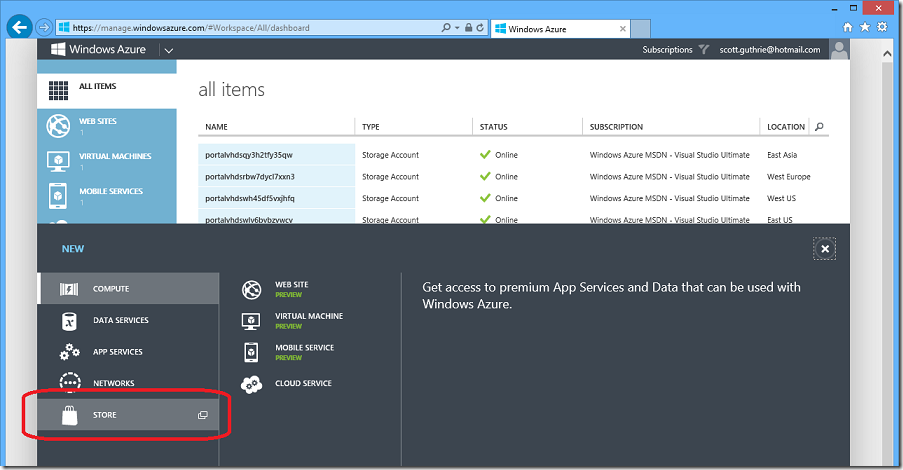

Log in to the Windows Azure Management Portal.

In the lower pane of the management portal, click New.

Click Store.

In the Choose an Add-on dialog, select Pusher and click the right arrow.

In the Personalize Add-on dialog select the Pusher plan you want to sign up for.

Enter a name to identify your Pusher service in your Windows Azure settings, or use the default value of Pusher. Names must be between 1 and 100 characters in length and contain only alphanumeric characters, dashes, dots, and underscores. The name must be unique in your list of subscribed Windows Azure Store Items.

Choose a value for the region; for example, West US.

Click the right arrow.

On the Review Purchase tab, review the plan and pricing information, and review the legal terms. If you agree to the terms, click the check mark. After you click the check mark, your Pusher account will begin the provisioning process.

After confirming your purchase you are redirected to the add-ons dashboard and you will see the message Purchasing Pusher.

Your Pusher account is provisioned immediately and you will see the message Successfully purchased Add-On Pusher. Your account has been created and you are now ready to use the Pusher service.

To modify your subscription plan or see the Pusher contact settings, click the name of your Pusher service to open the Pusher add-ons dashboard.
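The naming rule stated in the sign-up steps (1 to 100 characters; alphanumeric characters, dashes, dots, and underscores only) can be checked before you type the name into the portal. This is a small sketch under the assumption that "alphanumeric" means ASCII letters and digits; the function name is hypothetical, and the uniqueness requirement can only be verified by the portal itself.

```javascript
// Sketch: validate an add-on name against the rules stated in the sign-up steps.
// Assumption: "alphanumeric" is read as ASCII letters and digits.
var ADDON_NAME_PATTERN = /^[A-Za-z0-9._-]{1,100}$/;

function isValidAddonName(name) {
  return typeof name === 'string' && ADDON_NAME_PATTERN.test(name);
}

console.log(isValidAddonName('Pusher'));        // true
console.log(isValidAddonName('todo-sync_v1.0')); // true
console.log(isValidAddonName('my pusher'));     // false (space not allowed)
console.log(isValidAddonName(''));              // false (too short)
```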

When using Pusher you will need to supply your Pusher app connection settings.

To find your Pusher connection settings

Click Connection Info.

In the Connection info dialog you will see your app ID, key and secret. You will use these values later in the tutorial so copy them for later use.

For more information on getting started with Pusher, see Understanding Pusher.

Update your app

Now that you have your Pusher account set up, the next step is to modify the iOS app code for the new functionality.

Install the libPusher library

The libPusher library lets you access Pusher from iOS.

Download the libPusher library from here.

Create a group called libPusher in your project.

In Finder, unzip the downloaded zip file, select the libPusher-combined.a and /headers folders, and drag these items into the libPusher group in your project.

Check Copy items into destination group’s folder, then click Finish.

This copies the libPusher files into your project.

On the project root in the project explorer, click Build Phases, then click Add Build Phase and Add Copy Files.

Drag the libPusher-combined.a file from the project explorer into the new build phase.

Change the Destination to Frameworks and click Copy only when installing.

Within the Link Binary With Libraries area, add the following libraries:

- libicucore.dylib

- CFNetwork.framework

- Security.framework

- SystemConfiguration.framework

Finally within Build Settings, locate the target build setting Other Linker Flags and add the -all_load flag.

This shows the -all_load flag set for the Debug build target.

The library is now installed and ready for use.

Add code to the application

In Xcode, open the TodoService.h file and add the following method declarations:

    // Allows retrieval of items by id
    - (NSUInteger) getItemIndex:(NSDictionary *)item;

    // To be called when items are added by other users
    - (NSUInteger) itemAdded:(NSDictionary *)item;

    // To be called when items are completed by other users
    - (NSUInteger) itemCompleted:(NSDictionary *)item;

Replace the existing declarations of addItem and completeItem with the following:

    - (void) addItem:(NSDictionary *) item;
    - (void) completeItem: (NSDictionary *) item;

In TodoService.m, add the following code to implement the new methods:

    // Allows retrieval of items by id
    - (NSUInteger) getItemIndex:(NSDictionary *)item
    {
        NSInteger itemId = [[item objectForKey: @"id"] integerValue];

        return [items indexOfObjectPassingTest:^BOOL(id currItem, NSUInteger idx, BOOL *stop)
                {
                    return ([[currItem objectForKey: @"id"] integerValue] == itemId);
                }];
    }

    // To be called when items are added by other users
    -(NSUInteger) itemAdded:(NSDictionary *)item
    {
        NSUInteger index = [self getItemIndex:item];

        // Only complete action if item not already in list
        if(index == NSNotFound)
        {
            NSUInteger newIndex = [items count];
            [(NSMutableArray *)items insertObject:item atIndex:newIndex];
            return newIndex;
        }
        else
            return -1;
    }

    // To be called when items are completed by other users
    - (NSUInteger) itemCompleted:(NSDictionary *)item
    {
        NSUInteger index = [self getItemIndex:item];

        // Only complete action if item exists in items list
        if(index != NSNotFound)
        {
            NSMutableArray *mutableItems = (NSMutableArray *) items;
            [mutableItems removeObjectAtIndex:index];
        }

        return index;
    }

The TodoService now allows you to find items by id and add and complete items locally without sending explicit requests to the remote service.
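The id-based bookkeeping matters because Pusher events may describe items the local list already holds, or items it has already removed. The following JavaScript sketch mirrors the three Objective-C methods to show that behavior in isolation; `TodoStore` is a hypothetical stand-in for TodoService, and `-1` is used as the "nothing changed" result in both directions for simplicity.

```javascript
// Sketch: the id-based bookkeeping of TodoService, mirrored in JavaScript.
// TodoStore is a hypothetical stand-in; it keeps only the local list.
class TodoStore {
  constructor() {
    this.items = [];
  }

  // Index of the item with the same id, or -1 if it is not in the list.
  getItemIndex(item) {
    return this.items.findIndex((current) => current.id === item.id);
  }

  // Called for a 'todo-added' event. Returns the new index,
  // or -1 when the item is already present (duplicate event).
  itemAdded(item) {
    if (this.getItemIndex(item) !== -1) {
      return -1;
    }
    this.items.push(item);
    return this.items.length - 1;
  }

  // Called for a 'todo-completed' event. Removes the item if present
  // and returns its former index, or -1 when it was not in the list.
  itemCompleted(item) {
    const index = this.getItemIndex(item);
    if (index !== -1) {
      this.items.splice(index, 1);
    }
    return index;
  }
}

const store = new TodoStore();
console.log(store.itemAdded({ id: 1, text: 'Buy milk' }));     // 0
console.log(store.itemAdded({ id: 1, text: 'Buy milk' }));     // -1 (duplicate ignored)
console.log(store.itemCompleted({ id: 1 }));                   // 0
console.log(store.itemCompleted({ id: 1 }));                   // -1 (already removed)
```

Because both operations are safe to repeat, the UI only needs to insert or delete a row when the returned index is not the "nothing changed" value.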

Replace the existing addItem and completeItem methods with the following code:

    -(void) addItem:(NSDictionary *)item
    {
        // Insert the item into the TodoItem table and add to the items array on completion
        [self.table insert:item completion:^(NSDictionary *result, NSError *error) {
            [self logErrorIfNotNil:error];
        }];
    }

    -(void) completeItem:(NSDictionary *)item
    {
        // Set the item to be complete (we need a mutable copy)
        NSMutableDictionary *mutable = [item mutableCopy];
        [mutable setObject:@(YES) forKey:@"complete"];

        // Update the item in the TodoItem table and remove from the items array on completion
        [self.table update:mutable completion:^(NSDictionary *item, NSError *error) {
            [self logErrorIfNotNil:error];
        }];
    }

Note that items are now added and completed, along with updates to the UI, when events are received from Pusher instead of when the data table is updated.

In the TodoListController.h file, add the following import statements:

    #import "PTPusherDelegate.h"
    #import "PTPusher.h"
    #import "PTPusherEvent.h"
    #import "PTPusherChannel.h"

Modify the interface declaration to add PTPusherDelegate to look like the following:

    @interface TodoListController : UITableViewController<UITextFieldDelegate, PTPusherDelegate>

Add the following new property:

    @property (nonatomic, strong) PTPusher *pusher;

Add the following code that declares a new method:

    // Sets up the Pusher client
    - (void) setupPusher;

In TodoListController.m, add the following line under the other @synthesize lines to implement the new property:

    @synthesize pusher = _pusher;

Now add the following code to implement the new method:

    // Sets up the Pusher client
    - (void) setupPusher {

        // Create a Pusher client, using your Pusher app key as the credential
        // TODO: Move Pusher app key to configuration file
        self.pusher = [PTPusher pusherWithKey:@"**your_app_key**" delegate:self encrypted:NO];
        self.pusher.reconnectAutomatically = YES;

        // Subscribe to the 'todo-updates' channel
        PTPusherChannel *todoChannel = [self.pusher subscribeToChannelNamed:@"todo-updates"];

        // Bind to the 'todo-added' event
        [todoChannel bindToEventNamed:@"todo-added" handleWithBlock:^(PTPusherEvent *channelEvent) {

            // Add item to the todo list
            NSUInteger index = [self.todoService itemAdded:channelEvent.data];

            // If the item was not already in the list, add the item to the UI
            if(index != -1)
            {
                NSIndexPath *indexPath = [NSIndexPath indexPathForRow:index inSection:0];
                [self.tableView insertRowsAtIndexPaths:@[ indexPath ]
                                      withRowAnimation:UITableViewRowAnimationTop];
            }
        }];

        // Bind to the 'todo-completed' event
        [todoChannel bindToEventNamed:@"todo-completed" handleWithBlock:^(PTPusherEvent *channelEvent) {

            // Update the item to be completed
            NSUInteger index = [self.todoService itemCompleted:channelEvent.data];

            // As long as the item did exist in the list, update the UI
            if(index != NSNotFound)
            {
                NSIndexPath *indexPath = [NSIndexPath indexPathForRow:index inSection:0];
                [self.tableView deleteRowsAtIndexPaths:@[ indexPath ]
                                      withRowAnimation:UITableViewRowAnimationTop];
            }
        }];
    }

Replace the **your_app_key** placeholder with the app_key value you copied from the Connection Info dialog earlier.

Replace the onAdd method with the following code:

    - (IBAction)onAdd:(id)sender
    {
        if (itemText.text.length == 0) {
            return;
        }

        NSDictionary *item = @{ @"text" : itemText.text, @"complete" : @(NO) };
        [self.todoService addItem:item];

        itemText.text = @"";
    }

In the TodoListController.m file, locate the (void)viewDidLoad method and add a call to the setupPusher method so the first few lines are:

    - (void)viewDidLoad
    {
        [super viewDidLoad];
        [self setupPusher];

At the end of the tableView:commitEditingStyle:forRowAtIndexPath method, replace the call to completeItem with the following code:

    // Ask the todoService to set the item's complete value to YES
    [self.todoService completeItem:item];

The app is now able to receive events from Pusher, and to update the local Todo list accordingly.

Install server scripts

All that remains is setting up your server scripts. We'll add scripts that run when an item is inserted into or updated in the TodoItem table.

Log on to the Windows Azure Management Portal, click Mobile Services, and then click your mobile service.

In the Management Portal, click the Data tab and then click the TodoItem table.

In TodoItem, click the Script tab and select Insert.

This displays the function that is invoked when an insert occurs in the TodoItem table.

Replace the insert function with the following code:

    var Pusher = require('pusher');

    function insert(item, user, request) {

        request.execute({
            success: function() {
                // After the record has been inserted, trigger immediately to the client
                request.respond();

                // Publish event for all other active clients
                publishItemCreatedEvent(item);
            }
        });

        function publishItemCreatedEvent(item) {

            // Ideally these settings would be taken from config
            var pusher = new Pusher({
                appId: '**your_app_id**',
                key: '**your_app_key**',
                secret: '**your_app_secret**'
            });

            // Publish event on Pusher channel
            pusher.trigger( 'todo-updates', 'todo-added', item );
        }
    }

Replace the placeholders in the above script with the values you copied from the Connection Info dialog earlier:

- **your_app_id**: the app_id value

- **your_app_key**: the app_key value

- **your_app_secret**: the app_key_secret value

Click the Save button. You have now configured a script to publish an event to Pusher every time a new item is inserted into the TodoItem table.

Select Update from the Operation dropdown.

Replace the update function with the following code:

    var Pusher = require('pusher');

    function update(item, user, request) {
        request.execute({
            success: function() {
                // After the record has been updated, trigger immediately to the client
                request.respond();
                // Publish event for all other active clients
                publishItemUpdatedEvent(item);
            }
        });

        function publishItemUpdatedEvent(item) {
            // Ideally these settings would be taken from config
            var pusher = new Pusher({
                appId: '**your_app_id**',
                key: '**your_app_key**',
                secret: '**your_app_secret**'
            });
            // Publish event on Pusher channel
            pusher.trigger('todo-updates', 'todo-completed', item);
        }
    }

Repeat step 5 for this script to replace the placeholders.

Click the Save button. You have now configured a script to publish an event to Pusher every time an item is updated.
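The ordering used by both scripts (respond to the caller first, then publish to everyone else) can be tried locally without a Pusher account. The following is only a minimal sketch, not the Mobile Services runtime: `makeFakePusher` and `makeFakeRequest` are hypothetical stand-ins for the `pusher` module and for the `request` object that Mobile Services passes to a script, and the Pusher client is passed in as an extra argument purely so that the fake can be substituted.

```javascript
// Hypothetical stand-in for the object created by `new Pusher({...})`.
// It only records what would have been published.
function makeFakePusher(log) {
  return {
    trigger: function (channel, event, data) {
      log.push('publish ' + channel + '/' + event + ' ' + JSON.stringify(data));
    }
  };
}

// Hypothetical stand-in for the Mobile Services `request` object.
function makeFakeRequest(log) {
  return {
    execute: function (options) {
      log.push('execute');
      options.success();
    },
    respond: function () {
      log.push('respond');
    }
  };
}

// Same shape as the insert script above, with the Pusher client injected.
function insert(item, user, request, pusher) {
  request.execute({
    success: function () {
      // Answer the calling client first
      request.respond();
      // Then notify all other active clients
      pusher.trigger('todo-updates', 'todo-added', item);
    }
  });
}

var log = [];
insert({ text: 'Buy milk', complete: false }, null, makeFakeRequest(log), makeFakePusher(log));
console.log(log.join('\n'));
```

The recorded log lists the database operation, then the response to the caller, then the publish call, which is the order the comments in the scripts describe.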

Test your app

To test the app you'll need to run two instances. You can run one instance on an iOS device and another in the iOS simulator.

Connect your iOS device, press the Run button (or the Command+R key) to start the app on the device, then stop debugging.

You now have your app installed on your device.

Run the app on the iOS simulator, and at the same time start the app on your iOS device.

Now you have two instances of the app running.

Add a new Todo item in one of the app instances.

Verify that the added item appears in the other instance.

Check a Todo item to mark it complete in one app instance.

Verify that the item disappears from the other instance.

Congratulations, you have successfully configured your mobile service app to synchronise across all clients in realtime.
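Why an added item shows up in the other instance, and a completed one disappears from it, can be seen in a small model of the client-side logic. This is a sketch in JavaScript rather than the app's Objective-C; `applyEvent` and the `id` field are hypothetical names used for illustration only. The event names match the ones the server scripts publish on the `todo-updates` channel.

```javascript
// Returns the new local list after one Pusher event has been applied.
function applyEvent(items, eventName, item) {
  if (eventName === 'todo-added') {
    // Ignore the event if this instance already shows the item
    var alreadyShown = items.some(function (existing) {
      return existing.id === item.id;
    });
    return alreadyShown ? items : items.concat([item]);
  }
  if (eventName === 'todo-completed') {
    // Completed items are removed from the visible list
    return items.filter(function (existing) {
      return existing.id !== item.id;
    });
  }
  return items; // unknown events leave the list unchanged
}

var list = [];
list = applyEvent(list, 'todo-added', { id: 1, text: 'Buy milk', complete: false });
list = applyEvent(list, 'todo-added', { id: 2, text: 'Walk the dog', complete: false });
list = applyEvent(list, 'todo-completed', { id: 1, text: 'Buy milk', complete: true });
console.log(list.map(function (entry) { return entry.text; }));
```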

Next Steps

Now that you’ve seen how easy it is to use the Pusher service with Mobile Services, follow these links to learn more about Pusher.

- Pusher API documentation: http://pusher.com/docs

- Pusher tutorials: http://pusher.com/tutorials

To learn more about registering and using server scripts, see Mobile Services server script reference.

Nick Harris (@cloudnick) updated CodePlex’s Windows Azure Toolkit for Windows 8 Release Preview Release Notes on 1/21/2013:

During the early previews of Windows 8, the Windows Azure Toolkit for Windows 8 provided developers with the first support for building backend services for Windows Store apps using Windows Azure. The main feedback we received from mobile developers was that they wanted a turn-key set of services for common functionality such as notifications, auth, and data.

Windows Azure Mobile Services directly reflects this feedback by enabling developers to simply provision, configure, and consume scalable backend services. The downloads for this toolkit will be removed in the week of Feb 1st 2013. Future improvements will be channeled into Windows Azure Mobile Services rather than this toolkit.

To get started with Mobile Services, sign up for a Windows Azure account and receive 10 free Mobile Services.

Josh Twist (@joshtwist) described Using the scheduler to backup your Mobile Service database in a 1/20/2013 post:

Recently I launched my first iOS application called ‘doto’. doto is a todolist app with two areas of focus: simplicity and sharing. I wanted a super simple application to share lists with my wife (groceries, trip ideas, gift ideas for the kids, checklist for the camping trip etc). For more info, check out the mini-site or watch the 90 second video:

Now that I have a real app that stores real people’s data, I feel a responsibility to ensure that I take good care of it. Whilst it’s unlikely, it is possible that I could do something silly like drop a SQL table and lose a lot of data that is important to those users. So taking a periodic backup and keeping that in a safe location is advisable.

SQL Azure has a cool export feature that creates a ‘.bacpac’ file that contains your schema and your data – it saves the file to blob storage. And what’s more, they have a service endpoint with a REST API.

This means it’s easy for me to invoke an export from a Mobile Services script. Even better, I can use the scheduler to do a daily backup.

Here’s the script I use; notice how the URL of the export service varies depending on the location of your database and server.

And now I just have to set a schedule, I’m going to go for 1 minute past midnight UTC.

Restore

If I ever need to restore the backup data I can create a new database from an import, right in the portal:

Which opens a cool wizard that even helps me navigate my blob storage containers to find the appropriate .bacpac file. To hook this new database up to my Mobile Service I could do an ETL over to the existing connected database or use the Change DB feature in the Mobile Service CONFIGURE tab:


Marketplace DataMarket, Cloud Numerics, Big Data and OData

• Sara M G Silva (@saramgsilva) posted Consuming Odata Service in Windows Store Apps (Include MVVM Pattern) to the Windows Dev Center - Windows Store Apps | Samples forum on 1/22/2013:

Introduction

The main goal of this demo is to show the steps needed to consume an OData service in Windows Store apps, using the MVVM pattern and an IoC container to manage the dependencies.

Building the Sample

You only need Visual Studio 2012 and Windows 8, both in their RTM versions.

Description

To make this sample concrete, I used the Netflix OData Catalog API (Preview), which can be found here:

For anyone who is starting out with OData services in Windows Store apps, I recommend installing the:

WCF Data Services Tools for Windows Store Apps

“The WCF Data Services Tools for Windows Store Apps installer extends the Add Service Reference experience with client-side OData support for Windows Store Apps in Visual Studio 2012.”

Here are the main points:

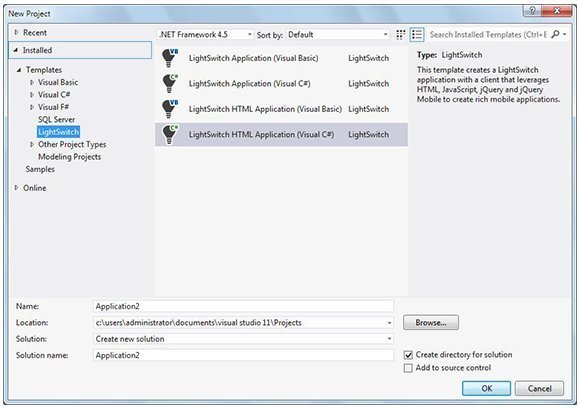

1. Creating a Blank Project like you can see in the following image:

2. Add Service Reference

3. Analyzing the NetflixService

4. The IServiceManager, FakeServiceManager and ServiceManager

4.1. The LoadNewDVDMoviesAsync method

4.2. The LoadTopDVDMoviesAsync method

4.3. The DataServiceContextAsyncExtension

4.4 The model

5. The TitlesViewModel

5.1 The LoadDataAsync method

5.2 The GroupView and ItemView

6. Binding the view model with the view

6.1. ViewModelLocator

6.2. In App.xaml

6.3. In the TitlesPage.xaml

6.4. In the TitlesPage.xaml.cs

6.5. Binding Data

7. The output / running the demo

8. Fiddler

Let's start:

1. Creating a Blank Project like you can see in the following image:

Note: I used the name Netflix.ClientApp (Win8) for the project, but I will change the namespace to Netflix.ClientApp. In the future, if I need to create Netflix.ClientApp (WP8), I can use the same namespace, and if I need to add some file as a link I will not have problems with namespaces.

2. Add Service Reference

The service reference that I added is: http://odata.netflix.com/v2/Catalog/ …

Sara continues with sections 3 and later and shows the Titles page in section 7:

• The Team Foundation Services group released the Team Foundation Service OData API (Beta) on 1/22/2013:

IMPORTANT: Beta Notes

- The OData service landing page is hosted at https://tfsodata.visualstudio.com/

- The OData service endpoint is hosted at https://tfsodata.visualstudio.com/DefaultCollection

- View changelog, up-to-date notes on known issues at: PublicNotes.txt

Overview

The Team Foundation Service OData API is an implementation of the OData protocol built upon the existing Team Foundation Service client object model used to connect to Team Foundation Service. The API is subject to change as we get feedback from customers.

To learn more about the OData protocol, you can browse the OData site at http://www.odata.org.

If you have questions or feedback about this service, please email TFSOData@Microsoft.com. Please note that this service is provided "as-is", with no guaranteed uptime and is not officially supported by Microsoft. But if you are having problems please let us know and we'll do our best to work with you.

See the Demo: There is a video on Channel 9 which shows how to get started using v1 of the service. Most of the same concepts from that video still apply to this version, but a revised video has not yet been created.

Samples: Windows 8 client (see Nisha Singh's blog entry)

On-Premises version of service (see Brian Keller's blog entry). This version of the codebase can be used against on-premises deployments of Team Foundation Server 2010 and 2012.

Getting Started

In the following section you will find meaningful information about how to consume data from the Team Foundation Service taking advantage of the OData API.

In order to authenticate with the service, you will need to enable and configure basic auth credentials on tfs.visualstudio.com:

- Navigate to the account that you want to use on https://tfs.visualstudio.com. For example, you may have https://account.visualstudio.com.

- In the top-right corner, click on your account name and then select My Profile

- Select the Credentials tab

- Click the 'Enable alternate credentials and set password' link

- Enter a password. It is suggested that you choose a unique password here (not associated with any other accounts)

- Click Save Changes

To authenticate against the OData service, you need to send your basic auth credentials in the following domain\username and password format:

- account\username

- password

- Note: account is from account.visualstudio.com, username is from the Credentials tab under My Profile, and password is the password that you just created.
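As an illustration of the credential format above, HTTP basic authentication sends the text `user:password`, Base64-encoded, in an `Authorization` header, and here the user part is `account\username`. The sketch below assumes Node.js; `basicAuthHeader` is a hypothetical helper name and the three argument values are placeholders, not real credentials.

```javascript
// Builds the value of the HTTP Authorization header for basic auth.
// The user name sent to the service has the form account\username.
function basicAuthHeader(account, username, password) {
  var credentials = account + '\\' + username + ':' + password;
  return 'Basic ' + Buffer.from(credentials, 'utf8').toString('base64');
}

var header = basicAuthHeader('account', 'username', 'password');
console.log('Authorization: ' + header);
```

Because Base64 is an encoding and not encryption, the header is only protected by the HTTPS connection to the service.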

Collections

The main resources available are Builds, Changesets, Changes, Build Definitions, Branches, Work Items, Attachments, Projects, Queries, Links and Area Paths. A couple of sample queries are provided for each resource, although complete query options are provided further down this page.

Case Sensitivity: Be aware that the OData resources are case-sensitive when making queries.

Page size defaults: the default page sizes returned by the OData service are set to 20, although you can certainly use the top and skip parameters to override that. …
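A paged query against one of these resources might be assembled as follows. This is a sketch that assumes the standard OData `$top` and `$skip` query options; `pageUrl` is a hypothetical helper, and `Builds` is used only because it is one of the resource names listed above (written with the exact casing, since resources are case-sensitive).

```javascript
var ENDPOINT = 'https://tfsodata.visualstudio.com/DefaultCollection';

// Returns the URL for one page of a resource.
// pageIndex is zero-based; pageSize overrides the default of 20.
function pageUrl(resource, pageSize, pageIndex) {
  var skip = pageSize * pageIndex;
  return ENDPOINT + '/' + resource + '?$top=' + pageSize + '&$skip=' + skip;
}

console.log(pageUrl('Builds', 50, 0));
console.log(pageUrl('Builds', 50, 2));
```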

The article continues with detailed resources tables.

Brian Benz (@bbenz) described Using Drupal on Windows Azure to create an OData repository in a 1/18/2013 post to the Interoperability @ Microsoft blog:

OData is an easy to use protocol that provides access to any data defined as an OData service provider. Microsoft Open Technologies, Inc., is collaborating with several other organizations and individuals in development of the OData standard in the OASIS OData Technical Committee, and the growing OData ecosystem is enabling a variety of new scenarios to deliver open data for the open web via standardized URI query syntax and semantics. To learn more about OData, including the ecosystem, developer tools, and how you can get involved, see this blog post.

In this post I’ll take you through the steps to set up Drupal on Windows Azure as an OData provider. As you’ll see, this is a great way to get started using both Drupal and OData, as there is no coding required to set this up.

It also won’t cost you any money – currently you can sign up for a 90 day free trial of Windows Azure and install a free Web development tool (Web Matrix) and a free source control tool (Git) on your local machine to make this happen, but that’s all that’s required from a client point of view. We’ll also be using a free tier for the Drupal instance, so you may not need to pay even after the 90 day trial, depending on your needs for bandwidth or storage.

So let’s get started!

Set up a Drupal instance on Windows Azure using the Web Gallery.

The Windows Azure team has made setting up a Drupal instance incredibly easy and quick – in a few clicks and a few minutes your site will be up and running. Once you’ve signed up for Windows Azure and have your account set up, click on New > Quick Create > from Gallery, as shown here:

Then click on the Drupal 7 instance, as shown here. The Web Gallery is where you’ll find images of the latest Web applications, preconfigured and ready to set up. Currently we’re using the Acquia version of Drupal 7 for Drupal:

Enter some basic information about your site, including the URL (.azurewebsites.net will be added on to what you choose), the type of database you want to work with (currently SQL Server and MySQL are supported for Drupal), and the region where you want your app instance deployed:

Next, add a database name, a username and password for the database, and the region where the database should be deployed:

That’s it! In a few minutes your Windows Azure Web Site dashboard will appear with options for monitoring and working with your new Drupal instance:

Setting up the OData provider

So far we have a Drupal instance but it’s not an OData provider yet. To get Drupal set up as an OData provider, we’re going to have to add a few folders and files, and configure some Drupal modules.

Because good cloud systems protect your data by backing it up and providing seamless, invisible redundancy, working with files in the cloud can be tricky. But the Windows Azure team provides a free, easy to use tool to work with files on Windows Azure, called Web Matrix. Web Matrix lets you easily download your files, work with them locally, test your work and publish changes back up to your site when you’re ready. It’s also a great development tool that supports most modern Web application development languages.

Once you’ve downloaded and installed Web Matrix on your local machine, you simply click on the Web Matrix icon on the bottom right under the dashboard, as shown in the image above. Web Matrix will confirm that you want to make a local copy of your Windows Azure Web site and download the site:

Web Matrix will detect the type of Web site you’re working with, set up a local instance Database and start downloading the Web site to the instance:

When Web Matrix is done downloading your site you’ll see a dashboard showing you options for working with your local site. For this example, we’re only going to be working with files locally, so click the files icon shown here:

We need to add some libraries and modules to our Drupal Instance to make the Windows Azure standard configuration of Drupal 7 become an OData provider. There are three sets of files we need to download and place in specific places in our instance. You’ll need Git, or your favorite Git-compatible tool installed on your local machine to retrieve some of these files:

1) Download the OData Producer Library for PHP V1.2 to your local machine from https://github.com/MSOpenTech/odataphpprod/

Under the sites > all folder, create a folder called libraries > odata (create the libraries folder if it doesn’t exist) and copy in the downloaded files.

2) Download version 2 of the Drupal Libraries API to your local machine from http://drupal.org/project/libraries

Under the sites > all folder, create a folder called modules > libraries (yes, there are two libraries directories in different places) and copy in the downloaded files.

3) Download r2integrated's OData Server files to your local machine from //git.drupal.org/sandbox/r2integrated/1561302.git

Under the sites > all folder, create a folder called modules > odata_server and copy in the downloaded files.

Here’s what the directories should look like when you’re done:

Next, click on the Publish button, to upload the new files to your Windows Azure Web site via WebMatrix. After a few minutes your files should be loaded up and ready to use.

OData Configuration in Drupal on Windows Azure

Next, we will configure the files we just uploaded to provide data to OData clients.

From the top menu, go to the Drupal modules, and navigate down to the “other” section.

Enable Libraries and OData Server, then save configuration. The modules should look like this when you’re done:

Next, go to Site Configuration from the top menu, and navigate down to the Development section. Under development, click on OData Settings

Under Node, enable page and/or article (click to expose them to OData clients), then select the fields from each Node that you want to return in an OData search. You can also return Comments, Files, Taxonomy Terms, Taxonomy Vocabularies, and Users. All are off by default and have to be enabled to expose properties, fields, and references through the OData server:

Click Save Configuration and you’re ready to start using your Windows Azure Drupal Web site as an OData provider!

One last thing - unfortunately, the default data in Drupal consists of exactly one page, so search results are not too impressive. You’ll probably want to add some data to make the site useful as an OData provider. The best way to do that is via the Drupal feeds module.

Conclusion

As promised at the beginning of this post, we’ve now created an OData provider based on Drupal to deliver open data for the open Web. From here any OData consumer can consume the OData feed and doesn’t have to know anything about the underlying data source, or even that it’s Drupal on the back end. The consumers simply see it as an OData service provider. Of course there’s more effort involved in getting your data imported, organizing it and building OData clients to consume the data, but this is a great start with minimal effort using existing, free tools.


Windows Azure Service Bus, Caching, Access Control, Active Directory, Identity and Workflow

•• Haishi Bai (@HaishiBai2010) described New features in Service Bus Preview Library (January 2013) - 1: Message Pump in a 1/25/2013 post:

Recently Microsoft announced the new Windows Azure Service Bus Push Notification Hubs, and many samples and videos have been posted on the new feature. To support Notification Hubs, a new Service Bus preview features library (Microsoft.ServiceBus.Preview.dll) has been released to the NuGet gallery. In this series of posts I’ll drill down into several other cool new features and important enhancements contained in this library.

Message Pump

Up until now, if you want to receive messages from a Windows Azure Service Bus queue or topic/subscription, you need to periodically poll the queue or the subscription asking for new messages. The following code should look quite familiar:

    while (!IsStopped)
    {
        ...
        BrokeredMessage receivedMessage = null;
        receivedMessage = Client.Receive();
        if (receivedMessage != null)
        {
            ...
            receivedMessage.Complete();
        }
        ...
        Thread.Sleep(10000);
    }

Actually, the above code is a simplified version of the code that is auto-generated when you use the “Worker Role with Service Bus Queue” template to add a new Worker Role. This pattern works well in this case because you do need a loop or other blocking wait in your Run() method to keep your role instances running. However, there are a couple of problems with this pattern.

First, the Thread.Sleep() calls cause unnecessary delays in the system – the above code can respond to at most one message every ten seconds. This kind of throughput is unacceptable to many systems. Of course we can reduce the sleep interval, let’s say to 1 second. This makes the system more responsive, but it increases the number of service calls tenfold. Polling at a 1-second interval generates 86,400 billable messages (60 * 60 * 24) per day, even if most of them are NULL messages. That doesn’t cost much – at the price of $0.01 per 10,000 billable messages it translates to 8.64 cents per day. However, that IS a lot of service calls.

Second, in some applications, especially client applications, an event-driven programming model is often preferred.

The Service Bus preview features change all this. Underneath, the library uses long-polling so that you don’t incur service transactions as often, and you get immediate feedback when a new message shows up in the pipeline. For instance, if the default long-polling timeout is 1 minute, the number of billable messages drops to 1,440 (60 * 24) per day. That’s quite an improvement in terms of reducing the number of service calls. In addition, the preview library supports an event-driven model instead of polling - you can simply wait for OnMessage events.
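The arithmetic behind these figures can be reproduced in a few lines. This is a sketch under the same assumptions as the text: the queue is idle, so every receive call comes back empty and still counts as one billable message, and the price is $0.01 per 10,000 billable messages. The function names are hypothetical.

```javascript
var SECONDS_PER_DAY = 60 * 60 * 24;
var PRICE_PER_10K_MESSAGES = 0.01; // US dollars, as quoted in the text

// Number of receive calls per day when one call is made every intervalSeconds
function receivesPerDay(intervalSeconds) {
  return SECONDS_PER_DAY / intervalSeconds;
}

// Daily cost in US dollars for a given number of billable messages
function dailyCost(billableMessages) {
  return billableMessages / 10000 * PRICE_PER_10K_MESSAGES;
}

// 10 s and 1 s polling intervals, then a 60 s long-polling timeout
[10, 1, 60].forEach(function (intervalSeconds) {
  var calls = receivesPerDay(intervalSeconds);
  console.log(intervalSeconds + ' s: ' + calls + ' calls/day, $' +
              dailyCost(calls).toFixed(4) + '/day');
});
```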

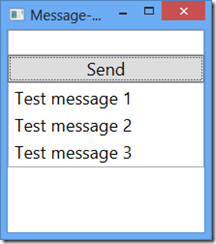

The following is a walkthrough of using the preview library. The walkthrough uses a simple WPF application that allows you to send and receive messages.

- Create a new WPF application.

- Install the preview NuGet package:

      install-package ServiceBus.Preview

- Get a minimum UI in place:

      <Window x:Class="EventPumpWPF.MainWindow"
              xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
              xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
              Title="Message-Driven Messaging" Height="350" Width="525" FontSize="18">
          <StackPanel Grid.Column="0">
              <TextBox x:Name="messageText" />
              <Button x:Name="sendMessage" Click="sendMessage_Click_1">Send</Button>
              <ListBox x:Name="messageList"/>
          </StackPanel>
      </Window>

- Modify the code-behind:

      public partial class MainWindow : Window
      {
          const string conString = "[SB connection string]";
          const string queueName = "workqueue";
          QueueClient mSender, mReceiver;

          public MainWindow()
          {
              InitializeComponent();
              mSender = QueueClient.CreateFromConnectionString(conString, queueName);
              mReceiver = QueueClient.CreateFromConnectionString(conString, queueName, ReceiveMode.ReceiveAndDelete);
              mSender.OnMessage((m) =>
              {
                  messageList.Dispatcher.Invoke(() =>
                  {
                      messageList.Items.Add(m.GetBody<string>());
                  });
              }, 1);
          }

          private void sendMessage_Click_1(object sender, RoutedEventArgs e)
          {
              mSender.Send(new BrokeredMessage(messageText.Text));
              messageText.Text = "";
          }
      }

- And that’s all! The only line that is new is highlighted – very simple and very straightforward.

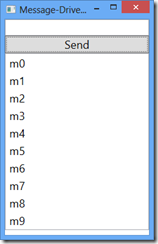

If you’ve observed closely, you might notice there’s a second parameter (highlighted in green) to the OnMessage() method. This parameter controls how many concurrent calls to the callback (the first parameter) can occur. To illustrate the effect of this parameter, let’s modify the code a little.

- First, we add a randomizer to MainWindow class:

      Random rand = new Random();

- And we’ll update our message handler to add a random sleep. This is to simulate fluctuations in processing time:

      mSender.OnMessage((m) =>
      {
          Thread.Sleep(rand.Next(1000, 3000));
          messageList.Dispatcher.Invoke(() =>
          {
              messageList.Items.Add(m.GetBody<string>());
          });
      }, 1);

- Finally, we change the sending code to send 10 messages instead of 1:

      for (int i = 0; i < 10; i++)
      {
          mSender.Send(new BrokeredMessage(messageText.Text + i.ToString()));
      }

- Now launch the program and send a message “m”, which morphs into ten messages. The code takes a while to execute because of the random sleeps and because only a single entry into the callback is allowed at a time. But because of the single-entrance limit, you eventually get all messages back in order.

- Now modify the second parameter (highlighted in green) to 10. Run the app again. Now the code takes less time to execute because the callback can be invoked multiple times concurrently. But the messages may be displayed out of order:

There you go. A very cool addition to Service Bus provided by the Service Bus preview features. The feature is very useful when you want to use event-driven programming.
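The effect of the concurrency parameter on ordering can be modelled without Service Bus at all. The sketch below is a simplified simulation, not a description of how the preview library schedules callbacks: it assumes messages are handed to the first free callback slot in arrival order, and the fixed durations stand in for the random sleeps. `completionOrder` is a hypothetical name.

```javascript
// Returns message indexes in the order their callbacks would finish.
// durations[i] is the processing time of message i, in milliseconds.
function completionOrder(durations, maxConcurrentCalls) {
  var freeAt = [];
  for (var i = 0; i < maxConcurrentCalls; i++) {
    freeAt.push(0); // every callback slot is free at time 0
  }
  var finished = durations.map(function (duration, index) {
    // Pick the slot that becomes free first
    var slot = 0;
    for (var s = 1; s < freeAt.length; s++) {
      if (freeAt[s] < freeAt[slot]) {
        slot = s;
      }
    }
    var end = freeAt[slot] + duration;
    freeAt[slot] = end;
    return { index: index, end: end };
  });
  // Earlier finish first; ties keep arrival order
  finished.sort(function (a, b) {
    return a.end - b.end || a.index - b.index;
  });
  return finished.map(function (entry) { return entry.index; });
}

var durations = [3000, 1000, 2000, 1500];
console.log('1 concurrent call:   ', completionOrder(durations, 1));
console.log('10 concurrent calls: ', completionOrder(durations, 10));
```

With a single slot each message has to wait for the previous one, so arrival order is preserved; with more slots than messages, the shortest callback finishes first regardless of arrival order.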

• Vittorio Bertocci (@vibronet) posted Group & Role Claims: Use the Graph API to Get Back IsInRole() and [Authorize] in Windows Azure AD Apps on 1/22/2013:

Welcome to a new installment of the “addressing the most common questions about Windows Azure AD development” series! This time I am going to tackle one question that I know is very pressing for many of you guys:

How do I get role and group membership claims for users signing in via Windows Azure AD?

Right now the tokens issued by Windows Azure AD in Web sign-on flows do not contain groups or role claims. In this post I will show you how to leverage the Graph API and the WIF extensibility model to work around the limitation; I will also take advantage of this opportunity to go a bit deeper in the use of the Graph API, which means that the post will be longer (and at times more abstract) than a simple code walkthrough. As usual, those are my personal musings and my own opinions. I am writing this on a Saturday night (morning?) hence I plan to have fun with this :-) For the ones among you who are in a hurry or have low tolerance for logorrhea, please feel free to head to the product documentation on MSDN.

Bird’s Eye View of the Solution

Most pre-claims authorization constructs in ASP.NET are based on the idea of roles baked in IPrincipal: namely, I am thinking of the <authorization> config element, the [Authorize] attribute and of course the IsInRole() method. There’s an enormous amount of existing code based on those primitives, and abundant literature using those as the backbone of authorization enforcement in .NET applications.

This state of affairs was well known to the designer of the original WIF 1.0, who provided mechanisms for projecting claims with the appropriate semantic (and specifically http://schemas.microsoft.com/ws/2008/06/identity/claims/role) as roles in IPrincipal. We even have a mechanism which allows you to specify in config a different, arbitrary claim type to be interpreted as role, should your STS use a different claim type to express roles.

As mentioned in the opening, right now Windows Azure AD does not send anything that can be interpreted as a role claim. The good news, however, is that Windows Azure AD offers the Graph API, a complete API for querying the directory and retrieving any information stored there, for any user; that includes the signed-in user, of course, and the roles he/she belongs to. If you need to know what roles your user is in, all you need to do (over-simplifying a bit, for now) is to perform a GET on a resource of the form https://graph.windows.net/yourtenant.onmicrosoft.com/Users('guido@yourtenantname.onmicrosoft.com')/MemberOf. That is pretty sweet, and in fact is just a ridiculously tiny sliver of all the great things you can do with the Graph API; however, if you did this from your application code, that would not help you to leverage the user’s role information from <authorization> and the like. By the time you are in your application’s code it is kind of too late, as the ClaimsPrincipal representing the caller has already been assembled and that’s where the info should be for those lower-level mechanisms to kick in. True, you could do something to the ClaimsPrincipal retroactively, but that’s kind of brittle and messy.
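To make the shape of that resource concrete, here is a small sketch that assembles the MemberOf URL from a tenant name and a user principal name. `memberOfUrl` is a hypothetical helper and the argument values are placeholders. In keeping with the "over-simplifying" caveat above, it only builds the address; the access token that a real request needs is not shown.

```javascript
// Builds the Graph resource that lists what a user is a member of.
// Single quotes inside the key are doubled, as in OData string literals.
function memberOfUrl(tenant, userPrincipalName) {
  var key = userPrincipalName.replace(/'/g, "''");
  return 'https://graph.windows.net/' + tenant + "/Users('" + key + "')/MemberOf";
}

var url = memberOfUrl('yourtenant.onmicrosoft.com', 'guido@yourtenant.onmicrosoft.com');
console.log(url);
```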

There is another solution here, which can save both goat and cabbage (can you really say this in English?:-)). The WIF processing pipeline offers plenty of opportunities for you to insert custom logic for influencing how the token validation and ClaimsPrincipal creation takes place: details in Chapter 3 of Programming WIF. Namely, there is one processing stage that is dedicated to incoming claims processing. Say that you have logic for filtering incoming claims, modifying them or extending the claims set you are getting from the STS with data from other sources. All you need to do is to derive from the ClaimsAuthenticationManager class, override the Authenticate method and add a reference to your custom class in the application’s config.

So, the solution I propose is simple: we can create a custom ClaimsAuthenticationManager that at sign-in time reaches back to the Graph, retrieves the roles information, creates roles claims accordingly and adds them to the ClaimsPrincipal. Everything else downstream from that will be able to see the roles information just as if it had been originally issued by the STS.

The code of the custom ClaimsAuthenticationManager is going to be pretty simple, also thanks to the use of AAL for obtaining the necessary access token: just a tad more than 30 lines, and most of it string manipulation. In my experience, the thing that people often find tricky is the work that is necessary for enabling your Web application to invoke the Graph; furthermore, even if AAL reduces to a mere 3 the lines of code necessary for obtaining an access token, the structure of the parameters you need to pass is not always immediately clear to everybody. Here I’ll do my best to explain both: they are not especially hard and I am confident you’ll grok it right away. That said, I do hope we’ll manage to automate A LOT of this so that in the future you won’t be exposed to this complexity unless you want to change the defaults. We kind of already do this for the Web SSO part: if you use the MVC tool you can get a functioning MVC4 app which uses Windows Azure AD for Web SSO in no time. In fact, in this post I’ll use such an app as the starting point.

Ready? Let’s dive.

Prepping the GraphClaimsAuthenticationManager Wireframe

Let’s get this immediately out of the way; also, it will provide structure for the rest of the work.

As mentioned, I assume you already have an MVC4 app that you configured with the MVC tool to integrate with your Windows Azure AD tenant for sign-in. If you didn’t do it yet, please head to this page now and follow the instructions for configuring your application. You can skip the publication to Windows Azure Web Sites, for this post we’ll be able to do everything on the local box. If you want to see the tool in action, check out this BUILD talk.

Create a new class library (though you could just add a class to your web project) and call it something meaningful: I called mine GraphClaimsAuthenticationManager.

Add a reference to System.IdentityModel, rename the class1.cs file to GraphClaimsAuthenticationManager.cs, then change the code as follows:

    using System.Security.Claims;

    public class GraphClaimsAuthenticationManager : ClaimsAuthenticationManager
    {
        public override ClaimsPrincipal Authenticate(string resourceName, ClaimsPrincipal incomingPrincipal)
        {
            if (incomingPrincipal != null && incomingPrincipal.Identity.IsAuthenticated == true)
            {
                // get a token for calling the Graph

                // query the Graph for the current user's memberships

                // add a role claim for every membership found
            }
            return incomingPrincipal;
        }
    }

This is pretty much the default ClaimsAuthenticationManager implementation: it passes through all the incoming claims to the next stage undisturbed. Our job will be to fill in the method’s body following the comment placeholders I wrote there. You can make your application pick up and execute your class by adding a reference to the class library project and inserting the proper config element to the web.config, as shown below (sci-fi formatting, you would not break strings IRL).

    <system.identityModel>
      <identityConfiguration>
        <claimsAuthenticationManager
          type="GraphClaimsAuthenticationManager.GraphClaimsAuthenticationManager,
                GraphClaimsAuthenticationManager" />

I’d suggest hitting F5 and seeing if everything still works; often something silly like a misspelled namespace in the type attribute will create stumbling points, and you want to catch that before there are more moving parts later on.
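The last comment placeholder in the Authenticate method, adding a role claim for every membership found, amounts to a small data transformation. The following is a language-neutral sketch written in JavaScript, not WIF code: the principal is modelled as a plain object, `addRoleClaims` and `isInRole` are hypothetical functions, and the membership names are made-up values standing in for whatever the Graph query returns.

```javascript
var ROLE_CLAIM_TYPE = 'http://schemas.microsoft.com/ws/2008/06/identity/claims/role';

// Returns a copy of the principal with one role claim per membership.
// Unauthenticated principals are passed through untouched.
function addRoleClaims(principal, membershipNames) {
  if (!principal || !principal.isAuthenticated) {
    return principal;
  }
  var roleClaims = membershipNames.map(function (name) {
    return { type: ROLE_CLAIM_TYPE, value: name };
  });
  return {
    isAuthenticated: principal.isAuthenticated,
    claims: principal.claims.concat(roleClaims)
  };
}

// Mimics what a role check does once role claims are present
function isInRole(principal, roleName) {
  return principal.claims.some(function (claim) {
    return claim.type === ROLE_CLAIM_TYPE && claim.value === roleName;
  });
}

var incoming = { isAuthenticated: true, claims: [{ type: 'name', value: 'guido' }] };
var outgoing = addRoleClaims(incoming, ['Directory Readers', 'Sales']);
console.log(isInRole(incoming, 'Sales'), isInRole(outgoing, 'Sales'));
```

Because the claims are added before the principal reaches application code, a role check downstream sees them exactly as it would see claims issued by the STS.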

Enabling An MVC App to Invoke the Graph API

Alrighty, now for the first interesting part.

The next thing we need to do is enable your MVC application to call back into the Graph and inquire about the user’s roles. But in order to do that, we first need to understand how our MVC application is represented in Windows Azure AD and what we need to change.

When you run the MVC tool for enabling Windows Azure authentication you are basically getting lots of the steps I described here done for you. As a quick recap, the tool

- asks you which directory tenant you want to work with

- gathers your admin credentials and uses them to get an access token for the Graph API

- Invokes the Graph to create a new ServicePrincipal representing your MVC app. It does so by generating a new random GUID as identifier, assigning your local IIS express and project address as return URL, and so on

- Reaches out for the WS-Federation metadata document of the tenant you have chosen, and uses it to generate the necessary WIF settings to configure your app for Windows Azure SSO with the tenant of choice

…and that’s what enables you to hit F5 right after the wizard and see the SSO flow unfold in front of your very eyes, without the need of writing any code. Veeery nice.

Now, from the above you might be tempted to think that a ServicePrincipal is the equivalent of an RP in ACS: an entry which represents an entity meant to be a token recipient. In fact, a ServicePrincipal can play more roles than that of a simple RP: for example, a ServicePrincipal can also represent an applicative identity, with its own associated credential, which can be used for obtaining a token to be used somewhere else. Remember ACS’ service identities? That’s kind of the same thing.

I guess you are starting to figure out what the plan is here. We want to use the app’s ServicePrincipal credentials (in trusted subsystem fashion) to obtain a token for calling the Graph. That’s a fine plan, but it cannot be implemented without a bit more work. Namely:

- The MVC tool does not do anything with the ServicePrincipal’s credentials. We must get to know them, and the only way to do that after creation is to assign new ones. We’ll do that by updating the existing ServicePrincipal via cmdlets

- Calling the Graph is a privilege reserved to entities belonging to well-known roles: Company Administrators for read/write access and Directory Readers for read-only access. Needless to say, the ServicePrincipal created by the MVC tool belongs to neither. We’ll use the cmdlets here as well to add the app’s ServicePrincipal to the Directory Readers role.

Luckily it’s all pretty straightforward. The first thing we need to do is retrieve a valid identifier for the ServicePrincipal, so that we can get hold of it and modify it. That is pretty easy to do. Go to the app’s web.config, and you’ll find the AppPrincipalId GUID in multiple places: in the identityConfiguration/audienceUris element of the <system.identityModel> section, or in the realm property of the system.identityModel.services/federationConfiguration/wsFederation element. Put it in the clipboard (without the “SPN:”!) and open the O365 PowerShell cmdlets prompt. Then, consider the following script. Keep an eye on the line numbers: the line-by-line explanation that follows refers to them.

1: Connect-MsolService
2: Import-Module msonlineextended -Force
3: $AppPrincipalId = '62b4b0eb-ef3e-4c28-7777-2c7777776593'
4: $servicePrincipal = (Get-MsolServicePrincipal -AppPrincipalId $AppPrincipalId)
5: Add-MsolRoleMember -RoleMemberType "ServicePrincipal" -RoleName "Directory Readers" -RoleMemberObjectId $servicePrincipal.ObjectId
6:
7: $timeNow = Get-Date
8: $expiryTime = $timeNow.AddYears(1)
9: New-MsolServicePrincipalCredential -AppPrincipalId $AppPrincipalId -Type symmetric -StartDate $timeNow -EndDate $expiryTime -Usage Verify -Value AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA/Q8=

Line by line:

Line 1: connect to the tenant. You’ll be prompted for your admin user, make sure you choose the same tenant you have used to configure the MVC app :-)

Line 2: it imports the O365 cmdlets, and specifically the ones about ServicePrincipals. The “force” flag is mandatory on Win8 boxes.

Line 3: I assign the AppPrincipalId from the web.config so I don’t have to paste it every time.

Line 4: retrieve the ServicePrincipal

Line 5: add it to the “Directory Readers” role

Lines 7 and 8: get the current date and the date one year from now, to establish the validity boundaries of the credentials we are going to assign to the ServicePrincipal

Line 9: create a new ServicePrincipalCredential of type symmetric key (there are other flavors, like certificate based creds) and assign it to the app’s ServicePrincipal

Simple, right? Well, I have to thank Mugdha Kulkarni from the Graph team for this script. She wrote it for me while I was prepping for the BUILD talk, though in the end I decided I didn’t have enough time to show it on stage. Thank you Mugdha, told you this was going to come in handy! ;-)

Anyway, we’ve done our first task: our app now has the right to invoke the Graph. Let’s get back to the GraphClaimsAuthenticationManager and write some code to exercise that right.
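The value passed to -Value on line 9 of the script is a Base64-encoded symmetric key, and the same string is later decoded with Convert.FromBase64String in the app. If you need to mint a key of your own, something along these lines should do. It is only a sketch: the SymmetricKeyGenerator class is a hypothetical helper, and the 32-byte default is an assumption, since the walkthrough does not state a required key size.

```csharp
using System;
using System.Security.Cryptography;

public static class SymmetricKeyGenerator
{
    // Returns a random key encoded as Base64, ready to paste after -Value.
    // The 32-byte default is an assumption, not a documented requirement.
    public static string NewBase64Key(int keySizeInBytes = 32)
    {
        var key = new byte[keySizeInBytes];
        using (var rng = RandomNumberGenerator.Create())
        {
            // Fill the buffer from a cryptographically strong random source.
            rng.GetBytes(key);
        }
        return Convert.ToBase64String(key);
    }
}
```

Whatever value you generate has to be kept in sync between the cmdlet call and the application code that later presents it.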

Using AAL to Obtain an Access Token for the Graph API

Get back to VS and paste the following at the beginning of the if block in the Authenticate method:

string appPrincipalID = "62b4b0eb-ef3e-4c28-7777-2c7777776593";
string appKey = "AAAAAAAAAAAAAAAAAAAAAAAAAAAA/Q8=";

string upn = incomingPrincipal.FindFirst("http://schemas.xmlsoap.org/ws/2005/05/identity/claims/upn").Value;
string tenantID = incomingPrincipal.FindFirst("http://schemas.microsoft.com/ws/2012/10/identity/claims/tenantid").Value;

That is pretty scrappy code, I’ll readily admit. The first 2 lines hold the app’s ServicePrincipal id and key, respectively. I could have retrieved them from the config, but if I do everything how are you going to have fun? ;-)

The next 2 lines retrieve the UPN of the incoming user (“username@domain”) and the ID of the directory tenant he/she is coming from, both very important values for crafting our query.

VERY IMPORTANT, especially for you Observers landing on this post from the future (aren’t you sad that Fringe ended? Luckily the finale wasn’t terrible).

The claims used above are the claims from Windows Azure AD available TODAY. Those claims are very likely to change, hence the above will no longer be valid, either because the claim types will no longer be there or because more appropriate alternatives will emerge.

Next, we are going to inject the ServicePrincipal credentials in AAL and obtain a token for calling the Graph. As mentioned, this requires just a few lines, but the parameters are a bit arcane. Bear with me as I walk you through their function and meaning. Also, don’t forget to add a reference to the AAL NuGet package and the associated using directive! You can do that by right-clicking on the GraphClaimsAuthenticationManager project in Solution Explorer, choosing Manage NuGet Packages, searching for AAL and referencing the result.

1: // get a token for calling the graph
2: AuthenticationContext _authContext = new AuthenticationContext(string.Format("https://accounts.accesscontrol.windows.net/{0}", tenantID));
3: SymmetricKeyCredential credential = new SymmetricKeyCredential(string.Format("{0}@{1}", appPrincipalID, tenantID), Convert.FromBase64String(appKey));
4: AssertionCredential _assertionCredential = _authContext.AcquireToken(string.Format("00000002-0000-0000-c000-000000000000/graph.windows.net@{0}", tenantID), credential);
5: string authHeader = _assertionCredential.CreateAuthorizationHeader();

OK. Ready?

Line 2: we begin by initializing AuthenticationContext to the Windows Azure AD tenant we want to work with. We’ll use the AuthenticationContext for accessing from our code the features that Windows Azure AD offers. In order to do that, we simply pass the path of the Windows Azure AD tenant we want to work with.

Line 3: we create a representation of the app’s ServicePrincipal credentials, as an instance of the class SymmetricKeyCredential. We do that by combining its symmetric key with the ServicePrincipal name, obtained in turn by combining the ServicePrincipal GUID (used as AppPrincipalId in the cmdlets earlier) and the ID of the current tenant. The reason we need both the AppPrincipalId and the tenant ID is that we want to make sure we specify we are referring to THIS principal in THIS tenant. If our app were a multitenant app, designed to work with multiple AAD tenants, the same AppPrincipalId would (possibly) be used across multiple tenants. We’d need to ensure we are getting a token for the right tenant, hence we qualify the name accordingly: appprincipalid@tenant1, appprincipalid@tenant2 and so on. Here we are working on a single tenant, hence there is no ambiguity, but we have to use that format anyway.

Line 4: we ask the AuthenticationContext (hence the directory tenant) to issue an access token for the Graph.

We need to prove who we are, hence we pass the credentials. Also, we need to specify which resource we are asking a token for, hence the string.Format clause in the call. You see, the Graph is in itself a resource; and just like your app, it is represented with a ServicePrincipal. The string 00000002-0000-0000-c000-000000000000 happens to be its AppPrincipalId, and graph.windows.net is the hostname; qualify the two with the target tenantID and you get the Graph ServicePrincipal name.

Line 5: with this line we retrieve (from the results of the call to AcquireToken) the string containing the access token we need to call the Graph. CreateAuthorizationHeader will simply put it in the form “Bearer <token>” for us: less work when we put it in the HTTP header for the call.
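Since the naming scheme is the arcane part, here it is in isolation. This is just a sketch of the string formats described above, with no AAL involved; the GraphNames class and its method names are hypothetical, introduced only for illustration.

```csharp
using System;

public static class GraphNames
{
    // AppPrincipalId and hostname of the Graph, as quoted in the walkthrough.
    public const string GraphAppPrincipalId = "00000002-0000-0000-c000-000000000000";
    public const string GraphHost = "graph.windows.net";

    // Path of the tenant handed to the AuthenticationContext.
    public static string TenantAuthority(string tenantId)
    {
        return string.Format("https://accounts.accesscontrol.windows.net/{0}", tenantId);
    }

    // "appprincipalid@tenant": THIS principal in THIS tenant.
    public static string ClientName(string appPrincipalId, string tenantId)
    {
        return string.Format("{0}@{1}", appPrincipalId, tenantId);
    }

    // "appprincipalid/hostname@tenant": the Graph ServicePrincipal name.
    public static string GraphResourceName(string tenantId)
    {
        return string.Format("{0}/{1}@{2}", GraphAppPrincipalId, GraphHost, tenantId);
    }

    // The "Bearer <token>" form that ends up in the HTTP Authorization header.
    public static string BearerHeader(string accessToken)
    {
        return "Bearer " + accessToken;
    }
}
```

For a multitenant app the only thing that changes from one call to the next is the tenantId argument, which is exactly why every name carries it.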

Getting the Memberships and Enriching the Claims Collection of the Current Principal

A last effort and we’ll be done with our GraphClaimsAuthenticationManager! I’ll just put all the code there and intertwine the explanation of what’s going on in the description of every line. Paste the code below right after the AAL code just described, still within the if block of the Authenticate method.

1: // query the Graph for the current user's memberships
2: string requestUrl = string.Format("https://graph.windows.net/{0}/Users('{1}')/MemberOf", tenantID, upn);
3: HttpWebRequest webRequest = WebRequest.Create(requestUrl) as HttpWebRequest;
4: webRequest.Method = "GET";
5: webRequest.Headers["Authorization"] = authHeader;
6: webRequest.Headers["x-ms-dirapi-data-contract-version"] = "0.8";
7: string jsonText;
8: var httpResponse = (HttpWebResponse)webRequest.GetResponse();
9: using (var streamReader = new StreamReader(httpResponse.GetResponseStream()))
10: {
11:     jsonText = streamReader.ReadToEnd();
12: }
13: JObject jsonResponse = JObject.Parse(jsonText);
14: var roles =
15:     from r in jsonResponse["d"]["results"].Children()
16:     select (string)r["DisplayName"];
17:
18: // add a role claim for every membership found
19: foreach(string s in roles)
20:     ((ClaimsIdentity)incomingPrincipal.Identity).AddClaim(new Claim(ClaimTypes.Role, s, ClaimValueTypes.String, "GRAPH"));

Line 2: we craft the URL representing the resource we want to obtain. We are using the OData query syntax, which happens to be very intuitive. I’ll break this query down for you. Note that every element builds on its predecessors:

https://graph.windows.net/

This indicates the Windows Azure feature we want to access. In this case, it is the Graph API: if we had wanted to access a token issuing endpoint, or a metadata document, we would have used a different URL accordingly.

{tenantID}

This indicates which AAD tenant we want to query. Here I am using tenantID (a GUID) because it is pretty handy: I received it with the incoming claims. However, I could have used the tenant domain (the cloud-managed ones are of the form ‘tenantname.onmicrosoft.com’) just as well.

/Users

/Users indicates the entity I want to GET. If I stopped the query here, I’d get a collection of all the users in the tenant.

('{upn}')

Adding this element filters the users’ list to select a specific entry, the one of the user that matches the corresponding UPN. Once again, the UPN is not the only way of identifying a user. Every entity in the directory has its own (GUID) identifier, and if I had access to it (the web sign-on token did not carry it, but I could have gotten it as the result of a former query) I could use it as search key. In fact, that would be even more robust, given that the UPN is not immutable… though it is quite unlikely that a UPN would get reassigned during your session :-).

If we stopped the query here, we’d get back a representation of the user matching our search key.

/MemberOf

Assuming that the query so far produced a user, /MemberOf returns all the roles and security groups the user belongs to.

Lines 3 and 4: standard HttpWebRequest initialization code. I guess I’ll have to start using HttpClient soon, or Daniel will stop greeting me in the hallways ;-)

Line 5: we add the header with the access token we obtained earlier.

Line 6: we add a well-known header, which specifies the version of the API we want to work with. This header is MANDATORY, no version no party.

Lines 7 to 12: standard request execution and reading of the response stream into a string. We expect this string to be filled with JSON containing the answer to our query.
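For those who would rather not wait for the HttpClient version, the same request can be sketched roughly as follows. This swaps HttpWebRequest for System.Net.Http and is not the code used in the walkthrough; the GraphRequests class and BuildMemberOfRequest method are hypothetical names, and the sketch only builds the request so that the URL and headers can be inspected before anything goes over the wire.

```csharp
using System;
using System.Net.Http;

public static class GraphRequests
{
    // Builds the GET request for the memberships of the user identified by upn.
    public static HttpRequestMessage BuildMemberOfRequest(string tenantId, string upn, string authHeader)
    {
        // Same URL shape as in the walkthrough: tenant, user key, then /MemberOf.
        string requestUrl = string.Format(
            "https://graph.windows.net/{0}/Users('{1}')/MemberOf", tenantId, upn);

        var request = new HttpRequestMessage(HttpMethod.Get, requestUrl);

        // authHeader is already in the "Bearer <token>" form, so add it verbatim.
        request.Headers.TryAddWithoutValidation("Authorization", authHeader);

        // The mandatory API version header.
        request.Headers.Add("x-ms-dirapi-data-contract-version", "0.8");

        return request;
    }
}
```

Sending it is then a matter of passing the message to HttpClient.SendAsync and reading the body with response.Content.ReadAsStringAsync(), which yields the same JSON text that lines 7 to 12 store in jsonText.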