Windows Azure and Cloud Computing Posts for 9/8/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

• Updated 9/8/2011 6:00 PM PDT with articles marked • by Chris Hoff, Eric Nelson, Jim O’Neil, SQL Azure Communications team, and System Center Virtual Machine Manager team.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

• SQL Server Communications sent the following e-mail on 9/8/2011:

What should you know about the upcoming CTP of SQL Azure Reporting?

Microsoft has extended its best-in-class reporting offering to the cloud with SQL Azure Reporting, enabling you to deliver rich insights to even more users without the need to deploy and maintain reporting infrastructure. In our August communication, we discussed the scope of the upcoming September 2011 CTP of SQL Azure Reporting, which is the last CTP before SQL Azure Reporting's commercial release.

Before the upcoming CTP is available, there are a few things you should know:

1. This upcoming CTP will be deployed to a different environment than the current Limited CTP. Microsoft will NOT automatically migrate your reports to the new CTP environment. If you wish to keep your reports and use them in the new CTP, make sure you have a local copy of the Business Intelligence Development Studio (BIDS) project. Once the upcoming CTP is available, you can republish the reports from BIDS to the new Web Service endpoint URL, which you will get from the new portal after you provision a new "server".

2. After the upcoming CTP is rolled out, we will replace the current "Reporting" page on the Windows Azure portal (http://windows.azure.com) with a new Reporting portal experience. You will NOT be able to access information related to the Limited CTP from the new portal page (e.g., the old Web Service endpoint URL and Admin user name). If you want to continue using the current CTP, make sure to copy and save that information.

Nominate your company for the Microsoft SQL Azure Reporting Customer Program

We are looking for a small number of customers who are interested in using SQL Azure Reporting in production. Selected customers will be invited to an NDA customer program, managed by the SQL Azure Reporting Development Team, to provide product feedback.

To apply for this NDA Customer Program, please fill out the form on Microsoft Connect: http://connect.microsoft.com/BusinessPlatform/Survey/Survey.aspx?SurveyID=13263.

Here are some benefits of the program:

- Free technical assistance on SQL Azure Reporting, provided directly by the Microsoft SQL Azure Reporting Development Team during the program engagement

- Dedicated Program Manager during the engagement

The deadline for self-nomination is 9/22/2011. The Customer Selection team will review all nominations weekly, and customers will be informed of the selection results by e-mail. We encourage customers who would like to use SQL Azure Reporting in production to sign up for the program to get direct support from the SQL Azure Reporting Team.

Sincerely,

The SQL Azure Reporting team


Marketplace DataMarket and OData

No significant articles today.


Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Patriek Van Dorp (@pvandorp) posted Windows Azure AppFabric ServiceBus Enhancements on 9/7/2011:

I just received an email from the Windows Azure Team announcing the release of some ServiceBus enhancements. This is what they wrote:

“Today we are excited to announce an update to the Windows Azure AppFabric Service Bus. This release introduces enhancements to Service Bus that improve pub/sub messaging through features like Queues, Topics and Subscriptions, and enables new scenarios on the Windows Azure platform, such as:

- Async Cloud Eventing – Distribute event notifications to occasionally connected clients (e.g. phones, remote workers, kiosks, etc.)

- Event-driven Service Oriented Architecture (SOA) – Building loosely coupled systems that can easily evolve over time

- Advanced Intra-App Messaging – Load leveling and load balancing for building highly scalable and resilient applications

These capabilities have been available for several months as a preview in our LABS/Previews environment as part of the Windows Azure AppFabric May 2011 CTP. More details on this can be found here: Introducing the Windows Azure AppFabric Service Bus May 2011 CTP.

To help you understand your use of the updated Service Bus and optimize your usage, we have implemented the following two new meters in addition to the current Connections meter:

- Entity Hours – A Service Bus “Entity” can be any of: Queue, Topic, Subscription, Relay or Message Buffer. This meter counts the time from creation to deletion of an Entity, totaled across all Entities used during the period.

- Message Operations – This meter counts the number of messages sent to, or received from, the Service Bus during the period. This includes message receive requests that return empty (i.e. no data available).

These two new meters are provided to best capture and report your use of the new features of Service Bus. You will continue to only be charged for the relay capabilities of Service Bus using the existing Connections meter. Your bill will display your usage of these new meters but you will not be charged for these new capabilities.

We continue to add additional capabilities and increase the value of Windows Azure to our customers and are excited to share these new enhancements to our platform. For any questions related to Service Bus, please visit the Connectivity and Messaging – Windows Azure Platform forum.”

Windows Azure Platform Team
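The announcement defines the two new meters only in prose. As a rough illustration of what each one counts, here is a minimal Python sketch. The `Entity` record, the `(operation, payload)` log format and the plain hour arithmetic are assumptions made for illustration; they are not how Microsoft actually collects, rounds or bills usage (and, per the announcement, these meters are reported but not charged).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, Optional, Tuple


@dataclass
class Entity:
    """Hypothetical usage record for one Service Bus entity."""
    name: str
    kind: str                           # "Queue", "Topic", "Subscription", "Relay" or "MessageBuffer"
    created: datetime
    deleted: Optional[datetime] = None  # None means the entity still exists


def entity_hours(entities: Iterable[Entity], start: datetime, end: datetime) -> float:
    """Creation-to-deletion time of every entity, clipped to [start, end), totaled in hours."""
    total = timedelta()
    for e in entities:
        alive_from = max(e.created, start)
        alive_to = min(e.deleted or end, end)
        if alive_to > alive_from:
            total += alive_to - alive_from
    return total.total_seconds() / 3600


def message_operations(ops: Iterable[Tuple[str, Optional[str]]]) -> int:
    """Count sends and receive requests; an empty receive (payload None) still counts."""
    return sum(1 for op, _payload in ops if op in ("send", "receive"))


day = datetime(2011, 9, 8)
entities = [
    Entity("orders", "Queue", day, day + timedelta(hours=12)),  # lives 12 hours
    Entity("events", "Topic", day + timedelta(hours=18)),       # lives 6 hours until period end
]
ops = [("send", "msg-1"), ("receive", "msg-1"), ("receive", None)]
print(entity_hours(entities, day, day + timedelta(days=1)))  # 18.0
print(message_operations(ops))                               # 3
```

Note that the empty receive in the last tuple still counts as an operation, matching the announcement's statement that receive requests returning no data are included.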


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Avkash Chauhan reported a solution for Windows Azure: XML Configuration Error while deploying a package to Windows Azure Management Portal in a 7/8/2011 post:

You may hit a “Bad XML” error while deploying your Windows Azure application because of a problem in your configuration XML. The error can occur whether you deploy directly through the Windows Azure Management Portal or from Visual Studio 2010.

The error details are as follows:

Error: The provided configuration file contains XML that could not be parsed. [Xml_BadAttributeChar]

Arguments: <,0x3C,6,90

Debugging resource strings are unavailable. Often the key and arguments provide sufficient information to diagnose the problem. See http://go.microsoft.com/fwlink/?linkid=106663&Version=4.0.60531.0&File=System.Xml.dll&Key=Xml_BadAttributeChar Find more solutions in the Windows Azure support forum.


When you deploy the package directly through the Windows Azure Management Portal, the same error is shown in an error dialog.

Solution:

Your Windows Azure application has two configuration files: ServiceDefinition.csdef and ServiceConfiguration.cscfg. When you build the package, the contents of ServiceDefinition.csdef are packed into the CSPKG file, so you end up with the CSPKG file plus ServiceConfiguration.cscfg, which you use together to deploy the application.

I found that XML errors in ServiceDefinition.csdef are reported at build time, so the error above is most likely caused by an XML error in ServiceConfiguration.cscfg. Check that file thoroughly for malformed XML.

Once you fix the XML errors in ServiceConfiguration.cscfg, the deployment should proceed without errors.
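The Arguments line `<,0x3C,6,90` appears to carry the offending character, its hex code, and the line and position where it was found, which suggests a raw `<` inside an attribute value on line 6. One way to find such a problem before uploading is to run the .cscfg through any XML parser. The sketch below uses Python's standard `xml.etree.ElementTree`; the sample configuration and the helper names `find_xml_error` and `check_cscfg` are illustrative assumptions, not part of the Windows Azure SDK. It checks well-formedness only, not conformance to the service configuration schema, and its column numbering may not match the position reported by .NET.

```python
import xml.etree.ElementTree as ET
from typing import Optional, Tuple


def find_xml_error(xml_text: str) -> Optional[Tuple[int, int, str]]:
    """Return None if xml_text is well-formed XML, else (line, column, message)."""
    try:
        ET.fromstring(xml_text)
    except ET.ParseError as err:
        line, column = err.position
        return line, column, str(err)
    return None


def check_cscfg(path: str) -> Optional[Tuple[int, int, str]]:
    """Hypothetical helper: check a .cscfg file on disk."""
    # "utf-8-sig" strips the byte-order mark that Visual Studio often writes.
    with open(path, encoding="utf-8-sig") as f:
        return find_xml_error(f.read())


# A hypothetical configuration with a raw "<" inside an attribute value on line 6.
BAD_CSCFG = """<?xml version="1.0" encoding="utf-8"?>
<ServiceConfiguration serviceName="MyService" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="WebRole1">
    <Instances count="2" />
    <ConfigurationSettings>
      <Setting name="Greeting" value="a<b" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>"""

print(find_xml_error(BAD_CSCFG))                            # reports line 6
print(find_xml_error(BAD_CSCFG.replace("a<b", "a&lt;b")))  # None once "<" is escaped
```

The fix for this particular error is to escape the character (`&lt;` for `<`) or remove it from the setting value.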

The Microsoft Case Studies Team reported Ticketing Company [Flavorus] Scales to Sell 150,000 Tickets in 10 Seconds by Moving to Cloud Computing Solution in a 9/2/2011 case study (missed when published):

Flavorus sells event tickets for venues, artists, and promoters through its website. In January 2011, it decided to host its ticketing application in the cloud, rather than on-premises, to handle traffic for a large music festival. The company built its Jetstream application to work with the Windows Azure platform and adopted sharding, a partitioning technique that spreads ticketing data across multiple database servers. It spent only a few months developing and testing the solution. During testing, Flavorus sold 150,000 tickets in 10 seconds by using 550 Microsoft SQL Azure databases. When tickets went on sale on April 23, Jetstream worked flawlessly. The company uses the application for all of its high-volume events and benefits from dramatically reduced costs and capital expenditures, exponential scalability, high data reliability, fast time-to-market, and a new competitive landscape. [Link added.]

Situation

Started in 1999, Flavorus is a primary ticket seller, selling tickets to individuals on behalf of its customers, which include venues, artists, and promoters. Its main revenue source is service fees on ticket sales. The company is the largest ticket seller of electronic music events in the United States. “We have advanced client services,” says James Reichardt, Chief Technology Officer (CTO) and Lead Programmer at Flavorus. “We can handle festivals, but we’re still growing. We’re not as big as Ticketmaster yet.”

Flavorus is a midsize company with sales, marketing, customer service and support, design, and development departments. On its website, the company hosts a complete ticketing solution that makes it possible for customers to create an engaging and easy-to-use ticket sales page for an event in minutes. Selling tickets on Flavorus is free, and the company provides flexible ticket-payment options and real-time management tools where customers can view a breakdown of sales and projected sales.


Flavorus provides ticket buyers with a variety of services, including low service fees and multiple ticket types. The company supports traditional tickets, print-at-home tickets, and mobile tickets. “As far as ticket buyers are concerned, usability is the biggest challenge,” says Reichardt. “It is one thing to buy a ticket, but it’s another thing to be able to get into the venue easily—especially when it comes to large events. We offer services that aid concert-goers in getting into the event as fast as possible.” Flavorus recently introduced paperless ticketing that features a customizable card that fits into a wallet like a credit card. It can hold multiple tickets and is scanned at the entrance, just as a paper ticket would be.

Flavorus also makes it possible for its customers to connect with ticket buyers by using marketing tools. “We offer added functionality for both our customers and theirs,” says Reichardt. “We provide built-in social networking tools and tie-ins to popular social networks, and we support the posting of videos, photos, and blogs.”

Ticketing is a unique problem on the Internet because demand is only high for a short time. In the past few years, some ticketing companies have had major issues with large-scale events. For example, in 2010, the ticketing site for Comic-Con International experienced crashes. In January 2011, the Burning Man organization, which uses a queuing method (ticket buyers wait in a virtual line), encountered issues when its ticketing vendor’s website could not handle demand after tickets went on sale. “When big events go on sale, ticket sales can hit some of the highest peak bandwidth, even higher than that experienced by Facebook or Google,” says Reichardt. “As many as 300,000 buyers can hit a ticketing website at one time, vying for 80,000 tickets. An event can sell out in a few seconds.”

Flavorus hosts its own on-premises data center in Los Angeles that features the capacity to handle midsize events. It is traditionally a very technology-focused company. “For a company our size, we have very nice servers with cutting-edge firewalls and load balancing,” says Reichardt. “We have had slowdowns in the past, but our servers can handle a pretty large workload. For example, for a large event at the Hollywood Palladium in Los Angeles, we sold 2,000 tickets in two hours—about 20 orders a minute.”

In early January 2011, the company started looking into how it would handle ticket sales for the largest paid music festival in the United States—expected to attract as many as 150,000 attendees per day—to be held in Las Vegas, Nevada, in June 2011. In 2010, Flavorus sold tickets for the same event as a secondary ticketing company, and in 2011, because of a venue change, it would be acting as the primary agent. It knew that its servers couldn’t handle the load. “We had never sold tickets for an event that came close to the demand of this event,” says Reichardt. “We didn’t know if the event was going to sell out in five seconds or 10 hours, but when we looked at the scalability of our hardware, there was just no way we could handle it.”

In addition, the company would be servicing ongoing ticket sales for hundreds of other events at the same time—all of which put demands on its data center for compute time and database querying. Flavorus considered handling the event’s large-scale traffic by bolstering its on-premises solution with greater database capabilities, additional web servers, and higher bandwidth. “From a business perspective, it didn’t make sense to buy hardware that we might not need otherwise,” says Reichardt. “On the flip side, this was our biggest customer, and we had to do it right. We were up against a wall on how to approach scaling for this particular event.”

Flavorus started reviewing options for hosting ticketing sales in the cloud with a comprehensive ticketing solution presented as a set of applications and services accessed over the Internet. The solution would need to be easy to maintain and scale to handle ticketing for individual events that faced a large demand on the day tickets go on sale. Flavorus also had to make sure that any cloud-hosted solution was highly stable and would provide seamless customer service. “The way the Internet is now—with social networking and everything else—if one customer has a bad experience, he has a very strong voice,” says Reichardt. “We needed the system to be stable enough that all of our customers would have a good experience buying tickets and checking into the event.”


Additionally, a primary consideration for Flavorus—and for ticketing companies in general—is to make sure that every ticket sold from a database maps to a physical ticket. The company’s relational database structure has to be flawless. Flavorus needed a solution that would enable high-volume reading and writing to its ticketing database and an application that was optimized for the cloud. It also needed to launch a fully functioning ticketing application within a short time frame to accommodate its marquee customer.

Solution

Flavorus considered hosting its service with a company that offers a cloud solution for websites. However, Flavorus did some basic testing using that model, and its engineers determined that the solution couldn’t handle the scale of traffic required. “The hosting company offers scalable commodity hardware in that they have a server and run virtual machines in the cloud,” says Reichardt. “You can host a traditional website in the cloud, but the application itself doesn’t scale. That’s a problem when you have the amount of traffic we were facing.”

Instead, Flavorus chose to host its ticket application on the Windows Azure platform. Developers can use Windows Azure to host, scale, and manage web applications on the Internet through Microsoft data centers. The platform includes a set of development tools, services, and management systems familiar to Flavorus. The company builds solutions by using the Microsoft .NET Framework, software that provides a comprehensive programming model and set of application programming interfaces for building applications and services. “We’re a .NET shop and I’m a .NET programmer,” says Reichardt. “Microsoft solutions are much more rich and easy to program.”

Sharding the Data

In early February 2011, Flavorus started working closely with product representatives for the Windows Azure platform. At first, the company wrote its ticketing application—which was built by using Microsoft SQL Server 2008 data management software—for a traditional single-instance database in Microsoft ASP.NET, a web application framework for building dynamic websites, web applications, and web services. It evaluated storage options on the Windows Azure platform and found that Microsoft SQL Azure, a cloud-based relational and self-managed database service, was the fastest option for its performance needs.

Flavorus used the .NET Framework to rework the ticket-processing application—which the company calls Jetstream—to run on Windows Azure, the Microsoft cloud services development, hosting, and management platform. The Jetstream application is very simple: it collects the customer’s data and reserves a ticket, while a dynamic webpage calls a database and processes the customer data.

It was easy for Flavorus to port its solution to the Windows Azure platform. The company developed and tested its initial application from February 7 to February 20 and then ran the initial high-volume tests on February 21 and February 22.

The first volume tests on the Windows Azure platform failed. One SQL Azure database did not provide enough computational capacity to process large numbers of tickets and high user traffic. The database automatically restricted connections when the load was exceeded. For its ticketing application, Flavorus needed to scale beyond what a single SQL Azure database could provide.

The company decided to adopt sharding in SQL Azure to take advantage of the massive scale that a SQL Azure cluster provides. Sharding is a horizontal partitioning technique that spreads data across multiple databases. To this end, the company could turn its single-database ticketing service into an application with data that is split across multiple SQL Azure database servers (Figure 1).

Figure 1. To accommodate a high volume of ticket sales in a short period of time, Flavorus assigned ticketing data to multiple SQL Azure databases in a process called sharding. During testing, it discovered that it could sell out the event by selling 150,000 tickets in 10 seconds on 550 databases.

The company was able to scale its on-premises, single-database solution into a sharded solution within two weeks. By doing this, Flavorus gained higher availability by engaging many databases. “By using sharding with SQL Azure, we can have tons of customers on the site at once trying to buy tickets,” says Reichardt. “If any one of the database servers goes down, it doesn’t really matter.”

Flavorus realized that the ticketing application was ideal for sharding because the tickets are all the same. “With sharding, you can put a few tickets on each database if you want,” says Reichardt. “It doesn’t matter which database the ticket is on. You just need to distribute the load. It’s a beautiful thing.”
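Because every ticket in a given pool is interchangeable, the data-dependent routing can be very simple: hash the order to pick a database, and fall through to the next database if that one is sold out. The case study does not publish Jetstream's routing code, so the following is only a minimal Python sketch of the idea. The `ShardedInventory` class, the SHA-1 based hash and the fall-through policy are assumptions, and in-memory counters stand in for the SQL Azure databases (a real system would decrement inventory inside a transaction on each database).

```python
import hashlib
from typing import List, Optional


class ShardedInventory:
    """Hypothetical in-memory stand-in for N ticket databases holding interchangeable tickets."""

    def __init__(self, shard_count: int, tickets_per_shard: int) -> None:
        self.remaining: List[int] = [tickets_per_shard] * shard_count

    def route(self, order_id: str) -> int:
        # Stable hash (unlike Python's per-process randomized hash()) so that every
        # web server would send the same order to the same shard.
        digest = hashlib.sha1(order_id.encode("utf-8")).digest()
        return int.from_bytes(digest[:4], "big") % len(self.remaining)

    def reserve(self, order_id: str) -> Optional[int]:
        """Reserve one ticket; return the shard index used, or None if all shards are sold out."""
        n = len(self.remaining)
        start = self.route(order_id)
        for step in range(n):  # fall through to the next shard if this one is empty
            shard = (start + step) % n
            if self.remaining[shard] > 0:
                self.remaining[shard] -= 1
                return shard
        return None


inventory = ShardedInventory(shard_count=4, tickets_per_shard=2)
sold = [inventory.reserve(f"order-{i}") for i in range(9)]
print(sold)  # eight shard indices, then None
```

Since it does not matter which database a ticket lives on, the hash only has to spread load evenly; it never has to locate a specific ticket.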

From February 22 to February 24, Flavorus ran initial high-volume tests using sharding. The ticketing application was able to handle a large amount of ticket sales but hit a limit because of how Internet Information Services 7.5, the default web server role on the Windows Azure platform, handles traffic. To get around this limitation, the company had to rewrite the data-dependent routing logic.

For debugging purposes, Flavorus needed to access logs to troubleshoot issues with the compute nodes and databases. “I was able to retrieve the logs and track down the line of code that was causing the web servers to have difficulty managing connections to the databases,” says Reichardt.


From March 2 to March 24, Flavorus ran tests using the new logic, gradually increasing the number of sharding instances. By March 25, Reichardt was running volume tests with 7,000 general-admission tickets available in each of the 550 SQL Azure databases. During load testing, he successfully sold 150,000 tickets in 10 seconds. “When we reached that speed, we realized we could fulfill ticketing for any festival in the United States,” says Reichardt. “The Jetstream application literally scaled as I added servers. We were ready to go.”
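The load-test figures are easier to compare when worked through. The short Python calculation below uses only the numbers in the paragraph above. The per-database rates assume the 150,000 sales were spread evenly over all 550 databases, which the case study does not actually state. Sustained for a full minute, the same 15,000 tickets per second gives the 900,000-tickets-per-minute figure quoted later in the case study.

```python
TICKETS_SOLD = 150_000
WINDOW_SECONDS = 10
DATABASES = 550
TICKETS_PER_DATABASE = 7_000

throughput = TICKETS_SOLD / WINDOW_SECONDS          # tickets per second across all shards
loaded_capacity = DATABASES * TICKETS_PER_DATABASE  # tickets stocked for the test
per_database = TICKETS_SOLD / DATABASES             # tickets per database, if evenly spread
per_database_per_second = per_database / WINDOW_SECONDS
per_minute = throughput * 60                        # same rate sustained for 60 seconds

print(f"{throughput:,.0f} tickets/s overall")               # 15,000 tickets/s overall
print(f"{loaded_capacity:,} tickets loaded")                # 3,850,000 tickets loaded
print(f"{per_database:.1f} tickets per database, "
      f"{per_database_per_second:.1f}/s each")              # 272.7 tickets per database, 27.3/s each
print(f"{per_minute:,.0f} tickets per minute at that rate") # 900,000 tickets per minute at that rate
```

Put another way, each database only had to absorb roughly 27 sales per second, a load well within reach of a single database instance.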

A few days before event tickets went on sale, Flavorus learned that the promoters wanted to sell eight ticket types at different prices. To accommodate this request, the company sharded the ticket data into eight groups of servers, with each group serving a specific ticket type. For example, it used 20 databases for the VIP tickets and 400 databases for the most popular tickets—the general-admission, three-day tickets. Flavorus allocated ticket data by using projections based on the previous year’s ticket sales. “Sharding gives us infinite flexibility in terms of sending traffic to different databases,” says Reichardt. “It was an eye-opening experience for us in terms of SQL Azure capabilities.”
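Splitting the databases into one group per ticket type adds just one step to the routing: first select the ticket type's group, then select a database within that group. The minimal Python sketch below is an illustration under stated assumptions: the helper names `build_groups` and `database_for`, the contiguous index ranges and the CRC-32 hash are all hypothetical. Only the two allocations the case study gives (20 VIP databases, 400 general-admission three-day databases) are included; the other six ticket types are left out because their database counts are not published.

```python
import zlib
from typing import Dict


def build_groups(allocation: Dict[str, int]) -> Dict[str, range]:
    """Give each ticket type a contiguous block of database indices, in insertion order."""
    groups: Dict[str, range] = {}
    next_index = 0
    for ticket_type, database_count in allocation.items():
        groups[ticket_type] = range(next_index, next_index + database_count)
        next_index += database_count
    return groups


def database_for(groups: Dict[str, range], ticket_type: str, order_id: str) -> int:
    """Route an order to one database inside its ticket type's group (stable CRC-32 hash)."""
    group = groups[ticket_type]
    return group[zlib.crc32(order_id.encode("utf-8")) % len(group)]


# Only the two counts given in the case study; the other six ticket types are omitted.
groups = build_groups({"VIP": 20, "GA 3-day": 400})
print(groups)  # {'VIP': range(0, 20), 'GA 3-day': range(20, 420)}
print(database_for(groups, "VIP", "order-42"))
```

Reallocating capacity between ticket types, for example based on the previous year's sales, then comes down to editing the allocation table.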

Launching Jetstream in the Cloud

To deploy Jetstream to production on the Windows Azure platform, Reichardt simply ran a command in the Microsoft Visual Studio 2010 Professional development system, and the application, which comprises 1,391 lines of code, launched in the cloud. He then assigned the application and data to the required number of web servers and database servers.

Tickets went on sale for the event on April 23, and Jetstream worked flawlessly. To support the spike in first-day ticket sales, Flavorus used the Windows Azure platform to host 750 web role instances of the Jetstream application and 550 SQL Azure databases. The application wrote chunks of customer data to multiple sharded SQL Azure databases in parallel. Jetstream was live for two days, after which the company returned the event data to its on-premises servers and continued selling tickets. “For an on-sale event, we really need scalability for a small amount of time—about two hours,” says Reichardt. “About half an hour before sales start, traffic ramps way up. Then you get the latecomers who go to the website to find out if tickets are still available.”
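Writing “chunks of customer data to multiple sharded SQL Azure databases in parallel” can be sketched as a scatter step: group the orders by target shard, then issue one batched write per shard concurrently. The case study does not describe how Jetstream did this, so the Python below is a hypothetical illustration only. In-memory lists stand in for the databases, `write_batch` stands in for a batched INSERT, and the CRC-32 shard function and thread-pool size are assumptions.

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def shard_of(order_id: str, shard_count: int) -> int:
    """Stable shard choice for an order (hypothetical CRC-32 routing)."""
    return zlib.crc32(order_id.encode("utf-8")) % shard_count


def write_batch(shard: List[dict], batch: List[dict]) -> int:
    # Stand-in for one batched round trip (e.g., a multi-row INSERT) to one database.
    shard.extend(batch)
    return len(batch)


def write_in_parallel(orders: List[dict], shards: List[List[dict]], max_workers: int = 8) -> int:
    """Group orders by shard, then write each shard's chunk concurrently."""
    batches: Dict[int, List[dict]] = defaultdict(list)
    for order in orders:
        batches[shard_of(order["order_id"], len(shards))].append(order)
    # One task per shard, so no two threads ever touch the same list.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(write_batch, shards[i], batch) for i, batch in batches.items()]
        return sum(f.result() for f in futures)


shards: List[List[dict]] = [[] for _ in range(5)]
orders = [{"order_id": f"order-{i}", "buyer": f"buyer{i}@example.com"} for i in range(100)]
print(write_in_parallel(orders, shards))  # 100
```

Batching per shard keeps the number of round trips equal to the number of shards touched rather than the number of orders, which is usually what matters when each round trip crosses the network.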

The company continues to use its on-premises environment for day-to-day ticketing sales. It offers the Jetstream service only for customers promoting large-scale events. “By using the cloud model, we can isolate the ticket sales of one event,” says Reichardt. “We can run Jetstream on demand and point the ticket-sales traffic to that application. It doesn’t affect any of the other events on our website. Then, we can shut down Jetstream and pull the data back into our system to process it—with no interruption in service.”
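Pulling the event data back from the shards is the reverse step: gather every shard's orders into one set, while enforcing the earlier requirement that every ticket sold maps to exactly one physical ticket. The minimal Python sketch below assumes each order record carries a hypothetical `ticket_id` field. The case study does not say how Flavorus reconciled the data, so treat this as an illustration rather than its actual process.

```python
from itertools import chain
from typing import Iterable, List


def consolidate(shards: Iterable[List[dict]]) -> List[dict]:
    """Merge per-shard orders into one list, refusing any ticket ID sold twice."""
    merged: List[dict] = []
    seen = set()
    for order in chain.from_iterable(shards):
        ticket_id = order["ticket_id"]
        if ticket_id in seen:
            raise ValueError(f"ticket {ticket_id} appears in more than one order")
        seen.add(ticket_id)
        merged.append(order)
    return sorted(merged, key=lambda o: o["ticket_id"])


shard_a = [{"ticket_id": 3, "buyer": "a"}, {"ticket_id": 1, "buyer": "b"}]
shard_b = [{"ticket_id": 2, "buyer": "c"}]
print([o["ticket_id"] for o in consolidate([shard_a, shard_b])])  # [1, 2, 3]
```

Failing loudly on a duplicate, instead of silently keeping one copy, surfaces an oversold ticket before the data reaches the on-premises system.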

Benefits

By designing its Jetstream application to scale for large events by using SQL Azure, Flavorus benefits from greatly reduced costs while exponentially increasing its ability to handle high ticket-sales traffic. The company used Windows Azure technologies to ramp up capacity within a short time frame, easily meeting the deadline for its largest customer. It also benefits from high data reliability. Thanks to cloud capabilities, Flavorus finds itself in a new competitive landscape in which it can host ticket sales for the largest events in the country.

“Part of the beauty of SQL Azure is that you can tinker with its capabilities if you have unique needs—like the way we adopted sharding to scale ticketing on the on-sale day,” says Reichardt. “But for the most part, the Windows Azure platform handles everything for you. It’s a really beautiful model.”

Avoids Costs

With its ticketing application in the cloud, Flavorus can sell tickets for big events without investing in infrastructure. The company avoids purchasing bandwidth and a variety of hardware and software that would need to be replaced in a few years anyway. It also bypasses the costs of administering and maintaining a large on-premises data center. Flavorus estimates that it is saving more than U.S.$100,000 annually in hardware, software, and bandwidth.


When putting Jetstream into operation on the Windows Azure platform, Flavorus pays only for each hour of use. The cost of running Jetstream on Windows Azure for two days was minimal, and the on-sale ticketing event was profitable. “Using sharding with SQL Azure is so cost-effective, it is mind-blowing,” says Reichardt. “Plus, we can turn Jetstream off when we’re not using it—and there’s no cost to us.”

Increases Scalability

Thanks to the sharding capabilities of SQL Azure, Flavorus has gone from knowing its servers would crash during high-volume on-sale events to being able to sell 900,000 tickets in a minute. The company has more scalability than it needs. “Sharding with SQL Azure turned out to be the golden ticket for us,” says Reichardt. “It’s almost infinitely scalable. You could continue to ramp it up with more databases and get faster and faster times. It is like night and day compared to what we had done before.”

Speeds Time-to-Market

When Flavorus first started investigating options for large-scale ticketing in January 2011, it went to its primary bandwidth provider to inquire about increasing bandwidth within a three-month time frame. As of June 2011, the company was still waiting for its bandwidth to be increased. If it had had to procure and set up hardware and software, it would have taken many months to prepare an on-premises solution.

In the meantime, Flavorus developed, tested, and deployed its ticketing application in the cloud within a few months. “From start to finish, developing Jetstream to work on the Windows Azure platform was an amazingly fast process,” says Reichardt. “We wrote the application in a few days, and within a few months of testing and bug-fixing, we had the functionality to sell 150,000 tickets in 10 seconds. We’re extremely happy we went with SQL Azure because we met our deadline.”

Improves Data Stability

Flavorus relies on the redundancy and data stability that’s available with SQL Azure because the customer data in cloud-based ticket sales is processed after the on-sale event takes place. “The ability of SQL Azure to ensure data recoverability was essential for the Jetstream application,” says Reichardt. “That’s because we weren’t processing credit cards or performing other service calls in real time. The way that SQL Azure is architected, you just can’t lose data. That’s the sort of thing that makes you sleep well at night.”

Enhances Competitiveness

Promoters are particularly concerned about ticketing capabilities because the ticket-buying experience is the first exposure that customers have to an event. With the ability to scale ticket sales in the cloud, Flavorus gains a competitive advantage in the market because it can now cater to events of any size. The company can offer its customers a reliable and highly scalable ticketing service that has been proven to work. “Adopting SQL Azure for our Jetstream ticketing application is a game-changer for Flavorus,” says Reichardt. “It means our salespeople can start knocking on some big doors. From a business standpoint, it is almost unbelievable. It is a windfall.”

Windows Azure platform

The Windows Azure platform provides developers the functionality to build applications that span from consumer to enterprise scenarios. The key components of the Windows Azure platform are:

Windows Azure. Windows Azure is the development, service hosting, and service management environment for the Windows Azure platform. It provides developers with on-demand compute, storage, bandwidth, content delivery, middleware, and marketplace capabilities to build, host, and scale web applications through Microsoft data centers.

Microsoft SQL Azure. Microsoft SQL Azure is a self-managed, multitenant relational cloud database service built on Microsoft SQL Server technologies. It provides built-in high availability, fault tolerance, and scale-out database capabilities, as well as cloud-based data synchronization and reporting, to build custom enterprise and web applications and extend the reach of data assets.

To learn more, visit:

www.windowsazure.com

www.sqlazure.com

Jon Brodkin (@JBrodkin) reported Microsoft eats its own tasty cloud dog food in a 9/7/2011 post to Ars Technica:

It was more than 20 years ago when Microsoft executive Paul Maritz coined the phrase “eating your own dog food,” beginning a long tradition of Microsoft proving its products are good enough for the world by using them in Redmond.

“We are dogfooding this product” has become a common, if unappetizing phrase muttered every day by executives and marketing types from nearly every IT vendor on the planet. But nowadays Microsoft itself rarely needs to convince customers that it actually uses its major cash cows, Windows and Office—after all, no one really expects Microsoft employees to run Google Apps on a Mac or Linux box.

But Microsoft still has to prove that Windows Azure, its new cloud service for deploying and hosting Web applications, is reliable, safe, and ready for enterprises. That’s where dogfooding comes in.

The Windows Azure team blogged this week about how Microsoft uses Windows Azure throughout numerous parts of the business. Of course, the majority of Microsoft’s IT infrastructure isn’t running in any cloud, and the Azure cloud’s data centers are operated by Microsoft in the first place. But the examples do illustrate some ways in which Azure may benefit customers who wish to ease the burdens on their own data centers.

Parts of Microsoft.com, particularly its social aspects, are powered by Azure, and have been for 15 months.

“Windows Azure and Microsoft SQL Azure currently power the social features of Showcase, Cloud Power, and 50 other Microsoft.com properties through a multi tenant web service known as the Social eXperience Platform (SXP),” Microsoft said. Bing Games and Bing Twitter search are floating in the Azure cloud, although the core Bing search engine is apparently still anchored to the ground.

Windows Azure became generally available in February 2010. As of its first anniversary, Microsoft said Azure had signed up 31,000 subscribers and was hosting 5,000 applications, while Google App Engine and Salesforce’s Force.com were already in the 100,000 range on both counts. While numbers for Amazon’s Elastic Compute Cloud are harder to come by, Amazon says its broader "Web Services" business has hundreds of thousands of customers in 190 countries. EC2 is considered the dominant player in cloud infrastructure, but while Amazon provides pure infrastructure-as-a-service, in which customers are responsible for maintaining their own virtual machines and operating systems, Windows Azure’s platform-as-a-service model abstracts away much of the complexity, letting developers focus on writing code.

Just building an application doesn’t prove hosting it in Microsoft’s cloud will be reliable and secure, though. So Microsoft provided several examples of how its own employees used Azure to deploy business-critical and customer-facing applications.

One internal application—that is, one used only by Microsoft employees—called Business Case Web (BCWeb) was moved from its previous location to Windows Azure. The application, which helps Microsoft salespeople handle price-change requests, was created in 2007 and is used by 2,500 employees.

BCWeb was a good candidate for Azure because it was developed with .NET, hosted on Internet Information Services (IIS) servers, and uses SQL Server databases.

While the application now runs in the Azure cloud, it still connects to the primary Microsoft network to preserve data security. “Due to the nature of the information that employees access and use in BCWeb, the application was integrated with several on-premises components hosted on the Microsoft corporate network (corpnet),” Microsoft writes in a case study. “These components primarily provide access to external data that BCWeb functionality requires. Microsoft considers the information that BCWeb accesses and contains to be important business data, so Microsoft IT had to ensure that it was secured accordingly.”

In another example, Microsoft’s IT folks moved two customer-facing software tools for volume licensing customers to Azure in about six weeks. The tools let customers and service providers create customized documents detailing existing or potential license purchases. Microsoft chose these tools as an early test case of Azure’s migration capabilities because they have a simple configuration, small databases and data that is somewhat less critical than nuclear launch codes.

Some of the code had to be altered to function on Azure and some features present in SQL Server were not present in SQL Azure, Microsoft said. But the project was able to reroute Microsoft.com users to Azure, and should result in cost and time savings in the long run.

Because Azure is based on virtual computing resources that can be scaled up or down on demand, Microsoft has argued that it is both more reliable and more flexible than many customers’ internal data centers. Still, Azure and Microsoft’s Office 365 cloud services have suffered occasional downtime, just as Amazon EC2 and other cloud platforms have.

Microsoft boasts that Azure’s customer base includes NASA, Xerox, T-Mobile, Lockheed Martin and many others. If it’s good enough for them, Microsoft hopes it's good enough for you.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Kunal Chowdhury (@kunal2383) posted Telerik RadChartControl for LightSwitch Application - Day 2 - Integrating Chart Control on 9/8/2011:

Yesterday, in the blog post "Telerik RadChartControl for LightSwitch Application - Day 1 - Setup the Screen", we discussed the prerequisites, setting up the project, and creating the table and screens for the LightSwitch application. We created a simple data grid screen from some data in the table.

In this post, we will discuss integrating the Telerik Chart control into a LightSwitch application. This will not only help you with Telerik control integration but will also help you add your own UserControls to the screen. Don't forget to take part in the giveaway that Telerik is running for my blog readers.

Giveaway: Telerik RadControls for Silverlight

At the end of this article series, there will be a giveaway of Telerik RadControls for Silverlight, for which Telerik will provide a license of their package just for my blog readers.

To take part in the giveaway, complete the following steps:

- Register to my blog with your valid email ID (we will keep it secret). Don't forget to activate the link that we will send to your registered email ID.

- Follow me on Twitter: @kunal2383

- Become a fan of my Facebook page

Stay tuned for my giveaway post to win the license. Who knows, you could be one of the winners.

Prerequisite

This post doesn't have big prerequisites. If you read the previous article and set up your environment correctly, you're all set. If you came to this post directly, please go back and read yesterday's post "Telerik RadChartControl for LightSwitch Application - Day 1 - Setup the Screen", where the prerequisites were documented in detail. In that post we also discussed setting up the project, table and screen.

I hope you have already downloaded the Telerik RadControls for Silverlight (trial) from the Telerik site. Install it on your system; we need this library now.

Adding Telerik Assembly References

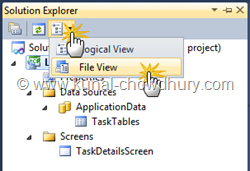

Now we need to add the required Telerik RadControls assemblies to our project. First, go to Solution Explorer, click the small view icon and select File View. The default view is the logical one, where you see the Screens and Tables. Once you change it to File View, you will see the various projects in the solution as shown below:

As shown above, click the "Show All Files" icon to show the hidden projects under the main project. Once this step is done, add the following three assembly references to the projects called "Client" and "ClientGenerated":

- Telerik.Windows.Controls.dll

- Telerik.Windows.Controls.Charting.dll

- Telerik.Windows.Data.dll

Our application requires the above DLLs to integrate the Telerik Chart controls that we want to demonstrate here.

Adding [a] Chart Control

It's time to add the chart control to the LightSwitch UI. To do this, we will take a different approach. Instead of adding the control directly, we will create a UserControl in the Client project and add the chart inside that UserControl. To start, create a folder named "UserControls" in the Client project and add a UserControl to it.

Now we will add the telerik xmlns namespace to the UserControl XAML (we will name the UserControl "TaskDashboardChart" for reference) and modify the XAML to add the chart inside LayoutRoot. Here is the modified code:

```xml
<UserControl x:Class="LightSwitchApplication.UserControls.TaskDashboardChart"
    xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
    xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
    xmlns:d="http://schemas.microsoft.com/expression/blend/2008"
    xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"
    xmlns:telerik="http://schemas.telerik.com/2008/xaml/presentation"
    mc:Ignorable="d" Width="300" Height="250">
    <Grid x:Name="LayoutRoot" Background="White">
        <telerik:RadChart>
            <telerik:RadChart.SeriesMappings>
                <!-- One line series bound to the screen's TaskTables collection -->
                <telerik:SeriesMapping LegendLabel="Task Dashboard"
                                       ItemsSource="{Binding Screen.TaskTables}">
                    <telerik:SeriesMapping.SeriesDefinition>
                        <telerik:LineSeriesDefinition ShowItemLabels="True"
                                                      ShowPointMarks="False"
                                                      ShowItemToolTips="True" />
                    </telerik:SeriesMapping.SeriesDefinition>
                    <telerik:SeriesMapping.ItemMappings>
                        <!-- Y axis: the Completed column; X axis: the row Id -->
                        <telerik:ItemMapping DataPointMember="YValue" FieldName="Completed" />
                        <telerik:ItemMapping DataPointMember="XValue" FieldName="Id" />
                    </telerik:SeriesMapping.ItemMappings>
                </telerik:SeriesMapping>
            </telerik:RadChart.SeriesMappings>
        </telerik:RadChart>
    </Grid>
</UserControl>
```

Build the project to check for errors, and resolve any issues you find.

Adding [a] UserControl to LightSwitch Screen

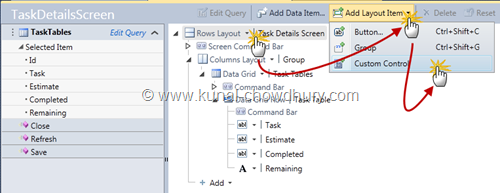

By now, our UserControl is ready with the Chart control. Now we need to add the UserControl to the screen. Open the screen in Design view. As shown below, click "Rows Layout" and, from the "Add Layout Item" dropdown, select the "Custom Control" menu item.

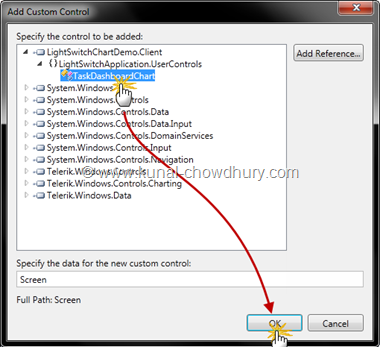

This will open a dialog called "Add Custom Control". Browse to the proper assembly and select the UserControl (in our case, it is TaskDashboardChart). Now click "OK" to continue.

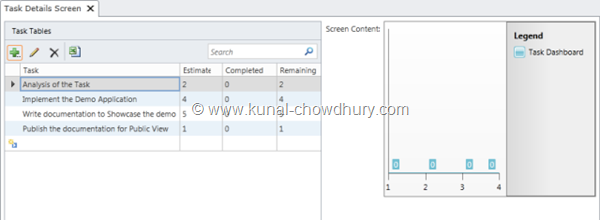

This will add the Control in the screen as shown below:

This completes the Telerik chart integration. Position the control in the UI as needed.

See it in Action

Build the project and run the application. If you followed the steps properly, you will see the screen as shown below:

Initially, all of the chart's data points are zero because we mapped them to the "Completed" column of the table, where every Completed field has a value of zero. Let's modify those values one by one.

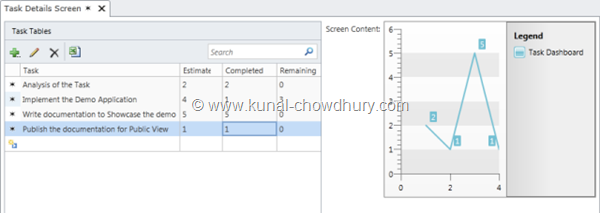

You will now notice that whenever you modify a Completed value, the Remaining field is recalculated automatically based on the algorithm, and the chart control is updated to reflect the new column values.

After some modifications, you will see the graph similar to this:

I hope these two days' posts helped you understand how to integrate a UserControl in a LightSwitch screen, as well as how to integrate Telerik chart controls.

End Note

Tomorrow I will publish the giveaway post, where you will have a chance to win a license of Telerik RadControls for Silverlight. As you may know, Telerik is offering a prize to one of my blog readers who are LightSwitch developers: a FREE developer license for RadControls for Silverlight (worth $799), which you can use in your LightSwitch projects and/or to develop Silverlight applications!

The giveaway will start tomorrow and run for the next five days. The sooner you enter the contest, the better your chance. So come back tomorrow and enter the contest. Till then, happy coding.


<Return to section navigation list>

Windows Azure Infrastructure and DevOps

• Eric Nelson (@ericnel) asked Microsoft Data Centers – more impressive than sliced bread? in a 9/8/2011 post:

Last week it felt like every colleague and customer I bumped into had just come back from visiting our Dublin Datacentre – and every one of them was blown away by the experience.

I on the other hand was not invited, did not attend and generally felt thoroughly left out by the end of the week …grrrrrr

Which is why it is timely that the nice folks at Microsoft Global Foundation Services (GFS) team have produced a video that shows customers how seriously Microsoft takes data center design and why we are a leader in building cloud technology from the ground up.

If only I was invited …


My (@rogerjenn) DevOps: Keep tabs on cloud-based app performance article of 9/8/2011 for SearchCloudComputing.com carries “Application performance anxiety can make enterprises uneasy about public cloud. Monitor your cloud-based apps to overcome any issues” as a deck:

Poor performance of public cloud-based applications leads to end-user frustration. SLAs from PaaS and IaaS providers cover availability, but not overall response time. DevOps teams can monitor cloud computing apps for performance using a few different tools.

Nearly 350 IT pros throughout North America estimated an average annual revenue loss of $985,260 from performance problems with cloud-based applications, reported a Compuware survey. Respondents in the EU estimated a $777,000 loss (in U.S. dollars). Concerns about app performance caused 58% of North American and 57% of EU-based respondents to delay adoption of cloud-based applications.

The report also found that 94% of North American respondents and 84% of EU respondents think cloud application service-level agreements (SLAs) are based on the actual end-user experience, not just service provider availability metrics. This naivety likely won't convince public or private cloud providers to offer potentially costly end-to-end SLAs. Therefore, it is DevOps' job to instrument cloud-based apps with error logging, analytics and diagnostic code.

So what's the best way to monitor the performance of your data-intensive applications? Free or low-cost uptime and response-time reports from Pingdom.com, Mon.itor.us and other site-monitoring providers confirm whether applications meet their SLAs and measure end-to-end site performance.
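Reports like these reduce to two numbers checked against targets: the share of probes that succeeded and a high percentile of response time. Here is a minimal Python sketch, assuming you already collect probe results yourself. ProbeResult, the 99.9% availability target and the 2,000 ms 95th-percentile limit are hypothetical illustrations, not Pingdom or Mon.itor.us APIs or the terms of any provider's SLA.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ProbeResult:
    succeeded: bool               # True if the check got a healthy response
    response_ms: Optional[float]  # round-trip time; None when the probe failed


def availability_percent(probes: List[ProbeResult]) -> float:
    """Share of probes that succeeded, as a percentage."""
    if not probes:
        raise ValueError("no probe results")
    ok = sum(1 for p in probes if p.succeeded)
    return 100.0 * ok / len(probes)


def percentile_ms(probes: List[ProbeResult], pct: float) -> Optional[float]:
    """Nearest-rank percentile of response times for successful probes."""
    times = sorted(p.response_ms for p in probes
                   if p.succeeded and p.response_ms is not None)
    if not times:
        return None
    rank = max(1, math.ceil(pct * len(times) / 100))
    return times[rank - 1]


def meets_targets(probes: List[ProbeResult],
                  min_availability: float = 99.9,
                  p95_limit_ms: float = 2000.0) -> bool:
    """True only if both the availability and the response-time targets are met."""
    p95 = percentile_ms(probes, 95)
    return (availability_percent(probes) >= min_availability
            and p95 is not None and p95 <= p95_limit_ms)
```

Checking a percentile rather than an average keeps a few very slow responses from hiding behind many fast ones.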

Firms like LoadStorm and Soasta sell customized cloud application load testing. Compuware's CloudSleuth site provides a free monthly Global Performance Ranking of end-to-end response times of major public Infrastructure as a Service (IaaS) and Platform as a Service (PaaS) providers.

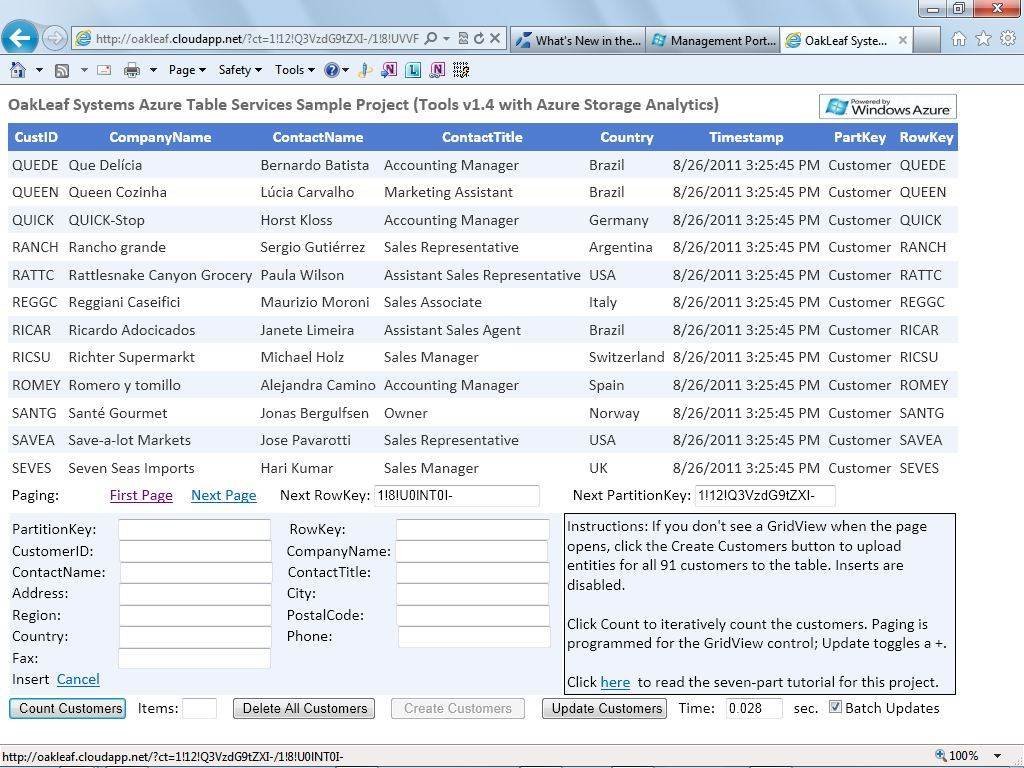

Figure 1 shows a Windows Azure sample project that demonstrates paging as well as create, read, update and delete (CRUD) operations on an Azure table. Attempting this for OakLeaf's Azure Table Services Sample Project would result in negative values for code execution.

FIGURE 1. Windows Azure sample project showing paging and CRUD operations.

The Time text box at the bottom of the window indicates the last action's code execution time, which was 28 ms for a new page. By clearing the Batch Updates check box, you'll see a dramatic increase in execution time for individual CRUD operations on the 91 customer records.
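The sample's batching code isn't shown here, but the speed-up rests on Azure Table entity group transactions, which send many changes in one request. A batch can hold at most 100 entities, and all of them must share one PartitionKey. This minimal Python sketch shows only that grouping rule; modeling entities as plain dicts is an assumption, and the batch payload size limit is not checked.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

MAX_BATCH = 100  # entity group transaction limit on entities per batch


def batches_by_partition(entities: Iterable[Dict],
                         max_batch: int = MAX_BATCH
                         ) -> Iterator[Tuple[str, List[Dict]]]:
    """Yield (partition_key, entities) chunks that are valid as single batches."""
    groups: Dict[str, List[Dict]] = defaultdict(list)
    for entity in entities:
        groups[entity["PartitionKey"]].append(entity)
    for partition_key, items in groups.items():
        # Split each partition into chunks of at most max_batch entities.
        for i in range(0, len(items), max_batch):
            yield partition_key, items[i:i + max_batch]
```

With 91 customer records in a single partition, this yields one batch instead of 91 separate requests, which is consistent with the difference the check box exposes.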

Relying on reports isn't enough; organizations also must acquire or develop on-premises diagnostic management tools to download and analyze performance logs, as well as to deliver alarms and graphical reports. IaaS providers like Amazon Web Services (AWS) concentrate on building their hardware with native metrics; PaaS products like Windows Azure and SQL Azure provide deeper, more customized insight into the application and its code.

How AWS CloudWatch keeps an eye on performance

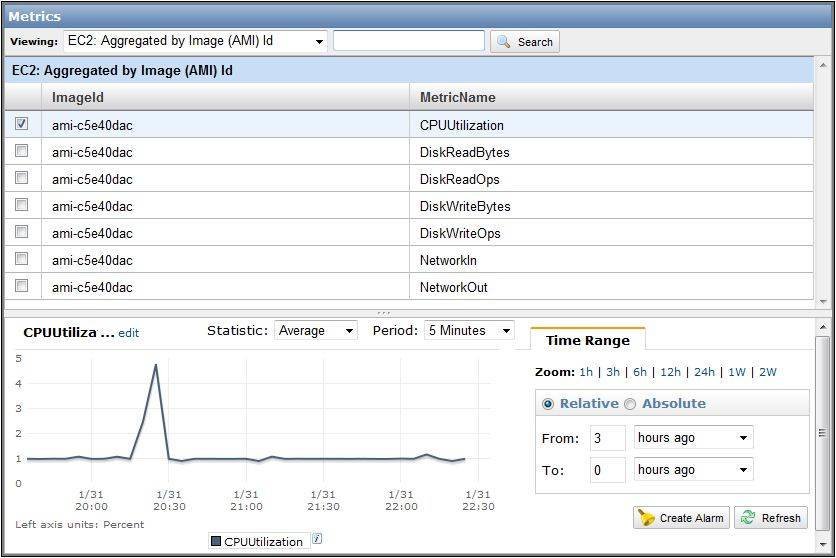

Amazon CloudWatch allows DevOps teams to automatically monitor CPU, data transfer, disk activity, latency and request counts for their Amazon Elastic Compute Cloud (EC2) instances. Basic metrics for EC2 instances, EBS volumes, SQS queues, SNS topics, Elastic Load Balancers and Amazon RDS database instances occur at five-minute intervals for no additional cost. You can add standard metrics and alarms in the AWS Management Console's CloudWatch tab and view graphs of metrics by navigating to the Metric page in the Navigation Pane (Figure 2).
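CloudWatch performs this aggregation itself. The sketch below only illustrates what a five-minute Average datapoint summarizes: raw samples rounded down to the start of their period and averaged. It is a minimal Python illustration that assumes naive UTC timestamps, and it is not the CloudWatch API.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, Iterable, Tuple

PERIOD = timedelta(minutes=5)     # basic-metric granularity described above
_EPOCH = datetime(1970, 1, 1)


def period_start(ts: datetime, period: timedelta = PERIOD) -> datetime:
    """Round a naive UTC timestamp down to the start of its period."""
    return _EPOCH + ((ts - _EPOCH) // period) * period


def average_by_period(samples: Iterable[Tuple[datetime, float]],
                      period: timedelta = PERIOD) -> Dict[datetime, float]:
    """Collapse raw (timestamp, value) samples into one average per period."""
    totals = defaultdict(lambda: [0.0, 0])  # period start -> [sum, count]
    for ts, value in samples:
        bucket = totals[period_start(ts, period)]
        bucket[0] += value
        bucket[1] += 1
    return {start: s / n for start, (s, n) in sorted(totals.items())}
```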

FIGURE 2. A graph for an EC2 instance's CPU utilization metric, aggregated by EC2 Image ID. This metric and the remaining six make up the seven Basic Metrics for EC2 images.

The DevOps team can use AWS's Auto Scaling feature to provide elastic availability by adding or deleting Amazon EC2 instances dynamically based on an app's CloudWatch metrics. AWS added new notification, recurrence and other Auto Scaling features in July 2011.
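Auto Scaling is configured as policies that CloudWatch alarms trigger, not as code you write. The sketch below only illustrates the kind of rule such a configuration expresses: scale out or in by one instance when a metric stays past a threshold for consecutive periods, within fixed bounds. The thresholds, the two-period breach window and the one-instance step are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ScalingPolicy:
    scale_out_above: float = 70.0  # average CPU % that triggers adding an instance
    scale_in_below: float = 30.0   # average CPU % that triggers removing one
    min_instances: int = 1
    max_instances: int = 10
    breach_periods: int = 2        # consecutive periods that must breach


def desired_capacity(current: int, recent_averages: Sequence[float],
                     policy: ScalingPolicy) -> int:
    """Return the instance count the rule asks for, given per-period averages."""
    window = list(recent_averages[-policy.breach_periods:])
    if len(window) < policy.breach_periods:
        return current  # not enough data yet; hold steady
    if all(v > policy.scale_out_above for v in window):
        return min(current + 1, policy.max_instances)
    if all(v < policy.scale_in_below for v in window):
        return max(current - 1, policy.min_instances)
    return current
```

Requiring several consecutive breaching periods keeps a single noisy datapoint from adding or removing instances.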

CloudWatch doesn't provide built-in application monitoring metrics because AWS is an IaaS offering that's OS- and development-platform agnostic. However, developers can program applications to submit API requests in response to app events, such as handled or unhandled errors, function or module execution time, and other app-related metrics.
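Here is a minimal Python sketch of that approach: time an application function, then build a custom-metric request. The dictionary follows the keyword arguments of the AWS SDK for Python's CloudWatch put_metric_data call (Namespace and MetricData), which postdates this article. The namespace, metric name and instance ID below are hypothetical, and the sketch builds the request without sending it.

```python
import time
from datetime import datetime, timezone
from typing import Any, Callable, Dict, Tuple


def timed(func: Callable[..., Any], *args: Any, **kwargs: Any) -> Tuple[Any, float]:
    """Run func and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return result, elapsed_ms


def build_metric_request(namespace: str, metric_name: str,
                         value_ms: float, instance_id: str) -> Dict[str, Any]:
    """Keyword arguments for a single-datapoint custom metric in milliseconds."""
    return {
        "Namespace": namespace,
        "MetricData": [{
            "MetricName": metric_name,
            "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
            "Timestamp": datetime.now(timezone.utc),
            "Value": value_ms,
            "Unit": "Milliseconds",
        }],
    }
```

To send the datapoint with boto3 installed and credentials configured, the request would be passed as `boto3.client("cloudwatch").put_metric_data(**request)`.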

Windows Azure performance logging and analytics

The Windows Azure team has been adding logging, analytic and diagnostic features to the platform steadily since its PaaS service became available in January 2010. Because Windows Azure Portal's interface doesn't support adding metrics, analytics or alarms, DevOps teams must write code and edit configuration files to enable diagnostics and logging for Windows Azure compute and storage services. Table 1 shows logs that were available for analysis by DevOps with Windows Azure's Diagnostic API in June 2010.

| Data source | Details | Stored in |
|---|---|---|
| Windows Azure logs | Requires that a trace listener be added to web.config or application.config: <system.diagnostics>. The ScheduledTransferPeriod is set to 1 minute. | WADLogsTable (table) |
| Windows event logs | Events from application and system event logs. The ScheduledTransferPeriod is set to 1 minute. | WADWindowsEventLogsTable (table) |
| IIS 7.0 logs | The ScheduledTransferPeriod is set to 10 minutes. | wad-iis-logfiles (blob container) |
| IIS 7 Failed Request logs | Enable tracing for all failed requests with status codes 400–599 under the system.webServer section of the role's web.config file. The ScheduledTransferPeriod is set to 10 minutes. | wad-iis-failedreqlogfiles (blob container) |
| Performance counters | Enable logging for performance counters. Set the SampleRate and ScheduledTransferPeriod to 5 minutes. | WADPerformanceCountersTable (table) |

TABLE 1. Windows Azure diagnostic data collection logs with the names of Azure tables and binary large objects (blobs) that store the log data enabled by Windows Azure SDK v1.2.

SDK v1.4 and Visual Studio Tools for Azure v1.4 added the capability to profile a Windows Azure app with Visual Studio 2010 Premium or Ultimate when it runs in Windows Azure's production fabric. An early August 2011 update to tools and a new Windows Azure Storage Analytics feature enabled logs that trace executed requests for storage accounts as well as metrics that provide a summary of capacity and request statistics for binary large objects (blobs), tables and queues.
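The diagnostics tables listed in Table 1 are ordinary Azure tables, so reading them efficiently means filtering on PartitionKey rather than scanning. This sketch relies on a commonly reported convention, which is an assumption here; check it against your own WADLogsTable before relying on it. Under that convention the key is "0" followed by the .NET DateTime.Ticks value of the event time, rounded down to the minute. This minimal Python sketch computes such keys so that a time range becomes a PartitionKey range filter.

```python
from datetime import datetime

_DOTNET_EPOCH = datetime(1, 1, 1)  # .NET DateTime.MinValue


def dotnet_ticks(dt: datetime) -> int:
    """100-nanosecond intervals since 0001-01-01, like DateTime.Ticks (naive UTC input)."""
    delta = dt - _DOTNET_EPOCH
    return (delta.days * 86_400 + delta.seconds) * 10_000_000 + delta.microseconds * 10


def wad_partition_key(dt: datetime) -> str:
    """Assumed WAD key format: '0' + ticks of the UTC minute containing dt."""
    return "0" + str(dotnet_ticks(dt.replace(second=0, microsecond=0)))


def wad_time_filter(start: datetime, end: datetime) -> str:
    """OData $filter selecting partitions from start (inclusive) to end (exclusive)."""
    return (f"PartitionKey ge '{wad_partition_key(start)}' "
            f"and PartitionKey lt '{wad_partition_key(end)}'")
```

Because keys for present-day dates all have the same length, string comparison orders them chronologically, which is what makes the ge/lt range filter work.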

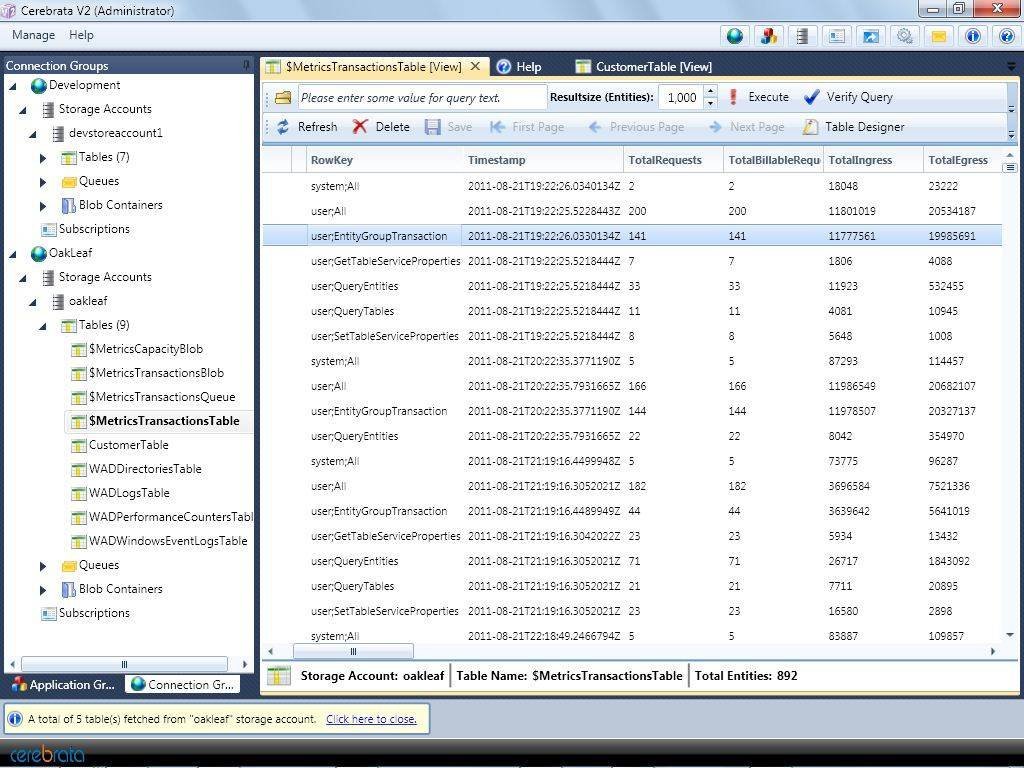

An updated sample project of Aug. 22 generates analytics tables for both table and blob storage, as well as internal timing data based on TraceWriter items, but requires a separate app to read, display and manage diagnostics. Cerebrata's Azure Diagnostics Manager reads and displays log and diagnostics data in tabular or graphic format. The firm's free Windows Azure Storage Configuration Utility lets IT operations teams turn on storage analytics without writing code. Cerebrata's Cloud Storage Studio adds table and blob management capabilities (see Figure 3).

FIGURE 3. A beta version of Cerebrata's forthcoming Cloud Storage Studio v2.

The System Center Monitoring Pack for Windows Azure Applications provides capabilities similar to Cerebrata products for System Center Operations Manager (SCOM) 2007 and 2010 beta users. Autoscaling Windows Azure for elastic availability currently requires a third-party solution, such as Paraleap's AzureWatch, hand-crafted .NET functions or the forthcoming Windows Azure Integration Pack for Enterprise Library. Monitoring SQL Azure databases requires you to use SQL Azure's three categories of dynamic management views: database-related views, execution-related views and transaction-related views.


Full disclosure: I’m a paid contributor to SearchCloudComputing.com.

My (@rogerjenn) Links to Online Resources for my “DevOps: Keep tabs on cloud-based app performance” Article of 9/8/2011 post of 9/8/2011:

My DevOps: Keep tabs on cloud-based app performance article of 9/8/2011 for SearchCloudComputing.com has internal links to many (but not all) of the following online resources for monitoring cloud-based application performance:

Full disclosure: I’m a paid contributor to SearchCloudComputing.com.

Simon May asked Why monitor Windows Azure with System Center? in a 9/8/2011 post to his TechNet blog:

One of the questions I often get when talking about Windows Azure with folks who normally have a dev head on is why you’d want to monitor a Windows Azure application with System Center, rather than just build a bespoke monitoring solution, since you’re building an application already. The answer is actually a very obvious one: to integrate with existing systems. Anyone with an ounce of IT operational background or ITIL training will tell you that you rightly need to centralise monitoring and ultimately to simplify it. The first obvious reason why you’d do that is to save money, but there’s a second reason:

That amazing application probably isn’t running in isolation.

You’ll find that it requires other components like network connectivity (yes even in the cloud) to be in place and available – particularly if you’re running in a hybrid environment. A holistic management solution, such as System Center will help you to achieve an overall view of what’s going on, of all the dependencies on various components of the system.
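The dependency point can be made concrete: an application is only as healthy as everything it transitively depends on. Here is a minimal Python sketch of that rollup. The component names and the dependency map are hypothetical, and a product such as System Center models this in its own way rather than with code like this.

```python
from typing import Dict, List, Set


def unhealthy_components(component: str,
                         depends_on: Dict[str, List[str]],
                         healthy: Dict[str, bool]) -> List[str]:
    """Return the sorted list of unhealthy components in component's dependency tree.

    Components missing from `healthy` are treated as unhealthy (unknown state).
    """
    seen: Set[str] = set()
    bad: Set[str] = set()
    stack = [component]
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # already checked; also guards against dependency cycles
        seen.add(current)
        if not healthy.get(current, False):
            bad.add(current)
        stack.extend(depends_on.get(current, []))
    return sorted(bad)
```

An empty result means the application and everything beneath it are reported healthy. Anything else names the failing piece, which may well be outside the application itself, such as connectivity to the on-premises network in a hybrid deployment.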

Interestingly, we’ve just moved such a system to Windows Azure, along with the required monitoring, and documented the whole process in a case study of moving a business-critical application to Windows Azure. The article is well worth a read for anyone thinking about moving an application to the cloud, but the following stood out for me as benefits:

- Accurate and timely monitoring and alerting for [application] critical components

- A large number of reusable monitoring components that can be leveraged in future Windows Azure applications

Accuracy and timeliness are so important in IT Operations it’s untrue, but that second benefit around reusability is just as important. Not only will you have developed a reusable system for monitoring Windows Azure applications, but you’ll also have reusable skills.

How do you get started? Well, you could just download this little package of two ready-made evaluation VHDs and play with a prebuilt Windows Azure application. I have, and it really doesn’t take long to get to grips with.

Simon is an IT Pro Evangelist for Microsoft UK.

Michael Stiefel posted Why Did My Azure Application Crash? Using the Windows Azure Diagnostics API to Find Code Problems to InformIT on 8/8/2011:

Michael Stiefel shows a variety of ways to use the Windows Azure Diagnostics API, particularly the new Diagnostic Monitor, to find and fix problems in your Azure code.

Your Azure application isn't working. What should you do now? Better question: What should you have done before now?

You can't put your application in the debugger and run it in the cloud. Your event logs, traces, and performance counters are all stored in the filesystem of the virtual machine. Since you can't assume that your role instances will run on the same virtual machine all the time, the information you need may not be there when you need it.

Your application runs behind a round-robin load balancer, so all the information you need might not be in one virtual machine anyway. If you use queuing, you've introduced a level of indeterminism in your application. In general, distributed applications are more complex because multiple applications or services have to be analyzed together.
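Once each instance's diagnostics have been transferred to storage, reconstructing what happened usually means interleaving several per-instance logs into one timeline. Here is a minimal Python sketch, assuming each instance's entries are already time-ordered tuples. The instance names are hypothetical, and because clocks on different virtual machines can drift, the merged order is only approximate.

```python
import heapq
from datetime import datetime
from typing import Iterable, List, Tuple

# (UTC timestamp, role instance id, message); each instance's list is time-ordered.
LogEntry = Tuple[datetime, str, str]


def merge_instance_logs(*per_instance: Iterable[LogEntry]) -> List[LogEntry]:
    """Interleave already-sorted per-instance logs into a single timeline."""
    return list(heapq.merge(*per_instance, key=lambda entry: entry[0]))
```

heapq.merge streams its inputs rather than re-sorting everything, which matters when each instance has produced a large log.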

In this article, I'll explain how the Windows Azure Diagnostics classes can help you to figure out why your Azure application isn't working correctly. If you want to follow along, I'll assume that you have some basic expertise:

- You have a basic understanding of Windows Azure.

- You understand roles and role instances, as well as Azure Storage (blobs and tables).

- You know how to build a simple Windows Azure application.

- You have a basic understanding of the standard .NET diagnostic classes.

NOTE

Click here to download a zip file containing the source files for this article.

Application Monitoring

To understand what went wrong in an application, you have to monitor and record the state of the application. In general, you want to record four categories of information about your application:

- Programmatic: Exceptions, values of key variables; in general, any information needed to debug the application.

- Business process: Auditing needed for security, change tracking, compliance.

- System stability: Performance, scalability, throughput, latencies.

- Validation of business assumptions: Is the application being used the way you thought it would?
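Tagging every record with one of these four categories lets you route and query them separately later. Here is a minimal, language-neutral illustration in Python, assuming JSON-formatted trace messages. LogCategory and make_record are hypothetical names, not part of the Windows Azure Diagnostics API, which the article goes on to use from .NET.

```python
import json
from datetime import datetime, timezone
from enum import Enum
from typing import Any


class LogCategory(Enum):
    PROGRAMMATIC = "programmatic"                # exceptions, key variable values
    BUSINESS_PROCESS = "business_process"        # auditing, change tracking
    SYSTEM_STABILITY = "system_stability"        # performance, latency, throughput
    BUSINESS_VALIDATION = "business_validation"  # is the app used as expected?


def make_record(category: LogCategory, message: str, **fields: Any) -> str:
    """Serialize one log record as JSON with a UTC timestamp and its category."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "category": category.value,
        "message": message,
    }
    record.update(fields)  # extra context, e.g. latency_ms=42
    return json.dumps(record, sort_keys=True)
```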

NOTE

The focus here is on a production cloud application. Remote desktop is of limited use. I won't talk about IntelliTrace; when IntelliTrace is enabled, Azure role instances don't automatically restart after a failure. Performance also degrades.

Read more.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

• The System Center Virtual Machine Manager team sent the following message on 9/8/2011:

Release Candidate Available

We are very excited to announce the Release Candidate of System Center Virtual Machine Manager 2012 (VMM) and the corresponding Evaluation VHD. This is a great step forward in enabling our customers to create Private Cloud Solutions, and will allow you to begin creating your Private Cloud environments. Virtual Machine Manager delivers industry-leading fabric, virtual machine and services management in private cloud environments. We have made many improvements since the Beta of VMM, and our Product Team has summed up the improvements on the VMM Team Blog. Go ahead and download this release, give it a try, and provide your feedback.

<Return to section navigation list>

Cloud Security and Governance

Joseph Granneman posted Cloud risk assessment and ISO 27000 standards to the SearchCloudSecurity.com blog on 9/7/2011:

Do you trust an external third party with your sensitive data? This is the primary concern for companies that are joining the gold rush that is cloud computing. Never has there been a more important role for information security professionals to play than advising management on how to gain “trust” when outsourcing their IT infrastructure to cloud providers. Is there a good measuring stick for proving a cloud provider is trustworthy? Many cloud providers tout their SAS 70 Type II certification, but one of the best tools to help with cloud risk assessment is the ISO 27000 series of standards.

SAS 70 comparison

The SAS 70 Type II has long been the standard of choice for evaluating external IT operations. This standard was originally developed by financial auditors in order to evaluate the impact of outsourcing certain business operations to third-party providers. The SAS 70 Type I was designed to evaluate whether controls existed to protect both the confidentiality and operational stability of an external provider. The SAS 70 Type II was developed to evaluate both the existence and the effectiveness of controls in place at an external provider. Although this was preferable to the Type I, which did not test any controls, it was only as effective as the comprehensiveness of the controls being audited. In any SAS 70 audit, the provider can determine which controls will be tested; an unscrupulous cloud services provider could choose to test only controls it knows would pass an auditor’s testing methodology.

The ISO 27000 standards

The ISO 27000 series of standards had a much different origin than the SAS 70 Type II standards. Whereas the SAS 70 has its roots in accounting and financial audits, ISO 27000 started from the ground up as an information security evaluation standard. The SAS 70 was developed and maintained by the American Institute of Certified Public Accountants (AICPA) and has been adapted for use as an information security evaluation criterion. The ISO 27000 standards originated as information security evaluation criteria that were developed by the U.K. government and are now maintained by the International Organization for Standardization. This is a key differentiator when choosing a standard for evaluating cloud services providers.

The ISO 27000 standards define a detailed listing of controls, processes and procedures that must be followed in order to successfully complete an audit. These standards may look familiar, as the predecessors to ISO 27000 were the models for U.S. laws to regulate information security in various industries, including HIPAA and GLBA. This makes the task of creating a crosswalk between these compliance regulations and ISO 27000 relatively simple. This crosswalk reduces the burden on a cloud provider that’s trying to comply with multiple local, national and international compliance mandates. The output of an ISO 27000 audit could even be adapted to generate the documentation necessary for a successful SAS 70 Type II audit.

Although ISO 27000 was originally intended to evaluate internal technology resources, these detailed controls, processes and procedures apply equally well to cloud service providers. A company may not need to possess the information security skillsets internally to receive value from ISO 27000 certification. Management could simply look for the ISO 27000 certification from a prospective vendor and have some assurance that an information security program exists. This makes ISO 27000 one of the best available prepackaged standards for evaluating cloud services.

No substitute for due diligence

However, the ISO 27000 standards are not a substitute for developing a custom due diligence process for evaluating cloud providers in your cloud risk assessment. Companies shouldn’t make the mistake of assuming any certification or audit standard is simply “good enough” to validate prospective cloud solutions. All audits and certification specifications, such as the ISO 27000 series, are general in nature and only provide a snapshot in time of information security program capabilities. There may be data that is more sensitive in nature or business processes that require more specific security precautions. It’s also important to evaluate the current state of the cloud provider’s information security program and whether it’s being maintained at the same level as when certification was obtained.

The ISO 27000 standards are the best prepackaged standards available today for evaluating the security programs of cloud service providers. The information security professional can look for this certification and also utilize it as a foundation to build a custom due diligence assessment. The ISO 27000 series evaluates many important aspects of an information security program, but should be used in conjunction with a custom due diligence process in alignment with a risk assessment of the data or processes being placed in the cloud. There is no one single indicator that can determine whether the company’s data will be secure in the cloud, but the ISO 27000 series is a good start.

Joseph Granneman, CISSP, has over 20 years in information technology and security with experience in both healthcare and financial services.

Full disclosure: I’m a paid contributor to SearchCloudComputing.com, a sister publication to TechTarget’s SearchCloudSecurity.com.

Elaine de Beer (@PatentKat) described the Legal implications of cloud computing from a South African vantage point in a 9/16/2011 post to her Softwarepatensa blog:

Cloud computing, which is generally understood as a model for enabling on-demand network access to an elastic pool of shared computing resources that can be rapidly provisioned and released with minimal service provider interaction, has various advantages and offers exceptional opportunities to a business. This technology is now finding wide implementation in South Africa in so far as it offers dynamic scalability and flexibility at a reduced cost…..

However, the legal implication of this technology is not perhaps as clearly defined as it could be and the following might provide some handy hints and tips on the matter……

In terms of managing the cloud computing infrastructure from a legal point of view, certain best practices have been identified in the industry. These are:

- Find a test case: Test the waters and set up a test case first, thereby minimizing the potential risk to the rest of your system/infrastructure,

- Understand the cloud infrastructure and the risks: Enhance regular auditing and monitoring in your company,

- Ownership of information is key: Ensure that you own your information and understand how your data is going to be handled before the system is implemented,

- Avoid cross-platform proliferation: Focus on one or perhaps two cloud service platforms at a time,

- Understand the role and the lack of standards: Develop standard templates of contractual safeguards, data ownership and use limitations.

Customers such as banks, retailers and telecommunications companies generally have developed standard agreements that protect their position and reduce risk through a comprehensive set of terms and schedules.

Cloud vendors are, however, at the other end of the spectrum, and the difference between the approaches of the service provider and the customer is enormous. For example, in cloud vendor contracts it is normal for warranties to be given by the customer instead of by the service provider, and for the service provider to have the right to suspend your service whenever it chooses to do so…this concept is almost unheard of in traditional outsourcing contracts and would certainly be questionable in view of the provisions of the Consumer Protection Act (CPA)….. The CPA would certainly have a large role to play in these negotiations and should be borne in mind when reviewing the cloud vendor contract……

To bridge this gap, before choosing a service provider, you should first analyze the available cloud service providers within the context of the market as a whole and try to identify the core themes and standard practices of each of these service providers……

It is further advised that you analyze the standard terms in your agreements with these service providers in order to identify any potential red flags beforehand, as well as the key factors you are prepared to discuss, negotiate, and agree on with the service provider… In this way you can filter down to the issues that need to be resolved a lot quicker and focus on those during your negotiations……

Elaine is an electronics engineer and a patent attorney at a large corporate law firm in Johannesburg, South Africa.


Cloud Computing Events

• Jim O’Neil reported an Entity Framework Boot Camp (Oct. 3–7) to be held in Waltham, Mass., in a 9/8/2011 post:

Perhaps you’ve heard of some of the significant new features of Entity Framework 4.1, including “code first” development and the DbContext API, but you just never had the time to dig in? Well, with the Entity Framework Boot Camp, you’ve got a chance to learn from the person who wrote the book on Entity Framework, literally.

Julie Lerman will be conducting a week-long course through our friends at Data Education from October 3 through the 7th, at the Microsoft Technology Center in Waltham. The course does have a fee, but a $400 early-bird discount is being offered until September 16th.

You can get the full syllabus and register on the Data Education site.

Mick Badran announced Azure: Training - Inside Windows Azure, the Cloud Operating System in a 9/8/2011 post:

Hi folks, I’m starting off a series of Azure training sessions for Microsoft via Live Meeting. Yesterday Scotty & I delivered a great presentation with all the main pillars on show.

This session is more about what is inside the Azure ‘Fabric’ and how this space is managed. In the coming sessions we will delve into creating/configuring applications, deployments etc.

For now, here is the fundamental ‘what’s under the hood.’ (The recording will be made available shortly.)

You can download it here: http://bit.ly/qEiqLC

Jeff Barr (@jeffbarr) reported an Amazon Technology Open House - October 4, 2011 at Amazon’s South Lake Union (Seattle, WA) offices in a 9/8/2011 post:

I will be keynoting an Amazon Technology Open House at our South Lake Union offices on October 4, 2011.

You'll have the opportunity to meet and to hang out with the leaders of the various AWS services, learn about recent engineering innovations at Amazon, and to win one of two Kindles that we'll be giving away during the evening.

We'll also have some local beer and pretzels.

The event is free but space is limited and you need to preregister if you would like to attend. See you there!

And be prepared to prove your age.


Other Cloud Computing Platforms and Services

• Chris Hoff (@Beaker) recommended VMware vCloud Architecture ToolKit (vCAT) 2.0 – Get Some! in a 9/8/2011 post:

Here’s a great resource for those of you trying to get your arms around VMware’s vCloud Architecture:

VMware vCloud Architecture ToolKit (vCAT) 2.0

This is a collection of really useful materials, clearly painting a picture of cloud rosiness, but valuable for understanding how to approach the various deployment models and options for VMware’s cloud stack:

- Document Map – Document descriptions, function, and table of contents.

- vCAT Introduction – Considerations when first developing your Cloud strategy.

- Private VMware vCloud Service Definition - Business and functional requirements for Private cloud architectures, complete with sample use cases.

- Public VMware vCloud Service Definition - Business and functional requirements for Public cloud architectures, complete with sample use cases.

- Architecting a VMware vCloud - Design considerations for architecting a VMware vCloud.

- Operating a VMware vCloud - Operational considerations for running a VMware vCloud.

- Consuming a VMware vCloud - Organization and user considerations for building and running vApps within a VMware vCloud

- VMware vCloud Implementation Examples:

  - Hybrid VMware vCloud Use Case

Related articles

- VMware’s vShield – Why It’s Such A Pain In the Security Ecosystem’s *aaS… (rationalsurvivability.com)

- VMware orders vCloud army across five continents (go.theregister.com)

- VMware’s Vision: Connected Global Clouds (datacenterknowledge.com)

- VMware Places a Bet on Enterprise Hybrid Cloud (diversity.net.nz)

Jeff Barr (@jeffbarr) reported Updated Mobile SDKs for AWS - Improved Credential Management in an 8/7/2011 post:

We have updated the AWS SDK for iOS and the AWS SDK for Android to make it easier for you to build applications that need to make calls to AWS using your AWS credentials.

Until now, your AWS credentials would have to be stored on the device. If your credentials are embedded in a mobile application there is no straightforward way to rotate them without updating every installed copy of the application. Alternatively, each installed copy of the application could require entry of an individual set of AWS credentials. This option would add some friction to the installation process.

The SDK now includes support for using temporary security credentials provided by the AWS Security Token Service. The SDK provides two sample applications that demonstrate how to connect to a token vending machine that serves as an interface to the AWS Security Token Service. See the Credential Management in Mobile Applications article for more details.

Applications that make use of the token vending machine can obtain AWS credentials on an as-needed basis. You can use the token vending machines that we supply, or you can implement your own.
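To illustrate what obtaining credentials "on an as-needed basis" could look like on the device, here is a minimal Python sketch (not code from the AWS SDKs) of a credential cache that reuses temporary credentials until shortly before they expire and then asks the token vending machine again. The `fetch` callable, the `TemporaryCredentials` fields, and the 60-second refresh margin are assumptions made for illustration only.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class TemporaryCredentials:
    access_key_id: str
    secret_access_key: str
    session_token: str
    expires_at: float  # Unix time (seconds) at which the credentials expire


class CredentialCache:
    """Hands out cached temporary credentials and refetches them on demand.

    `fetch` is a hypothetical stand-in for a call to a token vending
    machine's gettoken operation; it is not an AWS SDK API.
    """

    def __init__(self, fetch: Callable[[], TemporaryCredentials],
                 refresh_margin: float = 60.0,
                 clock: Callable[[], float] = time.time):
        self._fetch = fetch
        self._margin = refresh_margin  # refresh this many seconds before expiry
        self._clock = clock            # injectable so the logic can be tested
        self._creds: Optional[TemporaryCredentials] = None

    def get(self) -> TemporaryCredentials:
        now = self._clock()
        if self._creds is None or now >= self._creds.expires_at - self._margin:
            # No credentials yet, or they are about to expire: ask again.
            self._creds = self._fetch()
        return self._creds
```

Because nothing long-lived is embedded in the app, rotating the server-side credentials behind the vending machine does not require updating installed copies of the application.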

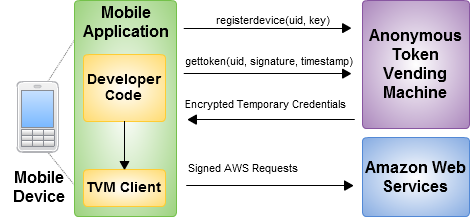

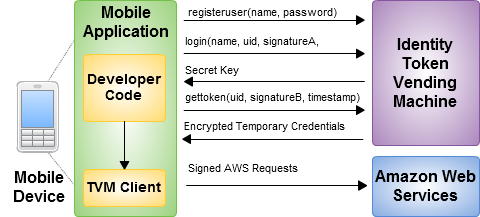

Our token vending machines are distributed as WAR files that can be run with AWS Elastic Beanstalk, preferably using the credentials of an IAM (Identity and Access Management) user. We provide the token vending machine in two versions: Anonymous and Identity.

Anonymous Token Vending Machine

The Anonymous token vending machine is designed to support registration at the device level. It supports two principal functions - registerdevice and gettoken. Here is the basic request and response flow:
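The register-once, sign-every-request pattern behind registerdevice and gettoken can be sketched in Python as below. This is a toy, in-memory model, not AWS's implementation: it assumes the device picks its own UID and secret key, sends them once to registerdevice, and later proves possession of the key by sending an HMAC-SHA256 signature of a timestamp to gettoken. The `issue_credentials` callback is a hypothetical placeholder for the server's call to the Security Token Service, and the 15-minute timestamp window is an arbitrary choice.

```python
import hashlib
import hmac
import time
from typing import Callable, Dict


def sign(key: str, message: str) -> str:
    """HMAC-SHA256 of `message` under `key`, hex-encoded."""
    return hmac.new(key.encode(), message.encode(), hashlib.sha256).hexdigest()


class AnonymousTVM:
    """Toy, in-memory model of a device-level token vending machine."""

    def __init__(self, issue_credentials: Callable[[str], dict],
                 max_skew: float = 900.0, clock: Callable[[], float] = time.time):
        self._keys: Dict[str, str] = {}  # device UID -> shared secret key
        self._issue = issue_credentials  # hypothetical stand-in for an STS call
        self._max_skew = max_skew        # accepted timestamp drift, in seconds
        self._clock = clock

    def registerdevice(self, uid: str, key: str) -> bool:
        # The device sends its UID and key once; never overwrite an existing UID.
        if uid in self._keys:
            return False
        self._keys[uid] = key
        return True

    def gettoken(self, uid: str, timestamp: str, signature: str) -> dict:
        key = self._keys.get(uid)
        if key is None:
            raise PermissionError("unknown device")
        if abs(self._clock() - float(timestamp)) > self._max_skew:
            raise PermissionError("stale or future timestamp")
        # Constant-time comparison of the expected and supplied signatures.
        if not hmac.compare_digest(sign(key, timestamp), signature):
            raise PermissionError("bad signature")
        return self._issue(uid)
```

Signing a fresh timestamp rather than resending the key means a captured gettoken request stops working once it falls outside the time window.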

Identity Token Vending Machine

The Identity token vending machine is designed to support registration and login at the user level. It supports three principal functions: registeruser, login, and gettoken. Here's the basic request and response flow:
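A similar toy sketch for the Identity flow adds a login step: after registration the password itself is not sent again; a key derived from it signs the login request, and the session key that login returns then signs gettoken requests. The PBKDF2 derivation salted with the username, the random session key, and the `issue_credentials` callback are all assumptions for illustration, not details taken from the AWS token vending machine.

```python
import hashlib
import hmac
import secrets
import time
from typing import Callable, Dict


def derive_key(username: str, password: str) -> str:
    """Toy key derivation: PBKDF2-HMAC-SHA256 salted with the username, so
    the device can recompute the same key from what the user types."""
    raw = hashlib.pbkdf2_hmac("sha256", password.encode(), username.encode(), 10_000)
    return raw.hex()


def sign(key: str, message: str) -> str:
    """HMAC-SHA256 of `message` under `key`, hex-encoded."""
    return hmac.new(key.encode(), message.encode(), hashlib.sha256).hexdigest()


class IdentityTVM:
    """Toy, in-memory model of a user-level token vending machine."""

    def __init__(self, issue_credentials: Callable[[str], dict],
                 max_skew: float = 900.0, clock: Callable[[], float] = time.time):
        self._user_keys: Dict[str, str] = {}      # username -> password-derived key
        self._session_keys: Dict[str, str] = {}   # device UID -> session key
        self._session_users: Dict[str, str] = {}  # device UID -> username
        self._issue = issue_credentials  # hypothetical stand-in for an STS call
        self._max_skew = max_skew
        self._clock = clock

    def _check(self, key: str, timestamp: str, signature: str) -> None:
        # Reject requests outside the time window or not signed with `key`.
        if abs(self._clock() - float(timestamp)) > self._max_skew:
            raise PermissionError("stale or future timestamp")
        if not hmac.compare_digest(sign(key, timestamp), signature):
            raise PermissionError("bad signature")

    def registeruser(self, username: str, password: str) -> bool:
        if username in self._user_keys:
            return False
        self._user_keys[username] = derive_key(username, password)
        return True

    def login(self, uid: str, username: str, timestamp: str, signature: str) -> str:
        key = self._user_keys.get(username)
        if key is None:
            raise PermissionError("unknown user")
        self._check(key, timestamp, signature)
        session_key = secrets.token_hex(16)
        self._session_keys[uid] = session_key
        self._session_users[uid] = username
        # Returned in the clear for brevity; a real service would protect it in transit.
        return session_key

    def gettoken(self, uid: str, timestamp: str, signature: str) -> dict:
        key = self._session_keys.get(uid)
        if key is None:
            raise PermissionError("device not logged in")
        self._check(key, timestamp, signature)
        return self._issue(self._session_users[uid])
```

Splitting login from gettoken keeps the password-derived key in occasional use only, while routine token requests rely on a session key the server can discard.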

Learn more by reading our Getting Started Guides for iOS and Android.

If you have used one of our SDKs to build a mobile application, I'd enjoy hearing about it. Please feel free to post a comment or to send me some email (awseditor@amazon.com).

An interesting approach.
