Windows Azure and Cloud Computing Posts for 6/16/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

Eric Nelson (@ericnel) described Backup and Restore in SQL Azure with the upcoming CTP in a 6/16/2011 post to the UK ISV Developer Evangelism Team Blog:

A lot of early adopters have asked about this “missing feature” in SQL Azure. Whilst workarounds exist (such as using BCP and SQL Server Integration Services), folks still wanted the backup and restore capabilities they lean on for SQL Server.

At TechEd in May, David Robinson confirmed that we would be delivering a database restore capability for SQL Azure.

Screenshots from the upcoming CTP:

Step 1: Backup is enabled for the database

Step 2: Drop the database

Step 3: Choose Restore

Step 4: Restoring


Marketplace DataMarket and OData

Glenn Gailey (@ggailey777) described Entity Framework 4.1: Code First and WCF Data Services in a 6/15/2011 post:

Now that Entity Framework 4.1 is released, I decided that it was past time for me to try to implement a data service by using the Code First functionality in Entity Framework 4.1. Code First is designed to be super easy; as already mentioned by ScottGu, it will even autogenerate a database for you, including connection strings…all by convention. Code First in EF 4.1 is, in fact, so different from the previous incarnations of Entity Framework that Scott Hanselman famously dubbed a preview of this release “magic unicorn,” a name which folks ran with.

The unicorn moves Entity Framework way more toward the object end of the ORM world. However, by relying solely on 1:1 “by convention” mapping, Code First does not take advantage of one of the great benefits of the Entity Framework: the powerful conceptual-to-store mapping functionality of the EDMX. IMHO, the real reason that so many people like this new version is that, with its new DbContext and DbSet(Of TEntity) objects, validation, and the extensive use of attributes for any not-by-convention mappings, Code First in EF 4.1 ends up looking very much like LINQ to SQL (still the darling Microsoft ORM of many programmers). There are even new fluent APIs, which can be used to configure a data model at runtime.

Bottom line: If you like how LINQ to SQL works (or nHibernate with its fluent APIs), you will probably also like Code First in Entity Framework 4.1.

However, rather than letting the unicorn do its magical thing, I opted instead to get Code First working with an existing database, Northwind. (Not because I thought Code First was too easy, but because I just didn’t want to have to invent a bunch of new data, and I am very familiar with Northwind, especially my favorite customer 'ALFKI'.) It’s not the most interesting demonstration, but I did get Code First working with some LINQ to SQL-style mapping, of which the self-referencing association on Employees was the most challenging. If you want to see how the Code First data classes look, download the Code First Northwind Data Service project from the code gallery on MSDN.

Now on to the data service…

Rowan Miller posted some early information on how to define a data service provider using a pre-release version of EF 4.1. However, the EF folks made some minor improvements before the final release, so the required customizations for WCF Data Services (the .NET Framework 4 version) are a bit different now that EF 4.1 is officially released. This is what I’m going to demonstrate in the rest of this post. (Note that the upcoming release of WCF Data Services that supports OData v3 natively supports DbContext as an Entity Framework data service provider, so customization of the data service will not be required in the next version.)

Here is what I did to get my EF 4.1 RTM Code First Northwind data model working with WCF Data Services:

- Create a new ASP.NET Web application (even an empty project works).

- Install EF 4.1. You can get the final RTM of “magic unicorn” from the Microsoft Download Center.

- Add a reference to EntityFramework.dll. This is the EF 4.1 assembly.

- Create the set of “plain-old CLR object” (POCO) Northwind classes. I basically followed ScottGu’s example by creating a 1:1 mapping for the Employees, Orders, Order Details, Customers, and Products tables in Northwind. OK, not exactly 1:1, since I didn’t want pluralized POCO type names, so I had to use mapping attributes. Again, you can download the project from MSDN Code Gallery to see how I did this.

- Add a reference to System.ComponentModel.DataAnnotations. This assembly defines many of the mapping attributes that I needed to map my POCO classes to the Northwind database.

- Define a context class that inherits from DbContext (I called mine NorthwindContext), which exposes a typed DbSet property for each of my POCO classes (entity sets):

    using System.Data.Entity;   // DbContext, DbSet<TEntity> (EF 4.1)

    public class NorthwindContext : DbContext
    {
        public DbSet<Customer> Customers { get; set; }
        public DbSet<Employee> Employees { get; set; }
        public DbSet<Order> Orders { get; set; }
        public DbSet<OrderDetail> OrderDetails { get; set; }
        public DbSet<Product> Products { get; set; }
    }

- Add the Northwind connection string (named NorthwindContext) to the Web.config file. This is a named connection string, which is basically just a SqlClient connection to the Northwind database; it should point to the SQL Server instance that hosts the Northwind database.

- Add a new item to the project by using the WCF Data Service item template. Detailed steps on how to do this are shown in the WCF Data Services quickstart. Here’s what my data service definition looks like:

    using System.Data.Objects;           // ObjectContext (.NET Framework 4)
    using System.Data.Services;          // DataService<T>, DataServiceConfiguration, EntitySetRights
    using System.Data.Services.Common;   // DataServiceProtocolVersion

    public class Northwind : DataService<ObjectContext>
    {
        // This method is called only once to initialize service-wide policies.
        public static void InitializeService(DataServiceConfiguration config)
        {
            // We need to set these explicitly to avoid
            // returning an extra EdmMetadatas entity set.
            config.SetEntitySetAccessRule("Customers", EntitySetRights.AllRead);
            config.SetEntitySetAccessRule("Employees", EntitySetRights.AllRead);
            config.SetEntitySetAccessRule("Orders", EntitySetRights.All);
            config.SetEntitySetAccessRule("OrderDetails", EntitySetRights.All);
            config.SetEntitySetAccessRule("Products", EntitySetRights.AllRead);
            config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
        }
    }

Note that the type of the data service is ObjectContext and not NorthwindContext; there is a good reason for this. The currently released version of WCF Data Services doesn’t recognize DbContext as an Entity Framework provider, so we need to get the underlying ObjectContext to create the data source.

- Override the CreateDataSource method (inside the Northwind class) to manually get the ObjectContext to provide to the data services runtime:

    // Also requires: using System.Data.Entity.Infrastructure;  (IObjectContextAdapter)

    // We must override CreateDataSource to manually return an ObjectContext;
    // otherwise the runtime tries to use the built-in reflection provider
    // instead of the Entity Framework provider.
    protected override ObjectContext CreateDataSource()
    {
        var nw = new NorthwindContext();

        // Get the underlying ObjectContext for the DbContext.
        var context = ((IObjectContextAdapter)nw).ObjectContext;

        // Turn off dynamic proxies so the runtime works with the plain POCO types.
        context.ContextOptions.ProxyCreationEnabled = false;

        // Return the underlying context.
        return context;
    }

From here, I just published the data service and was able to access Northwind data using Code First as if the project had instead used an .edmx-based Entity Framework data model.
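Once the service is published, clients reach it through ordinary OData URIs. The following is a minimal sketch of how such URIs are composed, not part of Glenn's project: the base address (port 12345 and the Northwind.svc file name) is a hypothetical placeholder, BuildQuery is a hypothetical helper, and the CustomerID and OrderDate property names assume the POCO Order class mirrors the Northwind column names. It only builds strings using the OData v2 system query options $filter, $orderby and $top; it does not call the service.

```csharp
using System;
using System.Linq;

// Hypothetical address of the published service; port and .svc name are assumptions.
const string ServiceRoot = "http://localhost:12345/Northwind.svc";

// Compose an OData v2 query URI from an entity set (or key lookup) plus
// system query options. Only the option values are percent-escaped.
string BuildQuery(string root, string entitySet, params (string Name, string Value)[] options)
{
    var query = string.Join("&",
        options.Select(o => o.Name + "=" + Uri.EscapeDataString(o.Value)));
    var address = root.TrimEnd('/') + "/" + entitySet;
    return query.Length == 0 ? address : address + "?" + query;
}

// A single entity addressed by its key, e.g. the customer 'ALFKI'.
var alfki = BuildQuery(ServiceRoot, "Customers('ALFKI')");

// ALFKI's five most recent orders (assumes Order exposes CustomerID and OrderDate).
var recentOrders = BuildQuery(ServiceRoot, "Orders",
    ("$filter", "CustomerID eq 'ALFKI'"),
    ("$orderby", "OrderDate desc"),
    ("$top", "5"));

Console.WriteLine(alfki);
Console.WriteLine(recentOrders);
```

Because Orders and OrderDetails were granted EntitySetRights.All while the other sets are read-only, only those two sets would accept inserts, updates and deletes through the same addresses.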


Windows Azure AppFabric: Access Control, WIF and Service Bus

Ron Jacobs posted How To Load WF4 Workflow Services from a Database with IIS/AppFabric to the AppFabric Team Blog on 6/16/2011:

Cross Post from Ron Jacobs blog

This morning I saw a message post on the .NET 4 Windows Workflow Foundation Forum titled Load XAMLX from database. I’ve been asked this question many times.

How can I store my Workflow Service definitions (xamlx files) in a database with IIS and AppFabric?

Today I decided to create a sample to answer this question. Lately I’ve been picking up ASP.NET MVC 3 so my sample code is written with it and EntityFramework 4.1 using a code first approach with SQL Server Compact Edition 4.

Download Windows Workflow Foundation (WF4) - Workflow Service Repository Example

AppFabric.tv - How To Build Workflow Services with a Database Repository

Step 1: Create a Virtual Path Provider

Implementing a VirtualPathProvider is fairly simple. The thing you have to keep in mind is that it will be called whenever ASP.NET wants to resolve a file or directory anywhere on the website. You will need a way to determine if you want to provide virtual content. For my example I created a folder in the web site called XAML. This folder is empty but I found that it has to be there or the WCF Activation code will throw an exception.

When I want to activate a Workflow Service that is stored in the database, I use a URI that points to this directory, like this: http://localhost:34372/xaml/Service1.xamlx
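Whether a request is handled by the provider at all comes down to a string test on the app-relative path (the provider's IsPathVirtual method). A minimal, self-contained sketch of that test, offered as a variant rather than Ron's exact code: ToAppRelative here is a hypothetical stand-in for ASP.NET's VirtualPathUtility.ToAppRelative that assumes the application is rooted at "/", and the comparison uses "~/xaml/" with a trailing slash (and an ordinal, case-insensitive comparison) so that a sibling folder such as ~/xamlfiles is not captured.

```csharp
using System;

// Hypothetical stand-in for VirtualPathUtility.ToAppRelative, assuming the
// application is rooted at "/" (the real helper also handles other roots).
string ToAppRelative(string virtualPath) =>
    virtualPath.Length > 0 && virtualPath[0] == '/' ? "~" + virtualPath : virtualPath;

// True when the request targets the virtual ~/xaml folder. The trailing slash
// keeps paths like ~/xamlfiles/... with the previous provider.
bool IsPathVirtual(string virtualPath) =>
    ToAppRelative(virtualPath).StartsWith("~/xaml/", StringComparison.OrdinalIgnoreCase);

string[] samples =
{
    "/xaml/Service1.xamlx",
    "/XAML/Service1.xamlx",
    "/xamlfiles/Other.xamlx",
    "/Views/Home/Index.cshtml",
};

foreach (var path in samples)
{
    Console.WriteLine($"{path} -> {(IsPathVirtual(path) ? "database" : "previous provider")}");
}
```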

    using System;
    using System.Web;           // VirtualPathUtility
    using System.Web.Hosting;   // VirtualPathProvider, VirtualFile

    public class WorkflowVirtualPathProvider : VirtualPathProvider
    {
        #region Public Methods

        public override bool FileExists(string virtualPath)
        {
            return IsPathVirtual(virtualPath)
                ? GetWorkflowFile(virtualPath).Exists
                : this.Previous.FileExists(virtualPath);
        }

        public override VirtualFile GetFile(string virtualPath)
        {
            return IsPathVirtual(virtualPath)
                ? GetWorkflowFile(virtualPath)
                : this.Previous.GetFile(virtualPath);
        }

        #endregion

        #region Methods

        private static WorkflowVirtualFile GetWorkflowFile(string path)
        {
            return new WorkflowVirtualFile(path);
        }

        // TODO (01.1) Create a folder that will be used for your virtual path provider
        // Note: System.ServiceModel.Activation code will throw an exception if there is not a real folder with this name
        private static bool IsPathVirtual(string virtualPath)
        {
            var checkPath = VirtualPathUtility.ToAppRelative(virtualPath);
            return checkPath.StartsWith("~/xaml", StringComparison.InvariantCultureIgnoreCase);
        }

        #endregion
    }

Step 2: Create a VirtualFile class

The VirtualFile class has to load content from somewhere (in this case a database) and then return a stream to ASP.NET. Performance is a concern so you should definitely make use of caching when doing this.
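The loading logic follows the cache-aside pattern: look in the cache, fall back to the database on a miss, then store what was found. A minimal in-memory sketch of that pattern, where a Dictionary stands in for HostingEnvironment.Cache and a hypothetical LoadFromDatabase function stands in for the WorkflowDBContext lookup, shows that only the first request for a given workflow ID reaches the store, while IDs that are not found are never cached:

```csharp
using System;
using System.Collections.Generic;

// Stand-in for HostingEnvironment.Cache (assumption: no expiration or eviction).
var cache = new Dictionary<string, string>();

// Hypothetical "database" of workflow definitions keyed by ID.
var store = new Dictionary<string, string>
{
    ["Service1"] = "<!-- Service1 workflow definition -->",
};
var databaseHits = 0;

// Hypothetical loader standing in for db.Workflows.Find(id); null when missing.
string? LoadFromDatabase(string id)
{
    databaseHits++;
    return store.TryGetValue(id, out var definition) ? definition : null;
}

// Cache-aside: try the cache first, read the store only on a miss,
// and remember what was found so later requests skip the store.
string? GetWorkflowDefinition(string id)
{
    if (cache.TryGetValue(id, out var cached))
    {
        return cached;
    }

    var loaded = LoadFromDatabase(id);
    if (loaded != null)
    {
        cache[id] = loaded;
    }
    return loaded;
}

GetWorkflowDefinition("Service1");   // miss: reads the store
GetWorkflowDefinition("Service1");   // hit: served from the cache
GetWorkflowDefinition("Missing");    // miss: not found, nothing cached
Console.WriteLine($"Database hits: {databaseHits}");
```

In the real provider, keep HostingEnvironment.Cache rather than a plain Dictionary: requests arrive on many threads, and the ASP.NET cache is thread-safe while Dictionary is not.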

    using System;
    using System.IO;
    using System.Text;
    using System.Web.Hosting;

    // Workflow (with a string WorkflowDefinition) and WorkflowDBContext
    // are defined in the sample download.
    public class WorkflowVirtualFile : VirtualFile
    {
        #region Constants and Fields

        private Workflow workflow;

        #endregion

        #region Constructors and Destructors

        public WorkflowVirtualFile(string virtualPath)
            : base(virtualPath)
        {
            this.LoadWorkflow();
        }

        #endregion

        #region Properties

        public bool Exists
        {
            get { return this.workflow != null; }
        }

        #endregion

        #region Public Methods

        public void LoadWorkflow()
        {
            var id = Path.GetFileNameWithoutExtension(this.VirtualPath);
            if (string.IsNullOrWhiteSpace(id))
            {
                // No workflow ID in the path (e.g. a request for the folder itself):
                // leave workflow null so Exists returns false instead of throwing.
                return;
            }

            // TODO (02.1) Check the Cache for workflow definition
            this.workflow = (Workflow)HostingEnvironment.Cache[id];

            if (this.workflow == null)
            {
                // TODO (02.2) Load it from the database
                // Note: I'm using EntityFramework 4.1 with a Code First approach
                using (var db = new WorkflowDBContext())
                {
                    this.workflow = db.Workflows.Find(id);
                }

                // TODO (02.3) Save it in the cache, but only when it was found, so a
                // missing workflow makes FileExists return false rather than throw.
                if (this.workflow != null)
                {
                    HostingEnvironment.Cache[id] = this.workflow;
                }
            }
        }

        /// <summary>
        /// When overridden in a derived class, returns a read-only stream to the virtual resource.
        /// </summary>
        /// <returns>
        /// A read-only stream to the virtual file.
        /// </returns>
        public override Stream Open()
        {
            if (this.workflow == null)
            {
                throw new InvalidOperationException(
                    string.Format("Cannot find workflow definition for {0}", this.VirtualPath));
            }

            // TODO (02.4) Return a stream with the workflow definition:
            // its UTF-8 bytes wrapped in a non-writable MemoryStream.
            var bytes = Encoding.UTF8.GetBytes(this.workflow.WorkflowDefinition);
            return new MemoryStream(bytes, false);
        }

        #endregion
    }

Step 3: Register the Virtual Path Provider

Finally, register the provider in Application_Start and you will be on your way.

    protected void Application_Start()
    {
        Database.SetInitializer(new WorkflowInitializer());

        AreaRegistration.RegisterAllAreas();

        RegisterGlobalFilters(GlobalFilters.Filters);
        RegisterRoutes(RouteTable.Routes);

        // TODO (03) Register the Virtual Path Provider
        HostingEnvironment.RegisterVirtualPathProvider(new WorkflowVirtualPathProvider());
    }


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

The Windows Azure Team explained how Windows Azure Helps Scientists Unfold Protein Mystery and Fight Disease in a 6/16/2011 post:

We love hearing about how Windows Azure helps drive innovation for organizations. Here’s another great example: Microsoft has partnered with the University of Washington’s Baker Laboratory to help scientists supercharge the computing power of their protein folding research by using Windows Azure. Helping scientists get faster results could mean speeding up cures for Alzheimer’s, cancers, salmonella, and malaria.

Earlier this year Microsoft IT’s Marlin Eiben was looking for a project to help demonstrate the “sheer computing power” of Windows Azure and got the idea for partnering with the Baker Lab from talking to his son, a research technologist there.

“We wanted a demonstration project that not only showed how Windows Azure worked, but something that would make a significant difference,” Eiben said. “Through my son’s connection I was aware that this is cutting-edge science, and it seemed a natural application for massive computing power.”

After Eiben got the green light at Microsoft, father and son approached David Baker, the lab’s principal and namesake, who put them in touch with Nikolas Sgourakis, a visiting scholar at the Baker Lab who is using computational modeling to try to help solve the mystery of what proteins look like up close.

Scientist Nikolas Sgourakis (left) is using Windows Azure to boost his protein folding research after father and son Marlin and Chris Eiben (center and right) helped establish a partnership between Microsoft and the University of Washington’s Baker Laboratory, the world’s top computational biology lab.

To deploy and test the lab’s software on Windows Azure, Eiben enlisted the help of his Microsoft IT colleagues Pankaj Arora and Chris Sinco. The two architected, tested and helped scale out a solution. Arora even set up a server under the bed in his home to create and test the Windows Azure deployment.

“What’s interesting about this is that it’s historically not the traditional Windows Azure scenario. More traditional scenarios are Web startups, hosting content, websites and business applications. This really shows the versatility of Windows Azure as a platform,” Arora said.

“Normally, this work would be shared by thousands of private machines owned by people who had donated computing time,” Eiben said. “Among these thousands, someone in Helsinki might offer time, and someone in Sao Paulo, but with Windows Azure Nikolas can get his results much faster and more reliably.”

Sgourakis’ “benchmark” Windows Azure experiment used 2.5 million calculations to essentially check the algorithms and process that he will use. He said the experiment – which used the equivalent of 2,000 computers running for just under a week – was a success. Everything checked out. The second test, the one to compute properties of the salmonella “needle,” runs this week and will take a similar number of computations.

Sgourakis said Windows Azure has been “invaluable” to his research. “This interaction with Microsoft has shown me the power of the cloud. This whole idea behind computing on demand could be very useful for scientists like myself who don’t have the money for on-demand computing time, but who need to get answers right away,” he said. “It’s a most powerful tool that we need to perform our research, and having a resource like Windows Azure available to do groundbreaking work right away is very encouraging to me.”

To learn more, read the feature story on Microsoft News Center.

Doug Rehnstrom explained Why Every ASP.NET Developer Should Know Windows Azure in a 6/16/2011 post to the Learning Tree blog:

Windows Azure for Testing

A Web application must be tested before deploying it to the Internet. To do this effectively, you need a test server. With Windows Azure, you can have an ASP.NET test server, on the Internet, for free. Go to this link to sign up for your free trial, http://www.microsoft.com/windowsazure/free-trial/.

Admittedly, it’s not free forever. Once the free trial is over, though, it will only cost you 5 cents per hour for the time the server is running. So, if you want to put a site online for testing, it will cost you $1.20 per day or $6.00 for the work week. And there’s nothing else to buy. No hardware, no licenses, and no installation or administration. When you’re done, delete the deployment, and it costs nothing.
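The figures above follow from simple per-hour billing. Assuming one instance billed at the quoted 5 cents per hour, left running around the clock, and a five-day work week:

$$
\begin{aligned}
\text{per day:}\quad & \$0.05/\text{h} \times 24\,\text{h} = \$1.20 \\
\text{per work week:}\quad & \$1.20 \times 5 = \$6.00
\end{aligned}
$$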

Windows Azure for Easy Deployment

When I teach ASP.NET, people often want to know details about deployment. With Azure, deployment is seamless and automated by Visual Studio. After a five-minute setup, deploying to Windows Azure is three clicks: right-click on your application in Visual Studio, select Publish, and click OK. The details of deploying your application are handled by the Windows Azure operating system.

Windows Azure for Easy Updates

When you deploy to Azure you can choose to deploy to either “Staging” or “Production”. If you are coming out with an update, first deploy to staging. From there you can make sure everything works. Then, you just flip the production and staging deployments. Later, if you realize you made a mistake, you can even flip them back. You just click the buttons in the Azure Management tool.
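Conceptually, the flip exchanges which deployment answers on the production address, which is why it can be reversed just as quickly. The following C# sketch is only a model of that idea with hypothetical version labels; the real operation is performed with the management tool, not by application code.

```csharp
using System;

// Conceptual model only: two slots, each pointing at a deployed version.
var production = "v1";
var staging = "v1";

// Deploy the update to staging first and verify it there.
staging = "v2";

// A swap exchanges what the two slots point at; nothing is rebuilt.
void Swap() => (production, staging) = (staging, production);

Swap();
Console.WriteLine($"production={production}, staging={staging}");   // production=v2, staging=v1

// If the update turns out to be bad, swapping again restores the previous version.
Swap();
Console.WriteLine($"production={production}, staging={staging}");   // production=v1, staging=v2
```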

Windows Azure for Fault Tolerance

Fault tolerance is achieved by creating redundant servers. If you deploy internally, that means multiple computers, wires, routers, load balancers, multiple copies of your deployments. All of that adds up to money and administration headaches. In Azure, if you want fault tolerance you specify an instance count greater than 1 in application properties.

Windows Azure for Scalability

Scalability is achieved by increasing computing power. This can be done by adding more machines (scaling out) or adding bigger machines (scaling up). In Azure, this is done simply by setting instance count and VM size, again in application properties.

When deploying internally, a company has to buy enough machines to handle its peak periods. In Azure, you can tune instance count and VM size up or down, paying only for the resources you need at any given time.
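Because billing is per instance-hour, the instance count chosen for fault tolerance or scale-out multiplies the cost directly. A worked example, assuming $N$ instances of the same VM size billed at rate $r$ per hour for $t$ hours, and reusing the 5-cent hourly rate quoted earlier (larger VM sizes carry a higher $r$):

$$
C = N \times r \times t, \qquad 2 \times \$0.05/\text{h} \times 24\,\text{h} = \$2.40 \text{ per day}
$$

So running two instances for fault tolerance doubles the $1.20 daily figure for a single instance.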

Some Windows Azure Articles

I’ve written some articles on getting started with Windows Azure. Here are some links.

- Windows Azure Training Series – Understanding Subscriptions and Users

- Windows Azure Training Series – Setting up a Development Environment for Free

- Windows Azure Training Series – Creating Your First Azure Project

- Windows Azure Training Series – Understanding Azure Roles

- Windows Azure Training Series – Deploying a Windows Azure Application

Windows Azure Training

At Learning Tree we have a 4-day class on Windows Azure, course 2602, Windows Azure Platform Introduction: Programming Cloud-Based Applications. We’ll cover everything you need to know. Take a look at the schedule and outline. Hopefully, we’ll see you there.

Adam Hall explained Orchestrator extensibility - Creating TFS Integrations using PowerShell in a 6/15/2011 post to The System Center Team Blog:

We have had a lot of requests for a Team Foundation Server Integration Pack, and the team has been working on, testing and using one internally.

Zhenhua Yao who works in our Orchestrator Engineering team has written up a post describing how we did this, and for those who just can’t wait, he has provided all the instructions on how to get going!

As with all engineering efforts, we are evaluating this Integration Pack and assessing how best it could be made available externally. One thing we do know is that you can do just about anything with the base Orchestrator product using the Standard Activities (formerly Foundation Activities), so if you want to have a read of the article then please do.

Integration Packs are packages of ready-made functions, sometimes combining multiple commands into a single activity, and they also bring change control, packaging and deployment to Orchestrator. But you do not NEED an Integration Pack (in most cases) to be able to integrate with systems, map out process workflows and create runbooks! In this case, you can use PowerShell to integrate with TFS and, if you want to, run the scripts through the Quick Integration Kit to create an IP.

You can read the article here.


Visual Studio LightSwitch

JimmyPS announced ClientUI 5 SP1 Adds Support for LightSwitch in a 6/16/2011 post to the Intersoft Solutions Corporate Blog:

By popular request, we’ve added full support for LightSwitch Beta 2 in the recently released ClientUI 5 service pack 1. At a quick glance, LightSwitch is a Microsoft tool for “developers of all skill levels” who want to build simple business applications in a short time without having to understand most of the underlying technologies. You can find out more about LightSwitch here.

LightSwitch generates applications based on the presentation-logic-data storage architecture. I’m not a huge fan of application generators, though, particularly ones that generate the UI and layout part of the application. IMHO, the user interface and user experience aspects of an application should be authentic and customized to reflect the brand of the associated business or product. Of course, application generators can be useful in certain scenarios, for example, when you need to quickly build internal applications for administrative use.

Some have argued that LightSwitch is amateurish, reminiscent of the old Access, but others consider it appropriate for small businesses with simple needs, which can create their own CRUD applications without having to hire programmers. Nevertheless, we have decided to add full support for LightSwitch as part of our commitment to delivering the best tooling support for Visual Studio development. Click here to download ClientUI 5 service pack 1 with LightSwitch support.

Using ClientUI Controls in LightSwitch

In this blog post, I’d like to share how easy and simple it is to consume ClientUI controls in your LightSwitch application – thanks to the MVVM-ready control architecture. Starting from beta 2, LightSwitch allows nearly all layout and predefined controls to be replaced with custom controls. This includes the list, data grid, and form controls such as text box and date time picker.

In this post, I will show how to replace the default date and time picker with ClientUI’s UXDateTimePicker to provide more appealing date and time selection. Assuming that you already have a sample LightSwitch project ready, open the screen designer for a particular form. In my sample, I used the Employee List Detail screen, which was modeled on our all-time favorite Northwind database.

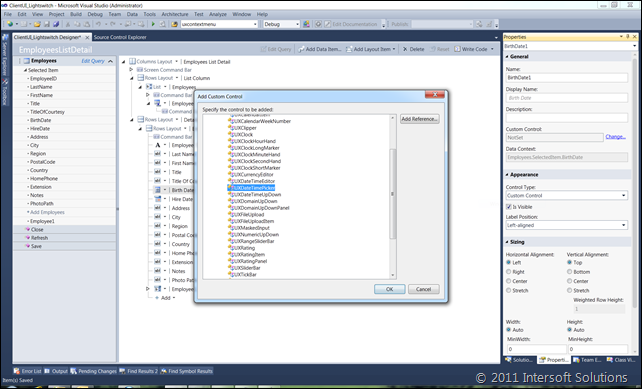

Once the designer is displayed, select one of the date time fields. The “Hire Date” seems to make the most sense here since it uses both the date and time elements. In the property window, change Control Type to Custom Control, then click the Change link to specify the custom control type. You will then be prompted with a dialog box asking you to select the type of the control to use.

Expand the Intersoft.Client.UI.Aqua.UXInput assembly and select UXDateTimePicker, as shown in the following screenshot.

If the desired assembly is not listed, you can browse the assembly by clicking the Add References button and add the desired assembly. Certainly, only compatible Silverlight controls will be accepted.

Now that you’ve specified the custom control, the final step is writing code to bind the data value to the control. I initially expected LightSwitch to do the binding automatically, but unfortunately it doesn’t. You also cannot access the custom control’s properties directly in the designer, which is one of the shortcomings in LightSwitch that I hope will be addressed in the final version.

The following code shows how to implement data binding to the custom control and customize the control’s properties via code.

    // Requires using directives for the LightSwitch presentation types and for the
    // ClientUI namespace that contains UXDateTimePicker.
    namespace LightSwitchApplication
    {
        public partial class EmployeesListDetail
        {
            partial void EmployeesListDetail_Created()
            {
                // get the reference to the UXDateTimePicker
                var datePicker = this.FindControl("HireDate1");

                // set the value binding to the UXDateTimePicker's Value property.
                datePicker.SetBinding(UXDateTimePicker.ValueProperty, "Value");
                datePicker.ControlAvailable += DatePicker_ControlAvailable;
            }

            private void DatePicker_ControlAvailable(object sender, ControlAvailableEventArgs e)
            {
                // e.Control is the actual Silverlight control; skip if it is not the picker.
                var datePicker = e.Control as UXDateTimePicker;
                if (datePicker == null)
                {
                    return;
                }

                datePicker.EditMask = "MM/dd/yyyy hh:mm tt";
                datePicker.UseEditMaskAsDisplayMask = true;
            }
        }
    }

That’s it, we’re all set! Simply press F5 to run the project in the browser. The build process takes much longer than for normal Silverlight projects, so you might need to wait several seconds before the browser window pops up.

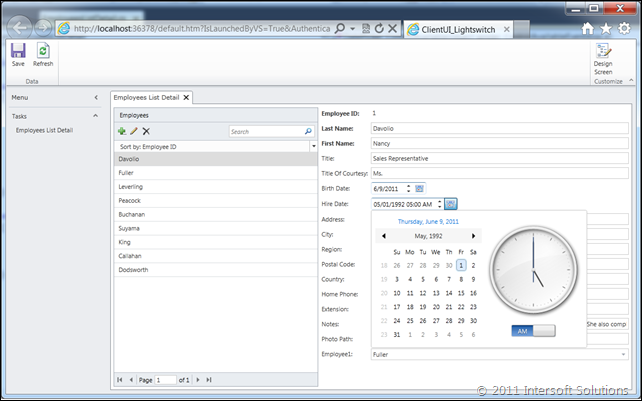

The following screenshot shows the UXDateTimePicker in action. Click on the Hire Date’s picker to see the calendar with an elegant analogue clock. Notice that the date is correctly selected, as well as the time. All good!

Want to give it a try quickly? Download the sample here, extract and run the project. Note that you will need Northwind database installed in your local SQL server.

Licensing ClientUI in LightSwitch Application

Since Microsoft announced the second beta of LightSwitch three months ago, our support team has been constantly bombarded with questions about licensing in LightSwitch. How are you supposed to license ClientUI in such a black-boxed application domain? I will unveil it next; read on.

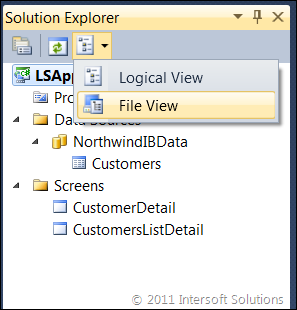

By default, a LightSwitch application shows the logical view in Solution Explorer. This allows developers to quickly skim the information that matters to them – in this case, the data sources and the screens – instead of a huge stack of projects and generated files. Fortunately, LightSwitch still allows you to view the solution in File view. This can be done through the small button at the far right of the Solution Explorer toolbar; please refer to the following screenshot.

The File view is required for ClientUI licensing to work. As you may be aware, to license ClientUI in a Silverlight or WPF app, you need to embed the runtime license file, licenses.islicx, in the root of the main project.

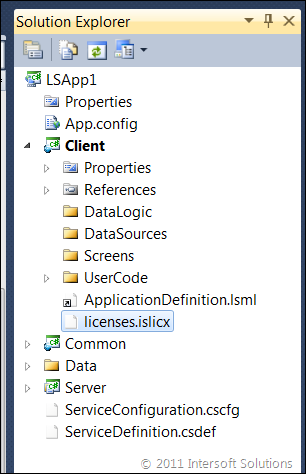

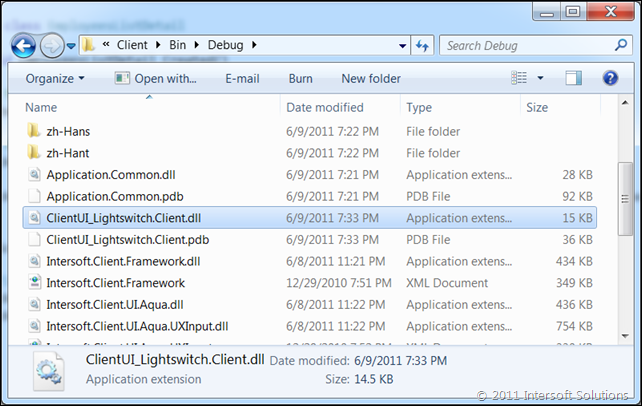

Once you switch to the File view, you will see four projects in Solution Explorer. The correct project to choose for ClientUI licensing is the Client project. You can then follow the normal licensing procedure, such as adding the license file and setting its Build Action to Embedded Resource. See the following screenshot.

And one more thing. LightSwitch generates dozens of assemblies in the output folder, which contain the application building blocks and common runtime used in the LightSwitch application. Make no mistake: the main assembly that you should select for licensing is the one ending in .Client.dll, for example, ClientUI_Lightswitch.Client.dll for a project named ClientUI_Lightswitch.

To make it clear, I included the screenshot below that shows the exact location of the Client assembly required for the ClientUI licensing.
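Because a wrong Build Action only shows up at runtime, it can help to confirm that licenses.islicx actually ended up inside the .Client.dll. A minimal sketch, not an Intersoft tool: it reads manifest resource names directly from the assembly's metadata with the System.Reflection.Metadata PEReader and MetadataReader types, so the Silverlight assembly never has to be loaded. The path is passed as a command-line argument, and because embedded resource names are usually prefixed with the project's default namespace (an assumption about the naming), the check only looks at the suffix.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Reflection.Metadata;
using System.Reflection.PortableExecutable;

// True when any embedded resource name ends with the ClientUI runtime license file.
bool HasEmbeddedLicense(IEnumerable<string> resourceNames) =>
    resourceNames.Any(name => name.EndsWith("licenses.islicx", StringComparison.OrdinalIgnoreCase));

// Read manifest resource names from the PE metadata without loading the assembly.
List<string> ReadResourceNames(string assemblyPath)
{
    using var stream = File.OpenRead(assemblyPath);
    using var peReader = new PEReader(stream);
    var metadata = peReader.GetMetadataReader();
    return metadata.ManifestResources
        .Select(handle => metadata.GetString(metadata.GetManifestResource(handle).Name))
        .ToList();   // materialize before the reader is disposed
}

// Usage: pass the path of the built Client assembly, e.g. ClientUI_Lightswitch.Client.dll.
if (args.Length > 0)
{
    var names = ReadResourceNames(args[0]);
    Console.WriteLine(HasEmbeddedLicense(names)
        ? "licenses.islicx is embedded."
        : "licenses.islicx not found; check that its Build Action is Embedded Resource.");
}
```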

I hope this blog post gives you a quick start on building ClientUI-powered LightSwitch application. Please post your feedback, thoughts or questions in the comment box below.


Windows Azure Infrastructure and DevOps

Bill Claybrook explained How providers affect cloud application migration in a 6/16/2011 post to SearchCloudComputing.com:

In an attempt to reduce lock-in, improve cloud interoperability and ultimately choose the best option for their enterprises, more than a few cloud computing users have been clamoring for the ability to seamlessly migrate applications from cloud to cloud. Unfortunately, there's more to application migration than simply moving an application into a new cloud.

To date, cloud application migration has focused on moving apps back and forth between a virtualized data center or private cloud environment and public clouds such as Amazon Elastic Compute Cloud, Rackspace or Savvis. There is also a group of public cloud-oriented companies that are looking to move applications to private clouds or virtualized data centers to save money. Still others are interested in moving applications from one public cloud to another in a quest for better service-level agreements and/or performance at a lower cost.

What are some of the worries in moving an application from one environment to another?

- Data movement and encryption, both in transit and when it reaches the target environment.

- Setting up networking to maintain certain relationships in the source environment and preparing to connect into different network options provided by the target environment.

- The application itself, which lives in an ecosystem surrounded by tools and processes. When the application is moved to a target cloud, you may have to re-architect it based on the components/resources that the target cloud provides.

Applications are being built in a number of ways using various Platform as a Service offerings, including Windows Azure, Google App Engine and Force.com. With a few exceptions for Windows Azure, the applications you create using most platforms are not very, if at all, portable. If you develop on Google App Engine, then you have to run on Google App Engine.

Public clouds, like Amazon, also allow you to build applications. This is similar to building in your data center or private cloud, but public clouds may place restrictions on resources and components you can use during development. This can make testing difficult and create issues when you try to move the application into production mode in your data center environment or to another cloud environment.

Applications built in data centers may or may not be easily moved to target cloud environments. A large number of applications use third-party software, such as database and productivity applications. Without access to source code, proprietary third-party applications may be difficult to move to clouds when changes are needed. …

Bill continues with

- The complications of cloud application migration

- Tools to facilitate application migration in clouds

- Cloud application migration takeaways

topics.

Full disclosure: I’m a paid contributor to SearchCloudComputing.com.

Teyo Tyree described Delivering on the Promise of Cloud Infrastructure with Puppet in a guest post of 6/16/2011 to the CloudTimes blog:

Four years ago, the idea of running large infrastructures in elastic virtualized compute clusters seemed outlandish. Now, it’s commonplace. A convergence of technology, cost, and need has led to a revolutionary way of accessing compute resources. Service providers such as Rackspace and Amazon have democratized access to enterprise-grade infrastructure. At small scale, this has enabled individuals to gain on-demand access to compute resources that would otherwise have required massive upfront investment. For large deployments, cloud infrastructure promises speed and operational efficiency by providing seemingly limitless and instantaneous access to compute resources.

Advances in Cloud-Based Infrastructure

Core virtualization technologies have been wrapped in simple APIs. Compute resources can be dynamically provisioned. These resources can be located within the corporate firewall or offered as services from external providers. Most importantly, these computing resources are dynamically available and effectively limitless.

The utilization of cloud-based infrastructures has served to shorten the time between identification of business need and resource provisioning. With the bottlenecks of basic provisioning and capacity removed, the major challenge for resource allocation becomes configuring the compute resources to provide high level business value. This is the traditional system integration challenge[, just] at cloud speed. Without automation, provisioning services in the cloud becomes the new bottleneck. Manual systems integration is not an option—people aren’t fast enough to keep up.

If you can launch a thousand virtual machines in the cloud, but it takes you two weeks to configure those thousand machines to service a business need, you aren’t gaining any advantage. Automation is a key component to realizing the promise of cloud infrastructure.

Granular automation: Managing complexity through composition

Cloud infrastructure delivers on-demand access to compute nodes. Typically compute nodes are deployed as machine images. Unless your infrastructure is completely homogeneous, you will end up managing multiple images. As your infrastructures grow in complexity, the number of images to manage grows exponentially. Granular automation of the configuration components within an image allows you to easily manage shared configuration while taking the points of differentiation into account.
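To make the growth argument concrete, here is a small Python sketch. It assumes nodes differ along a few independent axes; the axis names and values (roles, OS releases, environments) are hypothetical and chosen only for the arithmetic. Baking one image per combination multiplies the counts, while composing one reusable configuration component per value only adds them.

```python
from itertools import product
from math import prod

# Hypothetical axes along which nodes in an infrastructure differ.
dimensions = {
    "role": ["web", "app", "db"],
    "os_release": ["release_a", "release_b"],
    "environment": ["dev", "staging", "prod"],
}


def images_needed(dims):
    """One baked image per combination of every axis's values (multiplicative)."""
    return prod(len(values) for values in dims.values())


def components_needed(dims):
    """One reusable configuration component per value, composed at deploy time (additive)."""
    return sum(len(values) for values in dims.values())


if __name__ == "__main__":
    combos = list(product(*dimensions.values()))  # every distinct node type
    print(f"baked images: {images_needed(dimensions)} ({len(combos)} combinations)")
    print(f"composable components: {components_needed(dimensions)}")
```

With these hypothetical axes the image approach needs 18 images against 8 components, and adding another axis multiplies the first number but only adds to the second.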

Consider a simple web application consisting of a web server, an application server, and a database server. Each of these roles needs a baseline of common configuration, along with role-specific settings. The web server needs to know about the application server, and the application server needs to know about the database server. Commonly, these nodes need a baseline set of security settings, user accounts, and administrative tools. If we choose to use images to deliver these nodes, we will be duplicating all of the common configuration and hard-coding all of the shared information. Even in this very simple example we would have three images to maintain. In more complex environments, the overhead of image management becomes untenable, and image-based management rarely survives long within IT organizations. Administrators generally automate the configuration above a base operating system, factor out the common elements, and implement automation that specifies the required differences.
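The composition approach for the three-tier example can be sketched in Python. This is not Puppet's manifest language; the baseline contents, role names, host names and setting keys are all hypothetical. Each node gets a copy of the shared baseline plus its role's settings, and cross-node facts (which app server the web tier uses, which database the app tier uses) are looked up at composition time instead of being baked into an image.

```python
import copy

# Hypothetical baseline every node receives: security packages, user accounts, admin tools.
BASELINE = {
    "packages": ["openssh-server", "sudo"],
    "users": ["ops"],
    "admin_tools": ["monitoring-agent"],
}

# Role-specific settings. Each function receives the role -> host map, so
# relationships between nodes are resolved rather than hard-coded.
ROLE_SETTINGS = {
    "web": lambda hosts: {"upstream_app_server": hosts["app"]},
    "app": lambda hosts: {"database_server": hosts["db"]},
    "db": lambda hosts: {"listen_address": "0.0.0.0"},
}


def compose(hosts):
    """Return host -> configuration, built as baseline + role-specific settings."""
    nodes = {}
    for role, host in hosts.items():
        config = copy.deepcopy(BASELINE)           # each node gets its own copy of the baseline
        config.update(ROLE_SETTINGS[role](hosts))  # layer the role's differences on top
        nodes[host] = config
    return nodes


nodes = compose({"web": "web01", "app": "app01", "db": "db01"})
print(nodes["web01"]["upstream_app_server"])  # app01
```

Renaming the application server then means changing one entry in the host map, not rebuilding the web server's image.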

Managing change and building a dynamic Configuration Management Database (CMDB)

Naive automation implementations procedurally roll out changes across a population of machines. Recipes are rolled out to a set of systems the same way one would bake a cake. You have one shot at an ideal configuration. There are no tools to determine the state of a system. There are no high level specifications that must be met. Modern tools take a different approach. They should allow you to:

- specify the state of individual resources on a node

- specify the relationship between individual resources or collections of resources

- simulate the process of bringing a node into sync with its specification

- inspect the state of a given node

- dynamically build a queryable inventory of all of the resources and nodes that you are managing
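The five capabilities listed above can be illustrated with a minimal desired-state model in Python. It is a sketch under stated assumptions, not Puppet's resource abstraction layer or API. The resource names and attributes are hypothetical, "in sync" simply means the current attributes equal the desired ones, and dependency ordering uses the standard library's `graphlib` (Python 3.9+).

```python
from graphlib import TopologicalSorter  # Python 3.9+


class Resource:
    """Desired state for one named resource, plus the resources it requires."""

    def __init__(self, name, desired, requires=()):
        self.name = name
        self.desired = dict(desired)
        self.requires = set(requires)


class Node:
    def __init__(self, hostname, state=None):
        self.hostname = hostname
        self.state = dict(state or {})  # resource name -> current attributes

    def inspect(self, name):
        """Report the current state of one resource (None if absent)."""
        return self.state.get(name)


def plan(node, resources):
    """Out-of-sync resources in dependency order. Assumes every required name is in `resources`."""
    by_name = {r.name: r for r in resources}
    order = TopologicalSorter({r.name: r.requires for r in resources}).static_order()
    return [name for name in order if node.inspect(name) != by_name[name].desired]


def converge(node, resources, noop=False):
    """Bring the node in line with its specification; with noop=True, only simulate."""
    by_name = {r.name: r for r in resources}
    changes = plan(node, resources)
    if not noop:
        for name in changes:
            node.state[name] = dict(by_name[name].desired)
    return changes


def inventory(nodes):
    """Queryable inventory: (hostname, resource name) -> current attributes."""
    return {(n.hostname, name): attrs for n in nodes for name, attrs in n.state.items()}


resources = [
    Resource("nginx-package", {"ensure": "installed"}),
    Resource("nginx-config", {"ensure": "file", "mode": "0644"}, requires=["nginx-package"]),
    Resource("nginx-service", {"ensure": "running"}, requires=["nginx-config"]),
]
web01 = Node("web01", {"nginx-package": {"ensure": "installed"}})
print(converge(web01, resources, noop=True))  # ['nginx-config', 'nginx-service']
converge(web01, resources)
print(converge(web01, resources, noop=True))  # []
```

Because the specification is declarative, running `converge` again is harmless: an in-sync node produces an empty change list, which is what separates this approach from rolling out a recipe once.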

By providing high level interfaces and APIs, modern automation systems enable accurate and rapid deployment.

- Change can be managed over the lifetime of a node.

- Simple changes can be deployed dynamically.

- Infrastructure can be modeled in code and built using simple tools.

- The state of an infrastructure can be discovered and managed.

At cloud scale, modern automation tools are key enablers of the velocity and consistency promised by the cloud.

Teyo is VP of Business Development and Co-Founder of Puppet Labs.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Ellen Messmer asserted “Virtualization, cloud computing involve unique challenges” in a deck for her Gartner: IT should be planning, moving to private clouds article of 6/15/2011 for NetworkWorld:

If speedy IT services are important, businesses should be shifting from traditional computing into virtualization in order to build a private cloud that, whether operated by their IT department or with help from a private cloud provider, will give them that edge.


That was the message from Gartner analysts this week, who sought to point out paths to the private cloud to hundreds of IT managers attending the Gartner IT Infrastructure, Operations & Management Summit 2011 in Orlando. Transitioning a traditional physical server network to a virtualized private cloud should be done with strategic planning in capacity management and staff training.

"IT is not just the hoster of equipment and managing it. Your job is delivery of service levels at cost and with agility," said Gartner analyst Thomas Bittman. He noted that virtualization is the path to operating a private cloud, where IT services can be quickly supplied to those in the organization who demand them, often on a chargeback basis.

Gartner analysts emphasized that building a private cloud is more than just adding virtual machines to physical servers, which is already happening with dizzying speed in the enterprise. Gartner estimates about 45% of x86-based servers carry virtual-machine-based workloads today, with that number expected to jump to 58% next year and 77% by 2015. VMware is the distinct market leader, but Microsoft with Hyper-V is regarded as growing, and Citrix with XenServer, among others, is a contender as well.

"Transitioning the data center to be more cloud-like could be great for the business," said Gartner analyst Chris Wolf, adding, "But it causes you to make some difficult architecture decisions, too." He advised Gartner clientele to centralize IT operations, look to acquiring servers from Intel and AMD optimized for virtualized environments, and "map security, applications, identity and information management to cloud strategy."

Although cloud computing equipment vendors and service providers would like to insist that it's not really a private cloud unless it's fully automated, Wolf said the reality is "some manual processes have to be expected." But the preferred implementation would not give the IT admin the management controls over specific virtual security functions associated with the VMs.

Regardless of which VM platform is used — there is some mix-and-match in the enterprise today though it poses specific management challenges — Gartner analysts say there is a dearth of mature management tools for virtualized systems.

"There's a disconnect today," said Wolf, noting that at a recent forum Gartner held for more than a dozen CIOs whose organizations are building private clouds, more than 75% said they were using home-grown management tools for things like hooking into asset management systems and ticketing.

Nevertheless, Gartner is urging enterprises to put together a long-term strategy for the private cloud and brace for fast-paced changes among vendors and providers that will bring new products and services and, no doubt, a number of market drop-outs along the way.

<Return to section navigation list>

Cloud Security and Governance

Matthew Weinberger (@MattNLM) expanded on Chris Hoff’s recent move in his Cisco Cloud Security Vet Jumps to Juniper Networks post of 6/16/2011 to the TalkinCloud blog:

Chris Hoff, most recently director of Cloud and Virtualization Solutions in Cisco Systems’ Security Technology Business Unit (STBU), has announced on his personal blog that he’s jumping ship to competitor Juniper Networks. The blog in question is refreshingly candid: While Hoff never exactly strays from propriety or professionalism when discussing his departure from Cisco, his reasoning only reinforces the notion that Cisco faces its share of challenges.

Here’s Hoff’s reasoning on why Juniper was an offer he couldn’t refuse, in his own words:

OK: Lots of awesome people, innovative technology AND execution, a manageable size, some very interesting new challenges and the ever-present need to change the way the world thinks about, talks about and operationalizes “security.”

Reading between the lines, here’s what it sounds like he’s really saying to me: Juniper is nimble at a time when Cisco is in transition and trying to re-focus on its core businesses. That’s a familiar theme, as noted by The VAR Guy himself.

Juniper Networks has been promoting one single solitary message to its partners in 2011: The time to move to the cloud is now. Hoff isn’t even the first Cisco executive that Juniper has poached in 2011: Former Cisco channel executive Luanne Tierney switched to Juniper earlier this year.

Cisco is one of the oldest and most venerated names in the IT sphere. But Juniper has been showing signs of growth at the same time that Cisco has been showing signs of struggle.

Matthew J. Schwartz asserted “Industry council warns companies that handle cardholder data in virtualized environments, including cloud: Don't skimp on security requirements” in a deck for his PCI Updates Rules for Customer Data In Cloud article for InformationWeek:

Memo to organizations that store cardholder data in virtualized environments, including the cloud: Don't skimp on security.

That's the main message contained in new guidance, released Tuesday by the Payment Card Industry (PCI) council, for organizations that handle cardholder data and thus must comply with the council's data security standard, PCI DSS.


"You're not relieved of any of the PCI DSS requirements here. If you've got to do them in the real world, you've got to do them in a virtualized world too," said Bob Russo, general manager of the PCI Security Standards Council, by telephone.

Already, PCI DSS version 2.0, which went into effect in January 2011, had specified that cardholder data stored in virtualized environments was covered by the standard. (Businesses still on PCI 1.2 must comply with the new version by the end of the year.) But when it came to investigating virtualization and its PCI implications in greater depth, "we were able to work within the existing compliance framework," said Kurt Roemer, chief security officer of Citrix, in a telephone interview.

Accordingly, "this is supplemental guidance, these are not new requirements within the standard," said Russo. That means PCI-compliant organizations storing cardholder data in virtualized environments won't have to start from scratch.

The PCI council's security caution over virtualization is justified, because virtualized environments are susceptible to types of attacks not seen in any other environment. Furthermore, many businesses embrace virtualization to cut costs, but skimp on securing the environment.

"Security tends to be an afterthought in any environment, not just virtualized environments, and our job is to help people understand this," said Russo. "We're always telling people this is about security, and not compliance. If you're secure, compliance comes along as a byproduct."

The new "PCI DSS Virtualization Guidelines" specifies four principles. First, PCI DSS security requirements apply to cardholder data, even if stored in virtualized environments. Second, organizations have to audit the risks--which may be unique--associated with using virtualized environments. Third, the council wants to see detailed knowledge of each relevant virtualized environment, "including all interactions with payment transaction processes and payment card data." Finally, the guidance warns that "there is no one-size-fits-all method or solution to configure virtualized environments to meet PCI DSS requirements" and said that specific controls and procedures will necessarily vary by environment.

To help organizations get a handle on PCI and virtualization, the new guidelines also detail techniques for assessing risk in virtualized environments, and specify which aspects of virtualized environments are--or aren't--within the scope of PCI compliance, and thus liable to be assessed during an audit by qualified security assessors (QSAs). "This is a document not only for the QSAs, but also for merchants and people wanting to use virtualized environments. So it better prepares them for what they're going to be asked by QSAs," said Russo.

Don't look for guidance on specific types of technology, but rather core virtualization security challenges. "If you look at the standard, we try to be as technology-agnostic as possible, and as we address virtualization going forward, we recognize that numerous areas will evolve--storage, virtual networking, cloud computing-- but the requirements to manage the technology will probably not change, rather the risks will evolve, and we'll address those," said Troy Leach, the council's chief standards architect, in a telephone interview.

The new guidance applies to storing PCI data in the cloud too. "What we did was adopt the NIST definition of cloud computing, and we abstracted that down to three types of computing as a service--software, platforms, and infrastructure," said Citrix's Roemer. In future PCI versions, "that's probably the one area of the document that would need to be updated more," he said, "but cloud computing is being used for PCI environments today, there are a lot of benefits in doing so."

The new guidance was produced in part by the PCI council's virtualization special interest group, which includes representatives from 33 different organizations--from Bank of America and Cisco to Southwest Airlines and Stanford University. Overall, it included "QSAs and auditors, merchants, and vendors, we had a broad brush of people across the PCI ecosystem," said Roemer, who leads the special interest group.

<Return to section navigation list>

Cloud Computing Events

Velocity Conference 2011 posted links to live videos of keynotes and sessions for 6/14, 6/15 and 6/16/2011. Hopefully, the links will connect to archives shortly (stay tuned):

Tuesday, June 14th

- 7:00pm - 8:30pm: Ignite Velocity

Wednesday, June 15th

- 8:30am - 8:40am: Opening Remarks - Jesse Demonstrates Caffeine IV, Steve Souders (Google), Jesse Robbins (Opscode), John Allspaw (Etsy.com)

- 8:40am - 8:55am: Career Development, Theo Schlossnagle (OmniTI)

- 8:55am - 9:25am: JavaScript & Metaperformance, Douglas Crockford (Yahoo! Inc.)

- 9:25am - 9:45am: Look at Your Data, John Rauser (Amazon)

- 9:45am - 10:00am: Testing and Monitoring Mobile Apps, Vik Chaudhary (Keynote Systems, Inc.), Manny Gonzalez (Keynote Systems)

Morning Break

- 10:25am - 10:45am: Change = Mass x Velocity, and Other Laws of Infrastructure, Mark Burgess (Cfengine)

- 10:45am - 11:15am: Lightning Demos Wednesday, Marcel Duran (Yahoo!), Patrick Meenan (Google), Dave Johnson (Nitobi), Steve Souders (Google)

- 11:15am - 11:20am: Your Mobile Performance - Analyze and Accelerate, Michael Kuperman (Cotendo), Ronni Zehavi (Cotendo)

- 11:20am - 11:35am: From Inception to Acquisition: One Startup's Journey through the Cloud, Patrick Lightbody (BrowserMob)

- 11:35am - 11:50am: O'Reilly Radar, Tim O'Reilly (O'Reilly Media, Inc.)

Thursday, June 16th

- 8:30am - 8:35am: Opening Remarks and Belly Dancing, Jesse Robbins (Opscode), Steve Souders (Google), John Allspaw (Etsy.com)

- 8:35am - 8:50am: World IPv6 Day: What We Learned, Ian Flint (Yahoo!)

- 8:50am - 9:20am: Facebook Open Compute & Other Infrastructure, Jonathan Heiliger (Facebook)

- 9:20am - 9:35am: Velocity Culture, Jon Jenkins (Amazon.com)

- 9:35am - 9:40am: Artur on SSD's, Artur Bergman (Wikia/Fastly)

- 9:40am - 9:55am: Cisco and Open Stack, Lew Tucker (Cisco)

Morning Break

- 10:20am - 10:35am: State of the Infrastructure, Rachel Chalmers (The 451 Group)

- 10:35am - 11:05am: Holistic Performance, John Resig (Mozilla Corporation)

- 11:05am - 11:35am: Lightning Demos Thursday, Michael Schneider (Google), Andreas Grabner (dynaTrace Software), Paul Irish (jQuery Developer Relations), Sergey Chernyshev (truTV)

- 11:35am - 11:40am: Cast - The Open Deployment Platform, Paul Querna (Rackspace)

More Velocity Video

See more keynote video and other related interviews from the 2011 Velocity Conference on YouTube, velocityconference.blip.tv, and as a podcast subscription.

The current Blip.tv videos are from Velocity 2010.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

UnbreakableCloud reported 10,000 Cores Cluster on EC2 using Opscode Hosted Chef under 45 Minutes in a 6/16/2011 post:

Opscode, Inc., the leader in cloud infrastructure automation, yesterday at the O’Reilly Velocity Conference in Santa Clara, Calif., announced its latest infrastructure automation solution, Opscode Private Chef™ (OPC). The Private Chef appliance provides Opscode Hosted Chef’s™ highly available, dynamically scalable, fully managed and supported automation environment behind the corporate firewall.

According to CycleComputing.com, Cycle Computing already built a 10,000-core Linux cluster in Amazon EC2 in April for Genentech to run protein analysis; the recent news is that it used Opscode Hosted Chef to build the 10,000-core cluster to run HPC jobs in the cloud. This is a big milestone for creating HPC infrastructure in the cloud at low cost. The news indicates that building such a cluster from discrete servers in a data center might cost about $5 million, while Cycle Computing built it for about $8,500, a significant value proposition for High Performance Computing in the cloud.

If life sciences or any other data mining or analysis work needs massive high-performance computing power, public clouds such as Amazon EC2 can now offer that infrastructure at a fraction of the cost. For more information, please visit http://cyclecomputing.com.

James Saull (@jamessaull) described Google App Engine and instant-on for hibernating applications in a 6/16/2011 post to the CloudComments.net blog:

At the #awssummit @Werner mentioned the micro instances and how they were useful to support use cases such as simple monitoring roles or to host services that need to always be on and listen for occasional events. @simonmunro cynically suggested it was a way of squeezing life out of old infrastructure.

I would prefer applications to hibernate, release resources and stop billing me, but come back instantly on demand.

Virtual Machine centric compute clouds (whether they be PaaS or IaaS oriented) exhibit this annoying “irreducible minimum” issue, whereby you have to leave some lights on. Not necessarily for storage, cloud databases or queues such as S3, SQS, SimpleDB, SES, SNS, Azure Table Storage etc. – they are always alive and will respond to a request irrespective of volume or frequency. They scale from 0 upwards. Not so for compute. Compute typically scales from 1 or 2 upwards (depending on your SLA view of availability).

This is one feature I really like about Google’s App Engine. The App Engine fabric brokers incoming requests, resolves it to the serving application and launches instances of the application if none are already running. An application can be idle for weeks consuming no compute resources and then near-instantly burst into life and scale up fast and furiously before returning to 0 when everything goes quiet. This is how elasticity of compute should behave.
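The scale-from-zero behavior described above can be modeled with a toy broker in Python. This is a hypothetical sketch, not how App Engine is implemented: it keeps at most one instance per application, starts it on the first request, and reclaims it after an idle timeout. The 600-second default mirrors the roughly ten minutes observed in the dashboard stages of this post, not a documented App Engine setting, and scaling out beyond one instance is not modeled.

```python
class ScaleToZeroBroker:
    """Hypothetical request broker: at most one instance per app, started on
    demand and reclaimed after an idle timeout. Scale-out is not modeled."""

    def __init__(self, idle_timeout_s=600.0):
        self.idle_timeout_s = idle_timeout_s
        self.last_request = {}  # app name -> time of the last request its live instance served

    def handle(self, app, now):
        """Route a request at time `now`; return True if an instance had to be started."""
        cold_start = app not in self.last_request
        self.last_request[app] = now
        return cold_start

    def reap(self, now):
        """Reclaim instances that have been idle for at least idle_timeout_s seconds."""
        idle = [app for app, t in self.last_request.items() if now - t >= self.idle_timeout_s]
        for app in idle:
            del self.last_request[app]

    def running(self):
        return sorted(self.last_request)


broker = ScaleToZeroBroker()
print(broker.handle("my-app", now=0))  # True: no instance yet, cold start
print(broker.handle("my-app", now=5))  # False: served by the warm instance
broker.reap(now=300)                   # idle 295 s, instance survives
broker.reap(now=605)                   # idle 600 s, instance reclaimed
print(broker.running())                # []
```

Between the last request and the reap, the application holds no compute at all, which is the "scale from 0" property the post contrasts with VM-centric clouds.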

My own personal application on App Engine remains idle most of the time. I am unable to detect that my first request has forced App Engine to instantiate an instance of my application first. My application is simple, but a new instance of my Python application will get a memcached cache-miss for all objects and proceed to the datastore to query for my data, put this data into the cache, and then pass the view-model objects to Django for rendering. Brilliantly fast. I can picture a pool of Python EXEs idling and suddenly the fabric picks one, hands it a pointer to my code base and an active request – bam – instant-on application.
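The request path described above is the cache-aside pattern. A minimal Python sketch follows. It does not use the App Engine memcache or datastore APIs: a plain dict stands in for memcache, `Datastore` is a hypothetical class that counts queries, and the Django rendering step is omitted.

```python
class Datastore:
    """Hypothetical stand-in for a persistent datastore; counts queries so cache hits are visible."""

    def __init__(self, rows):
        self.rows = dict(rows)
        self.queries = 0

    def get(self, key):
        self.queries += 1
        return self.rows.get(key)


def get_view_model(key, cache, datastore):
    """Cache-aside read: try the cache, fall back to the datastore, then populate the cache."""
    value = cache.get(key)
    if value is None:           # miss: always the case on a freshly started instance
        value = datastore.get(key)
        if value is not None:
            cache[key] = value  # later requests are served from the cache
    return value


store = Datastore({"home": {"title": "My page"}})
cache = {}                            # a plain dict standing in for memcache
get_view_model("home", cache, store)  # cold: goes to the datastore
get_view_model("home", cache, store)  # warm: served from the cache
print(store.queries)                  # 1
```

A new instance pays the datastore round trip only on its first miss for each key, which is why a cold start can still feel fast for a small application.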

For those applications that cannot give good performance from a cold start, App Engine supports the notion of “Always On” by forcing instances to stay alive with caches all loaded and ready for action: http://code.google.com/appengine/docs/adminconsole/instances.html

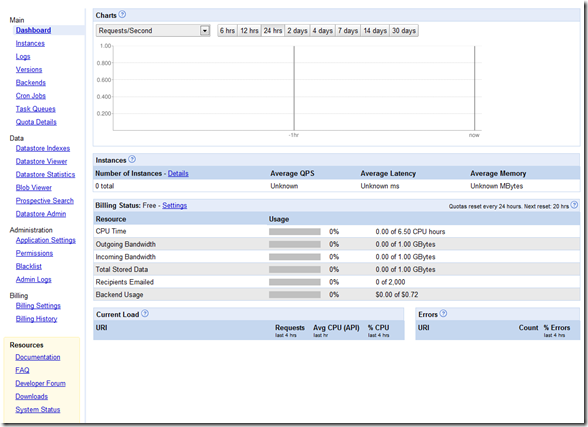

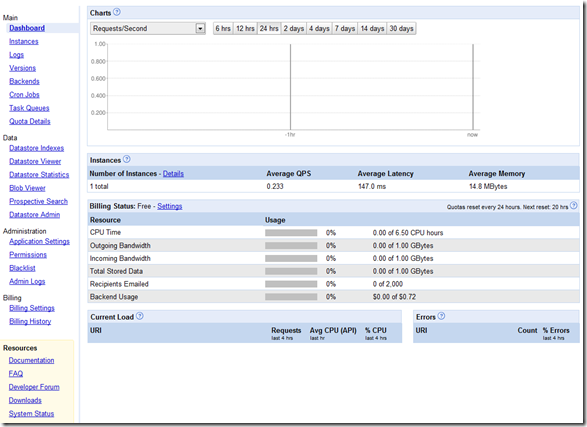

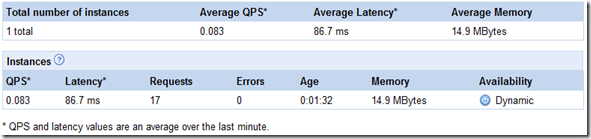

The screen shots below show my App Engine dashboard before my first request, how it spins up a single instance to cope with demand followed by termination after ten minutes of being idle.

Stage 1: View of the dashboard – no instances running – no activity in the past 24 hours

Stage 2: A request has created a single instance of the application and the average latency is 147ms. The page appeared PDQ in my browser.

Stage 3: 17 requests later, the average latency has dropped. One instance is clearly sufficient to support one user poking around.

Stage 4: I left the application alone for nearly ten minutes. My instance is still alive, but nothing is happening.

Stage 5: After about ten minutes of being idle my application instance vanishes. App Engine has reclaimed the resources.

It will be interesting to see how GAE’s migration to a more conventional instance-hours billing system will affect the preceding sequence.

Derrick Harris (@derrickharris) asserted VMware's platform play could use a cloud connection in a 6/14/2011 post to Giga Om’s Structure blog:

After an acquisition spree that began with SpringSource almost two years ago, VMware (s vmw) unveiled the fruits of its labor on Tuesday with an application platform dubbed vFabric 5. As an integrated collection of those purchased components, vFabric 5 is a robust offering, but it’s a bit surprising to see how distinct VMware’s on-premise platform strategy is from its cloud-based strategy.

Unlike its vCloud portfolio that seeks to unify the experience of managing both on-premise and cloud-based infrastructure, VMware’s options for deploying and running applications are almost entirely separate experiences.

Cloud Foundry

In the public cloud, VMware is pushing its Cloud Foundry Platform-as-a-Service, which is an open-source offering designed to make it easier for developers to launch web applications on cloud computing resources. Developers can access the code for free via Github, or pay VMware for its hosted version. Cloud Foundry supports a variety of development languages and frameworks, including Java (s orcl), Spring, Ruby on Rails and Node.js. Whatever the case, though, it’s a far different experience than using vFabric on premise.

vFabric

vFabric 5 is an on-premise product designed to run atop VMware’s vSphere hypervisor and virtualization-management software. It consists of the following core components VMware was busy buying recently:

- Spring tc Server

- Spring Insight Operations

- GemFire distributed database

- RabbitMQ messaging system

- Hyperic monitoring software

- Enterprise-grade Apache web server.

vFabric 5 is a Java application platform, although Dave McJannet, VMware’s director of product marketing for vFabric, explained to me during a recent phone call that it’s optimized for applications developed using the Spring framework.

At this point, the cloudiest aspect of vFabric 5 is its licensing model. As McJannet explained, it’s based on the number of virtual machines licensed and uses an average-usage approach. Users can run as many or as few VMs as they need at any given time, provided that the average number of VMs in use during the year doesn’t exceed the number of licenses originally purchased. This model does allow a fair amount of flexibility in that it lets customers determine a pool of resources and use them as they wish, but it’s not exactly the same type of pay-per-use flexibility one associates with the public cloud.
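The average-usage rule McJannet describes can be expressed as a simple check. This Python sketch assumes daily samples of VMs in use and a plain arithmetic mean; the post does not say how VMware actually measures usage, and the usage figures are hypothetical.

```python
from statistics import fmean


def within_license(vm_counts, licensed_vms):
    """True if the average number of VMs in use across the samples stays within the license count."""
    if not vm_counts:
        raise ValueError("need at least one usage sample")
    return fmean(vm_counts) <= licensed_vms


# Hypothetical year sampled daily: 4 VMs for 183 days, a 16-VM peak for 182 days.
usage = [4] * 183 + [16] * 182
print(within_license(usage, licensed_vms=10))  # True: peak is 16, but the average is about 9.98
```

The peak exceeds the 10 licenses while the yearly average stays under them, which is the flexibility the model allows; pay-per-use billing would instead charge for each VM-hour actually consumed.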

Why they’ll merge

What’s interesting at this point is how vFabric and Cloud Foundry are so disconnected, especially considering how closely VMware has aligned its vCloud management software with its vCloud service provider program at the Infrastructure-as-a-Service level. Of course, VMware’s IaaS strategy took many years to shape up, so perhaps closer platform-layer alignment is on the way between vFabric, Cloud Foundry, and even its Spring-centric PaaS partnerships with Salesforce.com (s crm) and Google (s goog). McJannet did note that some vFabric components are available within Cloud Foundry, but the components are about the only connection between the two offerings.

As DotCloud Founder and CEO Solomon Hykes explained to me last month, PaaS, with its focus on automation, is all about upsetting the legacy application-platform market presently dominated by Oracle (s orcl) and IBM (s ibm) with their Java-centric WebLogic and WebSphere offerings. One would expect that VMware, a PaaS provider itself, has plans to PaaSify vFabric at some point. It might be a while, though, because, as VMware’s Mathew Lodge acknowledged to me in February, although VMware does foresee its platform business growing as big as its legacy infrastructure business, it could take several years or more.

I suspect VMware CEO Paul Maritz will offer some insights into his company’s expanding platform and overall cloud computing strategies when he sits down for a fireside chat with Om Malik at next week’s Structure 2011 conference in San Francisco. VMware’s cloud CTO and chief architect Derek Collison will also be on hand to discuss the future of PaaS.

<Return to section navigation list>
