Windows Azure and Cloud Computing Posts for 6/9/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

My Improper Billing for SQL Azure Web Database on Cloud Essentials for Partners Account saga continued with several 6/9/2011 updates. This is a lot of effort for a $10.00 refund, but the billing for my and others’ Windows Azure Platform Cloud Essentials for Partners benefits might be broken. So here’s the latest:

Update 6/9/2011 3:00 PM PDT: It appears as if I was right about the possibility of a billing snafu for Windows Azure Platform Cloud Essentials for Partners accounts:

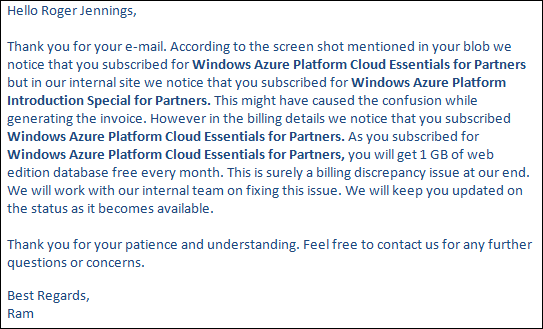

Update 6/9/2011 12:45 PM PDT: The saga continues after I sent a message about my questions to Ram:

![image[7] image[7]](http://lh3.ggpht.com/-d5KWtwl8oqw/TfEvgv3v7PI/AAAAAAAAL1M/XwyUXIcL_-o/image%25255B7%25255D%25255B6%25255D.png?imgmax=800)

The account in question is not Windows Azure Platform Introduction Special for Partners, it’s a Windows Azure Platform Cloud Essentials for Partners account, which requires considerable effort to obtain and enables one to use the Powered By Windows Azure logo (see my live OakLeaf Systems Azure Table Services Sample Project - Paging and Batch Updates Demo project as an example.) Here’s a screen capture for the subscription (at top):

![image[11] image[11]](http://lh4.ggpht.com/-ItGldzS0Gik/TfEvhEe29vI/AAAAAAAAL1Q/WNSVxtje7Qc/image%25255B11%25255D%25255B6%25255D.png?imgmax=800)

As far as I’ve been able to determine, there is no 90-day limit on use of a free SQL Azure Web database. See the Microsoft Windows Azure Cloud Essentials Pack screen capture below.

Mea Culpa: I was wrong about having no database in the SQL Azure server. I had a single database (plus master, which is free.)

Additional question: Why can’t I get rid of the Deactivated subscriptions?

This issue indicates to me that there is a bug in the billing system for my benefit.

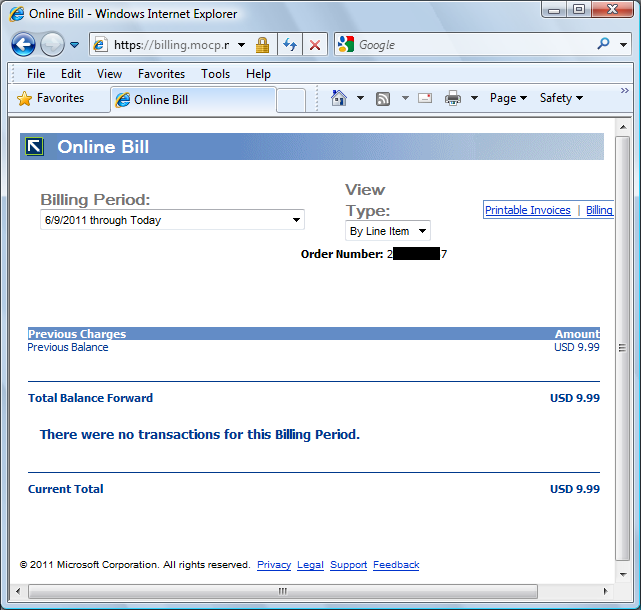

Update 6/9/2011 9:00 AM PDT: Credit takes a surprisingly long time to apply to account and longer to apply to credit card:

![image4[1] image4[1]](http://lh5.ggpht.com/-_Dqaq1zmlIY/TfEvhlG93wI/AAAAAAAAL1U/Rzn4kQ7SZyw/image4%25255B1%25255D%25255B5%25255D.png?imgmax=800)

My assumption was that the billing system was automated. Perhaps I was wrong.

Update 6/9/2011 7:30 AM PDT: An Azure Support Engineer responded as follows to my service request at 7:12 AM:

![image51[1] image51[1]](http://lh5.ggpht.com/-EHHSq0MRs18/TfEvjYeaeCI/AAAAAAAAL1Y/EzwemlkVjqA/image51%25255B1%25255D%25255B5%25255D.png?imgmax=800)

Ram’s response didn’t include answers to the three questions I asked in the service request, nor the requested description of the error, which should not have occurred with an automated billing system.

As of 9:15 AM PDT today, there was no indication of a refund for the previous month’s charge on my MOCP account page:

I’ve requested an explanation. Stay tuned. …

Mark Kromer (@mssqldude) is up to Part 4 of 5 of his All the Pieces: Microsoft Cloud BI Pieces series for SQL Server Magazine. Here are links with abstracts:

All the Pieces: Microsoft Cloud BI Pieces, Part 1

I’m going to try to expand a bit on the posting that I did last month describing different definitions of “Cloud BI” and what that means in Microsoft-speak. Starting here in part 1, I am going to walk through a complete example of building a Cloud BI solution using only Microsoft technologies from SQL Server, Azure and Office. By the end of the series, I will probably not be able to put a complete solution “in the Cloud”. If I want to build an analysis cube around my data, I can store the data in SQL Azure in the cloud, but my cube will have to be on-premises in SSAS or PowerPivot. But I can build a Cloud-based application and dashboard in Azure that is using data from SQL Azure, in the cloud, and I can now create cloud-based reports using Azure Reporting Services (CTP). Let’s start with the assumption that we’ve built a data mart in the Cloud using SQL Azure. We have transactional data from our stores in Adventure Works stored in a centralized data-center based SQL Server 2008 R2 database. ... Read the rest of entry »

All the Pieces: Microsoft Cloud BI Pieces, Part 2

In Part 1 of the series, I set-up a SQL Azure data mart and used SSIS to extract, transform & load (ETL) data from on-premises SQL Server 2008 R2 to a SQL Azure database. Azure allowed me to move my data into the cloud using the same tools that I use today for traditional SQL Server and I required no schema changes as well. In Part 2, I will begin performing some ad-hoc analysis on that Azure cloud database with PowerPivot. At this point in the project (click here for part 1), as I perform ad-hoc self-service business analysis using cloud data in my SQL Azure database, let me just point out to you that I (IMO) see this as a hybrid approach. As it stands today, I cannot create a PowerPivot cube or an SSAS cube in the cloud. But I can use Excel 2010 with the PowerPivot add-in to run powerful analysis on that data which is in the cloud and do so without needing any local on-premises infrastructure. No data marts, data warehouse, database of any kind. I am going to use a direct connection to ... Read the rest of entry »

All the Pieces: Microsoft Cloud BI Pieces, Part 3

In part 3 of this 5 part series, Mark examines the new Azure Reporting Services CTP (beta) that enables SSRS-like reporting services in the cloud with Windows Azure & SQL Azure. So far, in part 1 & part 2, we’ve talked about migrating data into data marts in SQL Azure and then running analysis against that data from on-premises tools that natively connect into the SQL Azure cloud database like PowerPivot for Excel. That approach can be thought of as a “hybrid” approach because PowerPivot is still requiring on-premises local infrastructure, such as PowerPivot, for the reporting. Now we’re going to build a dashboard that will exist solely in the cloud in Microsoft’s new Azure Reporting Services. This part of the Azure platform is only in an early limited CTP (beta), so you will need to go to the Microsoft Connect site to request access to the CTP: http://connect.microsoft.com/sqlazurectps. Think of Azure Reporting Services as SSRS in the cloud. You will author reports using the normal ... Read the rest of entry »

All the Pieces: Microsoft Cloud BI Pieces, Part 4

It is now time for part 4 of my 5-part series where I am walking you through the different Microsoft product and solution pieces to build a Microsoft Cloud BI solution. There are a few parts that I’ve called out thus far that are “hybrid”, i.e. not yet fully cloud-based. But today’s installment is going to focus on the presentation layer and we’re going to deploy these dashboards solely in the cloud, via Microsoft’s Azure platform. First, we’re going to use the new CTP (beta) of Azure Reporting Services to host a simple AdventureWorks dashboard that I built using Report Builder 3.0 in part 3 of this series. And, yes, that is currently a client tool that you need to have a local copy of to make this work. So we’re still quasi-complete in Cloud BI, still somewhat hybrid. But I’ll use our local copy of Visual Studio 2010 to build a simple ASP.NET application that will include the ReportViewer control hosting that Azure-based report, deployed in Windows Azure. Then another ASP.NET app will include ... Read the rest of entry »

Rachel Collier recommended that you Grab yourself a handful of SQL Azure how-to videos in a 6/9/2011 post to the MSDN UK Team blog:

For a good collection of webcasts and How Do I? videos on SQL Azure, take a trip over to MSDN. Here’s the line up:

- How Do I: Troubleshoot and Optimize Query in SQL Azure? (6 minutes 23 seconds)

- How Do I: Work with Spatial Data in SQL Azure? (4 minutes 15 seconds)

- How Do I: Calculate the cost of Azure database usage? (9 minutes 37 seconds)

- How Do I: Integrate an Existing Application with SQL Azure? – Part 1 (17 minutes 08 seconds)

- How Do I: Integrate an Existing Application with SQL Azure? – Part 2 (15 minutes 44 seconds)

- How Do I: Introducing the Microsoft Sync Framework Powerpack for SQL Azure? (11 minutes 23 seconds)

- How Do I: Use the RoleManager Class to Log SessionIDs in SQL Azure? (18 minutes 31 seconds)

- How Do I: Manage SQL Azure Firewall rules? (10 minutes 24 seconds)

<Return to section navigation list>

Marketplace DataMarket and OData

Core Solutions posted OData Where Art Thou? A Microsoft CRM Mystery Unravelled! to the CRM Software blog on 6/8/2011:

Recently, I took a look at the REST end point to provide a little CRM functionality that we’d developed for CRM 4. Imagine the following scenario: a person who is not an existing CRM contact sends you an email. Perhaps this person is a new contact at a sales prospect. You track the email in CRM and then proceed to create a contact record for that person. Now you associate the contact with the contact’s company or Account as it is known in CRM.

At this point you have a contact associated with an Account, but what is missing? The corporate mailing address! Our utility copies the account address to the contact record. Not too amazing, but useful. It’s the kind of customization we typically do for ourselves and our clients. So how does this scenario relate back to the REST endpoint I mentioned earlier?

For CRM 4 we created a Web service that when used performed a look up and returned the address of the parent Account. This web service had to run in its own web site. The client then made an AJAX call to the web service and the address magically appeared on the contact record. This worked well, but it does involve some development, error trapping, and of course testing once live on its own web site.

In comparison, the REST, or OData, method simply makes an AJAX call to the OData service and the contact record is updated. This provides the same functionality in a significantly shorter timeframe. A possible disadvantage to the OData method is that it only works from within CRM and therefore cannot be deployed to external code, but when working from within CRM, it can’t be beat. Contact Core today to see how our utilities can help improve your CRM experience.
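To make the OData approach concrete, here is a minimal sketch of the two pieces the scenario needs: the query URL for the parent account's address and the copy onto the contact. It is written in Python for illustration, whereas the article's client runs as an AJAX call inside CRM. The `OrganizationData.svc` path follows the CRM 2011 REST endpoint convention, but the attribute names in `ADDRESS_FIELDS` and both helper functions are assumptions and should be checked against your own organization's metadata.

```python
# Assumed attribute names for the account and contact address; verify them
# against the metadata of your own CRM organization before relying on them.
ADDRESS_FIELDS = (
    "Address1_Line1",
    "Address1_City",
    "Address1_StateOrProvince",
    "Address1_PostalCode",
)


def account_address_url(org_url: str, account_id: str) -> str:
    """Build an OData query URL that selects only the address fields."""
    select = ",".join(ADDRESS_FIELDS)
    return (
        f"{org_url.rstrip('/')}/XRMServices/2011/OrganizationData.svc/"
        f"AccountSet(guid'{account_id}')?$select={select}"
    )


def copy_address(account: dict, contact: dict) -> dict:
    """Return a copy of the contact with the account's address fields applied."""
    updated = dict(contact)
    for field in ADDRESS_FIELDS:
        if account.get(field) is not None:
            updated[field] = account[field]
    return updated
```

The `$select` option keeps the response small, which matters for a call that fires while a user is editing a form.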

by Core Solutions, a Massachusetts Microsoft Dynamics CRM Partner

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

Brent Stineman (@BrentCodeMonkey) explained Azure AppFabric Queues and Topics and his Cloud Cover video segment in a 6/8/2011 post:

First some old business…. NEVER assume you know what a SequenceNumber property indicates. More on this later. Secondly, thanks to Clemens Vasters and his colleague Kartik. You are both gentlemen and scholars!

Ok… back to the topic at hand. A few weeks ago, I was making plans to be in Seattle and reached out to Wade Wegner (Azure Architect Evangelist) to see if he was going to be around so we could get together and talk shop. Well he lets me know that he’s taking some well deserved time off. Jokingly, I tell him I’d fill in for him on the cloud cover show. Two hours later I get an email in my inbox saying it was all set up and I need to pick a topic to demo and come up with news and the "tip of the week".. that’ll teach me!

So here I was with just a couple weeks to prepare (and even less time as I had a vacation of my own already planned in the middle of all this). Wade and I both always had a soft spot for the oft maligned Azure AppFabric, so I figured I’d dive in and revisit an old topic, the re-introduction of AppFabric Queues and the new feature, Topics.

Service Bus? Azure Storage Queues? AppFabric Queues?

So the first question I ran into was trying to explain the differences between these three items. To try and be succinct about it… The Service Bus is good for low latency situations where you want dedicated connections or TCP/IP tunnels. Problem is that this doesn’t scale well, so we’ll need a disconnected messaging model and we have a simple, fairly lightweight model for this with Azure Storage Queues.

Now the new AppFabric Queues are more what I would classify as an enterprise level queue mechanism. Unlike Storage Queues, AppFabric Queues can be bound to with WCF as well as a RESTful API and .NET client library. There’s also a roadmap showing that we may get the old Routers back and some message transformation functionality. As if this wasn’t enough, AppFabric Queues are supposed to have real FIFO delivery (unlike Storage Queues’ “best attempt” FIFO) and full ACS integration.

Sounds like some strong differentiators to me.

So what about the cloud cover show?

So everything went ok, we recorded the show this morning. I was having some technical challenges with the demo I had wanted to do (look for my original goal in the next couple weeks). But I got a demo that worked (mostly), some good news items, and even a tip of the day. All in all things went well.

Anyways… It’s likely you’re here because you watched the show so here is a link to the code for the demo we showed on the show. Please take it and feel free to use it. Just keep in mind it’s only demo code and don’t just plug it into a production solution.

Being on the show was a great experience and I’d love to be back again some day. The Channel 9 crew was great to work with and really made me feel at ease. Hopefully if you have seen the episode, you enjoyed it as well.

My tip of the day

So as I mentioned when kicking off this update, never assume you know what a SequenceNumber indicates. In preparing my demo, I burned A LOT of my time and bugged the heck out of Clemens and Kartik. All this because I incorrectly assumed that the SequenceNumber property of the BrokeredMessages I was pulling from my subscriptions was based on the original topic. If I was dealing with a queue, this would have been the case. Instead, it is based on the subscription. This may not mean much at the moment, but I’m going to put together a couple posts on Topics in the next couple weeks that will bring it into better context. So tune back in later when I build the demo I originally wanted to demo on Cloud Cover.

Charles Young (@cnayoung) posted Windows Azure AppFabric May and June CTPs – A Summary on 6/2/2011:

I spent some time today summarising the new features in the Windows Azure AppFabric May CTP for SolidSoft consultants. Microsoft released the CTP a couple of weeks ago and has a second CTP coming out later this month. I might as well publish this here, although it has been widely blogged on already. There is nothing that you can’t glean from reading the release documents, but hopefully it will serve as a shorter summary.

The May CTP is all about the AppFabric Service Bus. The bus has been extended to support ‘Messaging’ using ‘Queues’ and ‘Topics’.

‘Queues’ are really the Durable Message Buffers previewed in earlier CTPs. MS has renamed them in this CTP. They are not to be confused with Queues in Windows Azure storage! Think of these as ‘service bus queues’. They support arbitrary content types, rich message properties, correlation and message grouping. They do not expire (unlike in-memory message buffers). They allow user-defined TTLs. Queues are backed by SQL Azure. Messages can be up to 256KB and each buffer has a maximum size of 100 MB (this will be increased to at least 1GB in the release version). To handle messages larger than 256KB, you ‘chunk’ them within a session (rather like BTS large message handling for MSMQ). The CTP currently limits you to 10 queues per service namespace.
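The chunking idea can be sketched as follows. This is an illustrative Python model, not the Service Bus API: the `Chunk` type and both functions are hypothetical, and it assumes the quoted 256KB limit applies to the message body alone. Each chunk carries a shared session identifier, its position and the total count, so a receiver can reassemble the payload even if chunks arrive out of order.

```python
import uuid
from dataclasses import dataclass

MAX_BODY_BYTES = 256 * 1024  # per-message limit quoted above (assumed to cover the body only)


@dataclass
class Chunk:
    session_id: str  # groups all chunks of one large payload
    index: int       # position of this chunk within the session
    total: int       # number of chunks in the session
    body: bytes


def split_into_chunks(payload: bytes, max_body: int = MAX_BODY_BYTES) -> list[Chunk]:
    """Split a payload into chunks that each fit within the size limit."""
    session_id = uuid.uuid4().hex
    pieces = [payload[i:i + max_body] for i in range(0, len(payload), max_body)] or [b""]
    return [Chunk(session_id, i, len(pieces), piece) for i, piece in enumerate(pieces)]


def reassemble(chunks: list[Chunk]) -> bytes:
    """Rebuild the payload, rejecting mixed sessions and missing chunks."""
    if not chunks:
        raise ValueError("no chunks to reassemble")
    if len({chunk.session_id for chunk in chunks}) != 1:
        raise ValueError("chunks belong to different sessions")
    ordered = sorted(chunks, key=lambda chunk: chunk.index)
    if [chunk.index for chunk in ordered] != list(range(ordered[0].total)):
        raise ValueError("session is incomplete")
    return b"".join(chunk.body for chunk in ordered)
```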

Service Bus queues are quite similar to Azure Queues. They support a RESTful API and a .NET API with a slightly different set of verbs – Send (rather than Put), Read and Delete (rather than Get), Peek-Lock (rather than ‘Peek’) and two verbs to act on locked messages – Unlock and Delete. The locking feature is all about implementing reliable messaging patterns while avoiding the use of 2-phase-commit (no DTC!). Queue management is very similar, but configuration is done slightly differently. AppFabric provides dead letter queues and message deferral. The deferral feature is a built-in temporary message store that allows you to resolve out-of-order message sequences. Hey, this stuff is actually beginning to get my attention!
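The peek-lock pattern described above can be modelled in a few lines. The sketch below is an in-memory Python illustration only; the `PeekLockQueue` class and its method names are hypothetical and mirror the verbs in the text rather than the actual .NET or REST API. Lock timeouts, dead lettering and deferral are left out.

```python
import itertools
from collections import deque


class PeekLockQueue:
    """In-memory model of the Send / Peek-Lock / Unlock / Delete verbs."""

    def __init__(self):
        self._ready = deque()             # messages available to receivers
        self._locked = {}                 # lock token -> message held by a receiver
        self._tokens = itertools.count(1)

    def send(self, body):
        self._ready.append(body)

    def peek_lock(self):
        """Hand out the next message without removing it permanently."""
        if not self._ready:
            return None
        body = self._ready.popleft()
        token = next(self._tokens)
        self._locked[token] = body
        return token, body

    def unlock(self, token):
        """Processing failed: put the message back at the head of the queue."""
        self._ready.appendleft(self._locked.pop(token))

    def delete(self, token):
        """Processing succeeded: remove the locked message for good."""
        del self._locked[token]
```

A receiver calls `peek_lock`, does its work, and then calls `delete` on success or `unlock` on failure. Because the message only disappears after the explicit `delete`, a crashed receiver cannot lose it, which is how the pattern avoids a distributed transaction.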

Today’s in-memory message buffers will be retained for the time being. MS is looking at how much advantage they provide as low-latency non-resilient queues before making a decision on their long-term future. This is beginning to sound like the BizTalk Server low-latency debate all over again! Currently, the documented recommendation is that we migrate to queues.

‘Topics’ provide new pub/sub capabilities. A topic is…drum roll please…a queue! The main difference is that it supports subscription. I assume it has the same limitations and capabilities as a normal queue, although I haven’t seen this stated. It is certainly built on the same foundation. You can have up to 2000 subscriptions to any one topic and use them to fan messages out. Subscriptions are defined as simple rules that are evaluated against user and system-defined properties of each message. They have a separate identity to topics. A single subscription can feed messages to a single consumer or can be shared between multiple consumers. Unlike Send Port Groups in BizTalk, this multi-consumer model supports an ‘anycast’ model for competing consumers where a single consumer gets a message on a first-come-first-served basis. MS invites us to think of a subscription as a ‘virtual queue’ on top of the actual topic queue. Potential uses for anycasting include basic forms of load balancing and improved resilience.
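A small in-memory model may help picture the ‘virtual queue’ idea. The Python sketch below is an illustration under stated assumptions, not the Service Bus API: the `Topic` and `Subscription` classes are hypothetical, messages are plain dictionaries of properties, and a rule is any function that accepts a message and returns true or false.

```python
from collections import deque
from typing import Callable

Message = dict
Rule = Callable[[Message], bool]


class Subscription:
    """A 'virtual queue' that holds only the messages matching its rule."""

    def __init__(self, rule: Rule):
        self.rule = rule
        self._messages = deque()

    def offer(self, message: Message) -> None:
        if self.rule(message):
            self._messages.append(message)

    def receive(self):
        """Competing consumers call this; each message goes to one caller."""
        return self._messages.popleft() if self._messages else None


class Topic:
    def __init__(self):
        self._subscriptions = {}

    def subscribe(self, name: str, rule: Rule) -> Subscription:
        subscription = Subscription(rule)
        self._subscriptions[name] = subscription
        return subscription

    def send(self, message: Message) -> None:
        # Fan out: every subscription evaluates its own rule against the message.
        for subscription in self._subscriptions.values():
            subscription.offer(message)
```

Two consumers that both call `receive` on the same subscription split its messages between them (the anycast case), while consumers on different subscriptions each see their own copy of every matching message (the fan-out case).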

The CTP supports AppFabric Access Control v2.0. It is fully backward-compatible with the current service bus capabilities in AppFabric.

The CTP does not have load balancing and traffic optimization for relay. These were in earlier CTPs, but have been removed for the time being. They may reappear in the future.

June CTP

The June CTP will introduce CAS (Composite Application Services). CAS is a term used by other vendors (e.g., SAP) for similar features, and has been a long time coming in the Microsoft world. The basic idea is that you build a model of a composite application, the services it contains, its configuration, etc., and then drive a number of tasks from this model such as build and deployment, runtime management and monitoring. Some of us remember an ancient Channel 9 video on a BizTalk-specific CAS-like modelling facility that MS were working on years ago. It was entirely BizTalk-specific and never saw the light of day. However, one connection to make is that CAS will provide capabilities that are conceptually related to the notion of ‘applications’ in BizTalk Server.

We will get a graphical Visual Studio modeling tool to design and manage CAS models. The CAS metamodel is implemented as a .NET library, allowing models to be constructed programmatically. Models are consumed by the AppFabric Application Manager in order to automate deployment, configuration, management and monitoring of composite applications.

So, things are rapidly evolving. However, we won’t see anything on Integration Services until, I suspect, next year. It’s important to remember that the May CTP is all about broadening the existing Service Bus with messaging capabilities, rather than about delivering an integration capability. So, even though we are seeing more BizTalk Server-like features, we are still a long way off having what Burley Kawasaki called a “true integration service” in the cloud. Obviously, Azure Integration Services will exploit and build on the Service Bus, but a lot more needs to be done before we have integration-as-a-service as part of the Azure story.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Linxter issued on 6/9/2011 a New In-App Purchasing and Mobile Payment Capabilities for Windows Phone 7 on CloudMiddleware.com press release via BusinessWire:

Linxter, Inc., provider of message-oriented cloud middleware, today announced the version 2.0 release of CloudMiddleware.com, its cloud integration hub running on the Windows Azure platform. Through integration with PayPal, the Cloud Middleware hub provides credit card payment capabilities, enabling application developers to implement both in-app purchasing and mobile payment solutions.

“We’re excited to see our PayPal X developers continue to innovate on a range of platforms,” said Naveed Anwar, PayPal’s senior director of X.Commerce Developer Network. “CloudMiddleware.com is creating a whole new way for developers to add payment processing into their apps on the Windows Azure platform.” [Emphasis added.]

“A cloud middleware integration platform is ideal because it reduces technical barriers and lowers costs,” stated Jason Milgram, founder and CEO of Linxter. “Adding easy-to-implement, secure, reliable financial transaction capabilities to our Cloud Middleware offering ushers in a new wave of innovation in product development.”

The Cloud Middleware pre-built integration services hub brings a range of new possibilities to Windows Phone 7 app developers, by eliminating the need to learn the specifics of each Application Programming Interface (API) they wish to utilize. Instead, developers use cloud messaging to send a simple message to any of their private, secure, cloud services.

“Integration services are a fast-growing segment in cloud computing,” commented Nicole Denil, Director, Americas, Windows Azure Platform at Microsoft Corp. “We are excited to see a solution like CloudMiddleware.com being brought to life on the Windows Azure platform.”

The first two private services created on a CloudMiddleware.com account are free. The service requires a Linxter cloud messaging subscription, which is available with a 30-day free trial, after which $5 per month covers 1,000,000 secure, reliable, durable transactional data exchanges.

“Running on the Windows Azure platform, Cloud Middleware is able to massively scale up during high volume spikes and scale down when processing loads are lower,” added Milgram. “This enables us to bring a Just-in-Time (JIT) operations model to our compute infrastructure needs, lowering costs and providing consistent availability to our business customers.”

About Linxter, Inc.:

Linxter, Inc. was established to make the Internet of Things a secure and reliable reality. A message-oriented cloud middleware platform, Linxter removes the complexities and costs for developers when implementing secure and reliable communication among distributed applications, devices, and systems. Linxter, Inc. is headquartered in Cooper City, Florida. For more information, visit http://www.linxter.com or call Stephanie Helf at 800.490.1363.

The Windows Azure Team reported JUST ANNOUNCED: GreenButton Expands Global Reach, Underscores Value of Windows Azure in Exclusive Alliance with Microsoft in a 6/9/2011 post:

New Zealand-based InterGrid’s GreenButton plugin technology cleverly offloads compute and data intensive tasks from applications and distributes them to the cloud via the Windows Azure platform. This helps companies enter the cloud faster than they would if they progressed on their own, and enables smaller companies to tap computing resources that would traditionally be beyond their reach.

InterGrid just announced it has signed an exclusive global alliance with Microsoft that will enable Microsoft to promote GreenButton to its customers globally. The agreement also reinforces the use of the Windows Azure platform to power the GreenButton solution and provide end-to-end solution management.

As Doug Hauger, GM for Windows Azure, explains, “with GreenButton, companies have access to on-demand high performance computing resources without the burden of costly hardware and management costs. By leveraging the power of Windows Azure, GreenButton is creating a unique solution that can be easily customized to grow with the needs of its customers across a multitude of verticals and workloads.”

The application is already embedded in six software products, including Auckland 3-D rendering firm Right Hemisphere’s Deep Exploration software, and has more than 4,000 registered users in more than 70 countries.

To learn more about this announcement, click here to read the full press release. Click here to learn more about GreenButton and InterGrid.

Cory Fowler (@SyntaxC4) posted Talking PHP on Windows Azure on 6/8/2011:

A while back I had the opportunity to talk to Richard Campbell and Carl Franklin of DotNetRocks. The show focuses on how to run PHP applications on Windows Azure.

Check out DotNetRocks: Episode 651.

One of the things that I talk about during the show is the PHP SDK for Windows Azure. What I forgot to mention was all the hard work Fellow MVP Maarten Balliauw has put into the Development and Maintenance of the PHP SDK for Windows Azure.

If you’d like to find out how to install PHP to run on Windows Azure read my 3 part blog series:

- Installing PHP on Windows Azure Leveraging Full IIS Support: Part 1

- Installing PHP on Windows Azure Leveraging Full IIS Support: Part 2

- Installing PHP on Windows Azure Leveraging Full IIS Support: Part 3

Other great resources for PHP on Windows Azure:

If you’d like to see me continue some investigation of PHP running on Windows Azure, Please contact me on twitter.

<Return to section navigation list>

Visual Studio LightSwitch

No significant articles today.


<Return to section navigation list>

Windows Azure Infrastructure and DevOps

David Linthicum (@DavidLinthicum, pictured below) asserted “As the Goldilocks fable taught us, you need to pick the path that is just right for your business” as a deck for his Too cloud-averse or too cloud-eager: Either way, you're fired post of 6/9/2011 to InfoWorld’s Cloud Computing blog:

I thought CIO magazine's Bernard Golden did a great job of highlighting the threats and opportunities for cloud computing for both the rank-and-file workers in enterprises, as well as IT executives: "CIOs and senior IT managers are not immune from the employment risks that cloud computing poses to lower-level infrastructure and operations workers. Failing to rethink the delivery of IT services -- and the new organizational structures that will be needed to deliver them--poses a threat to their job security."

The essence of this issue is that the way we deliver IT resources -- including infrastructure, applications, and development -- will change in the near future. Your ability to get ahead of these changes is directly related to your success. On the other hand, if you ignore the potential value of the cloud, you could come to be known as non-innovative and a hindrance to productivity. And that is a quick path to a seat near the door.

CIOs tend to take one of two paths:

- There is the CIO who believes that what he or she oversees in the enterprise is optimal to the needs of the business, and new technology trends such as cloud computing won't be helpful or the trend will not be validated until it is adopted by the majority of corporate America. In some areas, such a CIO may be right, but not in all. If there is no understanding of the potential value, then missed opportunities are also not understood. Clearly, the cloud should not be force-fitted in most enterprises, but there is value in specific areas where the technology should be applied. For a CIO on this path, the cloud will get him or her fired for not paying attention to its value.

- There is the CIO who loves whatever is new and hyped, and he or she tries to apply it everywhere, no matter if there is a real need. Although you would think that this type of CIO would get big innovation points, you'll find that as the number of failed projects racks up, such a CIO is quite vulnerable. For a CIO on this path, the cloud will get him or her fired for overapplying the technology.

The right path is one of a change agent, working within the organization to determine the value of cloud computing and the right path for your enterprise. This typically means splitting the difference between ignoring and overusing cloud computing. Moreover, this also means focusing on all levels of the organization and mapping a path from the existing state to the future state of using the cloud.

This is not just a technology change; it's about organizational and cultural changes as well. If you don't get ahead of this trend, you could find that your job is at risk.

Ignacio M. Llorente described A Strategic Framework for Cloud Migration in a 6/8/2011 post:

I am often asked about the steps to be taken and the issues to be considered for a successful migration to cloud computing. There is no magic formula to it; the specific steps will depend on your internal structure, industry and differentiation in the market. As a general framework, I usually recommend the Decision Framework for Cloud Migration described in the Federal Cloud Computing Strategy together with a thorough study and comparison of cloud providers tailored to your needs and requirements. The three main guidelines would be:

- Shifting from Infrastructure to Service Management. This is a shift in mindset of the IT staff. We should be able to define our needs in terms of services (applications) and their expected quality of service. IT staff usually express their needs and requirements in infrastructure terms: “I need 4 physical boxes to run the web server for a new site.” However, needs should be described in terms of service elasticity rules, that is, in terms of service level objectives using key performance indicators: “In order to ensure an optimal quality of service, I need to automatically scale the number of servers when the average CPU utilization of the running web servers exceeds a given threshold.”

- Prioritizing Services that Are Best Suited for Migration. This prioritization should be performed according to each service’s readiness to be executed on the cloud; its affinity to the cloud model in terms of security, performance, relevance and duration; and the expected gain in terms of costs, performance, quality, agility, and innovation.

- Selecting the Best Cloud Provider. This selection is critical because, given the current lack of interoperability and portability, a change to another provider in the future may be time-consuming and expensive. Besides the Price-Performance-Reliability metric, the following aspects should be considered: data protection, privacy and regulatory issues; support for business continuity; and level of control exposed to users. You could also conclude that the best solution is to use different providers for different workloads.

These three guidelines constitute a very simple model to support organizations in adopting cloud computing. I will elaborate on each one shortly.
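The service elasticity rule in the first guideline can be written down as a small function. The Python sketch below is illustrative only: the function name, the threshold values and the server limits are assumptions, and the scale-in branch is an addition that the guideline itself does not mention.

```python
from statistics import mean


def desired_server_count(
    cpu_percentages,
    scale_out_above=75.0,   # assumed threshold; the guideline only says "a given threshold"
    scale_in_below=25.0,    # assumed; scale-in is not part of the original rule
    min_servers=1,
    max_servers=10,
):
    """Return the target number of web servers for the observed CPU load.

    cpu_percentages holds one CPU utilization reading per running server.
    """
    current = len(cpu_percentages)
    if current == 0:
        return min_servers
    average = mean(cpu_percentages)
    if average > scale_out_above:
        target = current + 1
    elif average < scale_in_below:
        target = current - 1
    else:
        target = current
    # Keep the result within the configured limits.
    return max(min_servers, min(max_servers, target))
```

Stating the need this way leaves the number of physical boxes to the platform; the IT staff only own the key performance indicator and its thresholds.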

Ignacio is one of the pioneers and world's leading authorities on Cloud Computing.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Matthew Weinberger (@MattNLM) updated his Fujitsu Powers Global Cloud Platform with Windows Azure article for the TalkinCloud blog on 6/9/2011:

Fujitsu and Microsoft have issued the joint announcement that August 2011 is the target date for the launch of the Global Cloud Platform service powered by Windows Azure – the culmination of a partnership established in July 2010. Under the deal, the Fujitsu Global Cloud Platform will be able to deploy Azure PaaS clouds and services from its data center in Japan. It’s the first official production release of the Windows Azure platform appliance – and practically the first time Microsoft has let a marquee cloud solution out from under its thumb.

First, a TalkinCloud mea culpa: When I reported on the launch of the Fujitsu Global Cloud Platform IaaS offering in North America back at the end of May, a commenter (rightly) asked for clarification on the mention of Fujitsu’s Azure offering. It appears I misunderstood my contact at Fujitsu: The company was piloting the new Fujitsu-Microsoft Azure offering with 20 Japanese customers, but it wasn’t available when I wrote that. [Emphasis added.]

But now, Fujitsu can offer any enterprise in Japan a domestically hosted cloud with compute and storage resources handled by Azure and built on its Microsoft-friendly FGCP/A5 application and data framework, according to the press release. And Fujitsu is also offering customers managed and value-add services around deployment, application migration and overall administration.

Prices will start at 5 yen per hour for an Azure “Extra Small”-equivalent instance, and Fujitsu is hoping to recruit 400 enterprise companies, 5,000 SMEs and ISVs in a five-year period after the service launches. For many, many more details, especially around the technical aspects, I suggest you take a look at that press release.

To me, this announcement is just further proof of two things: First, the Japanese cloud market isn’t going to show shrinkage anytime soon. And second, Microsoft’s willingness to play ball with Fujitsu shows that its commitment to growing its cloud vision overcomes even its notorious sense of pride.

I was the “commenter.”

<Return to section navigation list>

Cloud Security and Governance

Adam Bridgewater reported New cloud requirements standards laid down to ComputerWeekly.com’s Open Source Insider blog on 6/9/2011:

The Open Data Center Alliance has announced what it calls a "major milestone" in its mission to drive open, interoperable cloud solutions. With 280 member companies in tow, the group has formed workgroups and aligned with key industry standards bodies.

Yes yes, well done, but so what?

The organisation has just published its initial cloud requirements documents -- that's what. This is the first user-driven requirements documentation for the cloud based on member prioritisation of the most pressing challenges facing IT.

"This release will shape member purchasing and outline requests to vendors and solutions providers to deliver leading cloud and next-generation data center solutions," said the organisation, in a statement.

"The speed at which the organization formed and delivered the initial usage models sends a clear message to the cloud industry on how IT is planning to prioritise its data center and cloud planning along with the organization's commitment to solve real challenges," said Matthew Eastwood, group vice president, Enterprise Platform Research at IDC.

The first publicly-available documents published by the Open Data Center Alliance include eight Open Data Center Usage Models which define:

- IT requirements for cloud adoption and,

- An Open Data Center Vision for Cloud Computing.

These lay out a plan to enable federation, agility and efficiency across cloud computing while identifying the specific innovations in secure federation, automation, common management and policy and solution transparency required for widespread adoption of cloud services.

"I would have to agree with the Open Data Center Alliance (ODCA) report released this week and say that automation is definitely a fundamental requirement for those looking to adopt cloud computing. In fact, I would argue that to move to the cloud but still rely on manual processes to provide management is a little like buying a Superbike and then pushing it everywhere -- the manual intervention will always be the bottleneck preventing full exploitation of the benefits," said Terry Walby, UK managing director, IPsoft.

"Automation services can help set parameters to provision and resource those processes that are important to an organisation; whether that be reduction in latency issues, managing server overloads or the allocation of bandwidth to those who need it most. It is through automation that businesses will be able to unlock the full benefits and elasticity which cloud provides."

“So what?” is right on. 280 member companies doesn’t give this group substantial standing in as large a market as cloud computing, in my opinion.

Robert X. Cringely posted When Engineers Lie to his I, Cringely blog on 6/9/2011:

Twenty years ago, when I was writing Accidental Empires, my book about the PC industry, I included near the beginning a little rant about how good engineers were incapable of lying, because their work relied on Terminal A being positive and not negative and if they lied about such things then nothing would ever work. That was before I learned much about data security, where apparently lying is part of the game. Well, based on recent events at RSA, Lockheed Martin, and other places, I think lying should not be part of the game.

Was there a break-in? Was data stolen? Was there an unencrypted database of SecurID seeds and serial numbers? All we can say at best is that we don’t really know. And in some quarters that is supposed to make us feel more secure because it means the bad guys are equally clueless. Except they aren’t, because they broke in, they stole data, and they knew what the data was good for while we, including SecurID customers it seems, are still mainly in the dark.

A lot of this is marketing — a combination of “we are invincible” and “be afraid, be very afraid.” But a lot of it is intended also to keep us locked-in to certain technologies. To this point most data security systems have been proprietary and secret. If an algorithm appears in public it escaped, was stolen, or reverse-engineered. Why should such architectural secrecy even be required if those 1024- or 2048-bit codes really would take a thousand years to crack? Isn’t the encryption, combined with a hard limit on login attempts, good enough?

Good question.

Alas, the answer is “no.” There are several reasons for this but the largest by far is that the U.S. government does not want us to have really secure networks. The government is more interested in snooping in on the rest of the world’s insecure networks. The U.S. consumer can take the occasional security hit, our spy chiefs rationalize, if it means our government can snoop global traffic.

This is National Security, remember, which means ethical and common sense rules are suspended without question.

RSA, Cisco, Microsoft and many other companies have allowed the U.S. government to breach their designs. Don’t blame the companies, though: if they didn’t play along in the U.S. they would go to jail. Build a really good 4096-bit AES key service and watch the Justice Department introduce themselves to you, too.

The feds are so comfortable in this ethically-challenged landscape in large part because they are also the largest single employer… on both sides. One in four U.S. hackers is an FBI informer, according to The Guardian. The FBI and Secret Service have used the threat of prison to create an army of informers among online criminals.

While security dudes tend to speak in terms of black or white hats, it seems to me that nearly all hats are in varying shades of gray.

The U.S. government is a big supporter of IPv6, yet the National Security Agency isn’t. Cisco best practices for three-letter agencies, I’m told, include disabling IPv6 services. From the government’s perspective, their need to “manage” (their term, not mine — I would have said “control”) is greater than their need to engineer clean solutions. IPv6 is messy because it violates many existing management models.

Yet there is good news, too, because IPv6 and Open Source are beginning to close some of those security doors that have been improperly propped open. The Open Source community is building business models that may finally put some security in data security.

The key winners are going to be those companies that embrace IPv6 as a competitive advantage. IPv6-ready outfits in the U.S. include Google, AT&T, and Verizon. Yahoo and Comcast still have work to do. Apple has been ready for years.

Some readers will question why I appear to be promoting the undermining of U.S. intelligence interests. Why would I promote real data security if what we have now is working so well for our spy agencies?

I’m not a spy, for one thing, but if I was a spy and trying to keep my secrets secret I wouldn’t buy any of these products. I’d roll my own, which is what I think most governments have long done. So the really deep dark secrets were probably always out of reach, meaning most low-hanging fruit is simple commercial data like the 125+ million credit card numbers stolen so far this year from Sony, alone.

If the NSA needs my credit card information let them show me why. I think they don’t need it.

We’ve created a culture of self-perpetuating paranoia in military-industrial data security by building systems that are deliberately compromised then arguing that draconian measures are required to defend these holes we’ve made ourselves. This helps the unquestioned three-letter agencies maintain political power, doing little or nothing to increase national security, while at the same time compromising personal security for all of us.

There is no excuse for bad engineering.

<Return to section navigation list>

Cloud Computing Events

Martin Tantow reported CloudTimes Joins Cloud Control Conference as a Sponsor in a 6/8/2011 post to the CloudTimes blog:

Not Your Ordinary Cloud Conference

The market is flooded with generic conferences and trade shows. We have all attended them; a few are entertaining, others overwhelming and some simply useless. The sales pitch hits you in the face like a brick wall as you cross the carpeted threshold of the Exhibitor gauntlet. Education as a concept is thrown out the window and replaced with some sort of hybrid presentation that incorporates a hard sell with tidbits of fleeting wisdom.

The Cloud Control Conference debuting in Boston this July is a refreshing splash within the ocean of cloud conferences that have emerged over the past year or so. Unlike other exhibitor-driven functions, the Cloud Control Conference focuses on educating today’s Enterprises about the benefits of cloud computing, the risks and obstacles involved with a cloud migration and some technical aspects that new users must be aware of as they make their approach. The conference’s focus on education comes in the form of use cases and case study presentations. The agenda is full of non-service provider entities discussing their experiences with migration and management.

To kick off the event, Adam Swidler of Google Enterprise will be co-presenting with his customer Chet Loveland, CISO of MeadWestvaco, a multinational Fortune 500 manufacturing corporation. Loveland and Swidler will discuss the process and deployment of Google’s cloud-based services within MWV’s existing IT architecture. An exciting case study presentation will also be delivered by NASA CIO James Williams. Williams will analyze the evolution of the NASA Nebula program and its function. Nebula combines the computing power of a container data center with the scalability of cloud computing, providing NASA researchers with immense computing power ready with the flick of a switch. Back in 2009, federal CIO Vivek Kundra stated that Nebula was an example of the government’s ability to “leverage the most innovative technologies.”

Another interesting company that will be discussing the use case of cloud computing is CrowdFlower. CrowdFlower is the largest crowdsourcing company out there and is breaking down the barriers of the way the enterprise conducts tasks. The Founder and CEO Lukas Biewald will be presenting, not on crowdsourcing’s effect on industry, but on the cloud’s effect on the technology. This case study will highlight cloud computing’s role as the catalyst behind new business models and concepts that will change the way our world sees connectivity, work and productivity.

Leaders in the industry will also be involved, diving deeper into the mechanics and technical operations of the cloud and the security implications it brings about. Market leaders such as Rackspace, AT&T, Unisys and Akamai will all be included in the discussion.

To give the conference a little spark for industry professionals looking for an exciting cloud meetup, the Cloud Control Conference is co-located with the popular Green Data Center series. GDCON covers energy efficiency technologies in the data center, and will highlight leading facilities experts discussing the importance of reducing the carbon footprint of our computing centers. The educational overtones and options available at both conferences give delegates something to grab hold of, take back to their organization and begin feeling the impact of cost and energy savings.

CloudTimes is a media sponsor of the Cloud Control Conference. The Cloud Control Conference and exposition will be debuting in Boston on July 19-21 [at the Boston Logan Hilton hotel].

YACCC: Yet another cloud computing conference.

Joel Foreman (@slalom) announced on 6/8/2011 a Webcast: Microsoft Enterprise Cloud Solutions – Real World Experiences to take place on 6/9/2011:

Cloud computing is a top priority for CIOs and businesses today. Did you know that IDC is predicting that spending on Cloud Computing solutions will reach $55 Billion by 2014? Microsoft continues to invest heavily in the cloud solutions across all products and technology capability.

My fellow Slalom consultant Mo Ramsey and I will be delivering an informative session on behalf of Slalom Consulting and Microsoft exploring cloud trends and how Microsoft technology can help you respond to rapid business needs. The session will be delivered live via a webcast on June 9th. You can register via this link. But don’t worry if you miss it. I will be updating this post with a link to the recorded webcast, where you can view it on-demand.

During the session we will provide an overview of Microsoft offerings across Infrastructure, Application, and Platform needs. We will also provide an account of common trends and real world success stories with Microsoft solutions and how many companies are adopting cloud solutions to rapidly solve common business problems.

Slalom Consulting is a market leader in Microsoft Cloud Computing Solutions and Microsoft’s 2010 U.S. Partner of the Year.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Alex Williams asked Is the EcoPOD the World's Greenest Data Center? in a 6/9/2011 post to the ReadWriteCloud:

HP introduced a new modular data center at HP Discover this week that resembles a double wide mobile home with a cooling system that the company says has 95% better efficiency than the monolithic data centers built over the past 20 years.

The cooling system for the HP EcoPOD is designed to adapt to IT loads and outside conditions. For the most part, it uses air it pulls into the EcoPOD.

Here's a hologram of the HP EcoPOD that I shot at HP Discover this week. There is a bit about the particulars of the EcoPOD in the video.

The container market is a crowded one as Rich Miller points out in a blog post this week on Data Center Knowledge. HP maintains its Power Usage Effectiveness (PUE) rating is as low as 1.05. That's pretty low. But is it the lowest?
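For readers unfamiliar with the metric, PUE is the ratio of total facility power to the power delivered to IT equipment, so a rating of 1.05 means roughly 5% overhead for cooling, power distribution and the like. A minimal Python sketch of that arithmetic follows; the 1,000 kW IT load and the function names are hypothetical, chosen only to make the numbers easy to read, and are not HP figures.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_kw(it_equipment_kw: float, pue_value: float) -> float:
    """Power spent on everything other than IT equipment at a given PUE."""
    return it_equipment_kw * (pue_value - 1.0)


# Hypothetical example: a 1,000 kW IT load drawing 1,050 kW at the facility level.
example_pue = pue(1050.0, 1000.0)
example_overhead = overhead_kw(1000.0, example_pue)
```

Under these assumed numbers the facility spends about 50 kW on non-IT overhead, which is why differences of a few hundredths in PUE are treated as significant.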

Setting aside the PUE Olympics, the efficiency ratings for the EcoPOD certainly place it on a competitive footing with other modular designs offering free cooling, a group including SGI ICE Cube, BladeRoom, i/o Anywhere, the Dell DCS modular unit and even Microsoft's IT-PAC design, which has recently been licensed to Savier. The growing number of players in the modular market is creating a virtuous cycle in which competitors are continually revising their designs to enhance their efficiency and usability.

HP touts the cost savings of the modular technology, which it says costs $8 million, compared with $33 million for building a data center from the ground up. Those are impressive numbers but it is important to remember that this is a bit of a horse race. Competition is fierce in this data center boom time.

The new data centers represent transformation in IT but it really is a commodity game, a race for the most efficient data center technology at the lowest price. This is highlighted by projects such as OpenCompute, the data center initiative that Facebook launched. The goal for that project is to show how open systems can be used to create an efficient data center. That's a philosophy shared by HP and one we can expect to be more common as infrastructures become standardized to support application innovation.

Still, the HP EcoPOD is an innovation for HP. It plays on the need for new data centers that have efficiency and power savings as the top priority.

David Ohara (@greenm3) described Amazon’s Data Center Container "Perdix" something we haven’t seen in a 6/8/2011 post to his Green (Low Carbon) Data Center Blog:

Yesterday I went to Amazon’s Technology Open House. [See post below for links to James Hamilton’s slides.]

Here is a 1/4 of the crowd getting food and drinks early before James Hamilton’s keynote.

In James’s presentation he has a section on Modular & Advanced Building Designs

Every day, Amazon Web Services adds enough new capacity to support all of Amazon.com’s global infrastructure through the company’s first 5 years, when it had $2.7 billion in annual revenue.

James presents his latest observations on data center costs.

And waste in mechanical systems.

But here is something I didn’t expect: Amazon Perdix, Amazon’s version of a modular pre-fab container data center. The picture below has Microsoft’s design on the left and Amazon’s on the right.

James is a believer in low density, 30 servers per rack where the cost per server is $1,450 or less.

James Hamilton posted links to the slides from his Amazon Technology Open House presentation of 6/7/2011 on 6/9/2011:

The Amazon Technology Open House was held Tuesday night at the Amazon South Lake Union Campus. I did a short presentation on the following:

- Quickening pace of infrastructure innovation

- Where does the money go?

- Power distribution infrastructure

- Mechanical systems

- Modular & Advanced Building Designs

- Sea Change in Networking

Slides and notes:

Slides are posted at: http://mvdirona.com/jrh/TalksAndPapers/JamesHamilton_AmazonOpenHouse20110607.pdf

Coverage on Data Center Knowledge: http://www.datacenterknowledge.com/archives/2011/06/09/a-look-inside-amazons-data-centers/

Coverage by Dave Ohara on GreenM3: http://www.greenm3.com/gdcblog/2011/6/8/amazonrsquos-data-center-container-quotperdixquot-something.html

Derrick Harris (@derrickharris) reported HP wants to challenge Amazon for cloud developers in a 6/7/2011 post to GigaOM’s Structure blog:

Systems giant HP (s hpq) today announced a slew of new enterprise cloud products and services, but it won’t be until later this summer that we’ll see just how big a role HP will play in the cloud computing space. Everyone knows HP can compete against IBM, BMC and CA selling cloud software to large companies, but can it compete against Amazon Web Services (s amzn) in wooing developers to the public cloud? It’s certainly going to try.

In March, HP CEO Leo Apotheker announced that HP will offer its own Infrastructure as a Service, Platform as a Service and cloud storage services, some of the details of which were leaked last month. When I spoke today with Patrick Harr, HP’s vice president of cloud solutions, he acknowledged those services are coming this summer and explained that they’ll primarily target developers and will compete with AWS.

There will of course be an HP spin on the services, which is that they’ll utilize some of HP’s expertise in security and availability, and will be integrated with HP’s overall cloud portfolio. According to Harr, that could mean many things, including being available through HP’s hybrid-cloud management software as a resource option for low-priority applications. At Structure 2011, we’ll discuss a number of efforts to make public cloud computing and storage more palatable for important applications and data, and HP sounds like it might help drive those use cases. But make no mistake, HP intends to offer a pure cloud play without the trappings of legacy infrastructure.

Harr acknowledges this is new ground for HP, but his experience tells him that HP can actually be competitive with developer-first cloud providers like AWS. For one, Harr, who previously served as CEO of cloud storage pioneer Nirvanix, thinks that scale will be a driving factor in determining which providers fare the best when providing commodity services such as simple compute and storage. And while he said that Nirvanix had to adopt an enterprise focus to differentiate because it couldn’t buy disk drives for less than Amazon could, that’s not the case with HP.

But winning developers will also require institutional buy-in from HP to prove that it’s not the stodgy, slow-moving vendor that many make it out to be. Harr said he questioned whether HP was ready for such a move when he came, but that progress has been fairly rapid in spreading the web-first principles to the other aspects of HP’s cloud strategy. Additionally, he said the cloud services business is operating much like its own business, which allows it the freedom to bring in the right people and pursue its own agenda without getting too caught up in legacy bureaucracy.

The highlights of today’s HP cloud news are CloudAgile — a service aimed at helping service providers build and offer cloud services — and CloudSystem — a preconfigured platform comprised of converged infrastructure and cloud-management software. When used in unison, customers will be able to build and manage their application architectures via what appears to be a fairly intuitive drag-and-drop interface, and then scale out by adding external resources from HP’s CloudAgile program or from on-premise resource pools.

HP is touting choice throughout the process, as CloudSystem supports a variety of hypervisors, operating systems and applications, and early CloudAgile partners include Verizon (s vz), Savvis (s svvs) and OpSource.

As I said when Apotheker announced his grand cloud-provider plans, though, I’ll believe HP can compete in wooing developers when I see it. We know HP can deliver private clouds and advanced cloud management for large enterprises, but despite saying all the right things, it doesn’t have a reputation on which to hang its developer-friendly hat. If HP does pull it off, it’ll be like the Wild West with HP, AWS, Rackspace and several other providers shooting it out for market dominance and, hopefully, making the cloud space even more interesting.

Image courtesy of Flickr user uwdigitalcollections.

Full disclosure: I’m the recipient of a complimentary press pass to the Structure 2011 conference.

Borhan Uddin, Bo He, and Radu Sion of the Stony Brook Network Security and Applied Cryptography Lab co-authored on 6/1/2011 a 10-page Cloud Performance Benchmark Series - Amazon SQS - Simple Queue Service report available from Cloud Commons:

Amazon Simple Queue Service (SQS) is a hosted queue for storing messages that are transmitted between computers. The benefit of having a hosted queue is that developers can transmit data between distributed components of an application without requiring the availability of all the components of that application. In this edition of the Cloud Performance Benchmark Series from Stony Brook Network Security and Applied Cryptography Lab, Amazon SQS is evaluated by measuring the number of simultaneously supported users, the size of the message, and the throughput rate. This 10-page report details the testing setup, costs associated with the service, and results of the experiments.
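The decoupling benefit described above can be illustrated with a small Python sketch. It uses the standard library's in-process `queue.Queue` purely as a stand-in for a hosted queue; it does not call Amazon SQS or model anything specific to that service, and the `produce` and `consume` helpers are hypothetical names invented for this example.

```python
import queue


def produce(q: queue.Queue, messages: list[str]) -> None:
    """Sender side: enqueue messages without knowing whether a receiver is running."""
    for message in messages:
        q.put(message)


def consume(q: queue.Queue) -> list[str]:
    """Receiver side: drain whatever is waiting and return the processed results."""
    processed = []
    while True:
        try:
            message = q.get_nowait()
        except queue.Empty:
            break  # nothing left to process right now
        processed.append(message.upper())  # placeholder for real work
        q.task_done()
    return processed


# The sender runs first; the receiver only starts later and still sees every message.
orders = queue.Queue()
produce(orders, ["order-1", "order-2"])
results = consume(orders)
```

The sender finishes before the receiver ever starts, which is the property the report's description emphasizes: components exchange data without all of them having to be available at the same time.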

Download the Complete Report: Cloud Performance Benchmark Series: Simple Queue Service (SQS)

<Return to section navigation list>
