Windows Azure and Cloud Computing Posts for 6/7/2011+

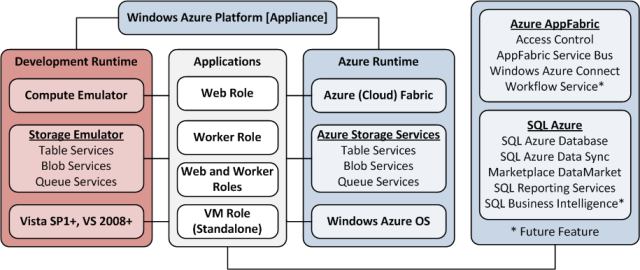

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, Traffic Manager, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework 4.1+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

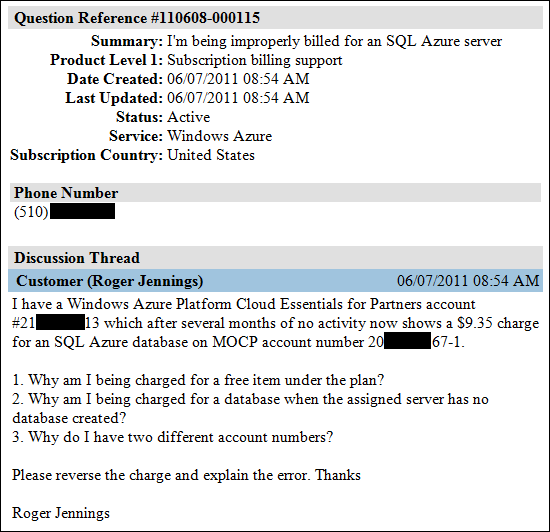

My Improper Billing for SQL Azure Web Database on Cloud Essentials for Partners Account post of 6/7/2011 described a glitch in the billing system:

Today I received a notice from the Microsoft Online Customer Portal (MOCP) that the May 2011 bill for my Windows Azure Platform Cloud Essentials for Partners account was ready. Here’s what I found when I checked my account:

This was the first time I had incurred a charge on this account, which I opened on 1/8/2011. The server provisioned has no database installed. …

I completed a service request form this morning and immediately received three messages from Microsoft Online Customer Services (MOCS) identical to the following (confidential information redacted):

It will be interesting to see how fast MOCS responds with the answers to my questions.

Has anyone else been similarly misbilled?

Shaun Xu recommended that you Backup Your SQL Azure by Data-tier Application CTP2 in a 6/6/2011 post:

In the next generation of SQL Server, code-named “Denali”, there is a new feature named Data-tier Application Framework v2.0 Feature Pack CTP that enhances data import and export with SQL Server. It is currently available in SQL Azure Labs.

Run Data-tier Application Locally

From the portal we know that the Data-tier Application CTP2 can be executed from the development machine through an EXE utility, so we need to download the components listed below. One thing to note: SQLSysClrTypes.msi should be installed before SharedManagementObjects.msi.

- .NET 4 runtime

- SQLSysClrTypes.msi

- SharedManagementObjects.msi

- DACFramework.msi

- SqlDom.msi

- TSqlLanguageService.msi

Then download DacImportExportCli.zip, the client-side utility, and perform the import and export commands on the local machine against SQL Server and SQL Azure. For example, suppose we have a SQL Azure database available here:

- Server Name: uivfb7flu1.database.windows.net

- Database: aurorasys

- Login: aurora@uivfb7flu1

- Password: MyPassword

Then we can run a command like the one below to export the database schema and data into a single file on the local disk named “aurora.dacpac”.

    DacImportExportCli.exe -s uivfb7flu1.database.windows.net -d aurorasys -f aurora.dacpac -x -u aurora@uivfb7flu1 -p MyPassword

And if needed, we can import it back into another SQL Azure database.

    DacImportExportCli.exe -s uivfb7flu1.database.windows.net -d aurorasys_dabak -f aurora.dacpac -i -edition web -size 1 -u aurora@uivfb7flu1 -p MyPassword

However, the SQL Server backup and restore feature is not currently supported in SQL Azure, and many projects I have worked on wanted to back up their SQL Azure databases from a website running on Windows Azure. So in this post I would like to demonstrate how to perform the export from the cloud instead of from a local command window.
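For repeatable runs, the two invocations above could be assembled by a small script. The following is a minimal Python sketch and not part of the original post: the helper name `build_dac_command` is hypothetical, it uses only the switches that appear in the commands above, and it only builds the argument list rather than running the utility.

```python
from typing import List, Optional


def build_dac_command(server: str, database: str, dacpac: str, user: str,
                      password: str, export: bool = True,
                      edition: Optional[str] = None,
                      size_gb: Optional[int] = None) -> List[str]:
    """Build an argument list for DacImportExportCli.exe (hypothetical helper).

    Only the switches that appear in the commands above are used.
    """
    args = ["DacImportExportCli.exe", "-s", server, "-d", database, "-f", dacpac]
    # -x exports the database to the file, -i imports the file into a database.
    args.append("-x" if export else "-i")
    if not export:
        # The import example above also specifies the target edition and size.
        if edition is not None:
            args += ["-edition", edition]
        if size_gb is not None:
            args += ["-size", str(size_gb)]
    args += ["-u", user, "-p", password]
    return args


export_cmd = build_dac_command("uivfb7flu1.database.windows.net", "aurorasys",
                               "aurora.dacpac", "aurora@uivfb7flu1", "MyPassword")
import_cmd = build_dac_command("uivfb7flu1.database.windows.net", "aurorasys_dabak",
                               "aurora.dacpac", "aurora@uivfb7flu1", "MyPassword",
                               export=False, edition="web", size_gb=1)
print(" ".join(export_cmd))
print(" ".join(import_cmd))
```

On a machine where the utility is installed, either list could be handed to `subprocess.run`. Passing a list instead of a single string avoids shell quoting problems with the password.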

Run Data-tier Application on Cloud

From this wiki we know that the Data-tier Application can be invoked by the EXE or directly from the managed library. We can leverage the DACStore class in Microsoft.SQLServer.Management.DAC.dll to import and export from the code. So in this case we will build the web role to export the SQL Azure database via the DACStore.

The first thing we need to do is add references to the Data-tier Application assemblies to the cloud project. Be aware that in Windows Azure the role instance virtual machine only has the .NET Framework installed, so we need to add all the DLLs that the Data-tier Application needs to our project and set Copy Local = True on each of them. Here is the list we need to add:

- Microsoft.SqlServer.ConnectionInfo.dll

- Microsoft.SqlServer.Diagnostics.STrace.dll

- Microsoft.SqlServer.Dmf.dll

- Microsoft.SqlServer.Management.DacEnum.dll

- Microsoft.SqlServer.Management.DacSerialization.dll

- Microsoft.SqlServer.Management.SmoMetadataProvider.dll

- Microsoft.SqlServer.Management.SqlParser.dll

- Microsoft.SqlServer.Management.SystemMetadataProvider.dll

- Microsoft.SqlServer.SqlClrProvider.dll

- Microsoft.SqlServer.SqlEnum.dll

If you have installed the components mentioned above, you can find the assemblies in your GAC directory. Copy them from the GAC directory and add them to the project. For how to copy files from the GAC, please refer to this article. Otherwise, you can download the source code from the link at the end of this post, which has the assemblies attached.

Next we move back to the source code and create a page that lets the user enter the information for the SQL Azure database to export. In this example I created an ASP.NET MVC web role and let the user enter the information in Home/Index. After the user clicks the button, we begin exporting the database. First we create the connection string from the user input, and then create an instance of ServerConnection, defined in Microsoft.SqlServer.ConnectionInfo.dll, which will be used to connect to the database when exporting.

    // establish the connection to SQL Azure
    var csb = new SqlConnectionStringBuilder()
    {
        DataSource = model.Server,
        InitialCatalog = model.Database,
        IntegratedSecurity = false,
        UserID = model.Login,
        Password = model.Password,
        Encrypt = true,
        TrustServerCertificate = true
    };
    var connection = new ServerConnection()
    {
        ConnectionString = csb.ConnectionString,
        StatementTimeout = int.MaxValue
    };

Then we create the DacStore from the ServerConnection and handle some of its events, such as action initialized, started and finished. We collect the events in a StringBuilder and show them once the export has finished.

    // create the dac store for exporting
    var dac = new DacStore(connection);

    // handle events of the dac
    dac.DacActionInitialized += (sender, e) =>
    {
        output.AppendFormat("{0}: {1} {2} {3}<br />", e.ActionName, e.ActionState, e.Description, e.Error);
    };
    dac.DacActionStarted += (sender, e) =>
    {
        output.AppendFormat("{0}: {1} {2} {3}<br />", e.ActionName, e.ActionState, e.Description, e.Error);
    };
    dac.DacActionFinished += (sender, e) =>
    {
        output.AppendFormat("{0}: {1} {2} {3}<br />", e.ActionName, e.ActionState, e.Description, e.ActionState == ActionState.Warning ? string.Empty : (e.Error == null ? string.Empty : e.Error.ToString()));
    };

The next thing we are going to do is prepare the BLOB storage. We will first export the database to a local file on the Windows Azure virtual machine and then upload it to BLOB storage for later download.

    // prepare the blob storage
    var account = CloudStorageAccount.FromConfigurationSetting("DataConnection");
    var client = account.CreateCloudBlobClient();
    var container = client.GetContainerReference("dacpacs");
    container.CreateIfNotExist();
    container.SetPermissions(new BlobContainerPermissions() { PublicAccess = BlobContainerPublicAccessType.Container });
    var blobName = string.Format("{0}.dacpac", DateTime.Now.ToString("yyyyMMddHHmmss"));
    var blob = container.GetBlobReference(blobName);

Then the final job is to call ServerConnection.Connect() and invoke the Export method of the DacStore. The Data-tier Application library will connect to the database and export the schema and data to the specified file.

    // export to local file system
    connection.Connect();
    var filename = Server.MapPath(string.Format("~/Content/{0}", blobName));
    dac.Export(model.Database, filename);

After that we upload the file to BLOB storage and remove the local one.

    // upload the local file to blob
    blob.UploadFile(filename);

    // delete the local file
    System.IO.File.Delete(filename);

    // sw is assumed to be a Stopwatch started before the export (not shown in this excerpt)
    output.AppendFormat("Finished exporting to {0}, elapsed {1} seconds.<br />", blob.Uri, sw.Elapsed.TotalSeconds.ToString("0.###"));

Finally we can download the DACPAC file from BLOB storage to the local machine.
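The overall flow (export to a local file, upload it, delete the local copy) can be summarized independently of the DAC and storage libraries. The Python sketch below is an illustration only: `export_fn` and `upload_fn` are hypothetical stand-ins for the `dac.Export` and `blob.UploadFile` calls, and nothing here talks to SQL Azure or to storage.

```python
import os
import tempfile
import time
from datetime import datetime
from typing import Callable, Tuple


def backup_to_blob(database: str,
                   export_fn: Callable[[str, str], None],
                   upload_fn: Callable[[str, str], None],
                   work_dir: str) -> Tuple[str, float]:
    """Export, upload, then clean up; returns (blob name, elapsed seconds)."""
    started = time.monotonic()
    # Timestamped name, mirroring the yyyyMMddHHmmss pattern used above.
    blob_name = datetime.now().strftime("%Y%m%d%H%M%S") + ".dacpac"
    local_path = os.path.join(work_dir, blob_name)
    try:
        export_fn(database, local_path)   # stand-in for dac.Export(...)
        upload_fn(blob_name, local_path)  # stand-in for blob.UploadFile(...)
    finally:
        # Remove the local copy even if the export or upload failed.
        if os.path.exists(local_path):
            os.remove(local_path)
    return blob_name, time.monotonic() - started


# Demo with fake export and upload functions (assumptions for illustration).
uploaded = {}


def fake_export(database: str, path: str) -> None:
    with open(path, "w") as f:
        f.write("schema and data of " + database)


def fake_upload(name: str, path: str) -> None:
    with open(path) as f:
        uploaded[name] = f.read()


with tempfile.TemporaryDirectory() as tmp:
    name, seconds = backup_to_blob("aurorasys", fake_export, fake_upload, tmp)
    leftover = os.listdir(tmp)
print(name, "uploaded; local files left:", leftover)
```

Unlike the excerpt above, the sketch deletes the local file in a `finally` block, so a failed upload does not leave exports accumulating on the role instance's disk. That is a design choice of the sketch, not something the original code does.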

Summary

The Data-tier Application enables us to export the database schema and data from SQL Azure to anywhere we want, such as BLOB storage or the local disk. This means we don’t need to pay for a backup database, only for the storage usage if we put the export into BLOB storage and for the bandwidth if we download it from there. But I’m not sure whether we need to pay for the bandwidth between the web role and SQL Azure if they are in the same data center (sub-region).

One more thing must be highlighted: the Data-tier Application is NOT a full backup solution. If you need a transactionally consistent backup, you should consider Database Copy or Data Sync. But if you just need the schema and data, this might be the best choice.

PS: You can download the source code and the assemblies here.


MarketPlace DataMarket and OData

EastBanc Technologies added their Voting Information Project to Microsoft’s MarketPlace DataMarket on 6/3/2011:

The Voting Information Project (VIP) offers cutting edge technology tools to provide voters with access to customized election information to help them navigate the voting process and cast an informed vote. VIP works with election officials across the nation to ensure this information is official and reliable. We answer voters' basic questions like "Where is my polling place?", "What's on my ballot?" and "How do I navigate the voting process?" VIP uses an open format to make data available and accessible, bringing 21st century technology to our elections and ensuring that all Americans have the opportunity to cast an informed vote.

Marcelo Lopez Ruiz (@mlrdev) described Latest datajs changes - changeset 8334 in a 6/7/2011 post:

Yesterday we uploaded the changeset 8334 to datajs, which includes a pretty extensive list of improvements. These haven't made it into a release yet, but you can build and play with the sources - here's what's new.

- Adds support for configuring cache sizes. The options argument to datajs.cacheSize can include a cache size in (estimated) bytes - this helps allocate storage across different stores, and make sure you leave room for the most important ones.

- Adds support for RxJs to caches. If you include the Reactive Extensions for JavaScript library, you can get an observable source by simply invoking ToObservable on a cache.

- Adds support for options in cache constructor. This includes things like user and password.

- Adds support for filtering forward/backwards to caches. You don't have to wait for data to be local - after all, the cache can't index on an opaque callback. So we're simplifying this, and you can simply call filter at any point.

- Supports JSON options on a per-request basis. In the OData.request object (and the cache options), you can specify values that would otherwise have been set on default objects, including whether to enable JSONP, the callback handler name, whether dates should be handled, etc.

- Supports user and password in requests. In addition to the JSON/JSONP flags, you can also specify user and password for basic authentication scenarios.

- Improves default success handler. Now we stringify the response data, so if you're just playing with the API to get a sense of the response data it's super-easy to get started.

- And a bunch of bug fixes, internal changes and test improvements...

William Vambenepe (@vambenepe) posted Comments on “The Good, the Bad, and the Ugly of REST APIs” on 6/6/2011:

A survivor of intimate contact with many Cloud APIs, George Reese shared his thoughts about the experience in a blog post titled “The Good, the Bad, and the Ugly of REST APIs”.

Here are the highlights of his verdict, with some comments.

“Supporting both JSON and XML [is good]”

I disagree: Two versions of a protocol is one too many (the post behind this link doesn’t specifically discuss the JSON/XML dichotomy but its logic applies to that situation, as Tim Bray pointed out in a comment).

“REST is good, SOAP is bad”

Not necessarily true for all integration projects, but in the context of Cloud APIs, I agree. As long as it’s “pragmatic REST”, not the kind that involves silly contortions to please the REST police.

“Meaningful error messages help a lot”

True and yet rarely done properly.

“Providing solid API documentation reduces my need for your help”

Goes without saying (for a good laugh, check out the commenter on George’s blog entry who wrote that “if you document an API, you API immediately ceases to have anything to do with REST” which I want to believe was meant as a joke but appears written in earnest).

“Map your API model to the way your data is consumed, not your data/object model”

Very important. This is a core part of Humble Architecture.

“Using OAuth authentication doesn’t map well for system-to-system interaction”

Agreed.

“Throttling is a terrible thing to do”

I don’t agree with that sweeping statement, but when George expands on this thought what he really seems to mean is more along the lines of “if you’re going to throttle, do it smartly and responsibly”, which I can’t disagree with.

“And while we’re at it, chatty APIs suck”

Yes. And one of the main causes of API chattiness is fear of angering the REST gods by violating the sacred ritual. Either ignore that fear or, if you can’t, hire an expensive REST consultant to rationalize a less-chatty design with some media-type black magic and REST-bless it.

Finally George ends by listing three “ugly” aspects of bad APIs (“returning HTML in your response body”, “failing to realize that a 4xx error means I messed up and a 5xx means you messed up” and “side-effects to 500 errors are evil”) which I agree on but I see those as a continuation of the earlier point about paying attention to the error messages you return (because that’s what the developers who invoke your API will be staring at most of the time, even if they represent only 0.01% of the messages you return).
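The 4xx/5xx distinction quoted above can be made concrete with a few lines of client code. This is a minimal Python sketch; the function name `blame` and the labels it returns are made up for illustration.

```python
def blame(status_code: int) -> str:
    """Say who should act on an HTTP response, based on its status class."""
    if 400 <= status_code <= 499:
        return "caller"   # the request was wrong: fix it before retrying
    if 500 <= status_code <= 599:
        return "service"  # the server failed: the same request may work later
    return "nobody"       # 1xx, 2xx and 3xx are not errors


for code in (200, 404, 429, 500, 503):
    print(code, blame(code))
```

A client built this way only retries unchanged requests on the "service" branch, which is why an API that reports its own failures as 4xx (or the caller's mistakes as 5xx) is so painful to integrate with.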

What’s most interesting is what’s NOT in George’s list. No nit-picking about REST purity. That tells you something about what matters to implementers.

If I haven’t yet exhausted my quota of self-referential links, you can read REST in practice for IT and Cloud management for more on the topic.

Related posts:

- WS Resource Access at W3C: the good, the bad and the ugly

- Amazon proves that REST doesn’t matter for Cloud APIs

- REST in practice for IT and Cloud management (part 1: Cloud APIs)

- Separating model from protocol in Cloud APIs

- REST in practice for IT and Cloud management (part 3: wrap-up)

- REST-*: good specs, bad branding?


Windows Azure AppFabric: Access Control, WIF and Service Bus


No significant articles today.


Windows Azure VM Role, Virtual Network, Connect, Traffic Manager, RDP and CDN

Joel Foreman posted Geo-Distributed Services and Other Examples with the Windows Azure Traffic Manager on 5/25/2011 (missed when posted):

In April, Microsoft introduced a new component to the Windows Azure platform called Windows Azure Traffic Manager. Traffic Manager, which falls under Windows Azure Virtual Network, lets you control how traffic is distributed to multiple hosted services in Windows Azure. These services (e.g. a web site, web application, or web service) can be in the same data center or in different data centers across the globe.

You may be wondering how this is different from the load-balancing provided by Windows Azure for your hosted service. Windows Azure provides load-balancing across all of the instances you have running in a single Windows Azure service. The big difference here is that Traffic Manager is not custom load-balancing for a single service but for multiple services. This means that I can deploy my service several times (even to different regions), have my end-users hitting a single address, and control how those end-users are routed to those different services. Really cool.

How Traffic Manager Works

One of the great things about Traffic Manager is that it adds very little overhead. This is because of the way it uses DNS. A custom domain name is registered to a Traffic Manager address. The first time a user requests the domain name, Traffic Manager resolves the DNS entry for the domain name to the IP address of the hosted service based on the defined traffic policy. After that, the user talks directly to the hosted service until their DNS cache expires, at which point they would repeat the process.
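The reason the overhead is small can be illustrated with a toy model: the traffic policy is consulted only when the client's cached DNS answer has expired. This Python sketch is a simplification and not Traffic Manager's implementation; the class name, the 30-second time-to-live, and the address are assumptions.

```python
from typing import Callable, Optional


class CachingResolver:
    """Toy client-side DNS cache: asks the policy only when the entry expires."""

    def __init__(self, policy_lookup: Callable[[], str], ttl_seconds: float):
        self._lookup = policy_lookup
        self._ttl = ttl_seconds
        self._address: Optional[str] = None
        self._expires_at = 0.0
        self.policy_queries = 0

    def resolve(self, now: float) -> str:
        if self._address is None or now >= self._expires_at:
            self._address = self._lookup()   # policy decides the hosted service
            self._expires_at = now + self._ttl
            self.policy_queries += 1
        return self._address                 # later requests go straight here


resolver = CachingResolver(lambda: "203.0.113.10", ttl_seconds=30)
for t in (0, 5, 29, 30, 45):   # request times in seconds
    resolver.resolve(t)
print("requests: 5, policy lookups:", resolver.policy_queries)
```

The same caching also means that a policy change or a failover only reaches a given client once its cached entry expires.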

Traffic Manager provides three different policies for routing traffic:

- Performance: Routes requests to the nearest service based on the incoming request’s location.

- Failover: Routes requests based on health of the services.

- Round Robin: Distributes requests equally across the hosted services.
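The three policies can be sketched as three small selection functions. This is a hedged Python illustration, not how Traffic Manager is implemented: the `Service` type, the latency table used to stand in for "nearest", and the service names are all assumptions.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, Iterator, List


@dataclass
class Service:
    name: str
    region: str
    healthy: bool = True


def performance(services: List[Service], latency_ms: Dict[str, float]) -> Service:
    """Pick the healthy service with the lowest latency from the caller."""
    candidates = [s for s in services if s.healthy]
    return min(candidates, key=lambda s: latency_ms[s.region])


def failover(services: List[Service]) -> Service:
    """Pick the first healthy service in the configured order."""
    return next(s for s in services if s.healthy)


def round_robin(services: List[Service]) -> Iterator[Service]:
    """Cycle through the services, skipping unhealthy ones.

    Assumes at least one service is healthy; otherwise this never yields.
    """
    for s in itertools.cycle(services):
        if s.healthy:
            yield s


services = [Service("svc-us", "us"), Service("svc-eu", "europe"), Service("svc-asia", "asia")]
latency_from_paris = {"us": 95.0, "europe": 12.0, "asia": 240.0}  # made-up numbers

nearest = performance(services, latency_from_paris).name
services[0].healthy = False            # simulate the primary going offline
standby = failover(services).name
rr = round_robin(services)
rotation = [next(rr).name for _ in range(4)]
print(nearest, standby, rotation)
```

In all three functions an unhealthy service is never selected, which matches the note in the post that monitoring applies regardless of the policy in use.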

Traffic Manager also provides monitoring of the hosted services regardless of the policy being used to ensure they are online. You have the ability to configure protocol, port and path for monitoring. The monitoring system will perform a GET on the file and expects to receive a 200 OK response within 5 seconds. This occurs every 30 seconds. A service is deemed unhealthy and marked offline if it fails 3 times in a row.
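The monitoring rule described above (a 200 OK within 5 seconds, three consecutive failures to go offline) amounts to a small state machine. The Python sketch below models only that rule; the class name is hypothetical, and the assumption that a single successful probe brings a service back online is mine, since the post does not say how recovery works.

```python
class HealthMonitor:
    """Marks a service offline after N consecutive failed probes."""

    def __init__(self, failures_to_offline: int = 3, timeout_seconds: float = 5.0):
        self.failures_to_offline = failures_to_offline
        self.timeout_seconds = timeout_seconds
        self.consecutive_failures = 0
        self.online = True

    def record_probe(self, status_code: int, elapsed_seconds: float) -> bool:
        """Record one probe result and return the current online state."""
        ok = status_code == 200 and elapsed_seconds <= self.timeout_seconds
        if ok:
            # Assumption: one good probe resets the counter and restores the service.
            self.consecutive_failures = 0
            self.online = True
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.failures_to_offline:
                self.online = False
        return self.online


monitor = HealthMonitor()
# One (status code, response time in seconds) pair per 30-second probe.
probes = [(200, 0.2), (500, 0.1), (200, 6.0), (200, 0.3), (503, 0.1), (200, 7.5), (404, 0.1)]
states = [monitor.record_probe(code, secs) for code, secs in probes]
print(states)
```

Note that a slow 200 response counts as a failure, and that two failures followed by a success do not take the service offline because the failures must be consecutive.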

Traffic Policy Examples

So what are some examples of when you would use one of these policies? Here are a few:

- Performance Policy Example: Building a Geo-Distributed service. If you have users using your service around the globe, deploy your hosted service into multiple regions (e.g. US, Europe, Asia) and have your users routed to the nearest location.

- Failover Policy Example: Having a “hot” standby service. If the primary service is offline, requests will be routed to the next in order, and so on.

- Round Robin Example: Increased scalability and availability within a region. Spread the load across multiple services with multiple instances. Note that Traffic Manager still monitors health and will not route a request to a service that is down.

I tend to align the Failover and Round Robin policies in an “availability” category. Both are ways to further improve the availability of services. I would be really curious to see the impact on availability relative to Windows Azure’s SLA of 99.95%. I wonder if using either of these policies can reliably extend the actual availability beyond that number, for applications with the need for more “nines”.
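As a rough, back-of-the-envelope take on that question: if two deployments failed independently and failover were instantaneous and perfect, the combined availability would be one minus the product of the individual unavailabilities. The Python sketch below only does that arithmetic. Independence and perfect failover are optimistic assumptions, and the calculation ignores Traffic Manager's own availability, the 30-second probe interval, and DNS cache lifetimes.

```python
def combined_availability(*availabilities: float) -> float:
    """Availability of redundant, independent deployments (at least one up)."""
    unavailable = 1.0
    for a in availabilities:
        unavailable *= (1.0 - a)
    return 1.0 - unavailable


HOURS_PER_YEAR = 365 * 24

single = 0.9995                                  # the 99.95% SLA quoted above
pair = combined_availability(single, single)
print(f"single: {single:.4%}, about {(1 - single) * HOURS_PER_YEAR:.2f} h down per year")
print(f"pair:   {pair:.6%}, about {(1 - pair) * HOURS_PER_YEAR * 3600:.1f} s down per year")
```

Under these idealized assumptions the pair reaches roughly six nines. In practice, detection delay and cached DNS answers mean the real gain would be smaller than this upper bound.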

Using Traffic Manager for Monitoring

One of the things I have been asked before is if there is any monitoring capability included for your Windows Azure services. Traffic Manager now provides some capabilities in this space. Instead of adding third-party URL based monitoring to a web site hosted in Windows Azure, you can now leverage the monitoring capabilities provided by Traffic Manager to do the same thing. In addition, a developer can even create transactional test endpoints or smoke test endpoints that exercise key behaviors of the system, and have Traffic Manager hit these endpoints every 30 seconds as well.

Using Traffic Manager for Geo-Distributed Applications

One of the largest scenarios that Traffic Manager addresses is the ability to build geo-distributed applications. What does it mean to have a geo-distributed application? This refers to the service being deployed into multiple data centers around the world. Delivering high-performance, low-latency experiences for applications that have a geographically distributed user base is a real challenge. This will be even more important in the mobile and social application industries. The expectation of end-users is that the applications they use will be available and perform to the same level that they are accustomed to everywhere. With the growth of mobile apps and smart phones, the need for geo-distributed architectures will grow as well.

With Windows Azure, it is easy to deploy your hosted service package into multiple regions. But there are challenges in terms of data and storage that come with distributing your front and middle tier. Mainly, you should not simply deploy your hosted service into multiple regions without considering where your hosted service will store its data. You would not want a web application in Europe talking to a database in the United States for performance considerations. There are a couple of options that I will point out for addressing your data and storage:

- Leverage SQL Azure Data Sync: This technology can keep multiple SQL Azure databases in sync with each other, even across regions.

- Leverage Worker Roles for Custom Sync: Build a worker role to move necessary data into appropriate regions. This may be useful if you are leveraging Table Storage and BLOB Storage.

- Leverage CDN wherever possible: Store data in BLOB Storage and enable the CDN to act as a distributor of content to all other regions.

Let’s dive into a mobility scenario. This diagram depicts a mobile application being used from 2 different parts of the globe. You can see the clients connected to Traffic Manager initially, and then talking directly to the local hosted service layer in Windows Azure. The service layer talks to SQL Azure as a relational database in the same local region. SQL Azure Data Sync is used to keep data synced across all of the SQL Azure databases in all the regions.

In this scenario, we showed users on different continents. But this use could be just as effective for users on the same continent, especially if the number of Windows Azure regions grows in the future.

Get Started

Windows Azure Traffic Manager is currently available as a CTP (Community Technology Preview). You can sign up for the preview from the Windows Azure Management Portal and try it out today.



Live Windows Azure Apps, APIs, Tools and Test Harnesses

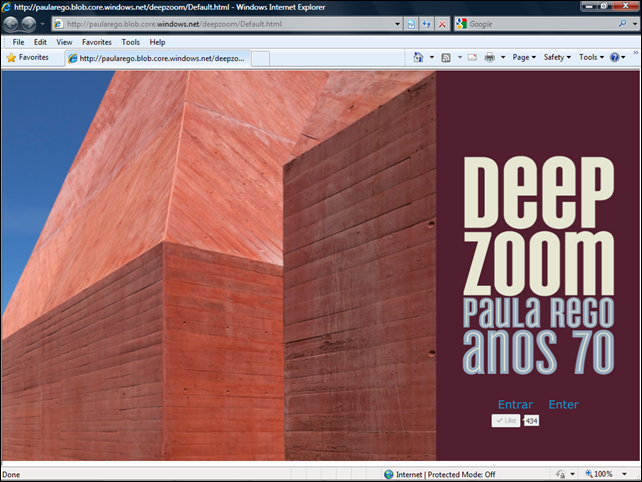

Casa das Histórias Paula Rego and Microsoft Portugal present Deep Zoom: Paula Rego in the 1970s running from Windows Azure:

Dame Maria Paula Figueiroa Rego, GCSE, DBE (Portuguese pronunciation: [ˈpawlɐ ˈʁeɡu]; born in Lisbon in 1935) is a Portuguese painter, illustrator and printmaker. She lived in Portugal (Ericeira) until 1976 and later moved to London with her family.

Education

Rego started painting at the age of four. She attended the English-language Saint Julian's School, Carcavelos, Portugal before studying at the Slade School of Art, London where she met her future husband, British artist Victor Willing.

Career

Her work often gives a sinister edge to storybook imagery, emphasizing malicious domination or the subversion of natural order. She deals with social realities that are polemic, an example being her important Triptych (1998) on the subject of abortion, now in the collection of Abbot Hall Art Gallery in Kendal.

Rego's style is often compared to cartoon illustration. As in cartoons, animals are often depicted in human roles and situations. Her later work adopts a more realistic style, but sometimes keeps the animal references — the Dog Woman series of the 1990s, for example, is a set of pastel pictures depicting women in a variety of dog-like poses (on all fours, baying at the moon, and so on).[1]

Clothes play an important role in Rego's work, as pieces of her visual story-telling. Many of the clothes worn by models and mannequins in her work are representative of the frocks she wore as a child in Portugal. Rego's thoughts on how the clothes show character are as follows:

“‘clothes enclose the body and tighten it and give you a feeling of wholeness. You are contained inside your clothes. So I put them in the pictures’.[2]”

In 1989, Rego was shortlisted for the Turner Prize; in June 2005 she was awarded the Degree of Doctor of Letters honoris causa by Oxford University, and in February 2011 the honoris causa by the Universidade de Lisboa.

Rego has also painted a portrait of Germaine Greer, which is in the National Portrait Gallery in London, as well as the official presidency portrait of Jorge Sampaio. Rego only ever painted one self-portrait, which included her granddaughter, Grace Smart, and sold for some £300,000.

On September 18, 2009 a new museum dedicated to the work of Paula Rego, [pictured at right,] opened in Cascais, Portugal. [3] The building was designed by the architect Eduardo Souto Moura and is called Casa das Histórias Paula Rego / Paula Rego - House of Stories. [4]

Rego was appointed Dame Commander of the Order of the British Empire (DBE) in the 2010 Birthday Honours.[5]

Personal life

Rego and her husband, Willing, divided their time between Portugal and England until 1976, when they moved to England permanently. In 1988, Willing died after suffering for some years from multiple sclerosis.

Rego is the mother of Nick Willing, a film director. He is a graduate of the National Film and Television School, and began his career directing commercials and music videos for such artists as The Eurythmics, Bob Geldof, and Swing Out Sister. His credits on feature films as either writer or director (or both) include "Photographing Fairies" (1997), "Doctor Sleep" (2002), and "Alice" (2009, TV). Nick is also known for magical use of animation and visual effects in live action work.

Rego is the mother-in-law to Ron Mueck, whose career she has significantly influenced.

Paula Rego Museum photograph courtesy of Wikipedia.

Neil MacKenzie (@mknz) wrote Microsoft Windows Azure Development Cookbook: RAW for Packt Publishing. The first five chapters became available as an ebook on 6/7/2011:

Overview of Microsoft Windows Azure Development Cookbook: RAW

- Packed with practical, hands-on cookbook recipes for building advanced, scalable cloud-based services on the Windows Azure platform explained in detail to maximize your learning

- Extensive code samples showing how to use advanced features of Azure blobs, tables and queues.

- Understand remote management of Azure services using the Windows Azure Service Management REST API

- Delve deep into Windows Azure Diagnostics

- Master the Windows Azure AppFabric Service Bus and Access Control Service

This book is currently available as a RAW book. A RAW book is an ebook, and this one is priced at 40% of the usual eBook price. Once you purchase the RAW book, you can immediately download the content of the book so far, and when new chapters become available, you will be notified, and can download the new version of the book. When the book is published, you will receive the full, finished eBook.

If you like, you can preorder the print book at the same time as you purchase the RAW book at a significant discount.

Purchase Options

Your choices:

- Buy the RAW version of this book immediately [ $ 23.99 | £ 13.19 | EUR 18.59 ]

- Buy the RAW version of this book and place a pre-order for the print book right now, with a 40% discount on both. [ $ 29.99 | £ 18.59 | EUR 23.39 ]

- Since a RAW book is an eBook, a RAW book is non returnable and non refundable.

- Local taxes may apply to your eBook purchase.

Chapter Availability

| Chapter Number | Title | Availability |
| --- | --- | --- |
| 1 | Controlling Access in the Windows Azure Platform | In the book |
| 2 | Handling Blobs in Windows Azure | In the book |
| 3 | Going NoSQL with Azure Table Storage | In the book |
| 4 | Disconnecting with Azure Queue | In the book |
| 5 | Developing Azure Services | In the book |
| 6 | Digging into Windows Azure Diagnostics | June 2011 |
| 7 | Managing Azure Services with the Service Management API | June 2011 |
| 8 | Using SQL Azure | June 2011 |
| 9 | Looking at the Azure AppFabric | June 2011 |

eBook available as PDF download

Marketwire posted a Cenzic to Provide Industry-Praised ClickToSecure Cloud Solution for Microsoft Windows Azure Users press release on 6/7/2011:

Cenzic Inc., the leading provider of Web application security assessment and risk management solutions, today announced the release of its ClickToSecure Cloud for the Windows Azure platform. This industry-praised solution puts Web application security within reach of all Windows Azure users, allowing them to test their websites for vulnerabilities and conduct quick assessments entirely in the cloud.

"Cenzic's ClickToSecure Cloud solution is a welcome addition to the solutions available on the Windows Azure platform," said Prashant Ketkar, Director of Product Marketing, Microsoft. "Web application security is an important initiative for businesses. By making ClickToSecure Cloud available for Windows Azure users, Cenzic is addressing Web application security issues."

Windows Azure provides on-demand compute, storage, networking and content delivery capabilities to host, scale and manage Web applications on the Internet through Microsoft data centers. Windows Azure serves as the development, service hosting and service management environment for the Windows Azure platform.

"Web application security and compliance are critical initiatives for many companies," said Wendy Nather, Senior Security Analyst at The 451 Group. "These issues are just as important in the cloud, particularly when companies are using it for the first time. ClickToSecure Cloud for the Windows Azure platform is one example of a tool to help with the basics that might otherwise be overlooked in the rush to this new, disruptive technology."

With ClickToSecure Cloud, Windows Azure users have the ability to select different types of Web application vulnerability options that will detect "holes" or vulnerabilities in their website -- from a standard "HealthCheck" to one that provides PCI 6.6 assurance. The offering is a fast, cost-effective way to improve website security posture without having to become a security expert. In May, the American Business Awards named the solution a finalist for product of the year.

"We are honored to bring ClickToSecure Cloud to the Microsoft Azure platform," said John Weinschenk, president and CEO at Cenzic. "Web security is a huge problem right now. Sony, Twitter, Facebook, and hundreds of other sites are being hacked on a regular basis. Implementing Web application security can be a daunting task for any business because of the complexity of new threats, rules, and regulations that permeate the industry. ClickToSecure Cloud was designed to take the guesswork around these issues out of the equation, allowing businesses with zero Web security expertise to ensure their websites are both protected from hackers and compliant with industry regulations such as PCI 6.6."

For more information about Cenzic's ClickToSecure Cloud solution for Microsoft Azure, please visit: http://www.cenzic.com/resellers/azure. You can also find out more about ClickToSecure Cloud on the Windows Azure blog site: http://blogs.msdn.com/b/windowsazure/.

About Cenzic

Cenzic, a trusted provider of software and SaaS security products, helps organizations secure their websites against hacker attacks. Cenzic focuses on Web Application Security, automating the process of identifying security defects at the Web application level where more than 75 percent of hacker attacks occur. Our dynamic, black box Web application testing is built on a non-signature-based technology that finds more "real" vulnerabilities as well as provides vulnerability management, risk management, and compliance for regulations and industry standards such as PCI. Cenzic solutions help secure the websites of numerous Fortune 1000 companies, all major security companies, leading government agencies and universities, and hundreds of SMB companies -- overall helping to secure trillions of dollars of e-commerce transactions. The Cenzic solution suite fits the needs of companies across all industries, from a cloud solution (Cenzic ClickToSecure Cloud™), to testing remotely via our managed service (Cenzic ClickToSecure® Managed), to a full enterprise software product (Cenzic Hailstorm® Enterprise ARC™) for managing security risks across the entire company.

<Return to section navigation list>

Visual Studio LightSwitch

Beth Massi (@bethmassi) of the Visual Studio LightSwitch Team suggested Submit Your LightSwitch Samples & Get Featured on the Developer Center! in a 6/7/2011 post:

Check it out, we’ve got a new Samples page on the LightSwitch Developer Center showcasing LightSwitch samples from the Samples Gallery. Just head to http://msdn.com/lightswitch and then click on the Samples tab at the top:

Here you can see the most popular, recent, and team samples quickly and easily. Get your own sample up onto the gallery and it will automatically show up on the LightSwitch Developer Center. Just follow these steps:

- Build your sample using LightSwitch Beta 2 (get it here)

- Upload your LightSwitch Sample to the Samples Gallery (follow these instructions)

- On the description page of your sample, set the Technology = “LightSwitch” like so:

That’s it! It will usually take a couple hours to show up on the Developer Center samples feed. The team will be reviewing samples and the cool ones we’ll feature in the “Editors Pick” section at the top of the page. Have fun building samples and helping the LightSwitch community!

Bill Zack posted Building Line of Business Applications with Visual Studio LightSwitch to the Ignition Showcase blog on 6/6/2011:

In a previous blog post we told you about Visual Studio LightSwitch as a way to publish applications to Windows Azure and SQL Azure.

This blog post discusses a video walkthrough that shows how to easily create Line of Business (LOB) applications for the cloud by using Visual Studio LightSwitch and SQL Azure.

A video highlights the benefits of Visual Studio LightSwitch and explains how you can quickly create powerful and interactive Silverlight applications with little or no code.

Roopesh Shenoy posted Entity Framework 4.1 – Validation to the InfoQ blog on 6/6/2011:

Validation is an interesting feature introduced in Entity Framework 4.1. It enables you to validate entities or their properties automatically before trying to save them to the database, as well as “on demand”, by using property annotations. Many improvements were also made to Validation between the CTP5 and RTW versions of Entity Framework 4.1.

The ADO.NET team suggests some reasons why Validation is a really useful feature:

Validating entities before trying to save changes can save trips to the database which are considered to be costly operations. Not only can they make the application look sluggish due to latency but they also can cost real money if the application is using SQL Azure where each transaction costs.

and

In addition, figuring out the real cause of the failure may not be easy. While the application developer can unwrap all the nested exceptions and get to the actual exception message thrown by the database to see what went wrong the application user will not usually be able to (and should not even be expected to be able to) do so. Ideally the user would rather see a meaningful error message and a pointer to the value that caused the failure so it is easy for him to fix the value and retry saving data.

Why is this different from using Validator from System.ComponentModel.DataAnnotations to validate the entities?

Unfortunately Validator can validate only properties that are direct child properties of the validated object. This means that you need to do extra work to be able to validate complex types that wouldn’t be validated otherwise.

Fortunately the built-in validation is able to solve all the above problems without having you add any additional code.

First, validation leverages the model so it knows which properties are complex properties and should be drilled into and which are navigation properties that should not be drilled into. Second, since it uses the model it is not specific for any model or database. Third, it respects configuration overrides made in OnModelCreating method. And you don’t really have to do the whole lot to use it.
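The idea of model-driven validation can be illustrated with a small sketch. This is not Entity Framework code: it is written in Python purely for illustration, and the `MODEL` dictionary, the `validate` and `save_changes` functions, and the `Customer`/`Address` types are all hypothetical names. It assumes a model that marks each property as scalar, complex or navigation, drills into complex properties, skips navigation properties, and refuses to "save" when any rule fails.

```python
# Hypothetical model metadata: which properties are scalar (with rules),
# which are complex (validated with their owner) and which are navigation
# properties (not drilled into). None of these names come from Entity Framework.
MODEL = {
    "Customer": {
        "scalar": {"name": {"required": True, "max_length": 10}},
        "complex": {"address": "Address"},
        "navigation": {"orders"},
    },
    "Address": {
        "scalar": {"city": {"required": True, "max_length": 20}},
        "complex": {},
        "navigation": set(),
    },
}


def validate(type_name, entity, path=""):
    """Return a list of readable error messages for one entity."""
    errors = []
    meta = MODEL[type_name]
    for prop, rule in meta["scalar"].items():
        value = entity.get(prop)
        name = path + prop
        if rule.get("required") and not value:
            errors.append(f"{name} is required")
        max_len = rule.get("max_length")
        if max_len is not None and value is not None and len(value) > max_len:
            errors.append(f"{name} exceeds {max_len} characters")
    # Complex properties are drilled into; navigation properties are skipped.
    for prop, complex_type in meta["complex"].items():
        child = entity.get(prop) or {}
        errors.extend(validate(complex_type, child, path + prop + "."))
    return errors


def save_changes(type_name, entity, store):
    """Validate first, so an invalid entity never reaches the (fake) database."""
    errors = validate(type_name, entity)
    if errors:
        raise ValueError("; ".join(errors))
    store.append(entity)


customer = {
    "name": "A very long customer name",
    "address": {"city": ""},
    "orders": [{"total": None}],  # navigation property: ignored by validate
}
print(validate("Customer", customer))
```

Because the errors are collected before anything is written, the caller can show the user which value to fix without paying for a failed round trip to the database.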

There have been several changes in Validation from the CTP5 to the RTW version of Entity Framework 4.1, as listed below:

- Database First and Model First are now supported in addition to Code First

- MaxLength and Required validation is now performed automatically on scalar properties if the corresponding facets are set.

- LazyLoading is turned off during validation.

- Validation of transient properties is now supported.

- Validation attributes on overridden properties are now discovered.

- DetectChanges is no longer called twice in SaveChanges.

- Exceptions thrown during validation are now wrapped in a DbUnexpectedValidationException.

- All type-level validators are treated equally and all are invoked even if one or more fail.

These are explained in detail in a blog post by the ADO.NET team.

There is an article on MSDN with code samples that can help you learn more about how to use Validation with Entity Framework.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

No significant articles today.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

My Windows Azure Platform Appliance (WAPA) Finally Emerges from the Skunk Works post of 6/7/2011 riffs on the Windows Azure Team’s JUST ANNOUNCED: Fujitsu Launches Global Cloud Platform Service Powered by Windows Azure post of 6/7/2011 8:15 AM PDT:

Today Fujitsu and Microsoft announced the first release of Fujitsu’s Global Cloud Platform service (“FGCP/A5”), powered by Windows Azure and running in Fujitsu’s datacenter in Japan. This service, which Fujitsu has been offering in Japan on a trial basis to twenty companies since April 21, will launch officially in August. This launch is significant because it marks the first official production release of the Windows Azure platform appliance by Fujitsu. FGCP/A5 will enable customers to quickly build elastically scalable applications using familiar Windows Azure platform technologies that will allow them to streamline their IT operations management and compete more effectively globally.

Geared towards a wide variety of customers, FGCP/A5 consists of Windows Azure compute and storage, SQL Azure, and Windows Azure AppFabric technologies, with additional services covering application development and migration, real-time operations and support.

This announcement follows the global strategic partnership on the Windows Azure platform appliance between Fujitsu and Microsoft that was announced at the Worldwide Partner Conference in July 2010. The partnership allows Fujitsu to work alongside Microsoft providing services to enable, deliver and manage solutions built on the Windows Azure platform.

Click here to read the full press release.

OK, so where are HP’s and Dell’s WAPA announcements?

It appears to me that this is the “first official production release of the Windows Azure platform appliance by” anyone.

I wonder who was picked for this WAPA Product Manager, Senior Job in Redmond (posted 5/30/2011).

Read the rest of my post here.

Timothy Prickett Morgan asked “Whither HP and Dell?” as a deck for his Fujitsu's Windows Azure cloud debuts this August post to The Register of 6/7/2011:

Using a platform cloud is supposed to be easy, but apparently building the hardware and software infrastructure is not so trivial. After unexplained delays, Fujitsu and Microsoft are finally getting ready to launch the first private label platform clouds based on Microsoft's Azure software stack.

Microsoft server partners Hewlett-Packard, Dell, and Fujitsu announced in July 2010 that they would serve up public Azure clouds from their own data centers and at the same time offer Azure hardware that companies could use behind the corporate firewall.

Microsoft didn't come easily or immediately to the private cloud idea, but eventually came around to the idea that companies wanted to build their own private clouds more than use public clouds such as Azure and equally importantly, not everyone wanted to trust Microsoft to host their applications. Hence the alignment with HP, Dell, and Fujitsu to do both hosted and private clouds based on the Azure stack.

At last summer's announcement, Microsoft and its three server partners had hoped to get hosted versions of the Azure clouds out the door before the end of 2010 and did not make any commitments about the timing of when the private Azure cloud chunks might be available for sale. All three have been mum on the subject since.

In an announcement in Tokyo today, Fujitsu and Microsoft said that Fujitsu's Global Cloud Platform, based on the Azure stack, would launch in August. Fujitsu said that the hosted cloud, known as the FGCP/A5 cloud in typical server-naming conventions, has been in beta testing since April 21 at twenty companies.

"Based on the success of the trial service, we are now ready to launch FGCP/A5 officially," said Kazuo Ishida, corporate senior executive vice president and director of Fujitsu, in a statement. "We are very confident that this cloud service will deliver to our customers the flexibility and convenience they are looking for in streamlining their ICT operations management costs."

Fujitsu is plunking the Azure stack, which includes support for .NET, Java, and PHP program services and data storage capabilities that are compatible with Microsoft's own Azure cloud, on its own iron. This Azure stack includes compute and storage services as well as SQL Azure database services and Azure AppFabric technologies including Service Bus and Access Control Service. Fujitsu will be hosting its Azure cloud in one of its Japanese data centers and did not specify what servers, storage, and networking it planned to use to make its cloud. But Fujitsu has all of the pieces it needs to build the underlying infrastructure and is likely using its Primergy rack and blade servers, Eternus storage arrays, and home-grown Ethernet switches.

By putting the Azure knockoff in Japanese data centers and making the cloud accessible globally, as is the plan, Fujitsu can cater to Japanese multinationals who either don't want to or cannot legally move their data outside of the country as they compute.

Fujitsu did not say much about pricing for the FGCP/A5 cloud, but said that it would charge a base price of ¥5 per hour (a little more than 6 cents at current exchange rates) for the equivalent of one virtual server instance corresponding to an extra small Azure instance from Microsoft. That's around $45 per month compared to the $37.50 per month that Microsoft is charging.
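The monthly figures in that comparison can be roughly reproduced with a few lines of arithmetic. The sketch below rests on two assumptions that are not stated in the article: a billing month of 750 hours, and a Microsoft rate of $0.05 per hour, which is simply what $37.50 per month implies at 750 hours. The 6-cent Fujitsu rate is the article's rounded conversion of ¥5.

```python
HOURS_PER_MONTH = 750  # assumption: not stated in the article


def monthly_cost(hourly_rate_usd, hours=HOURS_PER_MONTH):
    """Monthly cost in US dollars for one instance running every hour."""
    return round(hourly_rate_usd * hours, 2)


fujitsu = monthly_cost(0.06)    # article: "a little more than 6 cents"
microsoft = monthly_cost(0.05)  # assumption: implied by $37.50 / 750 hours
premium_pct = round((fujitsu - microsoft) / microsoft * 100, 1)

print(fujitsu, microsoft, premium_pct)
```

Under those assumptions the Fujitsu instance comes out about a fifth more expensive than the Microsoft equivalent; a different hours-per-month figure or exchange rate shifts both numbers.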

Fujitsu didn't just preview its launch of its Azure cloud knockoffs, but also put a sales target stake in the ground – something you almost never see a vendor do. (And they almost always regret it when they do.) Fujitsu says that over the next five years, it can line up 400 enterprise customers and 5,000 small and midrange businesses (including application software developers) for its FGCP/A5 cloud.

This begs the question: Where are the HP and Dell Azure hosted clouds, and when will these three companies deliver on the promised Azure private clouds? Dell confirmed to El Reg back in March that it would do two public clouds – one based on Azure and another on some other platform almost certainly to be OpenStack but VMware's vCloud is a possibility.

HP rolled out a bunch of "Frontline" appliances with Microsoft, including data warehousing appliances based on Microsoft's SQL Server database and email appliances based on Exchange Server. HP has been mum on its hosted Azure clouds. The company is making some kind of hybrid cloud announcement at its Discover user and partner conference in Las Vegas today, however, so there may be some movement on the Azure cloud front at HP. We'll see.

As for the private Azure clouds, which would seem to be what customers really want, Charles Di Bona, general manager of Microsoft's Server and Tools division, told a group of financial analysts last month that Azure appliances were at the "early stage at this point".

<Return to section navigation list>

Cloud Security and Governance

Chris Hoff (@Beaker) posted (Physical, Virtualized and Cloud) Security Automation – An API Example to his Rational Survivability blog on 6/7/2011:

The premise of my Commode Computing presentation was to reinforce that we desperately require automation in all aspects of “security” and should work toward leveraging APIs in stacks and products to enable not only control but also audit and compliance across physical and virtualized solutions.

There are numerous efforts underway that underscore both this need and the industry’s response to such. Platform providers (virtualization and cloud) are leading this charge given that much of their stacks rely upon automation to function and the ecosystem of third party solutions which provide value are following suit, also.

Most of the work exists around ensuring that the latest virtualized versions of products/solutions are API-enabled while the CLI/GUI-focused configuration of older products rely in many cases still on legacy management consoles or intermediary automation and orchestration “middlemen” to automate.

Here’s a great example of how one might utilize (Perl) scripting and RESTful APIs against VMware’s vShield Edge solution to provision, orchestrate and even audit firewall policies using their API. It’s a fantastic write-up from Richard Park of SourceFire (h/t to Davi Ottenheimer for the pointer):

Working with VMware vShield REST API in perl:

Here is an overview of how to use perl code to work with VMware’s vShield API.

vShield App and Edge are two security products offered by VMware. vShield Edge has a broad range of functionality such as firewall, VPN, load balancing, NAT, and DHCP. vShield App is a NIC-level firewall for virtual machines.

We’ll focus today on how to use the API to programmatically make firewall rule changes. Here are some of the things you can do with the API:

- List the current firewall ruleset

- Add new rules

- Get a list of past firewall revisions

- Revert back to a previous ruleset revision
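Richard's write-up uses Perl; the general shape of such calls can also be sketched in Python with only the standard library. Everything specific below is an assumption for illustration: the host name, the `RULES_PATH` placeholder (which is not the documented vShield endpoint), the credentials, the use of HTTP Basic authentication with an XML body, and the `build_request` helper itself. The requests are only constructed here, not sent.

```python
import base64
import urllib.request

BASE_URL = "https://vshield.example.com"           # hypothetical host
RULES_PATH = "/api/PLACEHOLDER/firewall/rules"      # placeholder, not a real endpoint


def build_request(method, path, user, password, body=None):
    """Build (but do not send) an authenticated REST request."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    headers = {"Authorization": f"Basic {token}"}   # assumption: Basic auth
    if body is not None:
        headers["Content-Type"] = "application/xml"  # assumption: XML payloads
    return urllib.request.Request(
        BASE_URL + path, data=body, headers=headers, method=method
    )


# List the current ruleset, then add a rule (the XML body is a placeholder).
list_rules = build_request("GET", RULES_PATH, "admin", "secret")
add_rule = build_request(
    "POST", RULES_PATH, "admin", "secret", body=b"<rule>...</rule>"
)

# urllib.request.urlopen(list_rules) would actually send the call.
print(list_rules.get_method(), list_rules.full_url)
print(add_rule.get_method(), add_rule.full_url)
```

Separating request construction from sending makes it easy to log or audit exactly what an automation script is about to change before it touches a firewall.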

Awesome post, Richard. Very useful. Thanks!

/Hoff

Related articles

- Can IPS Appliances Remain Useful in a Virtual-machine World? (pcworld.com)

- Clouds, WAFs, Messaging Buses and API Security… (rationalsurvivability.com)

- AWS’ New Networking Capabilities – Sucking Less (rationalsurvivability.com)

- Sourcefire Enables Application Control Within Virtual Environments (it-sideways.com)

- Using The Cloud To Manage The Cloud (informationweek.com)

- Virtualizing Your Appliance Is Not Cloud Security (securecloudreview.com)

- OpenFlow & SDN – Looking forward to SDNS: Software Defined Network Security (rationalsurvivability.com)

John Sawyer wrote the Strategy: Cloud Security Monitoring: How to Spot Trouble in the Cloud white paper for InformationWeek::Analytics on 6/5/2011 (premium subscription required):


How to Spot Trouble in the Cloud

Corporate decision makers are clamoring for the cloud because the benefits can be compelling: Economies of scale made possible by virtualization allow hosting providers to offer attractive savings over traditional data centers; on-demand services let companies throttle expenditures based on changing needs, such as busy retail seasons; new business initiatives can be spun up without heavy capital investment and IT resources.

All too often, however, security is an afterthought to cost savings, flexibility and convenience. The CISO and other security professionals may be the last to know they’re now responsible for sensitive data living in the cloud. Unfortunately, they’re also the ones left holding the bag when there’s a security incident. Why didn’t they prevent the exposure? Why didn’t the firewall they implemented protect against the attack? Why weren’t the systems being monitored?

The problem is that enterprises are flying blind unless they adapt their security monitoring, incident response and digital forensic policies and procedures to the cloud. In this report, we examine how the different cloud computing architectures impact visibility into IT operations and activities—especially security—and how to adapt enterprise practices to maintain a high level of security in cloud environments. (S2970611)

Table of Contents

Author’s Bio

Executive Summary

Rethinking Security Monitoring and Incident Response

Keys to Security Monitoring

Service Models: How *aaS Impacts Log Availability

The Software-as-a-Service Model

The Platform-as-a-Service Model

The Infrastructure-as-a-Service Model

Cloud Security Monitoring Options

Adapting Incident Response Needs

Choose Wisely

Related Reports

Figure 1: Primary Migration Drivers

Figure 2: Primary Reason for Not Using Cloud Services

Figure 3: Holding Cloud Service Providers Accountable for Security

Figure 4: Scope of Control Coverage by Cloud Service Type

Figure 5: Providers in Use

Figure 6: Security Tasks Requiring Most Resources

Figure 7: Trust in Cloud Provider Security and Technology Controls

About the Author

John H. Sawyer is a senior security engineer with the University of Florida, Gainesville and a Dark Reading, Network Computing and InformationWeek contributor and blogger. Sawyer’s current duties include network and Web application penetration testing, intrusion analysis, incident response and digital forensics. He was recently awarded a 2010 Superior Accomplishment Award from the University of Florida for his work as part of the UF Office of Information Security and Compliance.

Sawyer is a member of team 1@stplace, a small group of righteous hackers that won the electronic Capture the Flag computer hacking competition at DEFCON in Las Vegas in 2006 and 2007. His certifications include Certified Information Systems Security Professional and GIAC Certified Web Application Penetration Tester, Incident Handler, Firewall Analyst and Forensic Analyst. He is a member of the SANS Advisory Board and has spoken to numerous groups, including the Florida Department of Law Enforcement and Florida Association of Educational Data Systems (FAEDS), on network attacks, incident response and malware analysis.

He holds a Bachelor’s of Science in Decision and Information Science from the University of Florida.

Jay Heiser asked When Was Your Last Login? in a 6/7/2011 post to his Gartner blog:

Back in the days of modems and character-based terminals, it was a normal practice to provide information about the previous login as part of the login sequence. This was trivially easy to implement on old-fashioned Unix, but it quickly faded from practice as graphical user interfaces became the norm. Instead of continuing this cheap and effective security practice, the world has moved on. And more loss to the world.

Multiple security failures related to logins have come to light over the last several weeks. Google has reported that their sophisticated (but unspecified) mechanisms have detected misuse of several hundred phished accounts. After a period of dissembling and vague assurances, it has now been established that the theft of seed material from RSA was instrumental in enabling the attack against Lockheed, and the status of the remaining 40,000,000 SecurID tokens remains a matter of discussion. A decade after the first feeble attempts to slurp UBS PINs, Trojans such as Zeus are stealing login factors on an industrial scale. Ultimately, no degree of authentication strength can defeat man-in-the-middle attacks against compromised endpoints. So now what?

Gartner analyst Avivah Litan makes a strong case that providers need to step up their level of fraud detection. I don’t disagree with that, but I think in the interests of defense in depth, it’s time to give the user some ability to monitor the status of their own account, and expect them to do so. If users were provided with information about their previous login, or better yet, logins, they would be much better prepared to detect misuse of their accounts.

I just checked Yahoo, and I can’t find anything like that. I don’t see anything like that on Facebook, and LinkedIn doesn’t tell me when I last logged in. My Recent History on Amazon does show me what books I most recently looked at, but provides no information on my login history. Gartner’s workstations run on a very common commercial operating system that is constantly asking me for my password, but I don’t remember ever being provided info on my previous login. I’ve got over 100 different logins, and the only login history I’m aware of is what appears at the bottom of a gmail page. I can’t remember the last time that any system, corporate or personal, provided me with any sort of obvious message during the login sequence.

It’s time for the past to return to the present. Consumers and corporate users need to be able to defend themselves from a growing variety of increasingly simple attacks against their accounts. A cheap and simple way to enable normal people to detect some degree of account misuse would be to provide login history info, along with some explanation as to the purpose and value of reviewing it. Every login sequence on every system should pop up a message saying “You last logged into this account from IP address x.x.x.x and were logged in for a period of X”, along with a suggestion to change your password if you don’t think that’s when you last logged in.
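A last-login notice of the kind Jay describes takes very little code. The sketch below is one possible shape, not any vendor's implementation: the `last_login_notice` function, the structure of the `history` records and the sample values (including the documentation-range IP address) are all hypothetical.

```python
from datetime import datetime


def last_login_notice(history):
    """Format a notice about the most recent previous session.

    `history` is a hypothetical list of dicts with "ip", "login" and
    "logout" keys, oldest session first.
    """
    if not history:
        return "This is the first recorded login for this account."
    last = history[-1]
    minutes = int((last["logout"] - last["login"]).total_seconds() // 60)
    return (
        f"You last logged into this account from IP address {last['ip']} "
        f"on {last['login']:%Y-%m-%d %H:%M} and were logged in for a period "
        f"of {minutes} minutes. If that was not you, change your password."
    )


history = [
    {
        "ip": "203.0.113.7",
        "login": datetime(2011, 6, 6, 9, 0),
        "logout": datetime(2011, 6, 6, 9, 45),
    }
]
print(last_login_notice(history))
```

The hard part in practice is not formatting the message but keeping a trustworthy session log and showing the notice where users will actually read it.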


<Return to section navigation list>

Cloud Computing Events

GigaOM Events asked Over 700 People Will Be at Structure 2011. Will You Be There? in a 6/7/2011 email:

Structure 2011 is in two weeks. Register now -- we have fewer than 50 tickets left for sale!

In a recent post about the Structure 2011 LaunchPad finalists, GigaOM editor, Derrick Harris, commented:

One of the underlying themes of this year’s Structure conference is how cloud computing — as both a delivery model and a set of technologies — has matured to the point that we’re beyond arguing over whether it’s a good idea in general and instead are arguing over how to best implement it.

With over 32 sessions and 24 in-depth workshops, Structure 2011 will give you access to the people and the information to jump on the next wave of cloud technology and services:

- 11 keynote speakers and fireside chats featuring companies such as Facebook, Cisco, Amazon, AT&T and Verizon Business.

- Over 135 speakers in 55 sessions over 2 days.

- 24 workshops on topics such as hybrid clouds, datacenter, business issues and more.

- 11 LaunchPad companies.

- Over 5 hours of networking time.

- More than 100 top-tier press, including GigaOM editors, from outlets such as Information Week, Fortune, and CBS.

- 1 research report from GigaOM Pro on industry solutions for private clouds FREE with your registration

See the full schedule here.

As we ramp up for Structure 2011, we have a few related offers for you:

- If you are an early stage company looking for an affordable package with VIP benefits, then the Startup Bundle offer is for you. Sign up here.

- Join GigaOM Pro and Rackspace for a FREE webinar on The Open Cloud taking place on June 15 from 10:00 - 11:00am. Sign up here.

- What does the industry say about the Future of Cloud Computing? Take the survey, and get the results on June 22.

We hope you will join us this year. Register now to guarantee your spot.

Full disclosure: I have complimentary press credentials for Structure 2011.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Klint Finley (@Klintron) reported Ex-Google Engineer Says the Company's Software Infrastructure is Obsolete in a 6/7/2011 post to the ReadWriteCloud blog:

Yesterday former Google Wave engineer Dhanji R. Prasanna wrote on his blog about why he is leaving the company. It's an interesting look at Google's company culture, but there's also an interesting technical nugget in there. "Google's vaunted scalable software infrastructure is obsolete," Prasanna wrote. He emphasizes that the hardware infrastructure is still state of the art, "But the software stack on top of it is 10 years old, aging and designed for building search engines and crawlers."

Prasanna says software like BigTable and MapReduce are "ancient, creaking dinosaurs" compared to open source alternatives like Apache Hadoop.

Prasanna blames the state of Google's software stack on it being designed by "engineers in a vacuum, rather than by developers who have need of tools."

If true, this speaks to the strength of open source - or at least of well maintained open source projects. Open source software can be improved by a wide variety of stakeholders, but proprietary software will always be shielded from outside improvements. The open source alternatives have surpassed the proprietary versions that Google kept under lock and key, and Google isn't in a position to take advantage of the improvements made by the open source community without making some major infrastructural changes.

Also, if Prasanna's assessment is correct, it would support RedMonk's Stephen O'Grady's thesis that software infrastructure is no longer a competitive advantage. This is particularly relevant as Google markets its App Engine platform-as-a-service. The Register's Cade Metz recently wrote a long piece on Google App Engine as a means of accessing Google's infrastructure. Although the platform has made improvements in the past year, many developers have been unhappy with its restrictions.

Developers have been willing to accept the proprietary nature of the PaaS and its restrictions to access Google's infrastructure. But what if Google's infrastructure really isn't special? Cloud services powered by open services would then be even more desirable.

We've written before that "open" has won against proprietary, at least in rhetoric if not in practice. Thus far App Engine has bucked that trend. But for how much longer?

Cade Metz asked “The software scales. But will the Google rulebook?” in a deck for his five-page App Engine: Google's deepest secrets as a service analysis for The Register:

Google will never open source its back end. You'll never run the Google File System or Google MapReduce or Google BigTable on your own servers. Except on the rarest of occasions, the company won't even discuss the famously distributed software that underpins its sweeping collection of web services.

But if you like, you can still run your own applications atop GFS and MapReduce and BigTable. With Google App Engine – the "platform cloud" the company floated in the spring of 2008 – anyone can hoist code onto Google's live infrastructure. App Engine aims to share the company's distributed computing expertise by way of an online service.

"Google has built up this infrastructure – a lot of distributed software, internal processes – for building-out and scaling applications. We don't have the luxury of slowly ramping something up: when we launch something, we have to get it out there and scale it very, very quickly," Google App Engine product manager Sean Lynch recently told The Register.

"We decided we could take a lot of this infrastructure and expose it in a way that would let third-party developers use it – leverage the knowledge and techniques we have built up – to basically simplify the entire process of building their own web apps: building them, managing them once they're up there, and scaling them once they take off."

For some coders, it's an appealing proposition, not only because the Google back end has a reputation few others can match – a reputation fueled in part by the company's reluctance to discuss particulars – but also because App Engine completely removes the need to run your own infrastructure. As a platform cloud, App Engine goes several steps beyond an "infrastructure cloud" à la Amazon EC2. It doesn't give you raw virtual machines. It gives you APIs, and once you code to these APIs, the service takes care of the rest.

"When you're one guy trying to run a startup, you have to do absolutely everything. I've done everything from managing machines to writing code to marketing," says Jeff Schnitzer, a former senior engineer in EA's online games division who's now using App Engine to build an online-dating application. "That's one of the reasons why I've been drawn to App Engine. It eliminates entire classes of job descriptions, from sys admin to DBA."

The rub is that in order to benefit from this automation, you have to play by a strict Google rulebook. All applications must be built with Python, Java, or one of a handful of other supported languages, including Google's new-age Go programming language, which was just added to the list. And even within these languages, there are limits on the libraries and frameworks you can use, the way you handle data, and the duration of your processes.

Google is loosening some restrictions as the service matures. But inherently, App Engine requires a change of mindset. "You have to throw away a lot of what you know and basically write for Google's model: small instances, faster start, completely new data storage. It's a completely different beast," says Matt Cooper, a developer and entrepreneur who just recently started using the service. And because Google jealously guards the secrets of its infrastructure, anyone who builds an application atop App Engine will face additional hurdles if they ever decide to move the app elsewhere.

All of which makes Google App Engine a particularly fascinating case study. Much like in other markets, Google is promising you an added payoff if you seriously change the way you've done things in the past – and if you put a hefty amount of trust on its servers. Many have already embraced the proposition – App Engine serves more than a billion and a half page views a day, and 100,000 coders access the online console each month – but it's yet to be seen whether the service has a future in the mainstream.

We know that Google has a knack for scaling web applications. "Google employees are very good about not talking about their proprietary [infrastructure], but they do give you qualitative feel for it," says Dwight Merriman, cofounder of MongoDB, the distributed database that seeks to solve many of the same scaling issues as the Google back end. What we don't know is whether the Google ethos can scale into the enterprise.

Google believes it can. Lynch calls App Engine a "long-term business", and later this year, the service will exit a three-year beta period, brandishing new enterprise-centric terms of service. Proof of the service's viability, the company believes, lies in the track record of the Google infrastructure. "This is a service that's a tremendous differentiator for us, " says Lynch. "We can see it being valuable for other people to use it as well."


Cade is the US editor of The Register.

<Return to section navigation list>
