Windows Azure and Cloud Computing Posts for 6/6/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

The Windows Azure Team announced New Windows Azure Service Management API Features Ease Management of Storage Services on 6/6/2011:

The Windows Azure Service Management API enables Windows Azure customers to programmatically manage their deployments, hosted services, and storage accounts. We are pleased to announce the release of new Windows Azure Service Management API features that enable customers to manage the lifecycle of storage services, specifically to programmatically create, update, or delete storage services using the following new methods: Create Storage Account, Update Storage Account, and Delete Storage Account.

In addition, new versions of two existing Service Management API methods enable customers to obtain additional information about their deployments and subscriptions.

- The new version of the Get Deployment method returns the following additional information:

- Instance size, SDK version, input endpoint list, role name, VIP, port

- Update domain and fault domain of each role instance

- The new version of the List Subscription Operations method returns the following additional information: OperationStartedTime and OperationCompletedTime

- The request header to use the new versions of these methods is: “x-ms-version: 2011-06-01”
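As a rough illustration of how the version header is supplied, the sketch below builds (but does not send) a description of a Get Deployment request. The function name `buildGetDeploymentRequest`, the placeholder subscription ID and service name, and the URL layout are assumptions made for illustration; only the `x-ms-version: 2011-06-01` header comes from the announcement. A real call would also have to authenticate with a management certificate.

```javascript
// Hypothetical helper: describes a Get Deployment call without sending it.
// The URL layout below is an assumption; check the Service Management API
// reference before relying on it.
function buildGetDeploymentRequest(subscriptionId, serviceName, slot) {
  var base = "https://management.core.windows.net/";
  var path = [
    encodeURIComponent(subscriptionId),
    "services/hostedservices",
    encodeURIComponent(serviceName),
    "deploymentslots",
    encodeURIComponent(slot)
  ].join("/");
  return {
    method: "GET",
    url: base + path,
    // Opts in to the new response fields described in the announcement.
    headers: { "x-ms-version": "2011-06-01" }
  };
}

var request = buildGetDeploymentRequest("my-subscription-id", "myservice", "production");
console.log(request.method + " " + request.url);
console.log("x-ms-version: " + request.headers["x-ms-version"]);
```

Omitting the header, or sending an older version string, would presumably return the older response shape without the additional fields.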

Click here to read more about the Windows Azure Management API.


SQL Azure Database and Reporting

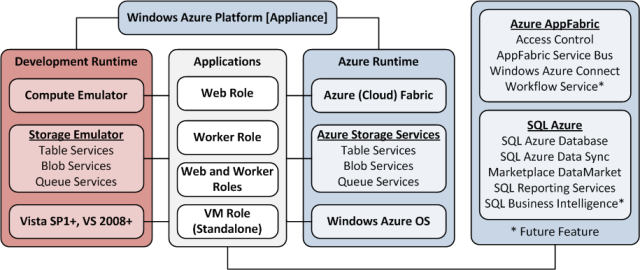

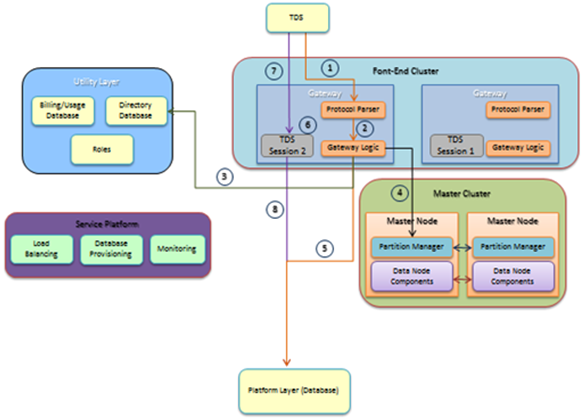

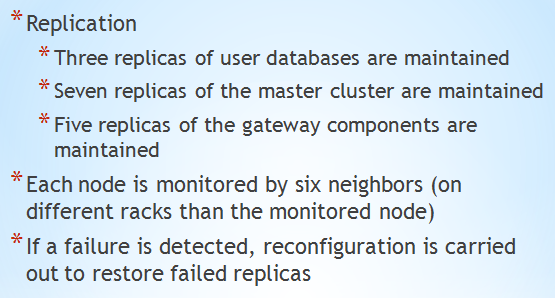

Prof. Munehiro Fukuda’s CSS 534 - Parallel Programming in Grid and Cloud course at the University of Washington – Bothell includes Tim and Piotr’s SQL Azure Database presentation with SQL Azure architectural details that aren’t available together in any other place that I’ve seen:

From slide 9: The Services Layer:

From slide 15: Data Redundancy:

Steve Yi described Integrating SQL Azure with SharePoint 2010 and Windows Azure in a 6/6/2011 post to the SQL Azure Team blog:

Steve Peschka wrote a great TechNet article a little while back about how to integrate SQL Azure with SharePoint 2010. He shares a few SQL Azure tips and tricks to help you incorporate it more quickly into new development projects. He provides detailed steps and instructions on how to integrate the two services, plus some additional reference links, which make this an engaging read.

Bruce Kyle reported a Special $150 Rebate for SQL Azure Core in June in a 6/6/2011 post to the US ISV Evangelism blog:

Receive a $150 rebate when you sign up for one 10 GB Database in SQL Azure. This works out to $74.95 per month and is a one-time promotional offer that represents 25% off of our normal consumption rates. It requires a 6 month commitment beginning in June.

How to take advantage of the offers:

- Visit http://www.windowsazure.com/offers and select the preferred offer.

- Complete the purchase process before June 30th.

- Visit http://www.azureusoffer.com/Default.aspx and submit your redemption request before July 15th.

- After validating the request, we will send the customer a check within 4-8 weeks.

To compare offers for Windows Azure and SQL Azure, see Windows Azure Pricing Calculator.

How to Get Started Using SQL Azure

See Getting Started with Windows Azure and SQL Azure. Free tools and SDK and a free trial offer.

For assistance with your Azure applications, join Microsoft Platform Ready.

Extended Offer Continues Too

For those who participated in the extended offer last month…

Q: What happened to the Windows Azure and SQL Azure Extended 2 Pack Offer?

A: We removed the offer and replaced it with an offer we believe will better address the needs of our customers and partners. The SQL Azure Core rebate will give customers and partners access to one 10GB database instance of SQL Azure.

Q: What will happen to those customers who bought 2 Windows Azure and SQL Azure Extended subscriptions?

A: If they made their purchases between April 1st and June 1st, they can still submit their redemption request. If the request complies with the Terms and Conditions of the offer, they will receive a check 5-8 weeks after their request.

Q: Can customers still buy Windows Azure and SQL Azure extended subscriptions?

A: Yes, customers can still go to the Azure offers page and buy Windows Azure and SQL Azure extended subscriptions. They will enjoy the 52% discount customers get when buying this subscription.

Learn What Other ISVs Are Doing on Windows Azure

For other videos about independent software vendors (ISVs) on Windows Azure, see:

- Accumulus Makes Subscription Billing Easy for Windows Azure

- Azure Email-Enables Lists, Low-Cost Storage for SharePoint

- Crowd-Sourcing Public Sector App for Windows Phone, Azure

- Food Buster Game Achieves Scalability with Windows Azure

- BI Solutions Join On-Premises To Windows Azure Using Star Analytics Command Center

- NewsGator Moves 3 Million Blog Posts Per Day on Azure

- How Quark Promote Hosts Multiple Tenants on Windows Azure


Marketplace DataMarket and OData

Marcelo Lopez Ruiz (@mlrdev) described Cool tricks with Internet Explorer Developer Tools and datajs in a 6/6/2011 post:

Today I want to show you how the Internet Explorer Developer Tools and datajs make it easy for developers to experiment with code and data. Just follow along in another Internet Explorer window and enjoy.

First, we'll want to start with a page, let's say http://www.bing.com/. As always, we're greeted with a nice background picture.

Next, we'll bring up the developer tools. Simply press F12 in your browser and the window will come up. Pin it by pressing Ctrl+P (or clicking the rightmost button on the menu area).

Now we'll check whether we have datajs loaded. Click on the Script tab, and type datajs at the prompt. A red message will be shown, "'datajs' is undefined". OK then, let's load it by pasting the following code at the prompt.

(function() {

var url = "http://download.codeplex.com/Project/Download/FileDownload.aspx?ProjectName=datajs&DownloadId=227462&FileTime=129470492005570000&Build=17889";

var scriptTag = document.createElement("SCRIPT");

scriptTag.setAttribute("type", "text/javascript");

scriptTag.setAttribute("src", url);

var h = document.getElementsByTagName("HEAD")[0];

h.appendChild(scriptTag);

})();

This pulls in datajs 0.0.3 from CodePlex. You'll note that the tool went into multi-line mode after pasting; you can go back to the single line by clicking on the 'Single line mode' button. 'Single line' is more convenient in that you can execute everything by pressing Enter, but of course it doesn't work well for multi-line snippets like the one above. If you run OData now, you'll get a few of the members displayed, and we're ready to go.
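Since the same loading pattern is useful for any script, it could be wrapped in a small helper. The following is a minimal sketch; the name `loadScript` and the choice to pass the document in as a parameter (so the function can also be tried outside a browser) are assumptions, not part of the original post.

```javascript
// Hypothetical helper wrapping the pattern used above: create a SCRIPT
// element, point it at a URL, and append it to the page's HEAD.
// The document is passed in so the function can be exercised with a stub.
function loadScript(doc, url) {
  var scriptTag = doc.createElement("SCRIPT");
  scriptTag.setAttribute("type", "text/javascript");
  scriptTag.setAttribute("src", url);
  doc.getElementsByTagName("HEAD")[0].appendChild(scriptTag);
  return scriptTag;
}

// In the F12 console you would pass the real document, for example:
// loadScript(document, "http://ajax.aspnetcdn.com/ajax/jQuery/jquery-1.6.1.min.js");
```

With a helper like this, loading another library becomes a one-line call that works in single line mode.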

Next, if we check whether jQuery is loaded like before, we'll see that it's not, so the following snippet will do the trick (we're really just changing the url value).

(function() {

var url = "http://ajax.aspnetcdn.com/ajax/jQuery/jquery-1.6.1.min.js";

var scriptTag = document.createElement("SCRIPT");

scriptTag.setAttribute("type", "text/javascript");

scriptTag.setAttribute("src", url);

var h = document.getElementsByTagName("HEAD")[0];

h.appendChild(scriptTag);

})();

Now we're ready to rock and roll. Let's get some sample data from the web and display it on the page.

(function() {

var url = "http://services.odata.org/Northwind/Northwind.svc/Customers";

OData.defaultHttpClient.enableJsonpCallback = true;

OData.read(url, function(data) {

var html = "<table>";

for (var i = 0; i < data.results.length; i++) {

html += "<tr>";

for (var element in data.results[i]) {

html += "<td>" + data.results[i][element] + "</td>";

}

html += "</tr>";

}

html += "</table>";

$(document.body).append(html);

});

})();

Of course, you can also use the developer tools to craft more interesting queries, submit changes, test / tweak scripts, extract and reshape some interesting page... There are lots of scenarios and uses for this very useful tool for web developers.
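As one example of a more interesting query, the sketch below composes a feed URL with the standard OData `$filter`, `$orderby` and `$top` query options. The helper name `buildODataUrl` and the particular filter are assumptions chosen for illustration; the feed URL is the Northwind sample used above.

```javascript
// Hypothetical helper: appends OData system query options to a feed URL.
// Keys are given without the leading "$", which the helper adds.
function buildODataUrl(feedUrl, options) {
  var parts = [];
  for (var name in options) {
    if (Object.prototype.hasOwnProperty.call(options, name)) {
      parts.push("$" + name + "=" + encodeURIComponent(options[name]));
    }
  }
  return parts.length ? feedUrl + "?" + parts.join("&") : feedUrl;
}

var queryUrl = buildODataUrl(
  "http://services.odata.org/Northwind/Northwind.svc/Customers",
  { filter: "Country eq 'Germany'", orderby: "CompanyName", top: 5 }
);
console.log(queryUrl);
// With datajs loaded, the result could be passed to OData.read(queryUrl, callback).
```

Keeping the query options in an object makes it easy to tweak one option at the console prompt and re-run the read.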

Rudi Grobler (@rudigrobler) asserted AgFx makes writing data-heavy Windows Phone applications child's play! in a 6/5/2011 post:

I am currently busy working on 3 Windows Phone applications which are all VERY data-heavy… And with each application, I have to worry about data caching! I started playing with AgFx and haven’t looked back! It’s a little hard to get started with, but once you understand its data-first view of the world, you can create applications VERY fast… and best of all? They are ROCK SOLID!!!

Before you read any further, read their introduction: Building a connected phone app with AgFx

Everything in the AgFx world revolves around data, and more specifically… data on the web! As long as you can get to it using a normal HttpWebRequest, you can use AgFx! It doesn’t matter if your data is RSS/ATOM, OData or raw XML, they all play nice with AgFx!

In my scenario, I wanted to fetch data from Twitter (ATOM), some RSS feeds (ATOM), custom OData feeds (from SQL Azure) and Flickr… and AgFx was the glue!

I will use Twitter in this example but it works EXACTLY the same for all the other sources… To start off with, we need a ViewModel that represents a tweet

public class TweetViewModel
{
    public string From { get; set; }
    public string Text { get; set; }
    public Uri Image { get; set; }
}

Next, we need a collection of tweets

[CachePolicy(CachePolicy.CacheThenRefresh, 60 * 15)]
public class TwitterViewModel : ModelItemBase<TwitterLoadContext>
{
    private BatchObservableCollection<TweetViewModel> tweets = new BatchObservableCollection<TweetViewModel>();

    public BatchObservableCollection<TweetViewModel> Tweets
    {
        get { return tweets; }
        set { tweets = value; RaisePropertyChanged("Tweets"); }
    }

    #region IDataLoader

    public class TwitterViewModelDataLoader : IDataLoader<TwitterLoadContext>
    {
        private const string TwitterSearchUriFormat = "http://search.twitter.com/search.atom?lang=en&q={0}";

        public LoadRequest GetLoadRequest(TwitterLoadContext loadContext, System.Type objectType)
        {
            string uri = String.Format(TwitterSearchUriFormat, loadContext.Terms, DateTime.Now.Ticks);
            return new WebLoadRequest(loadContext, new Uri(uri));
        }

        public object Deserialize(TwitterLoadContext loadContext, System.Type objectType, System.IO.Stream stream)
        {
            var document = XDocument.Load(stream);
            var feed = SyndicationFeed.Load(document.CreateReader());

            TwitterViewModel vm = new TwitterViewModel { LoadContext = loadContext };
            foreach (var item in feed.Items)
            {
                TweetViewModel tweet = new TweetViewModel
                {
                    From = item.Authors.FirstOrDefault().Name,
                    Text = item.Title.Text,
                    Image = (from _ in item.Links where _.MediaType == "image/png" select _).FirstOrDefault().Uri
                };
                vm.Tweets.Add(tweet);
            }
            return vm;
        }
    }

    #endregion
}

There's a lot here, so let’s break it down… First off, the CachePolicy attribute tells AgFx how it should handle this object. We want it to always show what's in the cache first and then, if the data is stale, refresh it. We also set the refresh interval to 15 minutes.
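To make the cache-then-refresh idea concrete, here is a minimal sketch of the behavior as described: serve whatever is cached immediately, and fetch fresh data only when the entry is missing or older than the refresh interval. This is not AgFx's implementation; `createCache`, `load`, the callback signatures and the injected clock are all hypothetical names chosen for illustration.

```javascript
// Sketch of a cache-then-refresh policy. All names here are hypothetical.
// "now" is a function returning the current time in milliseconds.
function createCache(maxAgeSeconds, now) {
  var entries = {}; // key -> { value, storedAt }

  return {
    // onValue is called with the cached value first (if there is one), and
    // again with fresh data when the entry is missing or has gone stale.
    load: function (key, fetch, onValue) {
      var entry = entries[key];
      if (entry) {
        onValue(entry.value, "cache");
      }
      var isStale = !entry || (now() - entry.storedAt) / 1000 >= maxAgeSeconds;
      if (isStale) {
        fetch(key, function (fresh) {
          entries[key] = { value: fresh, storedAt: now() };
          onValue(fresh, "refresh");
        });
      }
    }
  };
}

// 60 * 15 seconds mirrors the 15-minute interval passed to the attribute.
var tweetCache = createCache(60 * 15, Date.now);
tweetCache.load(
  "twitter-UFC",
  function (key, done) { done(["(tweets fetched for " + key + ")"]); },
  function (value, source) { console.log(source + ": " + value.join(", ")); }
);
```

The caller can be notified twice for one load, once from the cache and once after the refresh, which is why a view model that raises change notifications fits this pattern well.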

Next is the ModelItemBase<> that your ViewModel must derive from… This is pretty standard in the MVVM world… ModelItemBase implements INotifyPropertyChanged, etc… The interesting part though is the TwitterLoadContext! This is used to uniquely identify this specific instance of TwitterViewModel… Since our TwitterViewModel is a search for specific terms, I’ll use the terms as my unique identifier!

public class TwitterLoadContext : LoadContext
{
    public string Terms { get; set; }

    public TwitterLoadContext(string terms)
        : base("twitter-" + terms)
    {
        Terms = terms;
    }
}

I’ll just pass in the terms to the constructor… I prefix the terms with the word twitter because in my scenario I might search for the same term across various sources, so I wanted to be able to identify which source is linked to which query! Next is the collection of tweets… I am using a custom implementation of ObservableCollection<> called a BatchObservableCollection that is a little lighter on notifications!

Lastly, each ViewModel must also tell AgFx how to fetch the data… This is done by creating a public nested class that implements IDataLoader!

The GetLoadRequest method needs to know the uri of your request, and the result of this web request is passed to the Deserialize method as a stream! In the Deserialize method, you can read the stream and create a new TwitterViewModel! In this sample I am using the SyndicationFeed (System.ServiceModel.Syndication.dll) to deserialize the ATOM feed but I could have easily replaced this with an XLinq implementation!

That's all the plumbing you need; all that is left to do is actually fetch the data…

TwitterViewModel Twitter = DataManager.Current.Load<TwitterViewModel>(new TwitterLoadContext("UFC"));

And that’s it! This will now magically cache everything and, if needed, go and fetch it in the background!

PS. I will be releasing the source of all 3 of the apps I am working on so I should have more samples soon!

AgFx is available from CodePlex under the Apache License 2.0.

Webnodes announced Full OData support for its Webnodes CMS product on 6/6/2011:

Webnodes CMS has a custom OData provider built-in that exposes the content in the system as OData feeds. This opens up a lot of opportunities!

Expose your data once for all integrations

OData makes it possible to expose your data once, and interested parties can then use that OData feed to integrate your data into their application. You don't even need to be involved.

If you need detailed control over who gets access to what, the OData implementation in Webnodes makes it possible to create multiple feeds, where each feed has access to different data sets.

Perfect for mobile applications

Mobile phones and tablets are quickly becoming the most common way to access web-based services. By exposing your website data as OData, it's very easy to use your website content in apps for popular smartphone platforms like iOS (iPhone and iPad), Android and Windows Phone 7.

Realtime website data in Excel

One of the most exciting uses for OData is the support for loading OData directly into Excel using the free PowerPivot plugin from Microsoft. Just specify the url to an OData feed, and Excel can load the data from the specified url. The content can then be processed and visualized further in Excel by anyone familiar with Excel. No developers need to be involved at all.

This opens up a wide range of possible uses, from deep analysis of e-commerce sales to demographic statistics about users of the website.

Multiplatform

There are client libraries available for most programming languages to work with OData, including Java, PHP, .NET, Ruby, Objective-C (iPhone etc) and JavaScript, which means that there are no restrictions on who can integrate with your data.

Easy to use for developers

OData uses AtomPub and JSON for exposing the data. Both of those formats are widely used on the web in other situations today, so the barrier to adopting OData for developers is very low.


Windows Azure AppFabric: Access Control, WIF and Service Bus

Sam Vanhoutte (@SamVanhoutte) posted Sending large messages to AppFabric session-enabled queues in a 6/5/2011 post to his CODit blog:

In the recent CTP of Windows Azure AppFabric, we can see a lot of new rich-messaging features. In my previous blog post, I blogged about the publish-subscribe features. Another interesting feature is the use of sessions when sending messages to a queue. And that’s what this post is about.

We will demonstrate how to send large messages in an atomic batch of chunks over a queue.

The new queues

The new AppFabric Service Bus queues are much richer in functionality and features than the V1-message buffers or the Windows Azure storage queues. A list of the biggest differences:

- Reliable & durable storage (no limit on the time to live, or TTL).

- The maximum size of a queue is 1 GB (100 MB in the CTP version).

- A message can measure up to 256 KB.

- Different messaging APIs are available: REST, .NET client and WCF bindings.

- Transactional support in sending messages to a queue.

- De-duplication of messages.

- Deferring of messages.

As you can see, the message size limit is 256 KB, which is much more than the storage queue limit, but may still not be enough in certain cases. And that is where sessions come into the picture.

Session concept

The concept of sessions allows receiving messages that belong to a certain logical group all by the same receiver. This is done by specifying a SessionId on a message. Receivers can listen on a specific session, or can lock the session for their usage on a first come first served basis.

Sessions can also be used to implement Request-Reply patterns over a queue. But more on that can be expected in a future blog post.

Creating a session-enabled queue

By default, queues are created session-less. Sessions can only be used on queues that have session support enabled. The following code extract shows how to create a queue with sessions enabled. (Notice the use of the ServiceBusNamespaceClient object. This is the object you’ll always use in administrative operations.)

sbClient.CreateQueue("qName", new QueueDescription { RequiresSession = true });

In this way, the queue is created with support for sessions.

Sending messages to the queue

The only specific thing that is needed to send messages in a session to a queue, is to define the SessionId property on the specific message. In this case, I also write a value to the message properties to indicate that the last message of a session is being sent.

var message = BrokeredMessage.CreateMessage(msgContent);
message.SessionId = "MySessionId";
message.MessageId = "MyMessageId";
if (isLastMessage)
{
    message.Properties["LastMessageInSession"] = true;
}

Receiving messages from a session

The specific thing on receiving messages in a session, is to use a SessionReceiver. This receiver will make sure that all messages it receives will belong to the same session. It is possible to listen on a specific session (by passing in the session name) and to specify a session timeout.

QueueClient queueClient = msgFactory.CreateQueueClient("LargeFileQueue");
SessionReceiver sessionReceiver = queueClient.AcceptSessionReceiver();

// Declared up front so it can be passed as the out parameter below.
BrokeredMessage receivedMessage;
while (sessionReceiver.TryReceive(TimeSpan.FromSeconds(10), out receivedMessage))
{
    Console.WriteLine("Message received in session " + receivedMessage.SessionId);
    if (receivedMessage.Properties.ContainsKey("LastMessageInSession"))
    {
        Console.WriteLine("Last message of session received");
        break;
    }
}

In the sample above, I loop until I receive the ‘last message in the session’.

The sample: Sending large messages in chunks to a session

In the sample that I uploaded, I send large messages in chunks to a session-enabled queue. Using multiple receivers, I am guaranteed that each file will only be received by exactly one receiver.

Functionality

The sample contains a sender in which you specify a directory; all files in that directory are picked up and submitted (in parallel) to a session-enabled queue if the checkbox is checked. Otherwise they are sent to a session-less queue, to demonstrate that messages are then received in random order.

The receiver is a console app that listens for incoming messages based on the session. You can start up multiple receivers to see that each receiver receives the messages of its own session.

These are the most important design steps I took:

Sending messages

- In the sample, I am using a Parallel.ForEach to loop over a bunch of files and send them in parallel. This way, I am sure that messages won’t arrive in sequence on the queue.

- Each large message is being sent in a transaction to the queue.

- On every message, I use the file name as the session id.

- On the last message in the batch, I write the ‘LastMessageInSession’ property to make this visible to the receiver.
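The sending steps above can be sketched in a few lines. This is not the code from the sample (which is .NET); it only illustrates the chunking idea. The function name, the `sequence` field and the plain-object message shape are hypothetical, and the chunk size ignores any header overhead that would count against the real per-message limit.

```javascript
// Hypothetical sketch: split a payload into chunks that each fit in one
// message, tagging every chunk with the same session id.
var MAX_CHUNK_BYTES = 256 * 1024; // per-message limit quoted above; headers ignored

function splitIntoSessionMessages(sessionId, payload, chunkSize) {
  var messages = [];
  for (var offset = 0; offset < payload.length; offset += chunkSize) {
    messages.push({
      sessionId: sessionId,          // the file name in the sample
      sequence: messages.length,     // illustrative only
      body: payload.slice(offset, offset + chunkSize),
      properties: {}
    });
  }
  if (messages.length > 0) {
    // Mark the final chunk so the receiver knows the session is complete.
    messages[messages.length - 1].properties.LastMessageInSession = true;
  }
  return messages;
}

var file = new Uint8Array(600 * 1024); // stand-in for a 600 KB file
var chunks = splitIntoSessionMessages("bigfile.bin", file, MAX_CHUNK_BYTES);
console.log(chunks.length + " messages"); // 3 messages
```

In practice the chunk size would need to be somewhat below the message limit to leave room for properties and headers.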

Receiving messages

- I am using the PeekLock receive method to make sure I only remove the messages from the queue, when the full session has been received.

- I receive the messages in a TransactionScope. This makes sure that the session itself is rolled back in case of an exception.
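The receiving idea, holding on to the chunks until the whole session has arrived, can be illustrated with a small in-memory assembler. This is a simplified sketch and not the sample's code: the names are hypothetical, bodies are plain strings, chunks of a session are assumed to arrive in order, and the peek-lock and transaction handling described above are left out.

```javascript
// Hypothetical sketch of the receiving side: buffer the chunks of each
// session and hand back the full payload only once the last chunk arrives.
function createSessionAssembler() {
  var pending = {}; // sessionId -> array of chunk bodies received so far

  return {
    // Returns the reassembled payload when the session is complete,
    // otherwise null.
    accept: function (message) {
      var chunks = pending[message.sessionId] || (pending[message.sessionId] = []);
      chunks.push(message.body);
      if (message.properties && message.properties.LastMessageInSession) {
        delete pending[message.sessionId];
        return chunks.join("");
      }
      return null;
    }
  };
}

var assembler = createSessionAssembler();
var incoming = [
  { sessionId: "a.txt", body: "Hel", properties: {} },
  { sessionId: "b.txt", body: "12", properties: {} },
  { sessionId: "a.txt", body: "lo", properties: { LastMessageInSession: true } },
  { sessionId: "b.txt", body: "34", properties: { LastMessageInSession: true } }
];
incoming.forEach(function (m) {
  var payload = assembler.accept(m);
  if (payload !== null) console.log(m.sessionId + " complete: " + payload);
});
```

With a real session receiver the grouping by session id is done by the queue, so each receiver would only ever see the chunks of the session it has locked.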

The code of this sample can be found here.

Conclusions

This post showed another great messaging feature that comes with the AppFabric Service Bus enhancements. We explored sessions as a way to route a group of messages to the same receiver. The scenario in this post demonstrated that we can split large messages into smaller chunks and still handle them as one atomic batch.

Keith Bauer posted Understanding the Windows Azure AppFabric Service Bus [QuotaExceededException] (Updated) to the AppFabricCAT blog on 6/6/2011:

In a previous blog post I discussed the undocumented connection quotas of the Azure AppFabric Service Bus and the, often times, unexpected QuotaExceededException. Well, I am happy to inform you that our Service Bus team has listened to your concerns and we have recently increased the default quota across the board to 2000 concurrent connections, regardless of your connection pack size!

Overview

Previously, solutions that leveraged the Service Bus were subjected to quotas which ranged from 50 concurrent connections to 825 concurrent connections, depending upon the connection pack size purchased (e.g., pay-as-you-go, 5, 25, 100, or 500). Now, the connection pack size no longer determines your maximum quota. In fact, all connection packs, and even the pay-as-you-go model, now default to 2000 concurrent connections.

Figure 1 represents the current quotas by connection pack size.

Figure 1 – Quotas by Connection Pack Size

Some Things Haven’t Changed

It is important to understand that if your solution exceeds this new connection quota, you will still receive a QuotaExceededException; however, you should not receive this as often as in the past since the limit has been increased to 2000. In addition, the new quota is now publicized in this MSDN page so there should be no confusion as to why you are receiving this.

Also, as in the past, if your solution needs more concurrent connections than the current quota limit, then you can still work with our Business Desk to request a “custom” quota. You can request this by calling the phone number appropriate for your region, which can be found by completing the questions at our Microsoft Support for Windows Azure site.

Resources and References

Windows Azure AppFabric Service Bus Quotas (MSDN documentation)


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Avkash Chauhan reported the availability of the Azure VM Assistant (AzureVMAssist) : Windows Azure VM Information, Investigation and Diagnostics Utility on 6/5/2011:

Azure VM Assistant (AzureVMAssist) is a utility that runs inside an Azure VM and provides important information about the VM environment, role-specific details, health information, and so on. It also helps VM users perform several tasks faster and is designed for day-to-day work on an Azure VM. Even though Azure VMs are not designed for day-to-day work, this utility can help in many ways and provides specific details with minimal effort.

Download it from: http://azurevmassist.codeplex.com/releases/

Current Version 1.0.0.4 (Version 1.0.0.5 is in test)

The current version has the following functions (to learn more about each function, see the documentation section):

- Launch Pad: Launch Pad provides information about processes and services, and helps with common VM activities

- VM Config: VM Config provides information about Web and Worker Role Configuration

- VM Health: VM Health provides VM health status information for the time the VM has been running

- VM Info: VM Info checks different configuration settings in your VM and provides consolidated information

- Event Log: Looks in the Application event log for errors and warnings and offers suggestions for resolving errors

- Storage Access: Access Windows Azure Storage to upload and download blobs

- VHD Mount: Mount a VHD from Azure Storage to access contents directly on VHD inside VM

- CMD Prompt: Launch Command Prompt within tabs

- Scratch Pad: Scratch pad is to collect random data from Azure VM and then upload to Azure Storage

- Information: Launch Help documents, Check new updates.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

No significant articles today.


Visual Studio LightSwitch

Michael Washington (@ADefWebServer) announced LightSwitch Tips Forum Has Been Launched in a 6/5/2011 post:

Do you ever come up with a cool new thing you learned about LightSwitch, and you want to share it?

Now you can simply post it to the new LightSwitchHelpWebsite.com “LightSwitch Tips Forum” located at:

http://lightswitchhelpwebsite.com/Forum/tabid/63/aff/19/Default.aspx

It allows you to upload photos of your tip.

When you use the Format Code Block…

… it formats your code nicely.

It even has an RSS feed:


Windows Azure Infrastructure and DevOps

Neil MacKenzie (@mknz) described AzureWatch – Autoscaling a Windows Azure Hosted Service in a 6/6/2011 post:

The desire for cost-efficient hosting of services provides the impetus for moving services to the cloud. An important driver of that cost-efficiency is the ease with which hosted services can be scaled elastically – up and down – so that service capacity more closely matches service demand.

Windows Azure hosted services are scaled by modifying the instance count of a role (or roles) in the service configuration file. This can be done manually on the Windows Azure Portal which supports either the in-place editing or the uploading of a new service configuration file. The Windows Azure Service Management REST API also exposes operations allowing the service configuration to be replaced with a new version containing different instance counts for the roles.
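To show what "modifying the instance count" amounts to, the sketch below rewrites the count for one role in a simplified service configuration document. The helper name and the trimmed-down XML are assumptions for illustration (a real .cscfg file also carries a namespace and settings), and a production tool would use an XML parser rather than a regular expression.

```javascript
// Hypothetical helper: returns a copy of a service configuration document
// with the instance count of one role changed. Assumes the role contains
// an <Instances count="..."/> element.
function setInstanceCount(configXml, roleName, count) {
  var escapedName = roleName.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
  var pattern = new RegExp(
    '(<Role\\s+name="' + escapedName + '"[^>]*>[\\s\\S]*?<Instances\\s+count=")\\d+(")'
  );
  if (!pattern.test(configXml)) {
    throw new Error("Role not found: " + roleName);
  }
  return configXml.replace(pattern, function (match, before, after) {
    return before + count + after;
  });
}

var config =
  '<ServiceConfiguration serviceName="Demo">' +
  '<Role name="WebRole1"><Instances count="2" /></Role>' +
  '<Role name="WorkerRole1"><Instances count="1" /></Role>' +
  '</ServiceConfiguration>';

console.log(setInstanceCount(config, "WorkerRole1", 4));
```

The resulting document is what would be pasted into the portal or submitted through the Service Management REST API.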

The Service Management REST API uses X.509 certificates for authentication. This requires that a (self-signed) X.509 certificate be created and uploaded as a management certificate to the Windows Azure Portal. Unlike the service certificates used to provide SSL capability for a hosted service, management certificates are associated with the subscription not an individual hosted service. They are not usually deployed to the hosted service. The reason for this is that the Service Management REST API has visibility across all the hosted services and storage accounts associated with the subscription.

The Windows Azure team created a set of Windows Azure Platform PowerShell cmdlets which allow various Service Management REST API operations to be invoked directly from PowerShell. Cerebrata has released its Azure Management Cmdlets which implement a more extensive set of Service Management REST API operations. Since both sets of cmdlets use the Service Management REST API, they also authenticate using a management certificate, which must be provided with every PowerShell cmdlet invoked.

The ability to modify the instance count of a role is a necessary but not sufficient requirement for achieving cost-efficiency for a hosted service. It is also important to ensure that the number of deployed instances matches the number of instances required to satisfy demand. Consequently, it is important to monitor that demand so that the appropriate number of instances can be deployed. Furthermore, since it takes about 10 minutes to add instances it is important that likely demand is taken into account when choosing the appropriate number of instances.

The reality is that it is much easier to modify an instance count than it is to know what that instance count should actually be. There are many variables affecting demand, including time of the day or the day of the week. The hosted service may be undergoing rapid growth or even, alas, slow decay.

The Windows Azure Diagnostics API supports the capture of performance counter data from each instance and its persistence to Windows Azure Storage. This data can be analyzed to provide a retrospective quantification of service demand. The Windows Azure Diagnostics API supports the remote management of Windows Azure Diagnostics, so that the performance counters being captured can be modified to provide additional visibility into a hosted service.

It is not particularly difficult to capture performance counter data, and it is not particularly difficult to modify the service configuration. However, it requires some analysis to use historic performance counter data along with predictions of future service demand to choose the appropriate number of role instances to run at any particular time. Since this is not likely to be a core feature of any hosted service it makes sense to outsource this to a third party service focused specifically on autoscaling a hosted service.

AzureWatch

Paraleap Technologies has released AzureWatch which it promotes as elasticity-as-a-service for Windows Azure. AzureWatch supports the elastic scaling of a Windows Azure hosted service entirely through configuration with no code change required – as long as Windows Azure Diagnostics has been configured for the hosted service. Brian Prince (@brianhprince) has a nice demonstration of AzureWatch in his Tech Ed 11 presentation on Ten Must-Have Tools for Windows Azure.

AzureWatch is billed at 1.375 cents per instance hour, which comes to $0.33 per instance per day. It also has a free introductory offer of 14 days or 500 hours, whichever lasts longer.

AzureWatch uses the Windows Azure Diagnostics API to manage the performance counters captured and persisted to Windows Azure Storage. It monitors that data periodically and uses it to select an appropriate instance count for each role. AzureWatch then uses the Service Management REST API to set the instance counts to the appropriate values. Consequently, the AzureWatch monitoring service must be configured with the subscription ID containing the hosted service, a management certificate for that subscription, and the storage account to which the performance counter data is persisted. AzureWatch can create a management certificate as part of its initial configuration, but this certificate must be uploaded manually through the Windows Azure Portal. Note that AzureWatch monitors and scales only those roles which have been configured to use Windows Azure Diagnostics.

AzureWatch comprises the AzureWatch Monitoring Service and the AzureWatch Control Panel. The Monitoring Service can be run locally, though Paraleap recommends that it hosts the service remotely. The Control Panel is used to control the Monitoring Service and to configure the rules used to scale the hosted service.

The Control Panel is used to configure a set of Raw Metrics for each hosted service role to be monitored. These raw metrics can include any performance counter – including custom counters – as well as the message count for a queue. AzureWatch also creates raw metrics out of various system metrics such as the instance count in various states (e.g. ready, stopped and busy).

The raw metrics are used to configure a set of Aggregated Metrics, each of which represents some computed value for a raw metric over a period of time. These computations are:

- average

- total

- minimum

- maximum

- latest

The time period is expressed in minutes up to 30 days. For example, an aggregated value can be calculated for the average CPU usage over 30 minutes. Each aggregated value has a unique name. The same raw metric can be used multiple times with different computations so that, for example, there can be aggregated values for both the minimum and maximum CPU use over the same or even different periods of time.
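To make the aggregation step concrete, here is a minimal Python sketch of how a raw metric might be reduced to an aggregated value over a trailing time window. It is not AzureWatch code: the `Sample` type, the `aggregate` function and the sample readings are hypothetical names and data used only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass(frozen=True)
class Sample:
    """One raw metric reading (hypothetical type, not an AzureWatch API)."""
    timestamp: datetime
    value: float


# The five computations listed above, keyed by name.
AGGREGATIONS = {
    "average": mean,
    "total": sum,
    "minimum": min,
    "maximum": max,
    "latest": lambda values: values[-1],
}


def aggregate(samples, computation, window_minutes, now):
    """Reduce the samples inside the trailing window to a single value."""
    cutoff = now - timedelta(minutes=window_minutes)
    ordered = sorted(samples, key=lambda s: s.timestamp)
    values = [s.value for s in ordered if cutoff < s.timestamp <= now]
    if not values:
        return None  # no data fell inside the window
    return AGGREGATIONS[computation](values)


now = datetime(2011, 6, 6, 12, 0)
cpu = [Sample(now - timedelta(minutes=age), value)
       for age, value in [(40, 95.0), (25, 60.0), (15, 80.0), (5, 70.0)]]

# The same raw metric feeds two differently named aggregated values.
avg_cpu_30 = aggregate(cpu, "average", 30, now)  # the 40-minute-old sample is excluded
max_cpu_30 = aggregate(cpu, "maximum", 30, now)
```

Keeping the computation as a lookup table means the same raw metric can back any number of aggregated values simply by varying the computation name and the window length.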

The aggregated values are then used to define a set of Rules used to configure the autoscaling. A rule consists of a Boolean formula using the aggregated values. For example:

CPUTime > 70

where CPUTime might be an aggregated value for the average CPU time over the last 30 minutes.

The rules are evaluated sequentially each minute and if a rule evaluates to true the configured action is invoked. These actions are:

- scale the instance count up or down by a specified number of instances

- set the instance count to a specified number of instances

- do nothing

Furthermore, the Monitoring Service can be configured to send an email notification when a rule evaluates to true. This is useful when trying out AzureWatch since an email notification can be sent instead of actually increasing the instance count.

A rule can be configured to be invoked only during part of the day, and/or part of an hour. Additionally, to prevent a rule from being satisfied too often it can be disabled for some period of time after it has evaluated to true. AzureWatch also supports hard upper and lower instance counts for each role. This is useful for avoiding surprises if a rule is misconfigured. Note that Windows Azure itself also implements a soft quota of 20 instances per subscription.
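The rule mechanics described above (sequential evaluation, an action per rule, a cool-down period and hard instance limits) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not AzureWatch's implementation: the `Rule` class and `evaluate` function are hypothetical, and the sketch assumes that the first rule that evaluates to true wins for a given pass.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Rule:
    """Hypothetical rule record; field names are illustrative only."""
    name: str
    condition: Callable[[Dict[str, float]], bool]  # Boolean formula over aggregated values
    action: str                # "scale_by", "set_to" or "none"
    amount: int = 0
    cooldown_minutes: int = 0
    disabled_until: int = 0    # minute at which the rule becomes active again


def evaluate(rules: List[Rule], metrics: Dict[str, float], current: int,
             minute: int, lower: int, upper: int) -> int:
    """Return the instance count after one evaluation pass."""
    for rule in rules:
        if minute < rule.disabled_until or not rule.condition(metrics):
            continue
        # Disable the rule for its cool-down period once it has fired.
        rule.disabled_until = minute + rule.cooldown_minutes
        if rule.action == "scale_by":
            target = current + rule.amount
        elif rule.action == "set_to":
            target = rule.amount
        else:
            target = current  # "do nothing"
        # Hard limits guard against a misconfigured rule.
        return max(lower, min(upper, target))
    return current


rules = [
    Rule("CPUTime Rule", lambda m: m["CPUTime"] > 70, "scale_by", 1, cooldown_minutes=20),
    Rule("Idle Rule", lambda m: m["CPUTime"] < 20, "scale_by", -1),
]

count = evaluate(rules, {"CPUTime": 78.9}, current=1, minute=0, lower=1, upper=4)
count_in_cooldown = evaluate(rules, {"CPUTime": 90.0}, current=count, minute=5,
                             lower=1, upper=4)
```

In this sketch the second call leaves the count unchanged because the scale-up rule is still inside its cool-down, which is the behaviour the paragraph above describes for preventing a rule from being satisfied too often.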

AzureWatch contains some beta functionality in which it accesses a specified web page in a hosted service and retrieves a payload in a simple XML format. This can be used to specify metrics other than those in performance counters. Once an External Feed has been configured, the metrics specified in it are added to the list of raw metrics where they can be used similarly to the other raw metrics.

Once the configuration of raw metrics, aggregated metrics and rules has been completed the configuration can be published to the Monitoring Service so that monitoring can commence. The Monitoring Service ensures that any required changes are made to the Windows Azure Diagnostics configuration. It then initiates the periodic evaluation of the rules – and autoscaling is ready to go.

Having done all that, I thought it was pretty cool to receive an email with the following:

Scaling action was successful

Rule CPUTime Rule triggered myservice\Staging\WorkerRole1 to perform scale action: ‘Scale up by’

From instance count of 1 to 2

Rule formula: CPUTime > 70

All known parameter values:

CPUTime: 78.9118115714286;

CurrentInstanceCount: 1;

And this having done nothing more than configure AzureWatch. No working out how to download the performance counters from Windows Azure Storage. No working out averages over time of the CPU performance counter. No working out how to download the service configuration. No working out how to modify the instance count for a role. No working out how to upload the service configuration to Windows Azure. Autoscaling is not something you need to implement yourself.
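For a sense of what one of those manual steps involves, the following Python sketch changes the instance count for a role in a service configuration document. It covers only the XML edit; downloading and uploading the configuration through the Service Management REST API is not shown. The sample document and the `set_instance_count` helper are hypothetical, and the sketch assumes the usual `ServiceConfiguration` layout of `Role` elements containing an `Instances` element with a `count` attribute.

```python
import xml.etree.ElementTree as ET

# Namespace used by Windows Azure service configuration (.cscfg) files.
NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"

# Hypothetical, heavily trimmed configuration used only for this example.
SAMPLE_CSCFG = f"""<ServiceConfiguration serviceName="myservice" xmlns="{NS}">
  <Role name="WorkerRole1">
    <Instances count="1" />
  </Role>
</ServiceConfiguration>"""


def set_instance_count(cscfg_xml: str, role_name: str, count: int) -> str:
    """Return the configuration with the named role's instance count changed."""
    ET.register_namespace("", NS)  # keep the default namespace on output
    root = ET.fromstring(cscfg_xml)
    for role in root.findall(f"{{{NS}}}Role"):
        if role.get("name") == role_name:
            role.find(f"{{{NS}}}Instances").set("count", str(count))
            return ET.tostring(root, encoding="unicode")
    raise KeyError(f"role {role_name!r} not found")


updated = set_instance_count(SAMPLE_CSCFG, "WorkerRole1", 2)
```

Even this small piece needs namespace handling and error checking, which helps explain the appeal of a service that does the whole cycle from configuration alone.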

If you are interested in autoscaling hosted services in Windows Azure you should look at AzureWatch.

Microsoft should provide its own autoscaling feature for Windows Azure. Auto-sharding with SQL Azure Federations to provide relational database autoscaling is in a private CTP now. See my Build Big-Data Apps in SQL Azure with Federation cover story for the March 2011 issue of Visual Studio Magazine.

Lori MacVittie (@lmacvittie) asserted “The choice of load balancing algorithms can directly impact – for good or ill – the performance, behavior and capacity of applications. Beware making incompatible choices in architecture and algorithms” in the introduction to her Load Balancing Fu: Beware the Algorithm and Sticky Sessions post of 6/6/2011:

One of the most persistent issues encountered when deploying applications in scalable architectures involves sessions and the need for persistence-based (a.k.a. sticky) load balancing services to maintain state for the duration of an end-user’s session. It is common enough that even the rudimentary load balancing services offered by cloud computing providers such as Amazon include the option to enable persistence-based load balancing. While the use of persistence addresses the problem of maintaining session state, it introduces other operational issues that must also be addressed to ensure consistent operational behavior of load balancing services.

In particular, the use of the Round Robin load balancing algorithm in conjunction with persistence-based load balancing should be discouraged if not outright disallowed.

ROUND ROBIN + PERSISTENCE –> POTENTIALLY UNEQUAL DISTRIBUTION of LOAD

When scaling applications there are two primary concerns: concurrent user capacity and performance. These two concerns are interrelated in that as capacity is consumed, performance degrades. This is particularly true of applications storing state as each request requires that the application server perform a lookup to retrieve the user session. The more sessions stored, the longer it takes to find and retrieve the session. The exact efficiency of such lookups is determined by the underlying storage data structure and algorithm used to search the structure for the appropriate session. If you remember your undergraduate classes in data structures and computing Big (O) you’ll remember that some structures scale more efficiently in terms of performance than do others. The general rule of thumb, however, is that the more data stored, the longer the lookup. Only the amount of degradation is variable based on the efficiency of the algorithms used. Therefore, the more sessions in use on an application server instance, the poorer the performance. This is one of the reasons you want to choose a load balancing algorithm that evenly distributes load across all instances and ultimately why lots of little web servers scaled out offer better performance than a few, scaled up web servers.

Now, when you apply persistence to the load balancing equation it essentially interrupts the normal operation of the algorithm, ignoring it. That’s the way it’s supposed to work: the algorithm essentially applies only to requests until a server-side session (state) is established and thereafter (when the session has been created) you want the end-user to interact with the same server to ensure consistent and expected application behavior. For example, consider this solution note for BIG-IP. Note that this is true of all load balancing services:

A persistence profile allows a returning client to connect directly to the server to which it last connected. In some cases, assigning a persistence profile to a virtual server can create the appearance that the BIG-IP system is incorrectly distributing more requests to a particular server. However, when you enable a persistence profile for a virtual server, a returning client is allowed to bypass the load balancing method and connect directly to the pool member. As a result, the traffic load across pool members may be uneven, especially if the persistence profile is configured with a high timeout value.

-- Causes of Uneven Traffic Distribution Across BIG-IP Pool Members

So far so good. The problem with round robin – and the reason I’m picking on Round Robin specifically – is that round robin is pretty, well, dumb in its decision making. It doesn’t factor anything into its decision regarding which instance gets the next request. It’s as simple as “next in line,” period. Depending on the number of users and at what point a session is created, this can lead to scenarios in which the majority of sessions are created on just a few instances. The result is a couple of overwhelmed instances (with performance degradations commensurate with the reduction in available resources) and a bunch of barely touched instances. The smaller the pool of instances, the more likely it is that a small number of servers will be disproportionately burdened. Again, lots of little (virtual) web servers scale out more evenly and efficiently than a few big (virtual) web servers.
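A deliberately contrived Python simulation shows how the point at which a session is created can skew the distribution. The traffic model is an assumption made for illustration: visitors arrive one after another, each makes a fixed number of requests before a session exists, and the last of those requests creates the session. The function name `simulate_round_robin` is hypothetical.

```python
from collections import Counter
from itertools import cycle


def simulate_round_robin(servers, visitors, requests_before_session):
    """Count sticky sessions per server when round robin places every
    request made before a session exists (requests_before_session >= 1)."""
    next_server = cycle(servers)
    sessions = Counter({s: 0 for s in servers})
    for _ in range(visitors):
        # Requests without a session are balanced by the algorithm.
        for _ in range(requests_before_session):
            server = next(next_server)
        # The last of those requests creates the session; persistence
        # pins the visitor to that server from here on.
        sessions[server] += 1
    return dict(sessions)


# Three requests per visitor on a three-server pool: the rotation lines
# up so that every session is created on the same server.
skewed = simulate_round_robin(["s0", "s1", "s2"], visitors=30,
                              requests_before_session=3)

# One request per visitor: sessions are spread evenly.
even = simulate_round_robin(["s0", "s1", "s2"], visitors=30,
                            requests_before_session=1)
```

Real traffic interleaves visitors and is rarely this regular, so the skew is usually less extreme, but the sketch shows why the outcome depends on when sessions are created rather than on anything round robin measures.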

Assuming a pool of similarly-capable instances (RAM and CPU about equal on all) there are other load balancing algorithms that should be considered more appropriate for use in conjunction with persistence-based load balancing configurations. Least connections should provide better distribution, although the assumption that an active connection is equivalent to the number of sessions currently in memory on the application server could prove to be incorrect at some point, leading to the same situation as would be the case with the choice of round robin. It is still a better option, but not an infallible one. Fastest response time is likely a better indicator of capacity as we know that response times increase along with resource consumption, thus a faster responding instance is likely (but not guaranteed) to have more capacity available. Again, this algorithm in conjunction with persistence is not a panacea.
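As a point of comparison, a least connections choice can be sketched as follows. The server names and connection counts are made up, and the sketch assumes the balancer tracks one counter of open connections per server; it does not model connections closing.

```python
def pick_least_connections(active):
    """Return the server with the fewest active connections.
    Ties go to the first server in the mapping's insertion order."""
    return min(active, key=active.get)


# Hypothetical starting state: s0 is already heavily loaded.
active = {"s0": 12, "s1": 3, "s2": 7}

assignments = []
for _ in range(6):
    server = pick_least_connections(active)
    active[server] += 1  # the new connection stays open in this sketch
    assignments.append(server)
```

New connections flow to the lightly loaded servers until they catch up, and the busy server receives nothing in the meantime. The caveat from the paragraph above still applies: the counter tracks connections, not the sessions held in memory on each application server.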

Better options for a load balancing algorithm include those that are application aware; that is, algorithms that can factor into the decision making process the current load on the application instance and thus direct requests toward less burdened instances, resulting in a more even distribution of load across available instances.

NON-ALGORITHMIC SOLUTIONS

There are also non-algorithmic, i.e. architectural, solutions that can address this issue.

DIVIDE and CONQUER

In cloud computing environments, where it is less likely to find available algorithms other than industry standard (none of which are application-aware), it may be necessary to approach the problem with a divide and conquer strategy, i.e. lots of little servers. Rather than choosing one or two “large” instances, choose to scale out with four or five “small” instances, thus providing a better (but not guaranteed) statistical chance of load being distributed more evenly across instances.

FLANKING STRATEGY

If the option is available, an architectural “flanking” strategy that leverages layer 7 load balancing, a.k.a. content/application switching, will also provide better consumptive rates as well as more consistent performance. An architectural strategy of this sort is in line with sharding practices at the data layer in that it separates out by some attribute different kinds of content and serves that content from separate pools. Thus, image or other static content may come from one pool of resources while session-oriented, process intensive dynamic content may come from another pool. This allows different strategies – and algorithms – to be used simultaneously without sacrificing the notion of a single point of entry through which all users interact on the client-side.

Regardless of how you choose to address the potential impact on capacity, it is important to recognize the intimate relationship between infrastructure services and applications. A more integrated architectural approach to application delivery can result in a much more efficient and better performing application. Understanding the relationship between delivery services and application performance and capacity can also help improve on operational costs, especially in cloud computing environments that constrain the choices of load balancing algorithms.

As always, test early and test often and test under high load if you want to be assured that the load balancing algorithm is suitable to meet your operational and business requirements.

InformationWeek::Analytics posted a Research IT Automation: Bending the 80/20 Barrier: Automation Is Just the Start white paper on 6/6/2011 (requires a premium subscription):

Download: Premium subscription required

Bending the 80/20 Barrier: Automation Is Just the Start

As the economy recovers, IT is caught in a squeeze between requests for new applications, services and device support and demands from upper management to keep budgets lean, staffing light and operations tight. These are irreconcilable objectives as long as we spend the vast majority of our resources on legacy services – a variant on the familiar 80/20 rule, whereby 80% of IT’s time, effort and dollars are spent on routine “keep the lights on” activities with only 20% going to develop new services and applications.

Our InformationWeek Analytics 2011 IT Automation Survey shows that, while automation is an important pillar of a larger IT management strategy to bend the rule in favor of greater business-enhancing innovation, it’s no panacea and must be coupled with a shift to an IT services portfolio, more rigorous IT governance using industry best practices with clear service and process definitions, and a reassessment of which services IT should deliver internally vs. outsourcing.

While enterprise-class automation suites have been around for years, adoption hasn’t exactly spread like wildfire. Our survey shows that just 45% of respondents use automation software, with an additional 40% exploring the idea. And unfortunately, the definition of “run book automation” is in the eye of the beholder, since a large plurality of those who have automated are using homegrown software. Now, we’re sure a few of these systems approximate the capabilities of commercial run book software. But let’s face it, most are sets of quick hacks admins have patched together and reused.

One reason to consider buying automation software is that it now does more than just repeatably complete administrative tasks. It also provides a platform for capturing, organizing and reusing unstructured IT knowledge, like novel solutions to common help-desk problems or lessons learned from application pilot tests, further improving our operational efficiency. In fact, our survey shows most IT organizations have ample opportunity to improve operational efficiency through automation – and that there’s plenty of potential for growth in the automation software market.

Still, not everything that can be automated should be. With today’s abundance of MSPs, cloud service providers and SaaS applications, it’s often better to let someone else do routine IT tasks, such as front-line end user support or email. If you can’t identify where your internal staff adds unique business value or security, you should at least launch a cost analysis on outsourcing. Collectively, automating some internally delivered services and opportunistically outsourcing or cloudsourcing others can boost that 20% development ratio by 10% or more.

In this report, we’ll analyze the state of IT automation: where it’s most frequently applied, implementation barriers and the benefits it brings to the enterprise. We’ll examine gaps in automation deployment and spotlight areas, such as virtual server provisioning, routine system maintenance and user management, where we should be increasing use. (R2780611)

- Survey Name: InformationWeek Analytics 2011 IT Automation Survey

- Survey Date: April 2011

- Region: North America

- Number of Respondents: 388; respondents screened into the survey as being involved with IT automation technologies

Table of Contents

5 Author’s Bio

6 Executive Summary

8 Research Synopsis

9 Operations vs. Innovation

10 Impact Assessment

12 Other Ways to Skin This Cat

23 IT Governance: Trust, but Verify

24 Automation: Tactics and Trends

30 Functions Most Often Automated

32 Capture and Reuse Knowledge

33 Why Now?

36 Best Practice Frameworks

40 Appendix

52 Want More Like This?

InformationWeek::Analytics posted a Research IT Automation: Promises, Promises: A Not-So-New SLA Model white paper on 6/6/2011 (requires a premium subscription):

Download: Premium subscription required

Promises, Promises: A Not-So-New SLA Model

Every day, our lives are affected by the services we use. From accessing email to making calls on our iPhones, we expect a certain level of availability and quality. Underlying that expectation is a chain of promises that begins with a provider delivering (or not) a well-defined service to an organization, which then builds on to that service until finally an employee or customer is provided with something – maybe a dial tone, or a message that a reimbursement check was lost. A lot can go wrong between the time an ISP provisions a T1 to when Joe in accounting gets the note that a payment needs to be reissued.

Companies have long used service-level agreements to make sure they’re getting their money’s worth. But as many IT functions move to the cloud, are external SLAs still relevant? Are there better ways to make sure our companies get what we’re paying for? To find out how external SLAs are being defined, managed, monitored, acted on and fought over, InformationWeek Analytics crafted a survey that we opened to both providers of IT services and those writing the checks. Of 562 business technology professionals responding, 360 are consumers; companies with 10,000 or more employees are the top demographic represented, at 28%. We got an earful about the impact that SLAs have on service quality today, with a focus on how these agreements are being treated in cloud computing environments.

“I believe large-scale IT customers define too many SLA reports,” says a project manager from a large service provider. “Some of our customers require over 200 SLA reports. I think once you get into that realm, you’re looking at the law of diminishing returns. It creates a waste of money on both sides.” A VP and COO from another large service provider adds that SLAs, in the traditional server- or application-availability sense, don’t tell us much about how we’re meeting our users’ needs. “We have moved to business-critical events that mean something,” he says.

And, of course, cloud providers have their own perspective.

Sure, we’d all like to return to an idyllic (and, let’s face it, mythical) time when a handshake sealed a promise between vendor and consumer. But the reality is, it’s human nature to cut corners in an effort to increase the bottom line. Few providers are above sacrificing service quality where they think they can get away with it. If that weren’t the case, we wouldn’t need SLAs. “SLAs are written to protect the guilty, not encourage great service,” says one respondent. It doesn’t need to be that way. The goal for today’s IT service environments is a “trust but verify” philosophy, with SLAs the bedrock of that verification. In this report, we’ll analyze our survey responses and discuss how IT can develop effective SLAs. We’ll explain how monitoring tools are used within a cloud environment and discuss ways to discover and take action on violations. (R2450611)

- Survey Name: InformationWeek Analytics 2011 SLA Survey

- Survey Date: February 2011

- Region: North America

- Number of Respondents: 562 business technology professionals consuming and providing services covered by SLAs

Table of Contents

4 Author’s Bio

5 Executive Summary

7 Research Synopsis

8 Get It in Writing

11 Core Concepts: Utility and Warranty

11 What Have You Done for Us Lately?

13 Who Wants It More?

14 SLA Tools

19 Penalty Box

22 SLAs in the Cloud

22 Negotiating Cloud SLAs

26 Service Providers’ Perspectives

29 Moving Toward Service Management

32 Appendix

36 Related Reports

Brian Gracely (@bgracely) described PaaS - The Ultimate Survival Contest for IT in a 6/5/2011 post:

Even with all the confusion around Cloud Computing, there are a few things that are fairly well accepted by everyone involved in the IT industry:

- Developers have led the early waves of Cloud Computing and they will continue to lead the next phases, especially as more open-source options are made available to them.

- Corporate IT organizations are typically slow to change, as they are focused on stability and 3-7yr budget/depreciation cycles.

These two dynamics are creating a challenging environment within the IT industry. As more "in house" developers leverage public cloud resources, typically due to the pace of IT (operational) responsiveness, this is creating a "shadow IT" environment outside the corporate IT walls. On the flip-side, corporate IT is growing more concerned about external Cloud Computing resources [1][2][3] because of well-publicized outages and concerns about security and compliance.

The latest wrinkle in all this development is the emergence of several PaaS projects and platforms which will give developers the option of running their applications in public or private environments. They also come with the promise of application portability between public and private environments. Whether or not this happens is still TBD and will probably take several years to work out the kinks (and levels of trust from developers). But it introduces an interesting crossroads for corporate IT organizations, which has experts predicting a variety of potential outcomes.

For the most part, developers don't care about costs, they care about working code. They have been bypassing IT organizations to "speed up their time to code". The ultimate measurements for developers are whether code works and when code ships. The IT organization can negatively impact this due to lack of speed or complexity of deployment environments (e.g. network, security, authentication, etc.).

Some people argue that the future of IT is all about costs and commoditization. Unfortunately too many of these arguments only take into consideration "acquisition costs" (VM, GB/Storage) and "basic operations" (power, cooling, SW licenses), but don't consider broader context costs like security, compliance, switching-costs, re-training, downtime, etc. They also rarely consider that for most organizations, IT costs make up less than 10% of overall expenses, with many industries being less than 5-6%. [NOTE: Pure technology companies are the outliers to those numbers].

For the past few years, IT organizations were spending most of their time focused on keeping the existing services functional. Keeping the lights on. But now they have an opportunity to potentially break the cycle of "shadow IT" by developing the skills and architectures to support these PaaS environments in-house. To give their developers an alternative option that combines the speed of availability with the potential to comply with corporate security and regulation requirements. Will it be delivered at the same price-point as a public service? Probably not, since the utilization levels in-house will be cyclical and not normalized by multiple tenants/customers. But will they potentially be able to deliver the "pace of availability"? The answer may ultimately determine the survivability of IT in the long run.

Will developers trust the IT organizations? Not initially, as there are years of animosity and uncertainty built up between those groups. But the one thing IT has going for it is that developers hate sitting in meetings, or just about anything that takes them away from creating and deploying code. If IT can create an in-house PaaS environment that will reduce (or eliminate) the myriad of security/compliance/costs meetings the developers will be subject to if they expand the shadow IT environments, there is a chance that IT will appear to add value to those groups.

So if you're an IT organization hearing the buzz about these new PaaS offerings, now may be the perfect time to not only reconnect with your developers, but also start looking at how to establish those environments in-house.

Brian is a Cloud Evangelist for @Cisco and Co-Host of @thecloudcastnet.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Chris Hoff (@Beaker) claimed Security: “There’s No Discipline In Our Discipline” in a 6/6/2011 post:

Martin McKeay (@mckeay) reminded me of something this morning with his tweet:

To which I am compelled to answer with another question from one of my slides in my “Commode Computing” talk, which is to say, “which part of ‘security’ are you referring to?”:

“Security” is so heavily fragmented, siloed, specialized and separated from managing “risk,” that Martin’s question, while innocent enough, opens a can of worms not even anti-virus can contain (and *that* is obviously a joke.)

<Return to section navigation list>

Cloud Computing Events

IDC and IDG Enterprise will present the 2011 Cloud Leadership Forum on 6/20 and 6/21/2011 at the Hyatt Regency Silicon Valley hotel in Santa Clara, CA:

Topics include implementing new governance tactics and other best practices, such as:

- Cloud governance frameworks

- Future-proofing Private Cloud Investments

- Making Your Existing File Storage Infrastructure “Cloud-Ready”

- Building A Next Generation Cloud-Based IT Function

- Customizing vendor agreements with the language that should be in all of your cloud contracts today.

- PLUS: Special luncheon networking opportunities specifically for attendees from the Bay Area

View the full Cloud Leadership Forum agenda at http://cloudleadershipforum.com/agenda

Don’t miss our CIO, legal and expert speakers including:

- Todd Papaioannou, VP of Cloud Architecture, Yahoo!

- Paul Holden, CIO and Managing Director, and David Black, CISO, Aon eSolutions

- Chris Laping, SVP of Business Transformation and CIO, Red Robin

- John Murray, CIO, Genworth Financial Wealth Management

- Brian Clark, Managing Director, CTO, Moody's Corp.

- Frank Wander, SVP & CIO, Guardian Life Insurance Co.

- Scott Skellenger, Senior Director of Global Information Systems, Illumina, Inc.

- Michael R. Overly Esq., Partner, Foley & Lardner LLP

- Eric A. Marks, President and CEO, AgilePath Corp.

- Robert Mahowald, Research Vice President, SaaS and Cloud Services, IDC

- Dave McNally, IT Executive Advisor, IDC

- David Potterton, VP of Research, IDC Financial Insights

- Richard L. Villars, VP, Storage and IT Executive Strategies, IDC

Your Conference Hosts:

• John Gallant, Senior Vice President and Chief Content Officer, IDG Enterprise, Publishers of Computerworld, Network World, InfoWorld, CIO & CSO

• Frank Gens, SVP & Chief Analyst, IDC

Cloud Leadership Forum

June 20-21

Hyatt Regency Silicon Valley

http://cloudleadershipforum.com/ECLF_9

Registration is free for qualified applicants.

Jeff Price announced a Building Rich Applications Targeting Heterogeneous Cloud Platforms presentation to the San Francisco Bay Area Azure Developers group by DreamFactory CTO Bill Appleton on 6/13/2011 at 6:30 PM at the Microsoft San Francisco office:

DreamFactory Software CTO Bill Appleton will discuss the advantages and disadvantages of various cloud platforms, including speed comparisons, marketplace differences, and software development issues straight from the trenches of cloud computing.

Bill started working with cloud services more than ten years ago, back in the XML-RPC days before SOAP. Since then, as CTO of DreamFactory Software, Bill and team have done low level integration work with all the modern cloud platforms including Salesforce.com, Intuit Partner Platform, Cisco Webex Connect, Windows Azure, SQL Azure, and Amazon Web Services. Under the circumstances, he has lots of experience evaluating the advantages and disadvantages of the various platforms, and plenty of first hand information about what’s really going on under the hood. Join us for information on speed comparisons, industry analysis, data portability, marketplace differences, software development issues, and up to date information straight from the trenches of cloud computing.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Matt Prigge asserted “With a few simple steps, you can stand up a capable virtual Linux server using Amazon Web Services in minutes. Best of all, it's free for a whole year” as a deck for his How to take Amazon EC2 for a test-drive post of 6/6/2011 to InfoWorld’s Data Explosion blog:

Just about everyone I know in IT has gotten the dreaded phone call from a friend or acquaintance that begins: "Can you help me fix my computer?" If the person on the line is persistent enough, the result always seems to be a late night drinking beer and cleaning spyware off a PC that was manufactured during the Clinton era.

Earlier this week, however, I got a more interesting query -- one that may be a sign of the times. A friend wanted to set up a customized forum and image-sharing website for a group he's associated with and wasn't sure where to host it. I could have pointed him to any number of Web hosting companies, but he had a few requirements that indicated he might need a server he could fully control. The kicker: It had to be cheap -- really, really cheap.

That last requirement ruled out just about every option except a cloud-based solution. I had used Amazon.com's EC2 before, but mostly to poke around and see what it looked like, never to actually work on a project. I figured we'd give it a try.

Getting started with Amazon EC2

After you go to the Amazon Web Services site and create an account, you simply sign up for whichever AWS product you need. It's like any other e-commerce experience, credit card info included, but with one rather significant exception: a so-called Free Tier that gives you enough compute, storage, and Internet throughput resources to run a fairly capable Linux-based Web server for free for a year. After that, the services revert to pay-as-you-go pricing that works out to around $20 per month, depending upon your choices during setup and how much traffic actually arrives. It's not too shabby for a highly available Linux server with which you can do anything you want.

AWS encompasses a wide range, from compute resources to various storage products to server monitoring, messaging, and application services. In my case, I had my eye on the well-known Elastic Compute Cloud or EC2, which includes the paravirtualized compute instances that actually run your applications, Amazon EBS (Elastic Block Store) for instance storage, and Internet access.

Choosing resources and paying for them

EC2 compute instances range from Micro, which I ended up using, all the way up to massive GPU-equipped clusters targeted at HPC environments. The Micro instance includes one virtual core with up to two ECUs of burstable CPU bandwidth, 613MB of RAM, no dedicated instance storage, and "low" I/O performance -- and costs 2 cents per hour after the first year. The other type I considered was the Small instance, which includes one virtual core with a single ECU, 1.7GB of RAM, 160GB of dedicated instance storage, and "moderate" I/O performance -- at a rate of 8.5 cents per hour from the first time you power it up.

At a rate four times more expensive than that of the Micro instance, the Small instance immediately disqualified itself. Another reason to go with a Micro instance was the burstable CPU allocation. On balance, a Micro instance ends up with less CPU bandwidth than a Small instance, but for short periods of time, it's allowed to use double the maximum the Small instance can offer. Since Web traffic (especially for small sites) is often very bursty, that makes the Micro instance a better choice in many cases.
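The hourly rates quoted above translate into monthly figures with a little arithmetic, sketched here in Python. The calculation assumes a 30-day month with the instance running around the clock, and it covers the compute charge only; storage and data transfer are billed separately and are not modelled. The names used are illustrative.

```python
HOURS_PER_MONTH = 24 * 30  # assumption: 30-day month, instance always on

# Hourly rates in cents, as quoted in the article.
RATES_CENTS_PER_HOUR = {"Micro": 2.0, "Small": 8.5}


def monthly_cost_dollars(cents_per_hour: float) -> float:
    """Compute-only monthly cost in dollars for an always-on instance."""
    return round(cents_per_hour * HOURS_PER_MONTH / 100, 2)


costs = {name: monthly_cost_dollars(rate)
         for name, rate in RATES_CENTS_PER_HOUR.items()}

# How many times more expensive the Small instance is per hour.
ratio = RATES_CENTS_PER_HOUR["Small"] / RATES_CENTS_PER_HOUR["Micro"]
```

Under these assumptions the Micro instance works out to $14.40 per month of compute and the Small instance to $61.20, a ratio of 4.25 to 1.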

Nati Shalom asked NoSQL Pain? Learn How to Read/write Scale Without a Complete Re-write in a 6/6/2011 post to the High Scalability blog:

Lately I've been reading more cases where different people have started to realize the limitations of the NoSQL promise to database scalability. Note the references below:

- Why does Quora use MySQL as the data store instead of NoSQLs such as Cassandra, MongoDB, CouchDB etc?

- Why did Diaspora abandon MongoDB for MySQL?

- How scalable is CouchDB in practice, not just in theory?

Take MongoDB for example. It's damn fast, but it doesn't really know how to save data reliably to disk. I've had it set up in a replica pair to mitigate that risk. Guess what - both servers in the pair failed and corrupted their data files on the same day.

It appears that for many, the switch to NoSQL can be rather painful. IMO that doesn't necessarily mean that NoSQL is wrong in general, but that the pain comes from a combination of 1) lack of maturity and 2) not being the right tool for the job.

That raises the question: what's the alternative solution?

In the following post I tried to summarize the lessons from the presentation by Ronnie Bodinger (Head of IT at Avanza Bank AB) on how they turned their current read-mostly scale architecture into a complete read/write scale architecture without completely rewriting their existing application and while keeping the database as-is.

The lessons learned:

- Minimize the change by clearly identifying the scalability hotspots

- Keep the database as is

- Put an In Memory Data Grid as a front end to the database

- Use write-behind to reduce the synchronization overhead

- Use O/R mapping to map the data back into its original format

- Use standard Java API and framework to leverage existing skillset

- Use two parallel (old/new) sites to enable gradual transition

- Use RAM for high performance access and disk for long term storage

- Use commodity database and hardware
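The write-behind lesson in the list above can be sketched in a few lines. This is only an illustration of the general technique, not Avanza Bank's implementation or any data grid product's API: the `WriteBehindCache` class is hypothetical, and a plain dictionary stands in for the database.

```python
import queue
import threading


class WriteBehindCache:
    """Hypothetical in-memory front end: writes are acknowledged from RAM
    and persisted to the slower backing store by a background thread."""

    def __init__(self, backing_store: dict):
        self._store = backing_store      # stand-in for the existing database
        self._cache = {}                 # RAM copy used for fast access
        self._pending = queue.Queue()    # writes waiting to be persisted
        worker = threading.Thread(target=self._flush_loop, daemon=True)
        worker.start()

    def put(self, key, value):
        self._cache[key] = value         # fast path: update memory only
        self._pending.put((key, value))  # the database write happens later

    def get(self, key):
        if key in self._cache:
            return self._cache[key]
        value = self._store.get(key)     # read through to the store on a miss
        if value is not None:
            self._cache[key] = value
        return value

    def _flush_loop(self):
        while True:
            key, value = self._pending.get()
            self._store[key] = value     # the slow write, off the caller's path
            self._pending.task_done()

    def flush(self):
        """Block until every queued write has reached the backing store."""
        self._pending.join()


database = {"acct-1": 100}               # pre-existing data, left as is
cache = WriteBehindCache(database)
cache.put("acct-2", 250)                 # returns before the store is updated
cache.flush()                            # wait for the background write
print(database)
```

The caller never waits on the backing store during `put`, which is the synchronization overhead that write-behind is meant to reduce. A real deployment would also need batching, retries, and replication of the in-memory copy, none of which this sketch attempts.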

For a more detailed explanation read more here.

Here is Nati’s detailed description of Avanza Bank’s existing architecture and the changes made, including partitioning the data.

Judith Hurwitz (@jhurwitz) asked Is Big Data Real and Can IBM Execute on its Vision? in a 6/6/2011 post:

It was inevitable that data would emerge as one of the most complex and important topics of the next decade. The expansion of the amount and types of data that we have been accumulating across more systems (physical and virtual, applications, and electronic devices) is astounding. These environments generate huge amounts of data, from structured to unstructured, and with increasing use of digital images, social media, and data streams this explosion of data is continuing beyond what we could have imagined. Many organizations have created workarounds to manage these large volumes of complex data using warehouses, data marts, and moving subsets of data into an analytic tool. But in my view we have reached a tipping point where these approaches aren’t enough, as much of this data remains in its existing silos.

Making matters worse, there is no way to leverage this data based on the context of the business problem being addressed. Enterprises need a big data strategy that enables query and analysis across different sources and types of data. The key to success in the future will require that all of this data be managed in new ways.

Some of the initial big data use cases have evolved from the problem that occurs when applications generate huge volumes of data – like search engine data, information generated from gene screening, or when a company is trying to analyze customer buying patterns by combining traditional structured customer data with unstructured customer call center notes. Many technical early adopters are assuming that big data is synonymous with Hadoop, a way to break data into small fragments of work so that they can be executed and analyzed efficiently across a highly distributed hardware environment. Hadoop (managed by the Apache Software Foundation) has been closely associated with the difficulty of handling large volumes of data generated from web environments. While Hadoop is extremely important, it is still immature and will require time to evolve.
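The idea of breaking data into small fragments of work can be illustrated with a toy word count. This is a single-machine sketch in plain Python, not Hadoop's API; the function names and sample records are made up for illustration. In a real cluster each fragment would be processed on a different node.

```python
from collections import Counter
from functools import reduce


def split_into_fragments(records, size):
    """Break the input into independent chunks of at most `size` records."""
    return [records[i:i + size] for i in range(0, len(records), size)]


def map_fragment(fragment):
    """Count words within one fragment; needs no knowledge of the others."""
    counts = Counter()
    for line in fragment:
        counts.update(line.lower().split())
    return counts


def merge_counts(left, right):
    """Combine two partial results into one."""
    return left + right


# Hypothetical sample input.
records = ["big data is big", "hadoop handles big data", "data data data"]

fragments = split_into_fragments(records, size=1)
partials = [map_fragment(fragment) for fragment in fragments]  # parallelizable
totals = reduce(merge_counts, partials, Counter())

print(totals.most_common(2))
```

Because each fragment is counted independently, the map step can be spread across as many machines as there are fragments, and only the small partial results need to be brought together at the end.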

Making things more complicated is that many vendors in the market are slapping the big data moniker on whatever technology they happen to be offering at the moment. But capturing the value of big data is one of the most critical challenges for companies and it needs a thoughtful and innovative approach. So, if big data is much bigger than web data management, what is it? In brief, big data is the ability to manage huge amounts of data in a way that allows customers to gain business value no matter the volume of data, the form of that data, or the status of that data. It is a big issue that will take many years to address. It is the ability to manage massive amounts of structured and unstructured data at petabyte scale.

A few weeks ago I attended IBM’s big data summit. During this event IBM made it clear that it is viewing big data in the context of its information management strategy. Like many vendors in the market, IBM is wasting no time in putting a stake in the ground around Big Data. IBM is viewing big data as a way to holistically bring together all of the elements of corporate data. There are three dimensions to the way IBM is planning its approach to data: variety (different types of data), velocity (the speed required to manage the data), and volume (the amount of data in the mix). While the IBM big data strategy is evolving, there were five key take-aways from the meeting:

- Gaining insight from unstructured data requires powerful analytic engines to process and analyze it in real time.

- Big data requires Internet scale; it is not for the timid.

- For customers to get a handle on massive amounts of data, they need levels of abstraction with the right user interface based on the type of user (developer, business executive, etc.)

- What do you need to know from your data? It depends on what business you are in and how you can leverage both your structured and unstructured data. This requires sophisticated management of both structured and unstructured data, based on well-defined master data management. Even more fundamental is the requirement to have a way for both employees and the entire partner community to query that data.

- The way you analyze this data is the key. You need to understand the context of the information so you are looking at the right elements in the right way. Separating the information that is noise from the information that is insight is imperative. A misreading of the results can send a business down the wrong path with potentially catastrophic results.

IBM is making a significant investment in analytics and applying this to big data. As IBM executives who spoke at this meeting were quick to admit, it is early in the evolution of big data. IBM is trying to execute an ambitious strategy of bringing together hardware, all varieties of information management, service management software, and middleware, combined with industry frameworks and best practices. The vision will take time to mature but I believe it is based on the right customer pain.

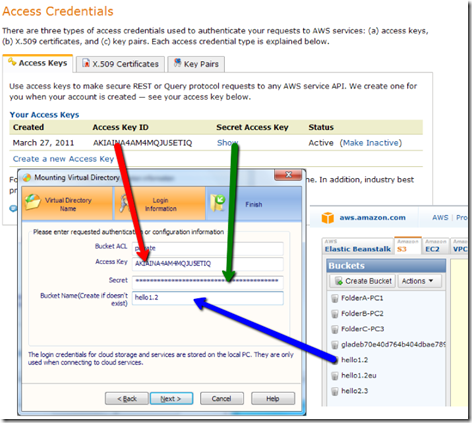

Jerry Huang posted Amazon S3 as a Network Drive on 6/1/2011 to the Gladinet blog:

Amazon S3 is the leading and most mature cloud storage service in the market now. We have seen more and more customers start to use Amazon S3 for their storage needs.

For these customers, the ability to map Amazon S3 as a Network Drive is critical. Drive mapping allows them to double-click on a file and edit it in place. From a usability perspective, there is no new user interface to learn because a hard drive, a USB drive and now a cloud-based drive are all very familiar concepts in the Windows user interface.

This article will document the steps it takes to map Amazon S3 as a network drive with the latest Gladinet Cloud Desktop.

Step 1 – Getting Started

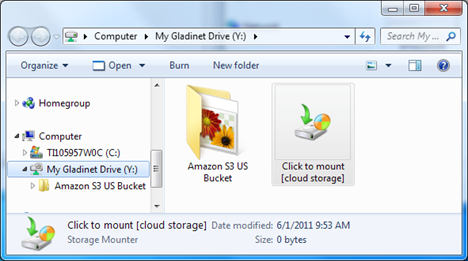

After downloading and installing Gladinet Cloud Desktop, there are two places you can mount your Amazon S3 bucket as a network drive. First you can do it from the Management Console.

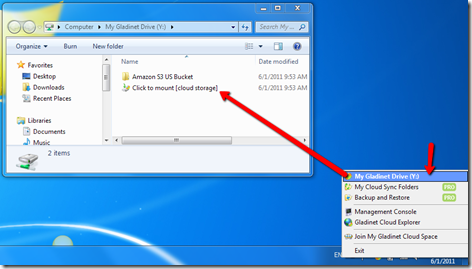

The other place is from the Gladinet Cloud Desktop System-Tray icon. You can click on the My Gladinet Drive menu entry first. After that, you can click on the ‘Click to mount [cloud storage]’ entry to initiate the mounting process.

Step 2 – Mounting Wizard

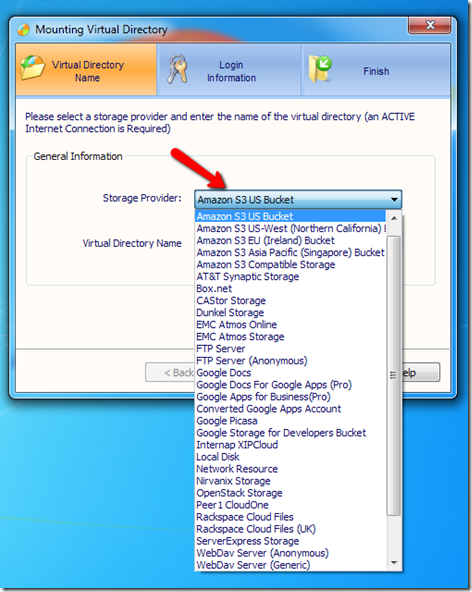

The wizard lists several Amazon S3 entries you can mount. They are mostly distinguished by region, such as US-East (US), US-West, EU and Asia.

If you have Amazon S3 compatible storage, such as Dunkel, Scality or Eucalyptus/Walrus based cloud storage services, you can use the Amazon S3 Compatible Storage entry.

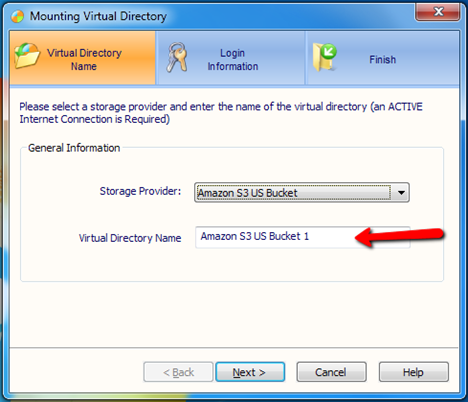

Step 3 - Name Your Amazon S3 Folder (for Windows Explorer)

In this step, you name the virtual directory that represents your Amazon S3 bucket. A good practice is to give it the same name as your Amazon S3 bucket.

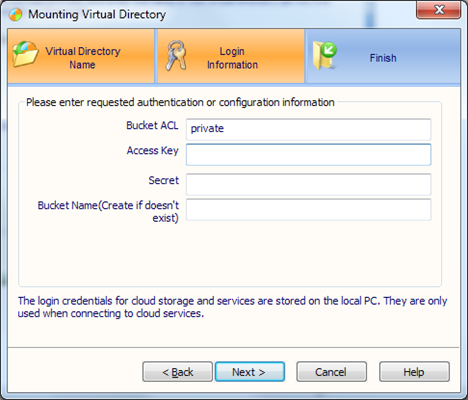

Now you will need to enter your Amazon S3 credentials. You will need to log in to your http://aws.amazon.com/account/ web portal to get your account information.

That is it! Now you have a network drive, with your Amazon S3 bucket showing up as a folder.

If you wish to both map the drive to Amazon S3 and share it with your colleagues as a Microsoft Network Share, you can use the Gladinet CloudAFS product.

SD Times on the Web reported CloudBees Introduces First Commercial Java Platform as a Service With Advanced Clustering and Auto-Scaling Features in a 6/6/2011 reprint of a CloudBees press release:

CloudBees, the Java PaaS innovation leader, today announced a for-pay offering for its RUN@cloud Java Platform as a Service (PaaS). Developers can now decide, per application, whether they'd like to utilize a free plan (with limited memory and computing resources) or benefit from increased memory, more computing capacity and/or additional features through a pay-as-you-go pricing scheme.

Generally available since January 2011, RUN@cloud has deployed more than 4,000 applications and offers developers everything they need to quickly and easily deploy applications to the cloud -- without having to purchase, configure and maintain hardware, and without having to program applications for a specific underlying infrastructure service (IaaS).

New features found in the Premium model of RUN@cloud include:

- Application elasticity based on a number of metrics (requests, CPU, etc.): With auto-scaling, developers focus on their application and pay for only what they consume -- RUN@cloud transparently delivers the needed capacity. Capacity can also be set manually and changed at any time, dynamically.

- Clustering with load-balancing, failover and session replication: Advanced clustering capabilities allow users to deploy new versions of their application into production while preserving existing web sessions.

- CNAME aliasing and SSL support: Developers can make their applications visible on the Internet under their own domain names and benefit from increased security by enabling HTTPS for their applications and domains.

Adding to the power of the new RUN@cloud, CloudBees' DEV@cloud service is a fully integrated development infrastructure that makes it easier for developers to quickly write, build and test applications in the cloud -- and then instantly deploy them to RUN@cloud. DEV@cloud features Jenkins, the popular open source continuous integration server. More than 2,000 customers have logged more than 400,000 Jenkins build minutes in the cloud. CloudBees rolled out a for-pay offering for DEV@cloud two months ago. With DEV@cloud and RUN@cloud, developers can manage their complete develop-to-deploy Java application lifecycle in the cloud.

With proven track records at JBoss, Macromedia/Allaire, Sun Microsystems and WebSphere, the CloudBees team has the deep middleware experience and expertise to quickly accelerate innovation in the PaaS space, the "middleware" layer of the cloud. CloudBees supports all Java applications, including Java EE and JVM-based languages.

Derrick Harris (@derrickharris) adds a third-party perspective with his CloudBees goes premium with pay-per-use Java PaaS post to GigaOM’s Structure blog of 6/6/2011:

CloudBees, the Java-focused Platform-as-a-Service company, is now offering a paid “Premium” version of its RUN@cloud PaaS offering. CloudBees, it appears, is trying to gain a foothold in the PaaS space while other Java-focused efforts are still getting underway.

The new, paid version provides a number of benefits over the free version, including access to more computing resources, flexibility in auto-scaling and clustering, and advanced security capabilities. CloudBees is a relatively small company that just emerged in November, but it has been very active. Led by a team of JBoss veterans, it has since bought potential competitor Stax Networks, moved its platform into general availability, and expanded its usefulness into private VMware- and OpenStack-based clouds. As of today, it’s probably the most-advanced Java-focused PaaS offering on the market in terms of maturity.

As opposed to .NET and Ruby, which have relatively mature PaaS options in the forms of Microsoft Windows Azure for .NET and Engine Yard and Heroku for Ruby, many Java efforts are still in the beta phase or look more like vaporware. CumuLogic and Red Hat’s OpenShift are among the products still in beta, as are multi-language offerings such as Cloud Foundry and DotCloud, while the VMforce collaboration between VMware and Salesforce.com has yet to materialize. [Emphasis added.]