Windows Azure and Cloud Computing Posts for 6/2/2011+

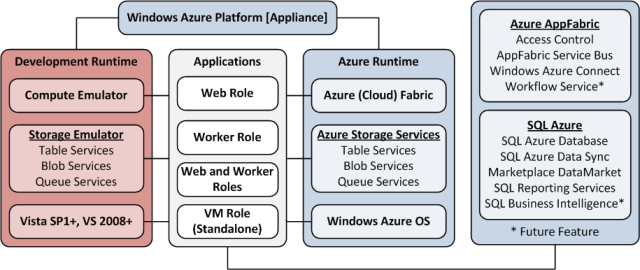

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Updated 6/2/2011 2:00 PM PDT and later with articles marked • by Bruce Guptil, Patrick Wood, Victoria Reitano, Carl Brooks, the Windows Azure Team, Jervis from ASPHostPortal, Mike Benkovich, Adam Grocholski, Chris Hoff, Gavin Clarke and Joe Panettieri.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructur and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

Brian Prince (@brianhprince) explained Using the Queuing Service in Windows Azure in a 6/2/2011 post to the DeveloperFusion blog:

Windows Azure is Microsoft’s cloud computing platform, and it is comprised of a series of services. The storage family of services is REST based, making it available to any developer on any platform. These services include

- BLOB storage, for your files,

- Tables for your structured, non-relational data, and

- Queues to store messages that will be picked up later.

The Windows Azure Platform also offers SQL Azure for relational data. SQL Azure, while a way to store data in Windows Azure, is not technically part of Windows Azure Storage, it is its own product. SQL Azure is also not based on REST, but on TDS.

In this article we are going to focus on the easiest of these services to work with, the Queues. We will also look at when and how you might use Queues in your application.

What are Queues?

A queue is simply a list of messages. The messages flow from the bottom of the queue to the top of the queue in the order they were added to the queue. It is known by computer scientists as a FIFO data structure. FIFO stands for First In-First Out.

You can think of a queue like a line at the bank. As customers enter the bank, they enter the bottom of the queue (or the back of the line). As the single teller finishes with each customer, the line moves forward, and people eventually get to the head of the line, and get their turn with the teller.

Just like how a bank may have many sets of doors that a customer may arrive through, a queue may have several message producers adding messages to the queue. These producers may have nothing to do with each other, and in some instances may create messages with different content and purposes.

A bank may, when the line gets long enough, open up more teller windows, and you application can do the same. You can change how many consumers you have taking messages off of the queue and processing the data.

Queues in and of themselves are pretty simple beasts, and have been around for a long time as a technology. They are also relatively simple to work with, highly reliable and performant: a single queue in Windows Azure can handle 500 operations per second, including putting, getting, and removing messages.

Windows Azure uses a storage container to hold your data - . You can create a storage account as part of your Windows Azure subscription. Each subscription can have up to five storage accounts by default. The limit can be increased by calling tech support.

A storage container will have a name, for example, OrdersData, and a storage key. The storage key is a private key of a certificate which acts as your password into that storage account. If anyone has both the name and the key, they will have full permissions to your storage, so you want to protect these.

A single storage account can hold any combination of data from Blobs, Queues, and Tables, up to a total capacity of 100TB. Any data stored in a storage container is replicated three times to provide for high availability and reliability. Each Windows Azure subscription can have many different storage accounts.

Starting the Sample

We are going to create a sample comprised of two console applications. One will be the consumer, and put messages on the queue. These messages are meant to be commands for a robot. The other console application will play the role of the robot, the consumer.

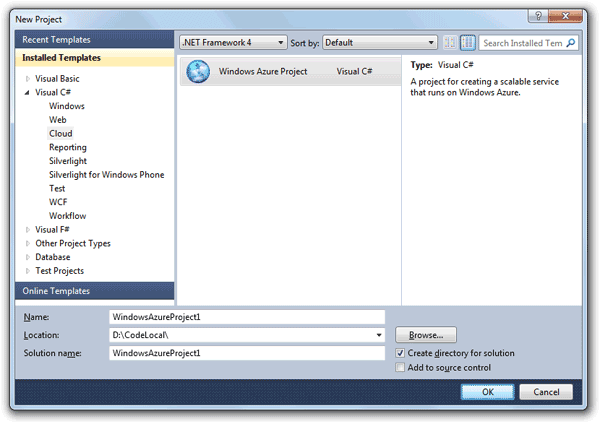

To get started, you will need to install the Windows Azure Tools for Microsoft Visual Studio. The current version is 1.4, and that is the version we will be using. You can download it from http://www.microsoft.com/windowsazure/sdk/.

Once you have the SDK installed, start the storage emulator. You should find it as Start > All Programs > Windows Azure SDK v1.4 > Storage Emulator. You must run this as an Administrator. The emulator runs a simulation of the real Windows Azure storage services locally for development purposes.

Now open Visual Studio 2010, also in Admin mode. The Windows Azure SDK requires Admin mode because of how the Windows Azure emulator works behind the scenes.

- Create a new blank solution.

- Add a C# Console Application Project to it. We will name this first console project Producer because it will be our little application for producing messages and adding them to the queue.

- Add a reference to the Microsoft.WindowsAzure.StorageClient assembly to the new project. You’ll find it in %ProgramFiles%\Windows Azure SDK\v1.4\bin.

- Add a second reference to the System.configuration assembly.

- Add an app.config file to your solution.

When app.config appears in Visual Studio, add the following appSettings element. This tells the Storage Client knows where to connect. This is a lot like providing a connection string to a database. We are using a connection string that will connect to and use the local storage emulator instead of connecting to the real Queue service in the cloud.

<?xml version="1.0" encoding="utf-8" ?> <configuration> <appSettings> <add key="DataConnectionString" value="UseDevelopmentStorage=true" /> </appSettings> </configuration>Now open program.cs if it isn’t open already and add the following using statements

using Microsoft.WindowsAzure.StorageClient; using Microsoft.WindowsAzure; using System.Configuration; using System.Threading;Now we start writing our application in the Main method of Program.cs. To begin with, we need to tell the Windows Azure Storage Client not to look in the Windows Azure project for its configuration details. There isn’t a Windows Azure project in this solution as this code will run on our local PC instead of in the cloud, so we have to add a few lines of code that tell it to look in app.config for its configuration.

private static void Main(string[] args) { CloudStorageAccount.SetConfigurationSettingPublisher((configName, configSetter) => { configSetter(ConfigurationManager.AppSettings[configName]); });The next step is to get a reference to our queue. In order to connect to the queue we need to first connect to the Storage Account, and then your Queue service. They live in a hierarchy. The queue is contained in the Queue service, which is contained inside your Storage Account in Windows Azure.

Once we have done that we will get a reference to the queue itself. The trick here is that you can get a reference to a queue, even when it doesn’t exist yet. This is how you create a queue. It seems weird, but you will get used to it. The FromConfigurationSetting() method will look in your cloud service configuration file for the DataConnectionString configuration value. Of course you can name the configuration element anything you would like.

var storageAccount = CloudStorageAccount.FromConfigurationSetting("DataConnectionString"); var queueClient = storageAccount.CreateCloudQueueClient(); var queue = queueClient.GetQueueReference("robotcommands"); queue.CreateIfNotExist();In our case, the queue named ‘robotcommands’ doesn’t exist yet.

It is important to note that all queue names must be lower case. You will forget this one day, and you will spend hours figuring out why your code isn’t working, and then you will remember me saying over and over again that the queue name must be lower case.

The CreateIfNotExist() method will see if the queue really does exist in Windows Azure, and if it doesn’t it will create it for you. This code will leave you with a queue object (of type CloudQueue) that will let you work with the queue you have selected or created.

What are Messages?

So now that we have a queue, what do we put in it? Well, messages of course. Messages in Windows Azure queues are meant to be very small, limited to 8KB in size. This is to help make sure the queue can stay super-fast, and make it easy for these messages to travel over the wire as part of REST.

Creating a message is fairly simple. You create a CloudQueueMessage with the contents of the message, and then add it to the queue object from above. You can put any text in the message that you want, including encoded binary data. In our sample, we are now going to create a message, and add it to the queue. We will use some user entered input as the contents of the message. We are using an infinite loop to continuously receive input from the user. If the user enters ‘exit’ then we will break the loop and end the program.

string enteredCommand = string.Empty; Console.WriteLine("Welcome to the robot command queue system. Enter 'exit' to stop sending commands."); while (true) { Console.Write("Enter a command to be queued up for the robot:"); enteredCommand = Console.ReadLine(); if (enteredCommand != "exit") { queue.AddMessage(new CloudQueueMessage(enteredCommand)); Console.WriteLine("Command sent."); } else break; }The important line here is the queue.AddMessage() line. In this line we create a new CloudQueueMessage passing in the data entered by the users. This creates the message we want to send. We then hand that message to the AddMessage method which sends it to the queue.

That’s all we need to do to create our producer application. We can now send messages, through a queue, to our robot.

Writing the Consumer Application

We now need to write the application that will represent our robot. It will continually check the queue for any messages that have been sent to it, and assumedly execute them somehow.

- In Visual Studio, click File > Add > New Project.

- Select Console Application, set its name to Consumer and hit OK.

- Add references to the Microsoft.WindowsAzure.StorageClient and System.configuration assemblies as you did for the Producer solution.

- Add an app.config file to the Consumer project and add the same appSettings element to this file as you did for the Producer solution.

Now open program.cs for the consumer solution if it isn’t already open. Initially, this application needs to do the same configuration and queue setup as the producer application, so our first additions replicate those made in the Starting the Sample section.

namespace Consumer { using System; using System.Linq; using Microsoft.WindowsAzure.StorageClient; using Microsoft.WindowsAzure; using System.Configuration; using System.Threading; public static class Program { private static void Main(string[] args) { CloudStorageAccount.SetConfigurationSettingPublisher((configName, configSetter) => { configSetter(ConfigurationManager.AppSettings[configName]); }); var storageAccount = CloudStorageAccount.FromConfigurationSetting("DataConnectionString"); var queueClient = storageAccount.CreateCloudQueueClient(); var queue = queueClient.GetQueueReference("robotcommands"); queue.CreateIfNotExist(); } } }Now to move on to the guts of our consumer application. The consumer of the queue will connect to the queue just like the message producer code. Once you have a reference to the queue you can call GetMessage(). A consumer will normally do this from within a polling loop that will never end. An example of this type of loop, without all of the error checking that you would normally include, is below.

In this while loop we will get the next message on the queue. If the queue is empty, the GetMessage() method will return a null. If we get a null then we want to sleep for some period of time. In this example we are sleeping for five seconds before we poll again. Sometimes you might sleep a shorter period of time (speeding up the poll loop and fetching messages more aggressively), and sometimes you might want to slow the poll loop down. We will look at how to do this later in this article.

The pattern you should follow in this loop is:

- Get Message

- If no message available, sleep for five seconds

- Process the Message

- Delete the Message

The code that will do this is as follows. Add it to the Main() method after the call to queue.CreateIfNotExist().

CloudQueueMessage newMessage = null; double secondsToDelay = 5; Console.WriteLine("Will start reading the command queue, and output them to the screen."); Console.WriteLine(string.Format("Polling queue every {0} second(s).", secondsToDelay.ToString())); while (true) { newMessage = queue.GetMessage(); if (newMessage != null) { Console.WriteLine(string.Format("Received Command: {0}", newMessage.AsString)); queue.DeleteMessage(newMessage); } else Thread.Sleep(TimeSpan.FromSeconds(secondsToDelay)); }If there is a message found we will then want to process it. This is whatever work you have for that message to do. Messages generally follow what is called the Work Ticket pattern. This means that the message includes key data for the work to be done, but not the real data that is needed. This keeps the message light and easy to move around. In this case the message is just simple commands for the robot to process.

After the work is completed we want to remove the message from the queue so that it is not processed again. This is accomplished with the DeleteMessage() method. In order to do this we need to pass in the original message, because the service needs to know the message id and the pop receipt (more on this in The Message Lifecycle section) to perform the delete. And then the loop continues on with its polling and processing.

Running the Sample

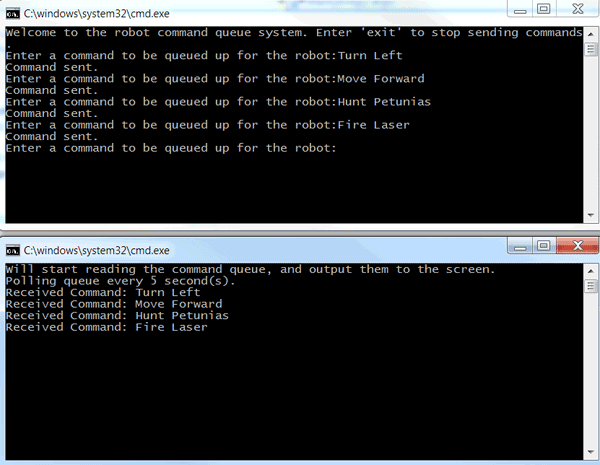

You should now have a Visual Studio solution that has two projects in it. A console application called Producer that will generate robot commands and submit them to your queue. You will also have a second console application called Consumer that plays the role of the robot, consuming messages from the queue.

We need to run both of these console applications at the same time, which you can’t normally do with f5 in Visual Studio. The trick to running both is to right click on each project name, select the debug menu, and then select ‘start new instance’. It doesn’t matter which one you start first.

After you do this you will have two DOS windows open, one for each application. Use the Producer application to start creating messages to be sent to the queue. Here is what it looks like. Make sure the storage emulator from the Windows Azure SDK is already running before you start the applications.

The Message Lifecycle

The prior section mentioned something called a pop receipt. The pop receipt is an important part of the lifecycle of a message in the queue. When a message is grabbed from the top of the queue it is not actually removed from the queue. This doesn’t happen until DeleteMessage is called later. The message stays on the queue but is marked invisible. Every time a message is retrieved from the queue, the consumer can determine how long this timeout of invisibility should be, based on their processing logic. This defaults to 30 seconds, but can be as long as two hours. The consumer is also given a unique pop receipt for that get operation. Once a message is marked as invisible, and the time out clock starts ticking, there isn’t a way to end it quicker. You must wait for the full timeout to expire.

When the consumer comes back, within the timeout window, with the proper receipt id, the message can then be deleted.

If the consumer does not try to delete the message within the timeout window, the message will become visible again, at the position it had in the queue to begin with. Perhaps during this window of time the server processing the message crashed, or something untoward happened. The queue remains reliable by marking the message as visible again so another consumer can pick the message up and have a chance to process it. In this way a message can never be lost, which is critical when using a queuing system. No one wants to lose the $50,000 order for pencils that just came in from your best customer.

This does lead us to one small problem. Let’s say our message was picked up by server A, but server A never returned to delete it, and the message timed out. The message then became visible again, and our second server, server B, finds the message, picks it up and processes it. When it picks up the message it receives a new pop receipt, making the pop receipt originally given to server A invalid.

During this time, we find out that server A didn’t actually crash, it just took longer to process the message than we predicted with the timeout window. It comes back after all of its hard work and tries to delete the message with its old pop receipt. Because the old pop receipt is invalid server A will receive an exception telling it that the message has been picked up by another processor.

This failure recovery process rarely happens, and it is there for your protection. But it can lead to a message being picked up more than once. Each message has a property, DequeueCount, that tells you how many times this message has been picked up for processing. In our example above, when server A first received the message, the dequeuecount would be 0. When server B picked up the message, after server A’s tardiness, the dequeuecount would be 1. In this way you can detect a poison message and route it to a repair and resubmit process. A poison message is a message that is somehow continually failing to be processed correctly. This is usually caused by some data in the contents that causes the processing code to fail. Since the processing fails, the messages timeout expires and it reappears on the queue. The repair and resubmit process is sometimes a queue that is managed by a human, or written out to Blob storage, or some other mechanism that allows the system to keep on processing messages without being stuck in an infinite loop on one message. You need to check for and set a threshold for this dequeuecount for yourself. For example:

if (newMessage.DequeueCount > 5) { routePoisonMessage(newMessage); }Word of the Day: Idempotent

Since a message can actually be picked up more than once, we have to keep in mind that the queue service guarantees that a message will be delivered, AT LEAST ONCE.

This means you need to make sure that the ‘do work here’ code is idempotent in nature. Idempotent means that a process can be repeated without changing the outcome. For example, if the ATM was not idempotent when I deposited $10, and there was a failure leading to the processing of my deposit more than once, I would end up with more than ten dollars in my account. If the ATM was idempotent, then even if the deposit transaction is processed ten times, I still get only ten dollars deposited into my account.

You need to make sure that your processing code is idempotent. There are several ways to do this. Most usually you should just build it into the nature of the backend systems that are consuming the messages. In our robot example we wouldn’t want the robot to execute a single ‘Turn Left’ command twice because it is accidentally handling the same message twice. In this scenario we might track the message id of each message processed, and check that list before we execute a command to make sure we haven’t processed it.

When Queues are Useful

We can see that Windows Azure Queues are very simple to use. Queues become an important tool when we try to decouple parts of our system from each other. They provide an excellent way for two components (either in the same system, or in different systems altogether) to communicate (in a single-directional manner) without having any dependencies on each other.

These two sides of the communication (the producer and the consumer of the messages) don’t have to be running in Windows Azure. Perhaps the producer is a laptop application that is used by the field sales force to process and submit orders back to corporate. The consumer could be a mainframe behind the firewall at corporate that then reaches out and pulls down the messages in the queue to process them.

This is a great way to reduce the dependency from the sender on the receiver, giving you much more flexibility in your architecture, and reducing brittleness. If that mainframe is ever updated to a .NET application running on servers in the corporate datacentre, the producers of the message never need to know or care.

Other Queue Tips

We mentioned earlier that you may want to adjust how often you poll the queue. How often you poll the queue will mostly depend on how you need to consume the messages. In our mainframe example, we might be tied to a nightly batch process. In this case the mainframe is only connecting once an evening to pull down all of the orders that built up during the day. This is called a long queue, because you expect messages to stay in the queue for a longer period of time before they are processed.

Other queue polling techniques rely on self-adjusting the delay in the loop. A common algorithm for this is called the Truncated Exponential Back Off. This approach is taken from how TCP manages the to the sending and receiving of packets over the network. You can read more about the TCP scenario at http://en.wikipedia.org/wiki/Retransmission_(data_networks).

With this algorithm you will define a minimum polling delay (perhaps 1 second) and a maximum delay (perhaps 60 seconds). We will vary the delay of the polling loop over time. Each time the queue is found to be empty we will double the current delay. As the queue remains empty we will poll less and less often. First delaying the loop by 1 second per poll, then 2, then 4, 8, 16, 32, and so on until we reach our maximum delay of 60 seconds.

If we ever find a message in the queue, then we know that there is some traffic and we should speed up our polling loop. There are two approaches to take in this case. The first is that you start to gradually speed up the loop by cutting the delay in half each time you find a message. In this manner your delay would go from 60 to 30, to 15, and eventually back down to 1 second if there is enough messages in the queue. The alternative approach is to immediately shorten your polling delay to 1 second as soon as you find a message in the queue. This is useful when you know the message pattern involves groups of messages, instead of lone messages.

Summary

In this article we have explained how queues work, and how we can use them to decouple our systems, provide the robustness to our architecture we often need. Using messages is quite easy, with simple methods for putting and getting messages onto and off of the queue. There are many ways you can use a queue in your system, and we looked at only a few possibilities including a regular polling loop, a long queue used for infrequent use, and truncated exponential back off polling that allows our queue to speed up and slow down depending on usage.

<Return to section navigation list>

SQL Azure Database and Reporting

• Patrick Wood questioned Microsoft Access DSN-Less Linked Tables: TableDef.Append or TableDef.RefreshLink? for SQL Azure in a 6/2/2011 post:

When it came to creating DSN-Less Linked Tables I had always used a procedure that deleted the TableDef and appended a new one until a problem occurred. The code I was using to save Linked Tables as DSN-Less Tables was not working with some of the Views in SQL Azure. This was a serious problem because the application I was developing would be distributed to clients who would then distribute it to their clients. We did not want to use a DSN file. But now the code that normally worked without a hitch was failing.

Because I was developing for SQL Azure, I had to use SQL Azure Security which includes the Username and Password in the Connection string. Even though I explicitly set the dbAttachSavePWD (Enum Value: 131072) when I appended the new TableDefs the Connection Property of my views still did not include my Username and Password. So I quickly wrote some code to loop through the TableDef properties to see if I could discover the problem.

Sub ListODBCTableProps() Dim db As DAO.Database Dim tdf As DAO.TableDef Dim prp As DAO.Property Set db = CurrentDb For Each tdf In db.TableDefs If Left$(tdf.Connect, 5) = "ODBC;" Then Debug.Print "----------------------------------------" For Each prp In tdf.Properties 'Skip NameMap (dbLongBinary) and GUID (dbBinary) Properties here If prp.Name <> "NameMap" And prp.Name <> "GUID" Then Debug.Print prp.Name & ": " & prp.Value End If Next prp End If Next tdf Set tdf = Nothing Set db = Nothing End Sub

I discovered that the TableDef Attributes of the Views for which my code was not working was 536870912 but for the Tables and Views that were working it was 537001984. After checking the TableDefAttributeEnum Enumeration values I was puzzled. The Attributes value for the Views which were not working was 537001984 which is the value for dbAttachedODBC (Linked ODBC database table). And the value of the Attribute for the Tables and Views that were working was 536870912 which is not in the list. After a few moments I figured it out. I saw that if you add the dbAttachedODBC value of 536870912 to the dbAttachSavePWD value of 131072 it equals the 537001984 Attributes value of the DSN-Less Tables and Views that were set properly. This made sense since the documentation Description for dbAttachSavePWD is “Saves user ID and password for linked remote table”. Apparently the Views needed both Attributes. But how could I set it?

Even though my code explicitly set the Attributes value to dbAttachSavePWD when creating the new TableDefs it was not working. Eventually I found some code that used the TableDef.RefreshLink Method, added the TableDefs Attributes dbAttachSavePWD (131072) value, and tested it. This solution worked. Below is the code I used.

Function SetDSNLessTablesNViews() As Boolean Dim db As DAO.Database Dim tdf As DAO.TableDef Dim strConnection As String SetDSNLessTablesNViews = False 'Default Value Set db = CurrentDb 'Use a Function to get the Connection string 'Note: In actual use I never use "Connection" in my Variables or Procedure names. 'I disguise them to make it hard for a hacker to use code to get my Connection string strConnection = GetCnnString() 'Loop through the TableDefs Collection For Each tdf In db.TableDefs 'Verify the table is an ODBC linked table If Left$(tdf.Connect, 5) = "ODBC;" Then 'Skip System tables If Left$(tdf.Name, 1) <> "~" Then Set tdf = db.TableDefs(tdf.Name) tdf.Connect = strConnection tdf.Attributes = dbAttachSavePWD 'dbAttachSavePWD = 131072 tdf.RefreshLink End If End If Next tdf SetDSNLessTablesNViews = True Set tdf = Nothing Set db = Nothing End FunctionI felt better about using the tdf.RefreshLink Method rather than deleting the TableDefs and appending them again. I read that you could delete your TableDefs and not be able to append a new one if there is an error in the Connection string at this page on Doug Steele’s website at the bottom of the page.

I found an interesting discussion about whether to delete and then append a new TableDef or use the RefreshLink Method on Access Monster. However the latest Developer’s Reference documentation settles the matter for me when it states the TableDef.RefreshLink Method “Updates the connection information for a linked table (Microsoft Access workspaces only).”

You may also want to see the sample code from The Access Web by Dev Ashish using the RefreshLink Method.

Below is an example of the code used to get the Connection string. As I stated in the procedure notes I never use “Connection” in Constants, Variables, or Procedure names. Nor do I use cnn, con, cnnString, etc. Instead I disguise the name of my Procedure to make it hard for a hacker to use to get my Connection string. Constants and Procedure names, along with some variables, are easily seen by opening up even an accde or mde file with a free Hex editor unless you have encrypted the database file. If I can see the name of your Constant I can very easily get its value.

'Don't forget to change the name of this procedure Function GetCnnString() As String GetCnnString = "ODBC;" _ & "DRIVER={SQL Server Native Client 10.0};" _ & "SERVER=MyServerName;" _ & "UID=MyUserName;" _ & "PWD=MyPassW0rd;" _ & "DATABASE=MySQLDatabaseName;" _ & "Encrypt=Yes" End FunctionYou can see or download the code used in this article from our Free Code Samples page.

Get the free Demonstration Application that shows how effectively Microsoft Access can use SQL Azure as a back end.

Jason Bloomberg (@TheEbizWizard) engaged in “Rethinking data consistency” for his BASE Jumping in the Cloud article of 6/2/2011 for the Cloud Computing Journal:

Your CIO is all fired up about moving your legacy inventory management app to the Cloud. Lower capital costs! Dynamic provisioning! Outsourced infrastructure! So you get out your shoehorn, provision some storage and virtual machine instances, and forklift the whole mess into the stratosphere. (OK, there's more to it than that, but bear with me.)

Everything seems to work at first. But then the real test comes: the Holiday season, when you do most of your online business. You breathe a sigh of relief as your Cloud provider seamlessly scales up to meet the spikes in demand. But then your boss calls, irate. Turns out customers are swamping the call center with complaints of failed transactions.

You frantically dive into the log files and diagnostic reports to see what the problem is. Apparently, the database has not been keeping an accurate count of your inventory-which is pretty much what an inventory management system is all about. You check the SQL, and you can't find the problem. Now you're really beginning to sweat.

You dig deeper, and you find the database is frequently in an inconsistent state. When the app processes orders, it decrements the product count. When the count for a product drops to zero, it's supposed to show customers that you've run out. But sometimes, the count is off. Not always, and not for every product. And the problem only seems to occur in the afternoons, when you normally experience your heaviest transaction volume.

The Problem: Consistency in the Cloud

The problem is that while it may appear that your database is running in a single storage partition, in reality the Cloud provider is provisioning multiple physical partitions as needed to provide elastic capacity. But when you look at the fine print in your contract with the Cloud provider, you realize they offer eventual consistency, not immediate consistency. In other words, your data may be inconsistent for short periods of time, especially when your app is experiencing peak load. It may only be a matter of seconds for the issue to resolve, but in the meantime, customers are placing orders for products that aren't available. You're charging their credit cards and all they get for their money is an error page.From the perspective of the Cloud provider, however, nothing is broken. Eventual consistency is inherent to the nature of Cloud computing, a principle we call the CAP Theorem: no distributed computing system can guarantee (immediate) consistency, availability, and partition tolerance at the same time. You can get any two of these, but not all three at once.

Of these three characteristics, partition tolerance is the least familiar. In essence, a distributed system is partition tolerant when it will continue working even in the case of a partial network failure. In other words, bits and pieces of the system can fail or otherwise stop communicating with the other bits and pieces, and the overall system will continue to function.

With on-premise distributed computing, we're not particularly interested in partition tolerance: transactional environments run in a single partition. If we want ACID transactionality (atomic, consistent, isolated, and durable transactions), then we should stick with a partition intolerant approach like a two-phase commit infrastructure. In essence, ACID implies that a transaction runs in a single partition.

But in the Cloud, we require partition tolerance, because the Cloud provider is willing to allow that each physical instance cannot necessarily communicate with every other physical instance at all times, and each physical instance may go down unpredictably. And if your underlying physical instances aren't communicating or working properly, then you have either an availability or a consistency issue. But since the Cloud is architected for high availability, consistency will necessarily suffer.

The Solution: Rethink Your Priorities

The kneejerk reaction might be that since consistency is nonnegotiable, we need to force the Cloud providers to give up partition tolerance. But in reality, that's entirely the wrong way to think about the problem. Instead, we must rethink our priorities.As any data specialist will tell you, there are always performance vs. flexibility tradeoffs in the world of data. Every generation of technology suffers from this tradeoff, and the Cloud is no different. What is different about the Cloud is that we want virtualization-based elasticity-which requires partition tolerance.

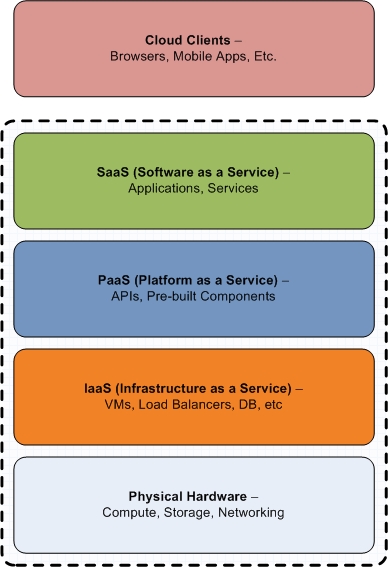

If we want ACID transactionality then we should stick with an on-premise partition intolerant approach. But in the Cloud, ACID is the wrong priority. We need a different way of thinking about consistency and reliability. Instead of ACID, we need BASE (catchy, eh?)

BASE stands for Basic Availability (supports partial failures without leading to a total system failure), Soft-state (any change in state must be maintained through periodic refreshment), and Eventual consistency (the data will be consistent after a set amount of time passes since an update). BASE has been around for several years and actually predates the notion of Cloud computing; in fact, it underlies the telco world's notion of "best effort" reliability that applies to the mobile phone infrastructure. But today, understanding the principles of BASE is essential to understanding how to architect applications for the Cloud.

Thinking in a BASE Way

Let's put the BASE principles in simple terms.

- Basic availability: stuff happens. We're using commodity hardware in the Cloud. We're expecting and planning for failure. But hey, we've got it covered.

- Soft state: the squeaky wheel gets the grease. If you don't keep telling me where you are or what you're doing, I'll assume you're not there anymore or you're done doing whatever it is you were doing. So if any part of the infrastructure crashes and reboots, it can bootstrap itself without any worries about it being in the wrong state.

- Eventual consistency: It's OK to use stale data some of the time. It'll all come clean eventually. Accountants have followed this principle since Babylonian times. It's called "closing the books."

So, how would you address your inventory app following BASE best effort principles? First, assume that any product quantity is approximate. If the quantity isn't near zero you don't have much of a problem. If it is near zero, set the proper expectation with the customer. Don't charge their credit card in a synchronous fashion. Instead, let them know that their purchase has probably completed successfully. Once the dust settles, let them know if they got the item or not.

Of course, this inventory example is an oversimplification, and every situation is different. The bottom line is that you can't expect the same kind of transactionality in the Cloud as you could in a partition intolerant on-premise environment. If you erroneously assume that you can move your app to the Cloud without reworking how it handles transactionality, then you are in for an unpleasant surprise. On the other hand, rearchitecting your app for the Cloud will improve it overall.

The ZapThink Take

Intermittently stale data? Unpredictable counts? States that expire? Your computer science profs must be rolling around in their graves. That's no way to write a computer program! Data are data, counts are counts, and states are states! How could anything work properly if we get all loosey-goosey about such basics?Welcome to the twenty-first century, folks. Bank account balances, search engine results, instant messaging buddy lists-if you think about it, all of these everyday elements of our wired lives follow BASE principles in one way or another.

And now we have Cloud computing, where we're bundling together several different modern distributed computing trends into one neat package. But if we mistake the Cloud for being nothing more than a collection of existing trends then we're likely to fall into the "horseless carriage" trap, where we fail to recognize what's special about the Cloud.

The Cloud is much more than a virtual server in the sky. You can't simply migrate an existing app into the Cloud and expect it to work properly, let alone take advantage of the power of the Cloud. Instead, application migration and application modernization necessarily go hand in hand, and architecting your app for the Cloud is more important than ever.

Of course, the alternative is to take advantage of the transactional consistency features of cloud-based, enterprise-scale relational databases, such as SQL Azure to manage inventory data.

Jason is Managing Partner and Senior Analyst at Enterprise Architecture advisory firm ZapThink LLC.

The AppFabricCAT Team posted SQL Azure Federations – First Look on 5/31/2011:

At Microsoft TechEd 2011, the SQL Azure Database Federations feature was announced and that the product evaluation program was now open for nominations. You can read more about the program here.

In this blog I wanted to touch on a couple of the enhancements in Transact SQL to support SQL Azure federations. There is no better way than an example with short excerpts for each step.

Create the Federation

A federation is a collection of database partitions that are defined by a federation scheme. To create the federation scheme you must execute the ‘CREATE FEDERATION’ command. In this example we have a federation named Visitor_Fed with a distribution name of range_id followed by a RANGE partition type of BIGINT. Only RANGE partitions are supported for this release. Range types can be BIGINT, UNIQUEIDENTIFIER OR VARBINARY(n) where n can be up to 900.

CREATE FEDERATION Visitor_Fed (range_id BIGINT RANGE) GOConnect to a Federation

Connection to a federation member is performed with the ‘USE FEDERATION’ statement. In the statement below we connect to the Visitor_Fed federation member with range_id distribution name equal to 0. This connects us to the first for now only federation in this example. The FILTERING=OFF option denotes that the connection is scoped to the federation member’s full range. Setting FILTERING=ON scopes the connection to a particular federation key instance within a federation member. The RESET keyword is required and is used explicitly reset the connection after use.

USE FEDERATION Visitor_Fed (range_id = 0) WITH FILTERING = OFF, RESET GOCreate a Table

The syntax to create a table has a new enhancement, the ‘FEDERATED ON’ clause which allows the table to be included in multiple federation members. Only one FEDERATE ON column is supported on any given table and it must refer the federation key. Another stipulation, all unique indexes on federated tables must contain the federation column, in this case the visitor_id is defined as the federated column and has a primary key associated with it.

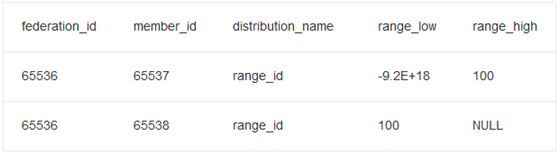

CREATE TABLE visitor ( visitor_id BIGINT PRIMARY KEY, col2 varchar(10) ) FEDERATED ON (range_id = visitor_id) GOSplit a Federation

A federation member is split using the ‘ALTER FEDERATION name SPLIT AT’ command. In the example below, we connect to the root member and perform a SPLIT of the Visitor_Fed federation at the value 100, thus creating two federation members. We can see by querying the sys.federation_member_distributions DMV and from table 1 that we have a two members, member_id=65537 and member_id=65538. The ranges are aligned at a split value of 100 and thus we have a member from the min to 100 and another from 100 to max. A visitor_id value of 100 is included in the second member.

USE FEDERATION ROOT WITH RESET GO ALTER FEDERATION Visitor_Fed SPLIT AT (range_id = 100) GO

Table 1 Federation Member Distributions

Inserting/Querying

Connect to the appropriate federation member. Insert/delete/update/select as one would typically do if not utilizing federations.

USE FEDERATION Visitor_Fed (range_id = 0) WITH FILTERING = OFF, RESET GO INSERT INTO visitor VALUES (1, 'visitor 1') INSERT INTO visitor VALUES (2, 'visitor 2') GO USE FEDERATION Visitor_Fed (range_id = 100) WITH FILTERING = OFF, RESET GO INSERT INTO visitor VALUES (100, 'visitor 100') INSERT INTO visitor VALUES (101, 'visitor 101') GO

<Return to section navigation list>

MarketPlace DataMarket and OData

Alex James (@adjames) will present Using OData to create your web-api to the Aarhus International Software Development Conference in Aarhus, Denmark on 10/10/2011 at 13:20 to 13:40:

This session will explore using OData (odata.org) to power your Web API. Given the trend towards the web as a platform, OData is often ideal for building Web APIs that are powerful, flexible and predictable. You’ll also learn how OData uses appropriate web-standards and idioms, and can be integrated with authentication protocols like OAuth 2.0. But perhaps most importantly you’ll see how OData can extend the reach of your Web API out to most major platforms and form factors, through its built-in support for both JSON and ATOM formats and most major development platforms.

Biography: Alex James

Alex is the Senior Program Manager on the OData team at Microsoft. He is responsible designing new features in the OData protocol and working with the OData community.

Prior to that Alex worked on the Entity Framework team for a couple of years, helping create the vastly improved v2.

Before joining Microsoft Alex created 2 object relational mappers, a relational file-system, dabbled in his own startup, and consulted for about 10 years, so he is something of a veteran in the data programmability space.

His vision is a world of devices and software that are simple enough even for his mum.

Twitter: http://twitter.com/adjames

Blog: http://blogs.msdn.com/b/alexj

Other Links: http://odata.org

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

• Victoria Reitano (@giornalista515) reported DotNetOpenAuth joins the Outercurve stable in a 6/2/2011 post to the SD Times on the Web blog:

DotNetOpenAuth, created by Andrew Arnott, was accepted to the Outercurve Foundation’s ASP.NET gallery today. The open-source project is a free, community-based library of standards-based authentication and authorization protocols used in websites and Web applications for .NET developers and others.

The project is housed on SourceForge, and will continue to be hosted and maintained by Andrew Arnott and the DotNetOpenAuth community. Outercurve will provide IT support where needed along with a larger community, according to Stephen Walli, technical director at Outercurve, a not-for-profit foundation that hosts three galleries and 12 projects, with DotNetOpenAuth being the sixth in the ASP.NET gallery.

“Andrew assigned the copyright to us and we manage the software intellectual property. We will provide services and help him grow the project, and encourage the community’s growth,” Walli said, adding that commercial interests are much more likely to become involved with the project now that it has been accepted by the foundation.

Walli explained that this is initially how software foundations came to be created, such as Apache in the late 1990s. He said that commercial companies are often anxious about working with projects maintained by individuals as individuals cannot offer assurances that the work will not contribute to a competitors’ success, something many vendors do not want to do.

Walli said mobile vendors will probably be the first to look into the project, as that is where much of the growth in the tech industry is happening—particularly with security.

“The Web is maturing; we’re finally hitting the next wave and [DotNetOpenAuth] is really timely," said Walli. "We’re just stepping into a new space, with SaaS projects and offerings, and to incorporate these authorization and authentication protocols is a fabulous opportunity for the ASP.NET world."

Paula Hunter, executive director of Outercurve, said that the project was presented to the foundation a few weeks ago. Outercurve does not have a classic incubation stage; instead gallery managers find, vet and present the projects to the board. Hunter said Bradley Millington, the ASP.NET gallery's manager, has done well in “spreading the word in the .NET open-source community” to attract new contributors and committers, which is how he recruited Arnott and his DotNetOpenAuth project and community.

Richard Seroter (@rseroter, avartared below) continued his Interview Series: Four Questions With … Sam Vanhoutte about the AppFabric Service Bus on 6/2/2011:

Hello and welcome to my 31st interview with a thought leader in the “connected technology” space. This month we have the pleasure of chatting with Sam Vanhoutte who is the chief technical architect for IT service company CODit, Microsoft Virtual Technology Specialist for BizTalk and interesting blogger. You can find Sam on Twitter at http://twitter.com/#!/SamVanhoutte.

Microsoft just concluded their US TechEd conference, so let’s get Sam’s perspective on the new capabilities of interest to integration architects.

Q: The recent announcement of version 2 of the AppFabric Service Bus revealed that we now have durable messaging components at our disposal through the use of Queues and Topics. It seems that any new technology can either replace an existing solution strategy or open up entirely new scenarios. Do these new capabilities do both?

A: They will definitely do both, as far as I see it. We are currently working with customers that are in the process of connecting their B2B communications and services to the AppFabric Service Bus. This way, they will be able to speed up their partner integrations, since it now becomes much easier to expose their internal endpoints in a secure way to external companies.

But I can see a lot of new scenarios coming up, where companies that build Cloud solutions will use the service bus even without exposing endpoints or topics outside of these solutions. Just because the service bus now provides a way to build decoupled and flexible solutions (by leveraging pub/sub, for example).

When looking at the roadmap of AppFabric (as announced at TechEd), we can safely say that the messaging capabilities of this service bus release will be the foundation for any future integration capabilities (like integration pipelines, transformation, workflow and connectivity). And seeing that the long term vision is to bring symmetry between the cloud and the on-premise runtime, I feel that the AppFabric Service Bus is the train you don’t want to miss as an integration expert.

Q: The one thing I was hoping to see was a durable storage underneath the existing Service Bus Relay services. That is, a way to provide more guaranteed delivery for one-way Relay services. Do you think that some organizations will switch from the push-based Relay to the poll-based Topics/Queues in order to get the reliability they need?

A: There are definitely good reasons to switch to the poll-based messaging system of AppFabric. Especially since these are also exposed in the new ServiceBusMessagingBinding from WCF, which provides the same development experience for one-way services. Leveraging the messaging capabilities, you now have access to a very rich publish/subscribe mechanism on which you can implement asynchronous, durable services. But of course, the relay binding still has a lot of added value in synchronous scenarios and in the multi-casting scenarios.

And one thing that might be a decisive factor in the choice between both solutions, will be the pricing. And that is where I have some concerns. Being an early adopter, we have started building and proposing solutions, leveraging CTP technology (like Azure Connect, Caching, Data Sync and now the Service Bus). But since the pricing model of these features is only being announced short before being commercially available, this makes planning the cost of solutions sometimes a big challenge. So, I hope we’ll get some insight in the pricing model for the queues & topics soon.

Q: As you work with clients, when would you now encourage them to use the AppFabric Service Bus instead of traditional cross-organization or cross-departmental solutions leveraging SQL Server Integration Services or BizTalk Server?

A: Most of our customer projects are real long-term, strategic projects. Customers hire us to help designing their integration solution. And most of the cases, we are still proposing BizTalk Server, because of its maturity and rich capabilities. The AppFabric Services are lacking a lot of capabilities for the moment (no pipelines, no rich management experience, no rules or BAM…). So for the typical EAI integration solutions, BizTalk Server is still our preferred solution.

Where we are using and proposing the AppFabric Service Bus, is in solutions towards customers that are using a lot of SaaS applications and where external connectivity is the rule.

Next to that, some customers have been asking us if we could outsource their entire integration platform (running on BizTalk). They really buy our integration as a service offering. And for this we have built our integration platform on Windows Azure, leveraging the service bus, running workflows and connecting to our on-premise BizTalk Server for EDI or Flat file parsing.

Q [stupid question]: My company recently upgraded from Office Communicator to Lync and with it we now have new and refined emoticons. I had been waiting a while to get the “green faced sick smiley” but am still struggling to use the “sheep” in polite conversation. I was really hoping we’d get the “beating a dead horse” emoticon, but alas, I’ll have to wait for a Service Pack. Which quasi-office appropriate emoticons do you wish you had available to you?

A: I am really not much of an emoticon guy. I used to switch off emoticons in Live Messenger, especially since people started typing more emoticons than words. I also hate the fact that emoticons sometimes pop up when I am typing in Communicator. For example, when you enter a phone number and put a zero between brackets (0), this gets turned into a clock. Drives me crazy. But maybe the “don’t boil the ocean” emoticon would be a nice one, although I can’t imagine what it would look like. This would help in telling someone politely that he is over-engineering the solution. And another fun one would be a “high-five” emoticon that I could use when some nice thing has been achieved. And a less-polite, but sometimes required icon would be a male cow taking a dump.

Zoiner Tejada (@ZoinerTejada) claimed they’re “A portable way to reliably queue work between different clients and services” in his REST Easy with AppFabric Durable Message Buffers article of 6/1/2011 for DevProConnections:

Representational State Transfer (REST)–enabled HTTP programming platforms broaden the client base that can be used to interact with services beyond those that are purely based on the Microsoft .NET Framework or that support SOAP. The Azure AppFabric Service Bus Durable Message Buffers that are broadened in this way provide platform-level support for reliable and replicated message queuing.

In this article, I examine the updated version of the Durable Message Buffers feature as it applies to the Windows Azure AppFabric Community Technology Preview (CTP) February 2011 release. I will show the feature's capabilities and how to take advantage of them from REST-based .NET clients in a fashion that translates well to other REST-enabled HTTP programming platforms such as Silverlight, Flash, Ruby, and even web pages.

To take advantage of the new release, you need to access the Labs portal. Also, you need to download Windows Azure AppFabric SDK v2.0, samples. Optionally, you can download the offline CHM Help file. You might also consider using the RestMessageBufferClient class provided within the samples as a starting point for your own .NET helper classes because the SDK libraries currently do not include helper classes for a message buffer.

AppFabric Durable Message Buffers

The AppFabric Durable Message Buffers component gives you a message queuing service that uses replicated storage and provides an internal failover feature for increased reliability. That the component is exposed to a REST operation means that it is available to a large swath of clients.

Generally, the service can be divided into two major feature sets: management and runtime. The Management feature set lets you create, delete, get a description of, and list buffers. The Runtime feature set lets you send, retrieve, and delete messages. These features are accessed and secured separately by making requests to the following Uniform Resource Identifiers (URIs):

When you first provision your account, you select the service namespace to use, and that single namespace is used by both management and runtime operations. Interaction for both management and runtime centers on REST, where requests and responses comply with the HTTP/1.1 spec. Figure 1 shows a high-level flow of messages across the Durable Message Buffer.

- Management Address: http(s)://{serviceNameSpace}-mgmt.servicebus.appfabriclabs.com

- Runtime Address: http(s)://{serviceNameSpace}.servicebus.appfabriclabs.com

Figure 1: AppFabric Durable Message BuffersIn the current CTP, the maximum message size is 256KB. Each buffer can store up to 100MB of message payload data, and there is a limit of 10 buffers per account.

Using the Durable Message Buffers

To use the service, you need to create a namespace through the management portal. With a namespace in place, you can then programmatically manage buffers and interact with them.

I will examine the steps in detail to set up a namespace, create a buffer, send messages to the buffer, retrieve messages from the buffer, and delete the buffer. I will also briefly cover related functionality for how to get a buffer's description, list all buffers created under an account, perform atomic read/delete operations, and unlock messages that were examined but were not processed.

Namespaces, Service Accounts, and Tokens

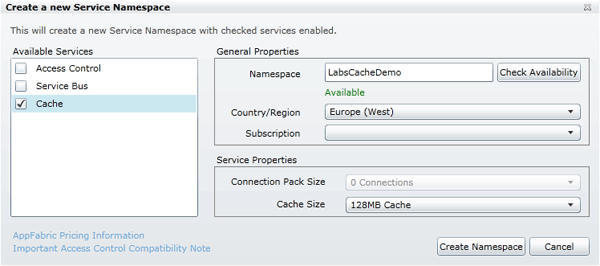

To start, you need to create a namespace that scopes the buffers you will create. To do this, log on to the Windows Azure AppFabric Labs Management Portal, and click New Namespace. The Create a new Service Namespace dialog box appears. You will need to select an Azure subscription and provide a globally unique service namespace, region, and connection pack size. For this CTP, the region and connection pack size options are fixed, which Figure 2 shows. …

Figure 2: Creating a namespace

The AppFabricCAT Team posted How to best leverage Windows Azure AppFabric Caching Service in a Web role to avoid most common issues on 6/1/2011:

Having spent the last day and a half in air-travelling “stand-by hell”, made me realize how much a simple well-constructed explanation of what is taking place can help you better deal with things a lot better. The following example leverages the caching service in a web role, this can help you better learn the intricacies of a custom approach that lets you cache your own .NET serializable objects. The bases on which should help minimized your time spent, in some type of “coding-stand-by hell”.

Currently, a simple search on Azure web role can give you lots of information on how to create one, and in the same manner you can get information on how to leverage the power of Azure AppFabric Cache Service in your web role to cache session state (this requires a configuration update). However, there is little information for those who want to use a customized approach and cache their own .NET serializable objects in a web role. At first, this does not seem to be much of a problem, since merely using the Azure AppFabric Cache APIs in your web role should suffice. Well, that is not exactly the whole history and hence, in this blog, I will explain the things that must be taken into consideration to minimize any possible frustration, increase performance and use the correct AppFabric Cache connection quota.

The method

Since I have created a blog that shows the concepts of the APIs used in AppFabric Cache, I went ahead and leveraged the same sample code to migrate the whole solution into a web role. Since both cache technologies (on-prem and in the cloud) are very similar and have kept API parity (some exceptions may exist, but that will be a topic in a different blog) then it follows that the transition from one to the other should also be simple, which is for the most part true. However, we are not just going from on-prem to cloud, but also from Windows forms to an ASP.Net page – we have two “bridges” to cross.

For reference on the difference between the on-prem and cloud technologies see this MSDN article.

The Interface

With these plans in mind, I went ahead and created a web role in Visual studio 2010 using the latest SDK and then removed all the default content (the one showing “Welcome to ASP.NET”) from the ASP.Net page. Then, I literally copied, via the traditional method of holding the Ctrl key while selecting, via mouse clicks, all the controls in the windows form from the old project (Form1.cs [Design] page). Then I released the keys and clicked “Ctrl + c”, and then pasted (Ctrl + v) all of the controls into the new web role project (Default.aspx file). Then I hit F5 and it worked! This meant that all my controls were going to have the same name. This only copied the interface and not the code behind. Below I show the old and new interface.

Figure 1: The windows form

Figure 2: The ASP.Net form

To break down my steps, instead of copying and pasting “all” of the code sections (in the windows form it is the Form1.cs file, and in the ASP.NET/web role it is the default.aspx.cs file), I only copied the code that updates the tStatus WebControls.TextBox and throw my own test exceptions (i.e. no cache code is used instead instrumented exceptions were thrown). At this point, I tested the interface, and received the following exceptions.

Figure 3: Dangerous request error

You will notice that the first action (say “Add”) will correctly update the text box with the instrumented exception, but the 2nd action will think that you are trying to do a post back to the server with the remaining information (the instrumented exception) from the status box and this is seen as a type of dangerous request. As per the message shown in the exception details above, one option is to change the request validation mode, but since we are not really trying to send any exception information to the server, the recommended approach is to disable the state of the text box to not perform a post back to the server. This can be achieved by changing the text box property from Enabled=True to Enabled=false, as shown in Figure 4.

Figure 4: Change to the TextBox property

Note that I have to do this since, for didactical purposes, I show the raw exception on the web UI but for production purposes, the exception should be handled in a separate class (anywhere but in the web UI), and probably put into a tracelog and in parallel throw a more friendly message, which will be shown to the Web UI on a stateless control (as per the property change mentioned above). Similar schemes can be created for value checking, such null return values from DataCacheItemVersion, all this with the goal of handling errors outside default.aspx.cs.

Another important thing to notice, which will become even more apparent later, is that the code within default.aspx.cs runs in the context of HttpHandler, which ASP.NET uses to map each HTTP request (one for every request in essence every web user). This means that every single line of code written in this context will be duplicated for every HTTP request. Hence, keep the amount of code in this context to a minimum (more code = more instances of that code repeatedly running), which will include but will not be limited to error tracing and validation. Notice, however, that I am not doing this in this sample for the purpose of simplicity and clarity.

Plugging the AppFabric Cache Code

The information on how to create and connect to a Windows Azure AppFabric Caching service can be found in a few blogs, here is one from MSDN. In essence, to use the AppFabric cache cloud Service, one takes the old code and modifies the way the DataCacheServerEndpoint is instantiated (use the cache end point name instead of the DNS name of a node or nodes) and then leverage the given ACS token to authenticate against the service, as shown below in Figure 5. Note that the same can be done using the Application Configuration file, for more on it, see this MSDN article.

Figure 5: Cloud (code on the left) vs. On-Prem (code on the right)

Now paste all the code, as is, from the old windows form project and the web role will serve a web forms that works, but only for the first one or two simple actions (e.g., adding, removing, etc…). Using local cache or version handles will throw exceptions of missing or uninitialized objects.

The issue is that the default.aspx.cs code is instantiated for each HTTP request, and hence the DataCacheFactory , the DataCacheItemVersion and DataCacheLockHandle objects are all instantiated many times on every request (on every HttpHandler). If by chance you somehow get the same instance that handled your previous request then things may work but we need to make the solution more predictable. In the case of simple operations like Add() the DataCacheFactory is only needed once so those may work. It worked great on the windows form because it maintains state (i.e. the same object instanced was the one used) but due to the nature of HttpHandler this state needs to be handle in a different manner.

The easy solution would be to simply label all of these objects to be private static, which will not keep only one instance of this object so in the case of the DataCacheFactory, it will minimize the amount of connections created against the Windows Azure AppFabric Caching service. As mentioned in my blog on the release of the product, this has direct influence on your maximum amount of connections quota. If you create many instances of the DataCacheFactory object, you will quickly fill your connections quota, which in turn will render the service unusable for the next hour (as per quota behavior), at which point the service will go back online until the connection quotas are quickly drained again.

Why did not we care so much about this when we were on-prem? The fact was that the same thing was taking place, but as long as the DataCacheFactory was not Disposed after every use (or in any frequency), then the effect may not have been noticed. But, the fewer cache factories you need to recreate the less performance penalty you will pay. AppFabric cache (in both on-prem and cloud) leverage WCF for its network traffic, which means that each time a cache factory is recreated, a new WCF channel is created and this can be expensive in terms of performance. I will show this in practice in my next blog.

As previously stated, the best performance will be reached by keeping as little code as possible under the default.aspx.cs file. The solution that you will find in the final project (which you can download from this link) is to encapsulate all of the AppFabric cache objects created, API usage and some validation, into a separate class, which in the sample is named the AFCache class. To keep things further “locked”, the AFCache Class is encapsulated into a static class of its own. In effect, it is made into a singleton. This class can be seen in Figure 5 above, which also shows how the code only differs a little bit in the constructor. Notice that for simplicity and encapsulation, at this point there are no static assignments. You can also see how to initialize a DataCacheServerEndpoint and how the ACS token is handled.

Another very important distinction is the added configuration line to assign MaxConnectionsToServer. By default, this is only one. If we had two, then AppFabric Cache client will allow itself to create up to two WCF channels, if needed. Hence, if you find that performance with one channel is not enough then increasing this value may be an option, but connection quotas should be always kept in mind, more so if several Web roles are used. Each webrole will need at least one cache factory, and as it is advised for redundancy. The minimum solution requires at least two web roles; hence you will need at least two connections. In this minimum case, I will recommend reserving at least 3 available connections since a drop in connection within one hour, may be assumed as a third connection.

The code below, shows the static encapsulation of the AFCache class

//Encapsulating the AFCache class to be used as a singleton public static class MyDataCache { private static AFCache _SingleCache = null; static MyDataCache() { _SingleCache = new AFCache(); } public static AFCache CacheFactory { get{ return _SingleCache; } } }Another important point is the encapsulation of AppFabric APIs within the AFCache class itself, here is how it is done in the sample, noticed how the highlighted areas indicate the action AppFabric Cache API.

public class AFCache { //...<Initializing cache factories and other private members>... public AFCache() { //...<Constructor, checking that cache factory is still alive>... } public DataCacheItemVersion Add(string myKey, string myObjectForCaching) { if (CheckOnFactoryInstance()) { return myDefaultCache.Add(myKey, myObjectForCaching); } return null; } public string Get(string myKey, out DataCacheItemVersion myDataCacheItemVersion) { if (!this.UseLocalCache && this.CheckOnFactoryInstance()) { return (string)myDefaultCache.Get(myKey, out myDataCacheItemVersion); } //Calling CheckOnLocalCacheFactoryInstance() will setup the local cache enable factory else if (this.UseLocalCache && this.CheckOnLocalCacheFactoryInstance()) { return (string)myDefaultCache.Get(myKey, out myDataCacheItemVersion); } else { myDataCacheItemVersion = null; } return null; } public DataCacheItemVersion Put(string myKey, string myObj) {...} public bool Remove(string myKey) {...} ... }Here is a sample of how the get method, which is wrapped within the cache AFCache class, is called from default.aspx.cs. This is triggered when the Get_Click()method is fired from the web form by the user

RetrievedStrFromCache = (string)MyDataCache.CacheFactory.Get(myKey, out myVersionBeforeChange)In Conclusion

Although parity does exist between the two versions of the technology (on-Prem and Cloud), there are small considerations that need to be seriously taken into account as part of the architecture. As a friend at work says, “we are making accountants out of coders”. That may be a little exaggerated, but it does illustrate the point that usability of the service is a concept that needs consideration. When working with cloud services, quotas have to be taken into account, which in turn makes the application take a more holistic, and even smarter approach to the usage of available services. This in turn, as in the case shown above, will also streamline execution – all and all, a good idea.

The AppFabricCAT Team described AppFabric Cache – Encrypting at the Client in a 5/31/2011 post:

Introduction

This blog follows the previous post in which we looked at a scheme to compress data before it is added to Windows Azure AppFabric Cache. In that blog we observed some interesting results that gave credence to notion that compressing data before placing it into cache was valuable at cutting down on the cache size with minimal tradeoff in performance. Our team is planning to expand those tests and will post a follow-up when the tests are completed. In this blog we expand the scope slightly to include the extra step of securing the data at rest. That is, encrypting the data prior to adding it into cache.

The Azure AppFabric SDK includes API’s to secure the message (cache data) over the wire using a symmetric cryptography protocol called SSL. But the ‘resting’ data is exposed if the Windows Azure AppFabric Access Control Service (ACS) key, which secures access to your cache, has been compromised. We have a customer discerning whether they should put their HR data into cache for fast lookup. There ask was encryption of data at rest. This blog will encrypt the data used in the previous blog with two little helper methods and summarize some of the performance numbers.

Implementation

Note: These tests are not meant to provide exhaustive coverage, but rather a probing of the feasibility and performance impact of using encryption to secure data saved into Azure AppFabric Cache.

Keeping things really simple, static methods were created to encrypt/decrypt the data. The test cases in the previous blog were enriched to include the encryption prior to sending it to the cache. SSL was used to secure the message transmission.

Cryptography Overview

.NET Framework 4 includes the System.Security.Cryptography namespace which provides cryptographic services, including those to secure data by encryption and decryption. The specific instrument used in this blog is the RijndaelManaged class, which greatly simplifies the amount of energy required to implement the encryption. The RijndaelManaged class can be used to encrypt/decrypt data according to the AES standard, but since encryption is a full topic in its own right; I will refer you to this blog or this generic MSDN sample for more details. I exploited the vanilla/default settings of the cryptography classes provided by .NET 4.

Encryption Method

Shown below is the static method used to return a byte array of encrypted data. The EncryptData static method takes the byte array to be encrypted, the secret key to use for the symmetric algorithm, the initialization vector to use for the symmetric algorithm and returns an encrypted byte array. By default, the SymmetricAlgorithm.Create method creates an instance of the RijndaelManaged class. The CreateEncryptor method provides the encryption algorithm for an instance of CryptoStream which provides the cryptographic transformation link to the MemoryStream.

public static byte[] EncryptData(byte[] inb, byte[] rgbKey, byte[] rgbIV) { SymmetricAlgorithm rijn = SymmetricAlgorithm.Create(); byte[] bytes; using (MemoryStream outp = new MemoryStream()) { using (CryptoStream encStream = new CryptoStream(outp, rijn.CreateEncryptor(rgbKey, rgbIV), CryptoStreamMode.Write)) { encStream.Write(inb, 0, (int)inb.Length); encStream.FlushFinalBlock(); bytes = outp.ToArray(); } } return bytes; }Decryption Method

The DecryptData static method is the essentially the inverse of the EncryptData method, it takes in an encrypted by array and returns the decrypted representation. The only subtle difference is that we are using the CreateDecryptor method to provide a decryption algorithm to the CryptoStream.

public static byte[] DecryptData(byte[] inb, byte[] rgbKey, byte[] rgbIV) { SymmetricAlgorithm rijn = SymmetricAlgorithm.Create(); byte[] bytes; using (MemoryStream outp = new MemoryStream()) { using (CryptoStream encStream = new CryptoStream(outp, rijn.CreateDecryptor(rgbKey, rgbIV), CryptoStreamMode.Write)) { encStream.Write(inb, 0, (int)inb.Length); encStream.FlushFinalBlock(); bytes = outp.ToArray(); } } return bytes; }Key Generation

Key generation was straight forward. The default key generation methods of the RijndaelManaged class were used to generate the secret key and initialization vector. The keys were generated once and used for all encryption and decryption calls.

SymmetricAlgorithm alg = SymmetricAlgorithm.Create(); alg.GenerateIV(); alg.GenerateKey(); byte[] rgbKey = alg.Key; byte[] rgbIV = alg.IV;

Results

See the previous blog post for data model and a more comprehensive test case description. The average duration in milliseconds were computed. The test cases were run 3 times and the values grouped by test case. All Encryption test cases were run with SSL enabled. The keys for the tables are as follows.

- ProdCat – A ProductCategory object

- Product: A Product object

- ProdMode: A Product object which includes the ProductModel

- ProdDes: A Product object which includes ProductModel and ProductDescriptions

Time to ‘Get’ and Object

Figure 1 displays the time to retrieve an object from the AppFabric Cache. The AppFabric SDK call executed was DataCache.Get. The chart shows that there is a small impact securing your data, either with SSL or the encryption methods detailed in this blog.

Figure 1 Time to Get an Object from Cache

Time to ‘Add’ and Object

Figure 2 displays the time to put an object into the AppFabric Cache. The call made was DataCache.Add. From the table it is apparent that the numbers are quite comparable align with those retrieve an object from cache that has been encrypted.

Figure 2 Time to Add an Object to Cache

Conclusion

In this blog we enhanced our compression algorithms to include encryption to secure the Azure AppFabric data at rest. The results clearly indicate that encryption adds a level of overhead to the performance, particularly as the object size increases. Compressing those large objects before encrypting will afford you a measure of performance gain with the added bonus of less cache space used.

Reviewers : Jaime Alva Bravo

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

• Mike Benkovich (@mbenko) and Adam Grocholski (@adamgrocholski) will present an MSDN Webcast: Windows Azure Boot Camp: Connecting to Windows Azure (Level 200) on 6/13/2011 at 12:00 Noon PDT:

- Event ID: 1032485093

- Language(s): English

- Product(s): Windows Azure.

- Audience(s): Pro Dev/Programmer.

We explore the new features of Windows Azure Connect to connect on-premises compute and storage to the cloud during this webcast. We look at how to configure and deploy a typical configuration and explore the challenges that may be presented along the way.

Technology is changing rapidly, and nothing is more exciting than what's happening with cloud computing. Join us as we dive deeper into Windows Azure and cover requested topics during these sessions that extend the Windows Azure Boot Camp webcast series.

Try Azure Now: Try the cloud for 30 days for free! Enter promotional code: WEBCASTPASS

Presenters: Mike Benkovich, Senior Developer Evangelist, Microsoft Corporation and Adam Grocholski, Technical Evangelist, RBA Consulting

Energy, laughter, and a contagious passion for coding: Mike Benkovich brings it all to the podium. He's been programming since the late 1970s when a friend brought a Commodore CPM home for the summer. Mike has worked in a variety of roles, including architect, project manager, developer, and technical writer. Mike is a published author with WROX Press and APress Books, writing primarily about getting the most from your Microsoft SQL Server database. Since appearing in Microsoft's DevCast in 1994, Mike has presented technical information at seminars, conferences, and corporate boardrooms across America. This music buff also plays piano, guitar, and saxophone, but not at his MSDN events. For more information, visit www.BenkoTIPS.com.

Adam Grocholski is currently a technical evangelist at RBA Consulting. Recently he has been diving into the Windows Azure and Windows Phone platforms as well as some more obscure areas of the Microsoft .NET Framework (i.e., T4 and MEF). From founding and presenting at the Twin Cities Cloud Computing user group to speaking at the local .NET and Silverlight user groups, code camps, and a number conferences, Adam is committed to building a great community of well-educated Microsoft developers. When he is not working, he enjoys spending time with his three awesome daughters and amazing wife. You can catch up with his latest projects and thoughts about technology at http://thinkfirstcodelater.com, or if that's too verbose for your liking you can always follow him on twitter at http://twitter.com/agrocholski.

If you have questions or feedback, contact us.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• The Windows Azure Team announced the availabilty of New Content: Windows Azure Video Tutorials Now Available in a 6/2/2011 post: