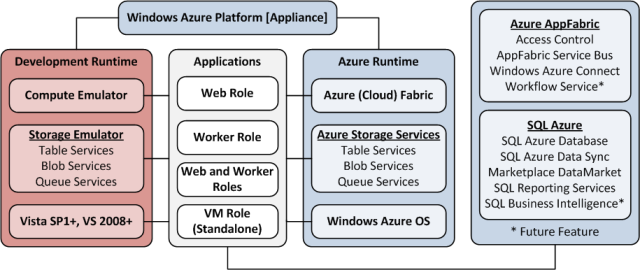

Windows Azure and Cloud Computing Posts for 4/2/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework 4+

- Windows Azure Infrastructur and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

Chris Ismael posted The Windows Azure Table – IKEA analogy to the Innovative Singapore blog on 4/3/2011:

A few days ago I gave an overview presentation of Windows Azure to some BizSpark startups. One of the questions that I got asked often during and after the presentation was why they would bother with a Windows Azure Table when there’s a SQL Azure available.

I admit that after the presentation I felt that I have not explained this quite well too. Unfortunately, I don’t think it can be explained in one shot, but rather as series of explanations that eventually build up to one “Aha” moment. So I hope this post will provide helpful information in understanding the existence of Azure Tables and why it’s cheap. In a later post, we will touch on some uses for Azure Tables.

Why an Azure Table is cheaper

To start off, let me point out an interesting characteristic of Tables that will tie all this together: a very simplistic structure.

(Picture from Bing)

When I think of Azure Tables, I always remember IKEA’s brochure explaining how they can sell furniture cheaper than anyone else. (If you need a primer on what IKEA is, here’s a Wikipedia link). They refer to it as “flat-packing” and it is the way they design the furniture pieces so that more can be produced and transported. Assembling the furniture is also generally the responsibility of the buyer, not IKEA’s. As most of you know, when you buy stuff from IKEA, the parts are neatly arranged in the slimmest way possible in a box, and has easy to follow instructions to assemble the parts.

In a very similar way, Tables are much like IKEA’s flat-packing process. It is structured in a very simplistic manner so that it’s easier to transport and cheaper to produce.

Easier To Transport/Move

You might be asking right now, where are we supposed to move the Tables, and why would I do that anyway? Well, first and foremost, “moving” the table is handled by Microsoft for you (explanation coming in a bit). So you need not worry about that part. The interesting question is why Microsoft would need to “move” Tables.

By now (hopefully), you should understand that one of the benefits of cloud computing is being able to provide a platform that will stand up to hardware breakdown. This is done through the concept of having redundant “machines”, which is achieved by having several virtualized instances of your application i.e., I can have 3 virtualized instances of my application so if 1 goes down, the my users can still access my application because their requests are load- balanced to the other 2 instances/machines. Thus we can achieve 100% uptime (in ideal cases).

Now going back to the IKEA analogy, try to imagine IKEA running a sale for $1 dinner table. They’d be swarmed with hungry buyers (high load). Fortunately for IKEA, they have designed and flat-packed each dinner table into slim boxes, so they can stack more of it and sell/deliver more if they need to.

In the same way for Tables, Microsoft is replicating your Tables so that it can stand up to high load. If one of your tables goes down (a defective IKEA dinner table that needs to be replaced), Microsoft re-routes requests to the duplicate copies while it tries to start another instance a.k.a “move” so that you maintain a redundant setup. Microsoft can do this very quickly and easily because Tables, unlike a relational database (say SQL Azure), have a simpler structure that is easier to replicate across several machines. There are no table relationships to worry about (each IKEA dinner table will have its own assembling instructions and tools), so an Azure table could be sitting in one machine, and another table on another, and they don’t have to worry about each other. User “queries” will just be re-routed to wherever Microsoft “moved” the Tables to, and it will independently satisfy the user requests.

In a relational database, it’s much more complex to “move” tables because you’d have to bring along all the other tables related to the table you’re querying (assuming you have relationships across them) to make sure that it works on one machine. Each machine in the redundant set-up would need to have all related tables in one machine for the “JOINS” to work. This would take a lot of time and processing power. Time and power cost money. In Windows Azure Tables, a JOIN statement doesn’t apply, because it was designed that way to make each table independent and easier/faster to replicate. The term for this is a denormalized table.

Now you might be asking, are you saying SQL Azure doesn’t have redundancy? Absolutely not! Redundancy is also built-in for SQL Azure. But as I said, the less-complex structure of an Azure Table makes it much cheaper.

Cheaper To Produce

Now you know why it’s like this: (Pay-As-You-Go Pricing from http://www.microsoft.com/windowsazure/pricing/).

Azure Tables

- $0.15 per GB stored per month

- $0.01 per 10,000 storage transactions

SQL Azure

- Web Edition

- $9.99 per database up to 1GB per month

- $49.95 per database up to 5GB per month

- Business Edition

- $99.99 per database up to 10GB per month

- $199.98 per database up to 20GB per month

- $299.97 per database up to 30GB per month

- $399.96 per database up to 40GB per month

- $499.95 per database up to 50GB per month

The IKEA dinner table is cheap because IKEA designed it with simplicity in mind, it doesn’t take up too much space when moving it, and they let the buyer assemble the table themselves. The non-IKEA dinner table is expensive because it’s already an assembled piece. Moving it around the factory and delivering it to your house is much more hassle than moving a flat box. Also, IKEA can respond to demand much faster because the design of their tables allows them to easily move it around and produce them faster.

At this point I hope one thing is obvious, that to get the benefit out of a cloud computing platform for data storage in terms of standing up to unpredictable load, replication is a necessity. And the easiest, fastest, and cheapest way for Microsoft to replicate your data is to employ a simple structure. Hence, Azure Tables were provided as an option.

In a later post we will explore in more detail how queries can be much faster in Windows Azure Tables, and sample scenarios for using Tables.

I’m still waiting for Windows Azure Table storage to support secondary indexes.

Keith Bauer published Comparing REST API’s: Windows Azure Queue Service vs. Amazon Simple Queue Service (SQS) on 3/30/2011:

A recent customer engagement prompted me to share details regarding a comparison of the REST API’s used to manage the Windows Azure Queue Service and the Amazon Simple Queue Service (SQS). As expected, these services both provide similar types of message queuing functionality for their respective platforms: create queues, delete queues, send messages, retrieve messages, etc… However, what is not typically expected is the fact that Amazon’s SQS service does not support a REST API. As stated in the 2009-02-01 SQS migration guide, “the REST API is not available”.

This wasn’t always the case. In fact, the Amazon SQS used to have a REST interface which provided all of the basic message queuing services. This was originally provided in their SQS API version 2006-04-01 as well as in their SQS API version 2007-05-01, both of which have been superseded by a newer API which no longer provides a REST interface. Figure 1 below illustrates how the REST API’s used to compare to one another.

Figure 1 – REST API for Common Queue Service Operations

Implications

Contemplating the differences between these REST API’s is now a moot point. If you are steadfast on using a REST architectural style for your cloud-based message queuing needs then the Windows Azure Queue Service should provide what you are looking for. However, to be fair, even though Amazon’s SQS REST API is no longer available, they do support other methods which use simple HTTP/HTTPS requests and provide all of the same functionality as their previous REST API. One of the methods used to manage SQS today is the SQS Query API, which uses both GET and POST methods. Although similar to REST in many ways, the SQS Query API does not adhere to all of the guidelines of a REST architecture style, as defined in the often referenced dissertation by Roy Thomas Fielding. Optionally, you can leverage the SOAP protocol for making SOAP requests and calling SQS service actions. This, however, is only supported by Amazon over an HTTPS connection.

The Amazon SQS REST API is no longer available. SQS service management is provided via the SQS Query API, or SQS SOAP API.

Some of you may be wondering, if the SQS Query API uses HTTP/HTTPs coupled with GET and POST methods, then why is this not REST? Well, for starters, the SQS Query API does not represent a resource as a URI. This violates one of the requirements for calling an API a REST API. However, if you are in the camp of people which accept various levels of REST (i.e. level 0, 1, 2, 3), then according to the Richardson Maturity Model as a way of measuring the restful maturity of a service, the SQS Query API can arguably be considered a Level 0 REST interface. Although, Roy Thomas Fielding, the individual known for coining the REST term, would likely not call anything a REST API until at least a Level 3 interface has been achieved.

Summary

The purpose of this article is not to debate whether REST is good or bad, or to determine if a service which supports a REST API is any better or worse than the other. Rather, this should provide clarity that the Windows Azure Queue Service leverages a REST API, while Amazon’s Simple Queue Service (SQS) leverages the SQS Query API, as well as a SOAP API. Hopefully this brief synopsis has provided some bit of useful insight with the necessary background for better understanding how these common cloud-based message queuing solutions are managed.

Resources and References

- Windows Azure “Queue Service API” article in the MSDN Library

- Amazon Simple Queue Service “Developer Guide – API Version 2006-04-01” PDF

- Amazon Simple Queue Service “Developer Guide – API Version 2007-05-01” PDF

- Amazon Simple Queue Service “Developer Guide – API Version 2008-01-01” PDF

- Amazon Simple Queue Service “Developer Guide – API Version 2009-02-01” Online

- Dissertation by Roy Thomas Fielding

- Richardson Maturity Model

<Return to section navigation list>

SQL Azure Database and Reporting

Mauricio Rojas described Windows Azure Migration: Database Migration, Post 1 in a 4/2/2011 post:

When you are doing an azure migration, one of the first thing you must do is

collect all the information you can about your database.Also at some point in your migration process you might consider between migration to SQL Azure or Azure Storage or Azure Tables.

Make all the appropriate decisions you need to collect at least basic data like:

- Database Size

- Table Size

- Row Size

- User Defined Types or any other code that depends on the CLR

- Extended Properties

Database Size

You can use a script like this to collect some general information:

create table #spaceused( databasename varchar(255), size varchar(255), owner varchar(255), dbid int, created varchar(255), status varchar(255), level int) insert #spaceused (databasename , size,owner,dbid,created,status, level) exec sp_helpdb select * from #spaceused for xml raw drop table #spaceusedWhen you run this script you will get an XML like:

<row databasename="master" size=" 33.69 MB" owner="sa" dbid="1" created="Apr 8 2003" status="Status=ONLINE, ..." level="90"/> <row databasename="msdb" size=" 50.50 MB" owner="sa" dbid="4" created="Oct 14 2005" status="Status=ONLINE, ..." level="90"/> <row databasename="mycooldb" size=" 180.94 MB" owner="sa" dbid="89" created="Apr 22 2010" status="Status=ONLINE, ..." level="90"/> <row databasename="cooldb" size=" 10.49 MB" owner="sa" dbid="53" created="Jul 22 2010" status="Status=ONLINE, ..." level="90"/> <row databasename="tempdb" size=" 398.44 MB" owner="sa" dbid="2" created="Feb 16 2011" status="Status=ONLINE, ..." level="90"/>And yes I know there are several other scripts that can give you more detailed information about your database

but this one answers simple questions likeDoes my database fits in SQL Azure?

Which is an appropriate SQL Azure DB Size?Also remember that SQL Azure is based on SQL Server 2008 (level 100).

- 80 = SQL Server 2000

- 90 = SQL Server 2005

- 100 = SQL Server 2008

If you are migrating from an older database (level 80 or 90) it might be necessary to upgrade first.

This post might be helpful: http://blog.scalabilityexperts.com/2008/01/28/upgrade-sql-server-2000-to-2005-or-2008/

Table Size

Table size is also important.There great script for that:

http://vyaskn.tripod.com/sp_show_biggest_tables.htm

If you plan to migrate to Azure Storage there are certain constraints. For example consider looking at the number of columns:

You can use these scripts: http://www.novicksoftware.com/udfofweek/vol2/t-sql-udf-vol-2-num-27-udf_tbl_colcounttab.htm (I just had to change the alter for create)

Row Size

I found this on a forum (thanks to Lee Dice and Michael Lee)

DECLARE @sql VARCHAR (8000) , @tablename VARCHAR (255) , @delim VARCHAR (3) , @q CHAR (1) SELECT @tablename = '{table name}' , @q = CHAR (39) SELECT @delim = '' , @sql = 'SELECT ' SELECT @sql = @sql + @delim + 'ISNULL(DATALENGTH ([' + name + ']),0)' , @delim = ' + ' FROM syscolumns WHERE id = OBJECT_ID (@tablename) ORDER BY colid SELECT @sql = @sql + ' rowlength' + ' FROM [' + @tablename + ']' , @sql = 'SELECT MAX (rowlength)' + ' FROM (' + @sql + ') rowlengths' PRINT @sql EXEC (@sql)Remember to change the {table name} for the name of the table you need

User Defined Types or any other code that depends on the CLR

Just look at your db scripts at determine if there are any CREATE TYPE statements with the assembly keyword.

Also determine if CLR is enabled with a query like:select * from sys.configurations where name = 'clr enabled'

If this query has a column value = 1 then it is enabled.

Extended Properties

Look for calls to sp_addextendedproperty dropextendedproperty OBJECTPROPERTY and sys.extended_properties in your scripts.

Mark Kromer (@mssqldude) posted More on Cloud BI @ SQL Mag BI Blog on 4/2/2011:

I’ve started a new series on building Cloud BI with Microsoft technologies at the SQL Server BI Blog, starting with ETL using SSIS to move data from traditional on-premises SQL Server to SQL Azure databases, serving as a data mart: http://bit.ly/hggUeT.

Steve Yi posted a Recap of CloudConnect, and the Future of Data to the SQL Azure Team blog on 4/1/2011:

On Wednesday March 9th, I had the opportunity to talk at Cloud Connect about cloud computing, the Windows Azure platform - and I also took some time to talk about what the public cloud is along with some growing trends that will affect and shape the future of the cloud. If you're interested, you can find the deck here. In our discussions with customers and partners, there are two things that are quickly converging currently separate conversations about cloud, web, data, and mobile devices:

- Public Cloud and Platform -As-A-Service (PaaS) abstract away the complexity of infrastructure maintenance, still providing high-availability, failover, and scalability, and are open, flexible, and heterogeneous.

- The future of the web is about data - sharing it to multiple user experiences, extending it beyond the silos of the office, and deriving new insights by easily joining your data with external sources of information.

The unique opportunities that public cloud and platform-as-a-service (PaaS) bring to developers and businesses is the ability to focus specifically user experience and features that benefit users, rather than focusing on non-functional requirements like failover and high-availability. While critical to the operation of a system, users don't necessarily experience any of those benefits tangibly, except of course, if the system goes down.

A great example of a solution using the full potential of public cloud and PaaS is Eye On Earth. As a service of the European Environment Agency (EEA), it collects data from 6,000 monitoring stations across the European Union, coordinating efforts across all 32 member countries to present a centralized visualization of air and water quality to 600 million citizens. Eye On Earth also connects 600 partner organizations across research institutes, universities, ministries and agencies.

In a strictly on-premises world, solutions like this would never exist. The capital expenditures necessary to serve and maintain and infrastructure to serve 600 million people is daunting, with much of it lying idle much of the time. Additionally, with the matrix of different agencies, ministries, sharing the cost of such a solution would have been a nightmare. The economics of the cloud made this feasible. There's also the challenge of collecting and aggregating data efficiently across 6,000 remote monitoring stations. Cloud databases such as SQL Azure now make this possible. You can read more here, and see a video about it here.

With the growing reach of mobile devices everywhere, the web has evolved to more than just a mere browser experience - it is a heterogeneous mix of browser, smartphone, tablet applications and the application marketplaces. Users demand applications that are more agile, robust and accessible via the web. The cloud provides the perfect platform to step into delivering again this demand by users. In this new demand generation frontier, it is critical for developers to create hybrid applications and premises aware systems that are synchronized and provide multi-form factor user experiences.

Shifting gears here, to my second assertion - that the future of the web is about data. The past dozen or so years have seen the explosion of the web, and over the past few years that's evolved to include user experiences on mobile devices and tablets. What's quickly evolving is the necessity of extending data beyond user experiences - now to developers, content partners, and available via web APIs to compose n-number of variable new user experiences. Some interesting numbers to note:

- “LinkedIn Founder: Web 3.0 Will Be About Data: Mashable Mar 30 2011 - http://mashable.com/2011/03/30/reid-hoffman-data/

- “Smartphone shipments surpass PCs” (2010 Q4): Financial Times Feb 08 2011 - http://www.ft.com/cms/s/2/d96e3bd8-33ca-11e0-b1ed-00144feabdc0.html#axzz1DV7zJ5nj

- “Twitter Reveals: 75% of Our Traffic is via API (3 billion calls per day): Programmable Web Apr 15 2010 - http://blog.programmableweb.com/2010/04/15/twitter-reveals-75-of-our-traffic-is-via-api-3-billion-calls-per-day/

- Proliferation of Web APIs fuel the popularity of a web service…increasingly the API is the service: Wired Mar 08 2011 - http://www.webmonkey.com/2011/03/thousand-of-apis-paint-a-bright-future-for-the-web/

The web has evolved to more than just a browser experience; it's a heterogeneous mix of browser, smartphone, tablet applications and app-markets. The cloud has an important role to play in this evolution, by easily extending data from on-premises data sources and synchronizing it to the cloud through technologies like SQL Azure Data Sync and making it available to everyone, every developer, and every device.

Through initiatives we're taking to support open web data protocols such as OData, embracing this world of cloud data is available now, where one cloud service can power multiple experiences across web, device, and plug into existing social media and geospatial user experiences.

Industry-wide, this evolution will undoubtedly take time. It's exciting to be participating in this change, watching the transition happen, and watching how public cloud and PaaS are connecting data across the on-premises world to the web.

Jonathan Gao posted SQL Azure SQL Authentication to the TechNet Wiki on 3/31/2011:

SQL Azure supports only SQL Server authentication. Windows authentication (integrated security) is not supported. You must provide credentials every time when you connect to SQL Azure.

The SQL Azure provisioning process gives you a SQL Azure server, a master database, and a server-level principal login of your SQL Azure server. This server-level principal is similar to the sa login in SQL Server. Additional SQL Azure databases and logins can then be created in the server, as needed.

You can use Transact-SQL to administrate additional users and logins using either Database Manager for SQL Azure or SQL Server Management Studio 2008 R2. Both tools will list the users and logins associated with the databases; however, at this time it does not provide a graphical user interface for creating the users and logins.

Note: The current version of SQL Azure supports only one Account Administrator and one Service administrator account.

In this Article

- The master database

- Creating logins

- Creating users

- Configuring user permissions

- Deleting users and logins

The Master Database

A SQL Azure Server is a logical group of databases. Databases associated with one server Azsre server may spread on different physical computers at the Microsoft data center. You must perform server-level administration for all of the database on the master database. For example, the master database keeps track of the logins. You must connect to the master database to create and drop logins.

Creating Logins

Logins are server wide login and password pairs, where the login has the samepassword across all databases. You must be connected to the master database on SQL Azure with the administrative login to execute the CREATE LOGIN command. Some of the common SQL Server logins can be used like sa, Admin, root. For a complete list, see Managing Databases and Logins in SQL Azure at http://msdn.microsoft.com/en-us/library/ee336235.aspx.

--create a login named "login1"

CREATE LOGIN login1 WITH password='pass@word1';

--list logins. You must run this statement separately from the CREATE LOGIN statement

SELECT * FROM sys.sql_logins;Note: SQL Azure does not allow the USE Transact-SQL statement, which means that you cannot create a single script to execute both the CREATE LOGIN and CREATE USER statements, since those statements need to be executed on different databases.

Creating Users

Users are created per database and are associated with logins. You must be connected to the database in where you want to create the user. In most cases, this is not the master database.

--create a user named "user1"

CREATE USER user1 FROM LOGIN login1;

Configuring User Permissions

Just creating the user does not give them permissions to the database. You have to grant them access. For a full list of roles, see Database-level roles

--give user1 read-only permissions to the database via the db-datareader role

EXEC sp_addrolemember 'db_datareader', 'user1';

Deleting Users and Logins

Fortunately, SQL Server Management Studio 2008 R2 does allow you to delete users and logins. To do this traverse the Object Explorer tree and find the Security node, right click on the user or login and choose Delete. You can also use the DROP LOGIN and the DROP USER statements.

See Also

<Return to section navigation list>

MarketPlace DataMarket and OData

Steve Marx (@smarx) and Wade Wegner (@WadeWegner) produced a 00:34:14 Cloud Cover Episode 42 - The Meaning of Life, the Universe, and Everything (also DataMarket) Channel9 video segment on 4/1/2011:

Join Wade and Steve each week as they cover the Windows Azure Platform. You can follow and interact with the show @CloudCoverShow.

In this episode, Christian "Littleguru" Liensberger joins Steve and Wade as they discuss the Windows Azure Marketplace DataMarket. Christian explains the purpose of DataMarket, shows an ASP.NET MVC 3 demo, and shares some tips and tricks.

Also covered in this show:

- Channel 9: Getting Started with the Windows Azure Toolkit for Windows Phone 7

- Database Import/Export in SQL Azure

- Windows Azure sessions at MIX11 posted

- The Rock, Paper, Azure! challenge (also on YouTube)

- Updated MSDN documentation: Steps to Set-up Invoicing

If you’re looking to try out the Windows Azure Platform free for 30-days—without using a credit card—try the Windows Azure Pass with promo code "CloudCover".

Arlo Belshee started Geospatial Question: Common Operations and More Scenarios threads on the OData Mailing List on 4/1/2011. These topics might interest current and potential users of SQL Server’s geometry and geography data types.

Jason Birch’s (@jasonbirch) response describes two common operations and mentions many geospatial data formats. His Geospatial Ramblings blog is here.

OData is one of GeoREST’s Example Format Outputs; simple configuration data is here.

The thread archive and mailing list signup link is here. Arlo is a senior program manager on Microsoft’s OData team.

Marshall Kirkpatrick reported Data.gov & 7 Other Sites to Shut Down After Budgets Cut in a 3/31/2011 post to the ReadWriteWeb blog:

Two years ago the incoming Obama administration launched a number of ambitious websites, most notably Data.gov, that were dedicated to offering public and government data to the outside world. The stated intention was to foster transparency and offer a platform for the development of new software and services. It appears those experiments may be over for now.

Today the Sunlight Foundation and Federal News Radio reported that the public projects Data.gov, USASpending.gov, Apps.gov/now, IT Dashboard and paymentaccuracy.gov as well as a number of internal government sites including Performance.gov, FedSpace and many of the efforts related the FEDRamp cloud computing cybersecurity effort would be taken offline in coming weeks due to budget cuts by Congress. Perhaps things like electronic government, software platforms and public accountability were just fads, anyway.

Update:. We're hearing from several places that there's a potentially viable effort to save these sites and organizations. Here is one perspective on that and you can also see the Sunlight Foundation's Save the Data petition. See also Alex Howard's in-depth reporting on this news published on Friday.

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

Valery Mizonov explained Windows Azure AppFabric Service Bus and Location Virtualization without the Loch Ness Monster in a 3/31/2011 post to the AppFabric CAT blog:

The following post is not about my trip to Scottish Highlands in 2005 even though it was fun having been able to spend a few days with “Nessie”. I just thought I would share some observations from a recent customer engagement that attracted no legendary monsters whatsoever. Instead, it has proved the value of a monstrous capability that exists in the Windows Azure AppFabric Service Bus and this prompted me to spend a few minutes documenting some key insights.

Problem Space

Software components in modern solutions can present the degree of multi-scale complexity in their behavior. Tracing the source of a calculation error in the algorithmic trading module that is part of a highly distributed trading system is not trivial. It’s just hard, although the word “hard” doesn’t appear to be sufficiently reflective to express the true pain. Luckily, modern developer tools have rapidly caught up and made it easier to debug and troubleshoot puzzling code on a developer machine. Next, there was “It works on my box!” theme. Again, the developer tools did not lose this battle and started offering remote debugging capabilities. Next, there was “The Cloud” theme. And this is where it has landed itself on the developer’s desk, this time with no painkiller pills.

Problem Definition

Let’s just say, a service in my solution was developed by a group of ex-rocket scientists and mostly due to its over-engineered complexity, it’s not subject to any forms of troubleshooting except attaching a debugger and stepping through the source code. Let’s also say that for some obvious reasons, my goal is to be able to run this service outside my local, easily accessible networking infrastructure. I even may take the liberty to say that I want my service to be running on the Cloud, and not where the bunch of brain surgeons code geeks prescribe me to host it. In essence, I’m running into a problem that can be generically abstracted as follows:

If things go wrong, how do I step into and debug my technically challenging cloud service component?

In principle, all I would like to know is how I go about troubleshooting a complex issue in my live cloud-based multi-layer heavily distributed solution, ideally using the same tools and techniques which I rely on in the traditional on-premises world. I want it to be as easy as practically possible, as painless as humanly possible and as effective as clinically possible while ensuring that I’m not going against physics of time travel and not losing my brand as being a Rock Star problem solver. The last statement doesn’t apply to me personally though.

Problem Refined

Enough with abstractions, let’s now face the reality. Deploying and setting up remote debugging service, opening up ports and keeping fingers crossed is one way. It works in theory but fails to derive the expected results in practice. The remote debugging components in Visual Studio require both TCP and UDP ports to be traversable through a firewall, and I’m confident that it is going to be a tough dialog with the networking team trying to convince them to patch the firewall solely for remote debugging.

The other way would be to bring the faulty service into my on-premises environment where I can enjoy having the luxury of diagnostic tools to help facilitate the root cause analysis. Note that this service is just a small needle in the end-to-end architecture. It is barely survivable without the entire landscape of other services, back-end systems, middleware components and so on.

This is exactly where Windows Azure AppFabric Service Bus steps in. In addition to providing the messaging infrastructure for secure service interaction across network boundaries, it comes with rich location virtualization capability making services agnostic with respect to their physical location. The AppFabric Service Bus maps service endpoints into the shared, hierarchical federated namespace model enabling the endpoints to be identifiable by a well-known URI that is accessible regardless of the endpoint’s location. The endpoint URI does not change if service is relocated outside its original hosting boundaries.

So, what is there for me? Simply put, there is an opportunity to have a complete flexibility as it pertains to placement of services in a complex, distributed solution architecture. Services that register their endpoints on the AppFabric Service Bus can be located anywhere where there is an Internet connection – in a Windows Azure data center, private hosting environment, a box in the server room, a Hyper-V VM running on a developer workstation, whatever have you.

The AppFabric Service Bus infrastructure takes the responsibility for performing all the heavy lifting associated with registering an endpoint in the federated naming system, efficiently routing traffic to its virtual address, reconfiguring the virtual address when service deployment topology or location changes. What is left for the developer is a decision as to where to re-locate a buggy service so that both its binaries and source code (or PDB files) can be effectively accessed to provide familiar, much-desired local debugging experience.

What Did We Really Learn?

In a complex distributed solution, software components can be present at many physical locations, not always living together under a single roof. Abstracting these locations through the means of location virtualization provides the foundation of building highly agile service interactions. This in turn enables the interacting participants to be moveable across geo boundaries without having to make anyone aware of a change.

The Windows Azure AppFabric Service Bus addresses the location virtualization requirement and makes it easier to connect location-independent services in a discoverable, secure and dynamic fashion. This capability is attributed to the ESB space and comes very handy regardless whether the end goal is as big and bold as “providing true loose coupling between services and their consumers” and as small and naïve as “bringing error-prone cloud-based solution components into a development box for surgery”.

Additional Resources/References

- “Service Bus Namespace Model” article in the MSDN Library.

- “A Developer’s Guide to Windows Azure AppFabric Service Bus“ whitepaper by Aaron Skonnard.

- “Add the ability to remote debug my application running in the cloud” ask on Windows Azure Feature Voting Forum.

Christian Weyer announced a new 3/31/2011 drop of the thinktecture IdentityServer on CodePlex:

Project Description

thinktecture IdentityServer is the follow-up project to the very popular StarterSTS. It's an easy to use security token service based on WIF, WCF and MVC 3.

Disclaimer

IdentityServer did not go through the same testing or quality assurance process as a "real" product like ADFS2 did. IdentityServer is also lacking all kinds of enterprisy features like configuration services, proxy support or operations integration. The main goal of IdentityServer is to give you a starting point for building non-trivial security token services. Furthermore the current code base is in early stages - if you like a more tested version of this,please use StarterSTS.

High level features

- Active and passive security token service

- Supports WS-Federation, WS-Trust, REST, SAML 1.1/2.0 and SWT tokens

- Supports username/password and client certificate authentication

- Defaults to standard ASP.NET membership, roles and profile infrastructure

- Control over security policy (SSL, encryption, SOAP security) without having to touch WIF/WCF configuration directly

- Automatic generation of WS-Federation metadata for federating with relying parties and other STSes

Intro Screencast: http://identity.thinktecture.com/download/identityserver/identityserverctp1.wmvMy Blog: http://www.leastprivilege.com

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Avkash Chauhan explained Windows Azure Commandlets installation Issues with Windows Azure SDK 1.4 and Windows 7 SP1 in a 4/3/2011 post:

Installing Windows Azure Cmdlets on Windows 7 SP1 OS based machine with Windows Azure SDK 1.4 could results following TWO errors:

1. You will get an error that installer is not Compatible with your OS (Windows 7 SP1).

2. You will get an error that a depend component Windows Azure SDK 1.3 is missing.

If you don't know where to get Windows Azure Cmdlets:

- You can download Windows Azure Cmdlets form the link below:

http://archive.msdn.microsoft.com/azurecmdlets/Release/ProjectReleases.aspx?ReleaseId=3912

- After it, please expand "WASM Cmdlets" installer (WASMCmdlets.Setup.exe) to a location in your machine. (i.e. C:\Azure\WASMCmdlets)

We will solve these two problems here:

To fix the installation problem related with Windows 7 SP1 please do the following:

1. Please browse C:\Azure\WASMCmdlets\setup folder and open Dependencies.dep file in notepad:

You will see the install script is searching for OS build numbers as below however the Windows 7 SP1 build number #7601 is missing:

<dependencies>

<os type="Vista;Server" buildNumber="6001;6002;6000;6100;6200;7100;7600">

2. Now Add Window 7 SP1 Build version 7601 at the end of the "os type" string as below:

<dependencies>

<os type="Vista;Server" buildNumber="6001;6002;6000;6100;6200;7100;7600;7601">

3. Save the file.

To fix the installation problem related with Windows Azure SDK 1.4 please do the following:

1. Please browse the C:\Azure\WASMCmdlets\setup\scripts\dependencies\check folder and open CheckAzureSDK.ps1 file in notepad:

You will see the install script is searching for Window Azure SDK version 1.3.11122.0038 as below:

$res1 = SearchUninstall -SearchFor 'Windows Azure SDK*' -SearchVersion '1.3.11122.0038' -UninstallKey 'HKLM:SOFTWARE\Wow6432Node\Microsoft\Windows\CurrentVersion\Uninstall\';

$res2 = SearchUninstall -SearchFor 'Windows Azure SDK*' -SearchVersion '1.3.11122.0038' -UninstallKey 'HKLM:SOFTWARE\Microsoft\Windows\CurrentVersion\Uninstall\';

2. Please replace the above value with 1.4.20227.1419 as below:

$res1 = SearchUninstall -SearchFor 'Windows Azure SDK*' -SearchVersion '1.4.20227.1419' -UninstallKey 'HKLM:SOFTWARE\Wow6432Node\Microsoft\Windows\CurrentVersion\Uninstall\';

$res2 = SearchUninstall -SearchFor 'Windows Azure SDK*' -SearchVersion '1.4.20227.1419' -UninstallKey 'HKLM:SOFTWARE\Microsoft\Windows\CurrentVersion\Uninstall\';

3. Save the file.

The above two steps should [cure] your installation problem.

Avkash Chauhan described How to change your Windows Azure Deployment (Stage or Production) Cloud OS Version on 4/3/2011:

Windows Azure Cloud OS is available in following two Windows Server 2008 Versions:

- Windows Server 2008 SP2

- Windows Server 2008 R2

Older Windows Azure Cloud OS versions 1.0 - 1.11 are available with Windows Server 2008 SP2. (OLDER). Newer Windows Azure Cloud OS version 2.0 - 2.2 are Available with Windows Server 2008 R2 (NEWER). If you are using Windows Azure SDK 1.3 or (newer 1.4) you will get newer version of OS.

If you decided to change Cloud OS version by any reason you can follow these steps:

1. Changing Cloud OS version to Windows Server 2008 R2

2. Changing Cloud OS version to Windows Server 2008 SP2

Tony Bishop (a.k.a., tbtechnet and tbtonsan, pictured below) described The Small, Small Business App on Azure that Shouts Big in a 4/1/2011 post to the TechNet blogs:

I was in an email thread today chatting about the apparent lack of cloud apps for the small, small business [1-10 persons]. Like magic, my friend Joe [Dwyer] emails me with a “Tony, just thought you’d like to see this” email.

Typical bright, smart yet humble Joe.

Wow.

Joe has built on the Windows Azure platform, a Facebook storefront application that let's merchants create a mini-storefront directly on their Fan page. People can share the storefront by "liking" products and then purchase directly from within Facebook, using PayPal. It's also connected to QuickBooks.

http://www.onewaycommerce.com for more info.

So for those small businesses that worry about ways to not only setup an ecommerce site, but more importantly to share with the world all the great reviews about the business, OneWay Commerce just helped you.

Sales, marketing and campaigns all through Facebook, on Azure with QuickBooks. Auction and e-resellers watch out.

And, if there is any doubt that Azure is a game changer in terms of the lowest development costs and the fastest deployment cycles, just ask Joe [Dwyer].

Try Azure now. For free. 30-days. No credit card required. Promo code TBBLIF

http://www.windowsazurepass.com/?campid=A8A1D8FB-E224-E011-9DDE-001F29C8E9A8

Avkash Chauhan posted a List of Performance Counters for Windows Azure Web roles on 4/1/2011:

Here is a list of performance counters, which you can use with Windows Azure Web Role:

// .NET 3.5 counters @"\ASP.NET Apps v2.0.50727(__Total__)\Requests Total" @"\ASP.NET Apps v2.0.50727(__Total__)\Requests/Sec" @"\ASP.NET v2.0.50727\Requests Queued" @"\ASP.NET v2.0.50727\Requests Rejected" @"\ASP.NET v2.0.50727\Request Execution Time" @"\ASP.NET v2.0.50727\Requests Queued" // Latest .NET Counters (4.0) @"\ASP.NET Applications(__Total__)\Requests Total" @"\ASP.NET Applications(__Total__)\Requests/Sec" @"\ASP.NET\Requests Queued" @"\ASP.NET\Requests Rejected" @"\ASP.NET\Request Execution Time" @"\ASP.NET\Requests Disconnected" @"\ASP.NET v4.0.30319\Requests Current" @"\ASP.NET v4.0.30319\Request Wait Time" @"\ASP.NET v4.0.30319\Requests Queued" @"\ASP.NET v4.0.30319\Requests Rejected" @"\Processor(_Total)\% Processor Time" @"\Memory\Available MBytes @"\Memory\Committed Bytes" @"\TCPv4\Connections Established" @"\TCPv4\Segments Sent/sec" @""\TCPv4\Connection Failures" @""\TCPv4\Connections Reset" @"\Network Interface(Microsoft Virtual Machine Bus Network Adapter _2)\Bytes Received/sec" @"\Network Interface(Microsoft Virtual Machine Bus Network Adapter _2)\Bytes Sent/sec" @"\Network Interface(Microsoft Virtual Machine Bus Network Adapter _2)\Bytes Total/sec" @"\Network Interface(*)\Bytes Received/sec" @"\Network Interface(*)\Bytes Sent/sec" @"\.NET CLR Memory(_Global_)\% Time in GC"

You can define "AddAzurePerformanceCounter" class in your Web Role as below:

public class AddAzurePerformanceCounter { private IList<PerformanceCounterConfiguration> _perfCounters; public AddAzurePerformanceCounter (IList<PerformanceCounterConfiguration> counterCollection) { _perfCounters = counterCollection; } public void AddPerformanceCounter(string counterName, int minuteInterval) { PerformanceCounterConfiguration perfCounter = new PerformanceCounterConfiguration(); perfCounter.CounterSpecifier =counterName; perfCounter.SampleRate = System.TimeSpan.FromMinutes(minuteInterval); _perfCounters.Add(perfCounter); } }Add Performance Counters as Below:

AddAzurePerformanceCounter counters = new AddAzurePerformanceCounter(diagConfig.PerformanceCounters.DataSources); counters.AddPerformanceCounter(@"\Processor(_Total)\% Processor Time", 5); counters.AddPerformanceCounter(@"\Memory\Available MBytes", 5); counters.AddPerformanceCounter(@"\ASP.NET v4.0.30319\Requests Current", 5); counters.AddPerformanceCounter(@"\TCPv4\Connections Established", 5); counters.AddPerformanceCounter(@"\TCPv4\Segments Sent/sec", 5); counters.AddPerformanceCounter(@"\Network Interface(Microsoft Virtual Machine Bus Network Adapter _2)\Bytes Received/sec", 5); counters.AddPerformanceCounter(@"\Network Interface(Microsoft Virtual Machine Bus Network Adapter _2)\Bytes Sent/sec", 5); counters.AddPerformanceCounter(@"\Network Interface(Microsoft Virtual Machine Bus Network Adapter _2)\Bytes Total/sec", 5); counters.AddPerformanceCounter(@"\.NET CLR Memory(_Global_)\% Time in GC", 5); counters.AddPerformanceCounter(@"\ASP.NET Applications(__Total__)\Requests/Sec", 5);

The Windows Azure Team posted Real World Windows Azure: Interview with Declan Rudden, Director of Distribution at Irish Music Rights Organisation on 4/1/2011:

As part of the Real World Windows Azure series, we talked to Declan Rudden, Director of Distribution at Irish Music Rights Organisation (IMRO), about using the Windows Azure platform to deliver the organization's Online Member Services portal. Here's what he had to say:

MSDN: Tell us about the Irish Music Rights Organisation and the services you offer.

Rudden: Founded in 1995, IMRO is a nonprofit national organization that administers performing rights and distributes royalties for copyrighted music in Ireland on behalf of its members. Members include songwriters, composers, and music publishers, plus members of other international copyright organizations to which we are affiliated.

MSDN: What was the situation that IMRO faced prior to implementing the Windows Azure platform?

Rudden: In 2006, because of the emergence of additional radio stations as well as streaming and download music providers on the Internet, IMRO was faced with a massive growth in the amount of data it needed to process to serve its members. We hired Spanish Point Technologies, a Microsoft Gold Certified Partner, to build a system that would calculate royalty payments for music performances that take place in the public domain. The new system, launched in 2007, automates the royalty collection and distribution process. The system was a great success. It significantly improved the match rates and increased efficiencies therefore we were able to reduce headcount and save money.

MSDN: Describe the solution you built with the Windows Azure platform?

Rudden: We decided to develop the IMRO Online Member Services portal on the Windows Azure platform as a way to show tangible benefits to our members. Members heard that our royalty system increased matches to musical performances. With the Windows Azure portal, members can see the system's benefits, and data is exposed in a very secure way.

IMRO royalty distributions are based on the number of seconds of music a broadcaster plays in a given period.

MSDN: What makes your solution unique?

Rudden: One of the challenges that Spanish Point Technologies had to address when it built the Online Member Services portal was how it would protect sensitive member information in the cloud. We specified that we wanted data to remain largely on-premises. Only information needed to fulfill an interaction would be available through the portal. We used web services to connect the portal to the on-premises Microsoft SQL Server database, where the sensitive data resides.

MSDN: Describe how IMRO members interact with the Online Member Services portal.

Rudden: Members can sign in to the Online Member Services portal by using their Windows Live IDs. Available information includes an inventory of each artist's works, how works are stored, any instance of the work being performed or played, what royalty payments were distributed, and how payments were calculated. It plainly illustrates how if something gets played, you get paid. This gives our members comfort because our calculations are transparent and precise. We also do crowd sourcing. We allow our members to match works that we've been unable to match.

MSDN: What benefits have you seen since implementing the Windows Azure platform?

Rudden: Our members are amazed by the Online Member Services functionality. Plus, the Windows Azure platform is hosted in one of the largest data centers in Europe, and it includes a stringent SLA [service level agreement] for uptime while enabling services to scale easily as needed by transaction volumes. It's been a great success.

Read the full story at: http://www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000009116

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

Avkash Chauhan described a workaround for Windows Azure Worker Role Exception: System.Runtime.Fx+IOCompletionThunk.UnhandledExceptionFrame on 4/1/2011:

If you have a worker role crashing with the following exception details, it is possible I have solution for you:

Application: WaWorkerHost.exe Framework Version: v4.0.30319 Description: The process was terminated due to an unhandled exception. Exception Info: System.Runtime.CallbackException Stack: at System.Runtime.Fx+IOCompletionThunk.UnhandledExceptionFrame(UInt32, UInt32, System.Threading.NativeOverlapped*) at System.Threading._IOCompletionCallback.PerformIOCompletionCallback(UInt32, UInt32, System.Threading.NativeOverlapped*)

If you further debug this issue you will see more details on this exception as below:

Microsoft.WindowsAzure.ServiceRuntime Critical: 1 : Unhandled Exception: System.InvalidProgramException: Common Language Runtime detected an invalid program. at System.ServiceModel.Dispatcher.ErrorBehavior.HandleErrorCommon(Exception error, ErrorHandlerFaultInfo& faultInfo) at System.ServiceModel.Dispatcher.ChannelDispatcher.HandleError(Exception error, ErrorHandlerFaultInfo& faultInfo) at System.ServiceModel.Dispatcher.ChannelHandler.HandleError(Exception e) at System.ServiceModel.Dispatcher.ChannelHandler.OpenAndEnsurePump() at System.Runtime.IOThreadScheduler.ScheduledOverlapped.IOCallback(UInt32 errorCode, UInt32 numBytes, NativeOverlapped* nativeOverlapped) at System.Runtime.Fx.IOCompletionThunk.UnhandledExceptionFrame(UInt32 error, UInt32 bytesRead, NativeOverlapped* nativeOverlapped) at System.Threading._IOCompletionCallback.PerformIOCompletionCallback(UInt32 errorCode, UInt32 numBytes, NativeOverlapped* pOVERLAP) Microsoft.WindowsAzure.ServiceRuntime Critical: 1 : Unhandled Exception: System.InvalidProgramException: Common Language Runtime detected an invalid program. at System.ServiceModel.Dispatcher.ErrorBehavior.HandleErrorCommon(Exception error, ErrorHandlerFaultInfo& faultInfo) at System.ServiceModel.Dispatcher.ChannelDispatcher.HandleError(Exception error, ErrorHandlerFaultInfo& faultInfo) at System.ServiceModel.Dispatcher.ChannelHandler.HandleError(Exception e) at System.ServiceModel.Dispatcher.ChannelHandler.OpenAndEnsurePump() at System.Runtime.IOThreadScheduler.ScheduledOverlapped.IOCallback(UInt32 errorCode, UInt32 numBytes, NativeOverlapped* nativeOverlapped) at System.Runtime.Fx.IOCompletionThunk.UnhandledExceptionFrame(UInt32 error, UInt32 bytesRead, NativeOverlapped* nativeOverlapped) at System.Threading._IOCompletionCallback.PerformIOCompletionCallback(UInt32 errorCode, UInt32 numBytes, NativeOverlapped* pOVERLAP) (a64.69c): CLR exception - code e0434352 (first chance)

Possible Reason for this issue:

Based on investigation what we found is that the problem is caused by a known issue with Visual Studio 2010 RTM base libraries.

Solution:

The above problem is fixed in Visual Studio 2010 SP1. Install Visual Studio 2010 SP1 (VS1010 SP1) and recompile the Windows Azure Application. After that, you can test the Worker Role in the development fabric and Windows Azure.

Note: This issue is also reference here: http://social.msdn.microsoft.com/Forums/en-ZA/windowsazuretroubleshooting/thread/543da280-2e5c-4e1a-b416-9999c7a9b841

Josh Holmes awarded points to the Windows Azure Toolkit for Windows Phone 7 in a 3/31/2011 post:

The Windows Azure Toolkit for Windows Phone 7 is a starter kit that was recently released out to CodePlex. Wade Wegner, one of my former team mates when both of us were in Central Region, is the master mind behind this fantastic starter.

This starter kit is designed to make it easier for you to build mobile applications that leverage cloud services running in Windows Azure.

Screencast

In the screencast, you’ll get a great little walkthrough of the starter kit and how to get your first Windows Phone 7 application with a Windows Azure backend up and running. The toolkit includes a bunch of stuff including Visual Studio project templates that will create the Windows Phone 7 and Windows Azure projects, class libraries optimized for use on the phone, sample applications and documentation.

Why Windows Azure

Windows Azure is Microsoft’s Platform as a Service (PaaS) offering that allows you to build and scale your application in the cloud so that you don’t have to build out your local infrastructure. If you are selling an application in the Windows Phone 7 Marketplace and really don’t know how many customers you’ll end up with, you might need to scale the backend dramatically to meet the demand.

What you’ll need

Hopefully obviously you’ll need an Azure account and the tools to build and deploy the solution. The tools include one of the versions of Visual Studio (either Express which is free or higher), the Windows Azure Toolkit and then obviously the starter kit itself. I also recommend looking at Expression Blend for doing your Windows Phone 7 design and the like.

Good Luck!

By looking through the resources on the Windows Azure Toolkit for Windows Phone 7 site, you’ll see lots of great little tutorials and getting started guides.

Let me know how you’re getting on with the toolkit and what you’ve done with it. I’d like to see and possibly blog about it all…

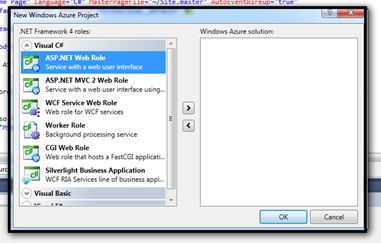

Edu Lorenzo explained Deploying an ASP.Net webapp to Azure in a 3/31/2011 post:

Okay, so you’re and ASP.Net developer doing webapps/Webforms. And you are curious about Azure and what you need to learn, and do, to deploy your app to an azure service.

I also went the same path and here is what I got… a short blog on how to move an asp.net app to the cloud.

The app I will be using is going to be the easiest app to build.. the default app that VS gives you when you create a new asp.net webapp. Why? Baby steps.. let’s get into what to modify in an existing app to make it azure ready in the future. Besides, not all apps are created equal, so in the interest of uniformity and repeatability, I’ll use the default app, so you can do it too.

SO, I start off with the default sight right. By habit, I run my visual studio as admin, and I make a new project that is an ASP.Net web application. *What about MVC? Next blog.

This should give you that default site. We stop ASP.Net development here, as what I would like to focus on is how to add this to your azure subscription.

The next thing we do now, is add a cloud application by rightclicking the solution then adding a new project of type Windows Azure Project from the Cloud template.

Give it any name you want to.

Then it will ask you what kind of role this project will be. Leave it blank, and just click OK since we want to add the existing “app” as the webrole for this project.

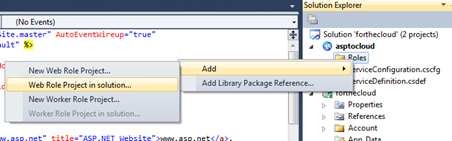

Your solution explorer should look something like this:

Right click the Roles folder of the cloud application and choose “Add web project in solution”

Then choose the existing app from the dialog that comes out.

And there you go! This app is now ready for publishing to Azure!

Michael Washman described a proof of concept for Migrating a Windows Service to Windows Azure in a 3/30/2011 post:

The overall proof of concept I’m trying out is how you could migrate a Windows Service to Azure and communicate with this service from another role instance in the cloud. I borrowed most of the implementation of my service from a Windows SDK sample named "Service". The service itself is written in C and just creates a secured named pipe that receives data, processes it (reverses it), and sends it back to the client.

Let’s look at some of the dependencies this service has just from what we know by the above description:

- Security: The named pipe has an ACL on it so whatever machine is writing/reading the pipe will need to be authenticated.

- Distributing binaries: I’ll need to deploy the service executable and the VC++ Runtime library (which is not installed by default on Azure nodes).

- Since the bulk of my code is running in a Windows Service and not directly in the worker role how will Azure know if something went wrong?

- How will the two roles communicate? Named pipes? How is that configured?

To get started with this project I created a solution with the following projects:

- Cloud Project named "ServiceDemo"

- Worker Role named "Service Watcher"

- Web Role named "ASPXServerClient"

- Windows Service named "Service"

So let’s tackle the dependencies one at a time.

Security Dependency

Here is the security descriptor set by my service during the call to CreateNamedPipe(...):

TCHAR * szSD = TEXT("D:") // Discretionary ACL TEXT("(D;OICI;GA;;;BG)") // Deny access to built-in guests TEXT("(D;OICI;GA;;;AN)") // Deny access to anonymous logon TEXT("(A;OICI;GRGWGX;;;AU)") // Allow read/write/execute to authenticated users TEXT("(A;OICI;GA;;;BA)"); // Allow full control to administratorsIf I want to authenticate from my web role to my Windows service how can I do that? There is no Active Directory in this scenario. A relatively simple solution to this problem is to create duplicate local accounts on both the web and worker role instances. Then impersonate the local account from the ASP.NET site to access the service.

This can be accomplished by using an elevated startup task running the following commands:

Startup.cmd

net user serviceUser [somePassword] /add

exit /b 0The first line creates a local user named serviceUser with whatever password you set. The second line returns from the batch file. This particular user does not have to be part of the administrators groups due to my services service descriptor allowing the named pipe to be written to by users in the Users group. My ASP.NET code will have to authenticate as this user when it writes to the named pipe (I’ll show that a bit later).

This startup task needs to be run for the web role AND the worker role so you will need to add the following to both roles in the ServiceDefinition.csdef. Note: my startup tasks are in a folder I created in each project called “Startup” and put startup.cmd within.

<Task commandLine="Startup\Startup.cmd" executionContext="elevated" taskType="simple" />

Note the elevated requirement due to creating users.

Distributing Binaries

This one is pretty straightforward. To have additional files deploy with your worker role add them to your project (in a folder). Once added select each file in Visual Studio and change Build Action to Content and Copy to Output Directory to Copy if Newer.

For instance you will need to download the VC runtime that your service is compiled with. In my case it is the VC++ 2010 for x64 (vcredist_x64.exe). Once downloaded add the file to your Startup folder.

These steps will ensure the VC++ runtime is copied to the Azure servers when the project is published.

The next step is to add another step to the startup task for your worker role only:

"%~dp0vcredist_x64.exe" /q /norestart

This command will start the install quietly (and tell it not to reboot). The %~dp0 is a batch file constant that essentially means the current directory that the batch file is running in.

Service Installation

I also need to install my service silently. In my case the service supports a command line argument –install that performs this properly. Your service will need to perform similarly for it to install in a worker role.

The complete startup.cmd for the worker role is here:net user serviceUser [somePassword] /add

"%~dp0vcredist_x64.exe" /q /norestart

"%~dp0Service.exe" -install

exit /b 0

Monitoring the Windows ServiceAs the name of my worker role implies (ServiceWatcher) I want to monitor my Windows Service so the Azure runtime is aware of any problems and can reimage/start the role as needed.

I have a simple class (probably not real robust either!) that checks if my service is running and if not tries to start it. If it is not started after these steps it returns false otherwise true.class ServiceMonitor { public static bool CheckAndStartService(String ServiceName, int StartTimeOutSeconds) { ServiceController mySC = new ServiceController(ServiceName); if (IsServiceStatusOK(mySC.Status) == false) { System.Diagnostics.Trace.WriteLine("Starting Service....."); try { mySC.Start(); } catch(Exception) {} mySC.WaitForStatus(ServiceControllerStatus.Running, new TimeSpan(0, 0, StartTimeOutSeconds)); if (IsServiceStatusOK(mySC.Status)) return true; else return false; } return true; } static private bool IsServiceStatusOK(ServiceControllerStatus Status) { if (Status != ServiceControllerStatus.Running && Status != ServiceControllerStatus.StartPending && Status != ServiceControllerStatus.ContinuePending) { return false; } return true; } }My worker role’s Run() method is as follows:

public override void Run() { String ServiceName = "SimpleService"; int ServiceFailCount = 0; const int MAXFAILS = 5; while (true) { // if the service failed more than 5 times return if (ServiceFailCount >= MAXFAILS) { Trace.TraceError(String.Format(String.Format("{0} has failed to start {1} times", ServiceName, ServiceFailCount))); return; } try { // Check if the service is running if (ServiceMonitor.CheckAndStartService("SimpleService", 10) == true) { Trace.TraceInformation("Service is Running"); } else { // if not increment the fail count ServiceFailCount++; Trace.TraceError("Service is no longer Running"); } } catch (Exception e) { Trace.TraceError("Exception occurred: " + e.Message); } Thread.Sleep(10000); } }The worker role's Run method stays and checks the status of the Windows service every 10 seconds. If the service fails to start five times (MAXFAILS) then it returns which tells Azure that something went wrong.

Using the ServiceController class does require elevation. So in ServiceDefinition.csdef add the following line for the ServiceWatcher role instance:

<Runtime executionContext="elevated" />

How do the roles communicate?

The port for named pipes is 445. To allow communication between the web and worker roles I will need an internal endpoint opened up on the ServiceWatcher worker role.

Impersonating the Local User

My ASP.NET code will need to use impersonation to connect to the service to authenticate:

I’ve created a helper class for this:

public class LogonHelpers { // Declare signatures for Win32 LogonUser and CloseHandle APIs [DllImport("advapi32.dll", SetLastError = true)] public static extern bool LogonUser( string principal, string authority, string password, LogonSessionType logonType, LogonProvider logonProvider, out IntPtr token); [DllImport("kernel32.dll", SetLastError = true)] public static extern bool CloseHandle(IntPtr handle); public enum LogonSessionType : uint { Interactive = 2, Network, Batch, Service, NetworkCleartext = 8, NewCredentials } public enum LogonProvider : uint { Default = 0, // default for platform (use this!) WinNT35, // sends smoke signals to authority WinNT40, // uses NTLM WinNT50 // negotiates Kerb or NTLM } }Load Balancing the Internal Endpoint

One other helper function I want to mention is the following:

private String GetRandomServiceIP() { var endpoints = RoleEnvironment.Roles["ServiceWatcher"].Instances.Select(i => i.InstanceEndpoints["NamedPipes"]).ToArray(); Random r = new Random(DateTime.Now.Millisecond); int ipIndex = r.Next(endpoints.Count()); return endpoints[ipIndex].IPEndpoint.Address.ToString(); }This method is needed because internal endpoints are not load balanced by Azure. So if you configured multiple instances of your service to run you will want to load balance these calls.

Writing to and Reading from the Named Pipe

Now for the magic to actually write data to and read data from the service’s named pipe:

protected void cmdProcessText_Click(object sender, EventArgs e) { IntPtr token = IntPtr.Zero; WindowsImpersonationContext impersonatedUser = null; String ServiceUserName = "serviceUser"; String ServicePassword = "somePassword"; String ServiceInstanceIP = String.Empty; try { ServiceInstanceIP = GetRandomServiceIP(); bool impResult = LogonHelpers.LogonUser(ServiceUserName, ".", ServicePassword, LogonHelpers.LogonSessionType.Interactive, LogonHelpers.LogonProvider.Default, out token); if (impResult == false) { lblProcessedText.Text = "LogonUser failed"; return; } WindowsIdentity id = new WindowsIdentity(token); // Begin impersonation impersonatedUser = id.Impersonate(); // Resource access here uses the impersonated identity NamedPipeClientStream pipe = new NamedPipeClientStream(ServiceInstanceIP, "simple", PipeDirection.InOut); // connect to the pipe - give 10 seconds before timing out pipe.Connect(10000); String tmpInput = txtTextToProcess.Text; // null terminate for our native C code tmpInput += '\0'; // Write to the pipe service StreamWriter sw = new StreamWriter(pipe); sw.AutoFlush = true; sw.Write(tmpInput); // Wait for the result after processing StreamReader sr = new StreamReader(pipe); String result = sr.ReadToEnd(); lblProcessedText.Text = "Processed Text: " + result + " by instance: " + ServiceInstanceIP; } catch (Exception exc) { lblProcessedText.Text = "Exception occurred: " + exc.Message; } finally { if (impersonatedUser != null) impersonatedUser.Undo(); if (token != null) LogonHelpers.CloseHandle(token); } }

Simple Enough!

Michael is a Microsoft developer evangelist in Redmond.

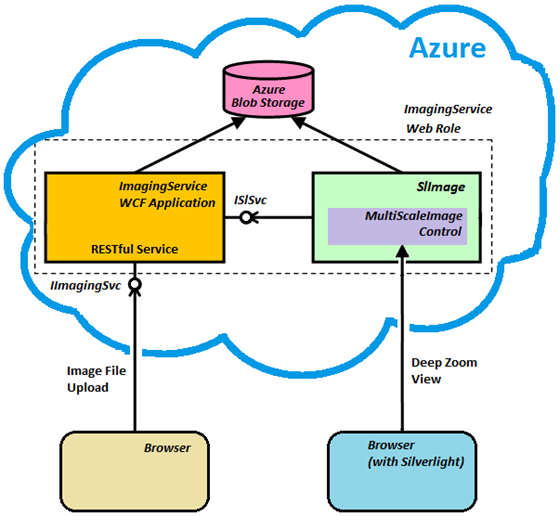

Igor Ladnik posted Image Upload and Silverlight Deep Zoom Viewing with Azure to The Code Project on 3/27/2011 (missed when published):

This article presents Azure based software to upload image and view it with Silverlight MultiScaleImage control. Browser performs both upload and viewing with no additional installation on user machine/device (the viewing browser should support Silverlight).

Introduction

One obvious application of Cloud computing is providing storage, processing and fast access to large volume of data, particularly images. Dealing for some time with a non-cloud-based image access system I'd like to implement a simple software allowing to upload images to Azure Blob Storage and then easily view them. It is preferred to provide both upload and viewing using only browser with minimum (ideally zero) installation on client machine. Bearing this goal in mind, I dug the Web for relevant information and samples. Pretty soon, I found a lot of writings covering various aspects of the problem. But I didn't come across a complete code sample addressing the above problem. This article presents such sample. In my code, I actually used many fragments and even entire classes developed by other people. Although I made some changes in the code, I'd preserve references to them and provide links to their works at the end of this article. Some fragments of other developers' code not relevant for this article I left, but commented out.

Background

It seems logical that file upload should be carried out from a simple HTML page readable by any browser. This allows the user to upload image from virtually any computer and mobile device. WCF RESTful service

IImagingSvcacts as a counterpart on the server (Azure cloud) side. This service is responsible for providing HTML page for uploading the image to Azure. For image viewing, Microsoft Silverlight with itsMultiScaleImagecontrol is employed. So the viewing browser should support Silverlight. Currently, most of browsers do meet this requirement (except probably browser of mobile devices).

MultiScaleImagecontrol is based on the Deep Zoom (DZ) technology [1-6]. Wikipedia describes DZ as follows [1]:Deep Zoom is an implementation of the open source technology, provided by Microsoft, for use in for image viewing applications. It allows users to pan around and zoom in a large, high resolution image or a large collection of images. It reduces the time required for initial load by downloading only the region being viewed and/or only at the resolution it is displayed at. Subsequent regions are downloaded as the user pans to (or zooms into them); animations are used to hide any jerkiness in the transition.

DZ requires pyramid of tiles images constructed from original image [2, 3]. The image pyramid exceeds size of original image (according to some estimation in 1.3 time in average). But this technique permits fast and smooth image download for viewing. Microsoft provides a special Deep Zoom Composer [7] tool for the tile image pyramid generation. But usage of this tool does not help in our task since this first requires installation of the tool, and second, considerably increases volume of data to be uploaded. Clearly, we have to provide a more suitable to our purposes tool for tile pyramid generation.

Design

The CodeProject articles [8, 9] and blog entry [10] were chosen as departure points for the design. The former provide algorithm and code for image tile pyramid generation. The change required was to store the images as blobs in the Azure Blob Storage rather than in database. The latter contains useful tips to set permissions to Azure Blob Storage container. While developing, I also used Cloudberry Explorer tool to inspect and manage Azure Blob Storage.

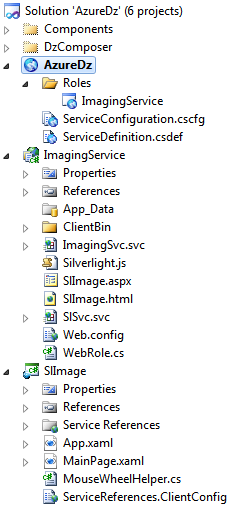

A Web Role WCF application

ImagingServicewas created with VS2010 wizard. Two WCF service components, namely,ImagingSvcandSlSvc, and a Silverlight component were added to the application. The VS2010 solution structure is depicted in the figure below:

The RESTful WCF service

ImagingSvcprovides means for uploading image from client machine / device, its processing to a DZ image tile pyramid and storage for the image pyramid to Azure Blob Storage. This service haswebHttpBinding.SlSvcWCF service intends internal-to-Azure-application communication to provide data forSlImageSilverlight-based component. The service hasbasicHttpBindingand a relative address to simplify access to it bySlImagecomponent. Interfaces and configuration of both WCF services are shown below:

Collapse | Copy Code

[ServiceContract] interface IImagingSvc { [OperationContract] [WebGet(UriTemplate = "/{data}", BodyStyle = WebMessageBodyStyle.Bare)] Message Init(string data); [OperationContract] [WebInvoke(Method = "POST", BodyStyle = WebMessageBodyStyle.Bare)] Message Upload(Stream stream); }

Collapse | Copy Code

[ServiceContract] public interface ISlSvc { [OperationContract] string BlobContainerUri(); [OperationContract] string[] BlobsInContainer(); }

Collapse | Copy Code

<configuration> <system.diagnostics> <trace> <listeners> <add type="Microsoft.WindowsAzure.Diagnostics. DiagnosticMonitorTraceListener, Microsoft.WindowsAzure.Diagnostics, Version=1.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" name="AzureDiagnostics"> <filter type="" /> </add> </listeners> </trace> </system.diagnostics> <system.web> <compilation debug="true" targetFramework="4.0" /> <httpRuntime maxRequestLength="2147483647"/> </system.web> <system.serviceModel> <serviceHostingEnvironment multipleSiteBindingsEnabled="true" /> <bindings> <webHttpBinding> <binding name="StreamWebHttpBinding" maxBufferPoolSize="1000" maxBufferSize="2147483647" maxReceivedMessageSize="2147483647" closeTimeout="00:25:00" openTimeout="00:01:00" receiveTimeout="01:00:00" sendTimeout="01:00:00" transferMode="Streamed" bypassProxyOnLocal="false"> <readerQuotas maxDepth="333333" maxStringContentLength="333333" maxArrayLength="333333" maxBytesPerRead=" 333333" maxNameTableCharCount="333333" /> </binding> </webHttpBinding> </bindings> <behaviors> <endpointBehaviors> <behavior name="RestBehavior"> <webHttp /> </behavior> </endpointBehaviors> <serviceBehaviors> <behavior name="LargeUploadBehavior"> <serviceDebug includeExceptionDetailInFaults="true" /> </behavior> <behavior name=""> <serviceMetadata httpGetEnabled="true" /> <serviceDebug includeExceptionDetailInFaults="false" /> </behavior> </serviceBehaviors> </behaviors> <services> <service name="ImagingService.ImagingSvc" behaviorConfiguration="LargeUploadBehavior" > <endpoint address="" contract=" ImagingService.IImagingSvc" binding="webHttpBinding" bindingConfiguration="StreamWebHttpBinding" behaviorConfiguration="RestBehavior" /> </service> <service name="ImagingService.SlSvc" > <endpoint address="" contract=" ImagingService.ISlSvc" binding="basicHttpBinding" /> </service> </services> </system.serviceModel> <system.webServer> <modules runAllManagedModulesForAllRequests="true"/> </system.webServer> </configuration>

SlImagecomponent is used for viewing of previously uploaded and processed images. It containsMultiScaleImagecontrol for DZ.SlImageis publicly accessed via dedicated SlImage.html file. Being a Silverlight-based, objectSlImagecannot communicate with Azure objects directly. For this communication (e.g. to get list of stored image blobs),SlImagerelies onSlSvcWCF service. VS2010 allows the developer to easily create Service Reference onSlSvcWCF service and generate appropriate classSlImage.SlSvc_ServiceReference.SlSvcClient.SlImageuses instance of this class (proxy) for communication with main application.SlImageconfiguration fileServiceReferences.ClientConfigis shown below:</BINDING /></BASICHTTPBINDING /></BINDINGS /></CLIENT /></SYSTEM.SERVICEMODEL /></CONFIGURATION />

Collapse | Copy Code

<configuration> <system.serviceModel> <bindings> <basicHttpBinding> <binding name="BasicHttpBinding_ISlSvc" maxBufferSize="2147483647" maxReceivedMessageSize="2147483647"> <security mode="None" /> </binding> </basicHttpBinding> </bindings> <client> <endpoint address="../SlSvc.svc" binding="basicHttpBinding" contract="SlSvc_ServiceReference.ISlSvc" /> </client> </system.serviceModel> </configuration>Code Sample

Code for this article may be tested in local Azure development environment. You should start VS2010 "as Administrator", load AzureDz.sln solution to it, build and run

AzureDzproject (highlighted in the figure above). To upload an image file, you should navigate your browser to http://127.0.0.1:81/ImagingSvc.svc/Init and from that page, perform image file upload. To view uploaded image with SilverlightMultiScaleImagecontrol navigate browser to http://127.0.0.1:81/SlImage.html. To operate the solution in Azure local development environment values ofStorageAccountNameandStorageAccountKeyparameters in file ServiceConfiguration.cscfg should be left empty.To operate the sample from Azure cloud, you should first create a Storage Account and assign its name and primary access key to

StorageAccountNameandStorageAccountKeyparameters in file ServiceConfiguration.cscfg. Then, you should create a Hosted Service place-holder on the cloud, build release version, publish it and deploy to the newly created Hosted Service. Image upload can be performed from http://place-holder.cloudapp.net/ImagingSvc.svc/Init page. Image can be viewed navigating to http://place-holder.cloudapp.net/SlImage.html.The workflow looks as following. Client sharing an image, navigates his/her browser to http://.../ImagingSvc.svc/Init on any browser-equipped device. Method

Init()ofImagingSvcRESTful WCF service is called and returns a simple HTML page to browser in response. This HTML page allows the client to upload an image labeling with a blob token (the image file name by default) with the WCF service. The image is uploaded as astreambyUpload()method of theImagingSvcWCF service. This method also processes received image to a DZ image tile pyramid and puts it to Azure Blob Storage. Now the image can be viewed using any Silverlight-equipped browser. To view the image, client should navigate his/her browser to http://.../SlImage.html and choose the image from combo-box. The selected image is shown by MultiScaleImage Silverlight control. ItsSourceproperty is assigned to the image pyramid XML blob residing in Azure Blob Storage.User uploads image file operating HTML

inputtag of type "file" of his/her browser. It causes streamed HTTP POST request containing byte representation of image. This request is received byUpload()method ofImagingSvcWCF service. Sample of such request and its structure are shown in the table below:

The request is parsed in order to extract byte array for image as well as blob token and data type. Currently, this parsing is carried out "manually" with methods of

StaticHttpParserclass (I am almost sure that there is a standard way with ready available types to parse this request, but I failed to find it quickly. PerhapsUpload()method should use parameter ofSystem.ServiceModel.Channels.Messagetype...).Discussion

Code presented in this article was tested on real Azure cloud with the largest image of 11 MB. After upload, the appropriate message appeared in the browser (some time for large images, you may get some abnormal HTML response after upload, but normally upload was successful). In local development environment for large image file

OutOfMemoryexception may occur while preparing the image pyramid. The source of exception is put in thetry-catchblock, and appropriate after-upload message informs user that not all image layers will be viewed properly. So additional efforts to improve algorithm and code are desirable.As it was stated above, usage of DZ technology required considerably larger storage space than simply storing of original image. If storage space is an issue, then the DZ image pyramid may be generated for a stored image ad hoc, or some caching mechanism (may be combining with appropriate scheduling) should be employed. For example, if it is known that tomorrow a doctor will examine X-ray images of certain patients, then DZ image pyramid may be generated for these images during the night before the examination and destroyed after the examination.

Conclusions

This article presents a tool to upload image file to Azure cloud, process the image to a Deep Zoom image tile pyramid, store the pyramid images as blobs to Azure Blob Storage and view the image with

MultiScaleImagecontrol provided by Microsoft Silverlight. All the above operations are performed with just browser without any additional installations on user machine (the viewing browser however should support Silverlight).References

[1] Deep Zoom. Wikipedia

[2] Daniel Gasienica. Inside Deep Zoom – Part I: Multiscale Imaging

[3] Daniel Gasienica. Inside Deep Zoom – Part II: Mathematical Analysis

[4] Building a Silverlight Deep Zoom Application

[5] Jaime Rodriguez. A deepzoom primer (explained and coded)

[6] Sacha Barber. DeepZoom. CodeProject

[7] Microsoft Deep Zoom Composer

[8] Berend Engelbrecht. Generate Silverlight 2 DeepZoom Image Collection from Multi-page TIFF. CodeProject

[9] Joerg Lang. Silverlight Database Deep Zoom. CodeProject.