Windows Azure and Cloud Computing Posts for 3/14/2011+

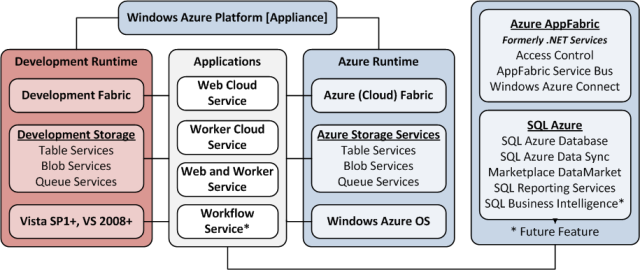

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

Herve Roggero (@hroggero) asserted Cloud Computing = Elasticity * Availability in a 3/14/2011 post:

What is cloud computing? Is hosting the same thing as cloud computing? Are you running a cloud if you already use virtual machines? What is the difference between Infrastructure as a Service (IaaS) and a cloud provider? And the list goes on… these questions keep coming up and all try to fundamentally explain what “cloud” means relative to other concepts. At the risk of oversimplification, answering these questions becomes simpler once you understand the primary foundations of cloud computing: Elasticity and Availability.

Elasticity

The basic value proposition of cloud computing is to pay as you go, and to pay for what you use. This implies that an application can expand and contract on demand, across all its tiers (presentation layer, services, database, security…). This also implies that application components can grow independently from each other. So if you need more storage for your database, you should be able to grow that tier without affecting, reconfiguring or changing the other tiers. Basically, cloud applications behave like a sponge; when you add water to a sponge, it grows in size; in the application world, the more customers you add, the more it grows. Pure IaaS providers will provide certain benefits, specifically in terms of operating costs, but an IaaS provider will not help you make your applications elastic; neither will Virtual Machines. The smallest elasticity unit of an IaaS provider and a Virtual Machine environment is a server (physical or virtual). While adding servers in a datacenter helps in achieving scale, it is hardly enough: the application must still be able to make use of that hardware. If the process of adding computing resources is not transparent to the application, the application is not elastic.

As you can see from the above description, designing for the cloud is not about more servers; it is about designing an application for elasticity regardless of the underlying server farm.
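To make the sponge analogy concrete, here is a minimal Python sketch of sizing each tier independently from its current load. The per-instance capacities and load figures are hypothetical, and the sketch assumes load spreads evenly across identical instances; a real platform would also smooth its measurements and add headroom.

```python
import math


def instances_needed(requests_per_sec: float, capacity_per_instance: float,
                     min_instances: int = 1) -> int:
    """Return how many identical instances a tier needs for the given load.

    Assumes load spreads evenly and each instance handles at most
    `capacity_per_instance` requests per second (hypothetical figures).
    """
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    return max(min_instances, math.ceil(requests_per_sec / capacity_per_instance))


# Each tier is sized on its own, so one can grow without touching the other.
load = {"web": 950.0, "service": 400.0}        # hypothetical requests/sec
capacity = {"web": 200.0, "service": 150.0}    # hypothetical requests/sec per instance
plan = {tier: instances_needed(load[tier], capacity[tier]) for tier in load}
print(plan)  # {'web': 5, 'service': 3}
```

Because each tier is sized separately, the web tier grows to five instances while the service tier stays at three, which is the tier independence the elasticity argument calls for.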

Availability

The fact of the matter is that making applications highly available is hard. It requires highly specialized tools and trained staff. On top of that, it's expensive. Many companies are required to run multiple data centers due to high availability requirements. In some organizations, some data centers are simply on standby, waiting to be used in case of a failover. Other organizations are able to achieve a certain level of success with active/active data centers, in which all available data centers serve incoming user requests. While achieving high availability for services is relatively simple, establishing a highly available database farm is far more complex. In fact, it is so complex that many companies run yearly tests to validate failover procedures.
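Active/active data centers raise availability because the service survives as long as any one site is up. The arithmetic below is a minimal Python sketch that assumes independent site failures and a hypothetical 99 percent availability per site; it ignores the database replication problem described above, which is exactly what makes real deployments harder than this formula suggests.

```python
def parallel_availability(per_site: float, sites: int) -> float:
    """Availability of a service that stays up while at least one site is up.

    Assumes sites fail independently, which real data centers only approximate.
    """
    if not 0.0 <= per_site <= 1.0 or sites < 1:
        raise ValueError("per_site must be in [0, 1] and sites must be >= 1")
    # The service is down only when every site is down at the same time.
    return 1.0 - (1.0 - per_site) ** sites


for n in (1, 2, 3):
    print(n, f"{parallel_availability(0.99, n):.6f}")
```

Under these assumptions, a second site turns 99 percent into 99.99 percent.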

To a certain degree, some IaaS providers can assist with complex disaster recovery planning and with setting up data centers that can achieve successful failover. However, the burden is still on the corporation to manage and maintain such an environment, including regular hardware and software upgrades. Cloud computing, on the other hand, removes most of the disaster recovery requirements by hiding many of the underlying complexities.

Cloud Providers

A cloud provider is an infrastructure provider offering additional tools to achieve application elasticity and availability that are not usually available on-premise. For example, Microsoft Azure provides a simple configuration screen that makes it possible to run 1 or 100 web sites by clicking a button or two on a screen (simplifying provisioning), and soon SQL Azure will offer Data Federation to allow database sharding (which allows you to scale the database tier seamlessly and automatically). Other cloud providers offer features that are not available on-premise as well, such as Amazon S3 (Simple Storage Service), which gives you virtually unlimited storage capacity for simple data stores and is somewhat equivalent to the Microsoft Azure Table offering (a server-independent data storage model). Unlike IaaS providers, cloud providers give you the necessary tools to adopt elasticity as part of your application architecture. [Emphasis added.]
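Data Federation was still a future feature when this was written, and the post does not describe its API. As a generic illustration of what sharding means, here is a minimal Python sketch of hash-based routing: a sharding key such as a customer ID (a hypothetical choice) always maps to the same one of N databases, so each database holds only a slice of the data. This is not how SQL Azure implements federation.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a sharding key (e.g. a hypothetical customer ID) to a shard index.

    Uses a stable hash (MD5 of the UTF-8 key) so the mapping survives process
    restarts, unlike Python's built-in hash(), which is salted per process.
    """
    if shard_count < 1:
        raise ValueError("shard_count must be >= 1")
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


# Route a few hypothetical customers across four databases.
for customer in ("customer-1", "customer-2", "customer-42"):
    print(customer, "->", shard_for(customer, 4))
```

Plain hash-modulo routing moves most keys when the shard count changes, which is why production schemes usually split key ranges or use consistent hashing instead; treat this as an illustration of the routing idea only.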

Some cloud providers offer built-in high availability that gets you out of the business of configuring clustered solutions or running multiple data centers. Some cloud providers give you more control (which puts some of that burden back on the customers' shoulders), and others tend to make high availability totally transparent. For example, SQL Azure provides high availability automatically, which would be very difficult (and very costly) to achieve on premise.

Keep in mind that each cloud provider has its strengths and weaknesses; some are better at achieving transparent scalability and server independence than others.

Not for Everyone

Note, however, that it is up to you to leverage the elasticity capabilities of a cloud provider, as discussed previously. If you build a website that does not need to scale, and for which elasticity is not important, then you can use a traditional hosting provider unless you also need high availability. Leveraging the technologies of cloud providers can be difficult and can become a journey for companies that build their solutions in a scale-up fashion. Cloud computing promises to address cost containment and scalability of applications with built-in high availability. If your application does not need to scale or you do not need high availability, then cloud computing may not be for you. In fact, you may pay a premium to run your applications with cloud providers due to the underlying technologies built specifically for scalability and availability requirements. And as such, the cloud is not for everyone.

Consistent Customer Experience, Predictable Cost

With all its complexities, buzz and foggy definitions, cloud computing boils down to a simple objective: consistent customer experience at a predictable cost. The objective of a cloud solution is to provide the same user experience to your last customer as to your first, while keeping your operating costs directly proportional to the number of customers you have. Making your applications elastic and highly available across all their tiers, with as much automation as possible, achieves the first objective of a consistent customer experience. And the ability to expand and contract the infrastructure footprint of your application dynamically achieves the cost containment objective.
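The cost half of that objective can be shown with simple arithmetic. This minimal Python sketch compares instance-hours for a fixed deployment sized for the peak hour against an elastic one that follows the load each hour; the hourly load profile and per-instance capacity are hypothetical.

```python
import math


def instances_for(load: float, capacity_per_instance: float) -> int:
    """Instances needed for one hour's load, never fewer than one."""
    return max(1, math.ceil(load / capacity_per_instance))


def fixed_instance_hours(hourly_load, capacity_per_instance):
    """Provision for the peak hour and keep that many instances all day."""
    return instances_for(max(hourly_load), capacity_per_instance) * len(hourly_load)


def elastic_instance_hours(hourly_load, capacity_per_instance):
    """Expand and contract the deployment to match each hour's load."""
    return sum(instances_for(load, capacity_per_instance) for load in hourly_load)


hourly_load = [100, 100, 400, 800, 400, 100]    # hypothetical requests/sec per hour
print(fixed_instance_hours(hourly_load, 200))    # 24
print(elastic_instance_hours(hourly_load, 200))  # 11
```

Multiplying instance-hours by a per-hour price gives the bill; the elastic total tracks demand, which is what keeps operating cost proportional to the number of customers.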

Herve is a SQL Azure MVP and co-author of Pro SQL Azure (APress). He is the co-founder of Blue Syntax Consulting (www.bluesyntax.net), a company focusing on cloud computing technologies.


Marketplace DataMarket and OData

I updated my (@rogerjenn) Access Web Databases on AccessHosting.com: What is OData and Why Should I Care? on 3/14/2011 with instructions for logging in to the Northwind Traders demonstration Web Database with public, View Only permission and viewing list/table data with PowerPivot for Excel.

See the post’s “Browsing OData-formatted Web Database Content in PowerPivot for Excel” section below for details:

After downloading and installing PowerPivot for Excel 2010 from http://powerpivot.com/, click the PowerPivot tab to open the PowerPivot ribbon and click the PowerPivot Window Launch button to open its ribbon.

Update 3/14/2011: If you want to run a live test with the NorthwindTraders Web Database site hosted in an AccessHosting.com Trial account, connect to http://oakleaf.accesshoster.com/NorthwindTraders/_vti_bin/listdata.svc in a browser. When the Windows Security dialog appears, type AH\devtest1 as the username and access as the password, and mark the Remember My Credentials check box:

Figure 1.

Click OK to display the OData metadata:

Figure 2.
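Each list on the metadata page is exposed as an entity set under listdata.svc, and OData system query options such as $top, $orderby and $filter narrow what a request returns. The following minimal Python sketch only builds such URLs with the standard library; it does not contact the server, and the Products entity set name and the option values are assumptions for illustration.

```python
from urllib.parse import quote, urlencode

BASE = "http://oakleaf.accesshoster.com/NorthwindTraders/_vti_bin/listdata.svc"


def odata_url(base: str, entity_set: str, **options: str) -> str:
    """Build an OData query URL such as .../listdata.svc/Products?$top=5.

    Keyword arguments become system query options with a '$' prefix,
    e.g. top="5" -> $top=5. Spaces are percent-encoded as %20.
    """
    query = urlencode({f"${name}": value for name, value in options.items()},
                      quote_via=quote, safe="$,")
    url = f"{base.rstrip('/')}/{quote(entity_set)}"
    return f"{url}?{query}" if query else url


print(odata_url(BASE, "Products", top="5", orderby="ProductName"))
print(odata_url(BASE, "Products", filter="CategoryID eq 1"))
```

Pasting a generated URL into the browser session that already holds your credentials is a quick way to preview what the Table Import Wizard will fetch.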

Leave the metadata window open, click the From Data Feeds button to open the Table Import Wizard’s Connect to Data Feed dialog, type or paste the same Data Feed URL, http://oakleaf.accesshoster.com/NorthwindTraders/_vti_bin/listdata.svc for the demonstration Web Database, and add a Friendly Connection Name, as shown here:

Figure 3.

Click Next to open the Select Tables and Views dialog, after providing your credentials again, if requested. Mark the check boxes for the Source Tables (lists) you want to include:

Figure 4.

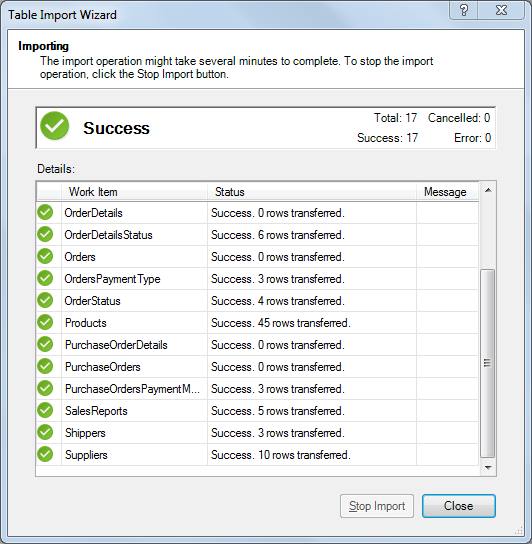

Click Finish to import the data:

Figure 5.

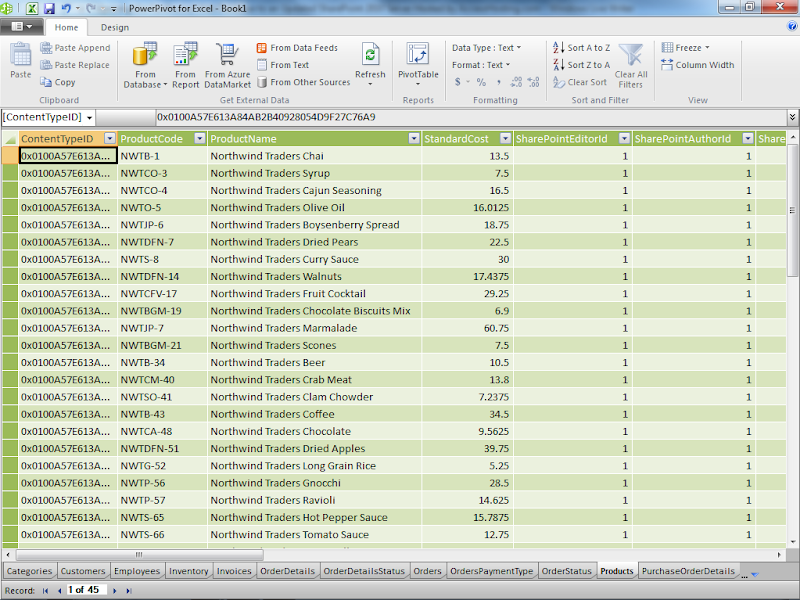

Click Close to display the contents of the first list in alphabetical order (Categories). Click the tab at the bottom of the page to display the list you want (Products for this example):

Figure 6.

Select the columns that don’t contain interesting information, right-click a selected column and choose Hide or Delete to remove it from the Pivot table, and drag foreign key (lookup) values, such as CategoryID and SupplierID, to the left:

Figure 7.

At this point, you can perform all common Excel PivotTable operations on the PowerPivot data.
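Under the covers, the Table Import Wizard reads the same OData feed, which listdata.svc returns as Atom XML by default. This minimal Python sketch parses entries from such a feed with the standard library and counts products per category, a very simple pivot. The three-entry feed is a hypothetical, trimmed sample (real entries carry more properties and links), and the CategoryID property name follows the post's wording rather than a verified SharePoint schema.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Namespaces used by OData (v2-era) Atom feeds.
NS = {
    "atom": "http://www.w3.org/2005/Atom",
    "m": "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata",
    "d": "http://schemas.microsoft.com/ado/2007/08/dataservices",
}


def parse_entries(atom_xml: str) -> list[dict[str, str]]:
    """Return one {property name: text} dict per <entry> in an OData Atom feed."""
    root = ET.fromstring(atom_xml)
    rows = []
    for entry in root.findall("atom:entry", NS):
        props = entry.find("atom:content/m:properties", NS)
        if props is None:
            continue
        # Drop the '{namespace}' prefix ElementTree puts on each tag.
        rows.append({child.tag.rsplit("}", 1)[-1]: (child.text or "") for child in props})
    return rows


# Hypothetical, trimmed sample feed.
SAMPLE = """<feed xmlns="http://www.w3.org/2005/Atom"
  xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
  xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices">
  <entry><content type="application/xml"><m:properties>
    <d:ProductName>Chai</d:ProductName><d:CategoryID>1</d:CategoryID>
  </m:properties></content></entry>
  <entry><content type="application/xml"><m:properties>
    <d:ProductName>Chang</d:ProductName><d:CategoryID>1</d:CategoryID>
  </m:properties></content></entry>
  <entry><content type="application/xml"><m:properties>
    <d:ProductName>Aniseed Syrup</d:ProductName><d:CategoryID>2</d:CategoryID>
  </m:properties></content></entry>
</feed>"""

rows = parse_entries(SAMPLE)
per_category = Counter(row["CategoryID"] for row in rows)
print(per_category)
```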


Windows Azure AppFabric: Access Control, WIF and Service Bus

Todd Baginski recommended that you Get Started Building SharePoint Online Solutions with the Windows Azure AppFabric in this 3/14/2011 article for Windows IT Pro:

For almost a year, I’ve been working with SharePoint Online (SPO) and cloud integration. I’ve found that the latest version of SPO provides many new capabilities and more flexibility for developers who want to create business solutions that run on SharePoint sites in the cloud. After I give a quick overview of what you can do with SPO, I’ll discuss how to do it. As you’ll soon find out, there are many different design patterns that you can use to integrate SPO solutions with cloud components.

What You Can Do

SPO is essentially SharePoint 2010 running in the Microsoft cloud. It lets you create sites for sharing documents and information with colleagues and customers. The latest version of SPO falls under the Office 365 umbrella of Microsoft Online Services, which includes Exchange Online, Lync Online, and integration with the Office Web Apps. You can sign up for the beta version of Office 365 and learn more about the new SPO version.

In past SPO versions, the main development tools have been the web browser and SharePoint Designer. In the latest version of SPO, you can do much more than configure solutions with a web browser and enhance them with SharePoint Designer. Now, you can deploy custom code to SPO in the form of sandboxed solutions.

Sandboxed solutions can deploy coded components, declarative components, Silverlight applications, and more. For a complete list of the components you can use in SPO, check out the Microsoft article “SharePoint Online: An Overview for Developers”. You might be surprised when you see how many different components you can deploy to SPO. Table 1 lists some of those components and gives examples of how you might use them to create SPO solutions and, in several cases, interact with cloud components. Although this list isn’t exhaustive, it provides a good starting point that can get you thinking about how to create SPO solutions that include sandbox-compatible components that integrate with cloud components.

Table 1: Components Available in Sandboxed Solutions for SPO

How You Can Do It

Now that you’ve seen many different ways to extend and enhance SPO sites, you’re probably wondering how to go about developing SPO solutions. To get started, you’ll need a standard SharePoint 2010 development environment. If you haven’t built one yourself, you can download the 2010 Information Worker Demonstration and Evaluation Virtual Machine. This virtual machine (VM) set includes everything you need to develop SharePoint 2010 solutions. Also, make sure you check out the SharePoint Online Developer Resource Center and the SharePoint Developer Center for training kits, SDKs, and sample code.

Next, if you’re creating Silverlight applications for use in your SPO sites, download and install the Microsoft Silverlight 4 Tools for Visual Studio 2010. You’ll also need the Silverlight 4 Developer Runtime.

Finally, download and install the following:

- Windows Azure SDK and Windows Azure Tools for Microsoft Visual Studio

- Hotfix Rollup in .NET 3.5 SP1 for Windows 7 and Windows 2008 R2

- Windows Azure AppFabric SDK

The Windows Azure Developer Center contains training kits and videos to help you get up to speed on this platform. After your development environment is set up, sign up for a Windows Azure account.

SPO Design Patterns

As Table 1 shows, there are many ways to integrate SPO sandbox-compatible components with cloud components. How you choose to go about integrating the two will depend on your environment and requirements. There are several options available. To begin, let’s discuss the client portion of the implementation.

By design, sandboxed components (which run in the context of the User Code Service) don’t have permission to make calls to external services. This behavior is identical to that in the on-premises version of SharePoint 2010. To learn more about the User Code Service and the sandbox execution model, check out the Sandboxed Solutions web page in the SharePoint 2010 Patterns & Practices documentation.

To make calls to external resources, such as cloud resources, you need to use code that runs on client machines in a web browser session. Both jQuery-based and Silverlight client solutions can be deployed as SPO sandboxed-solution components that interact with external services, such as cloud resources. This makes jQuery-based and Silverlight applications the perfect choice for clients that interact with cloud components.

After you decide which technology you want to use to implement your SPO solution, you need to figure out how to access the services that support your solution. Perhaps the most common design pattern you’ll see at first is calling external web and Windows Communication Foundation (WCF) services from a client browser session. The reason this pattern will be so common is that there are already many services on the Internet that line of business (LOB) applications rely on, such as weather data, traffic data, and customer relationship management (CRM) services (e.g., Salesforce.com). In this pattern, users access a jQuery-based or Silverlight application deployed to an SPO site. The jQuery-based or Silverlight application calls out to services available on the Internet. Figure 1 illustrates this pattern.


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

G. Marchetti explained how to Put a VM on Azure in a 3/12/2011 post to the TechNet blogs:

I have summarized here all the steps you need to take in order to deploy an Azure VM.

Step 1: Get your certificates

I assume that you have an active Azure subscription, that you have installed Visual Studio 2010 and the Azure SDK and tools, and that you have activated the VM role. You will need a management certificate for your subscription to deploy services, and one or more service certificates to communicate with those services securely. To generate an X.509 certificate for use with the management API:

1. Open the IIS manager, click on your server.

2. Select "Server Certificates" in the main panel.

3. Click "Create Self-Signed Certificate" in the actions panel

4. Give the certificate a friendly name.

5. Close IIS manager and run certmgr.msc

6. Find your certificate in "Trusted Root Certification Authorities"

7. Right-click it and select All Tasks / Export

8. Do not export the private key, choose the DER format, give it a name.

9. Navigate to the Windows Azure management portal.

10. Select Hosted Services / Management Certificates / Add a Certificate

11. Browse to the management certificate file and upload it.
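The thumbprint you will need in Step 3 is the SHA-1 hash of the certificate's DER encoding, written in hex. If you prefer not to copy it from the portal, this minimal Python sketch computes it from the DER file exported in step 8.

```python
import hashlib


def thumbprint(der_bytes: bytes) -> str:
    """Windows-style certificate thumbprint: SHA-1 of the DER bytes, upper-case hex."""
    return hashlib.sha1(der_bytes).hexdigest().upper()


def thumbprint_of_file(path: str) -> str:
    """Read a DER-encoded (.cer) certificate file and return its thumbprint."""
    with open(path, "rb") as f:
        return thumbprint(f.read())
```

For example, thumbprint_of_file("azure-management.cer") (a hypothetical file name) returns a 40-character hex string that should match the value the portal shows. The sketch expects DER input, which is why step 8 chooses that format; a Base-64 export would have to be decoded first.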

Step 2: Prepare the VM

I assume that you are familiar with Hyper-V and how to build a virtual machine on a Hyper-V host.

- Create a virtual machine on Hyper-V. Note that the maximum size of the virtual hard disk you specify determines which Azure VM sizes you can choose: an extra-small machine mounts a VHD of up to 15 GB, a small one up to 35 GB, and medium or larger up to 65 GB. This is just the size of the system VHD; you still receive local storage, mounted as a separate volume.

- Install Windows Server 2008 R2 on the VHD. It is the only supported OS as of this writing.

- Install the Azure integration components in the VM. They are contained in the wavmroleic.iso file, which is typically located in c:\program files\windows azure sdk\<version>\iso. Mount that file on the VM and then run the automatic installation process. This provisions the device drivers and management services required by the Azure hypervisor and fabric controller. Note that the setup process asks you for a local administrator password and reboots the VM. The password is encrypted and stored in c:\unattend.xml for future unattended deployment.

- Install and configure any application, role or update as you normally would.

- Configure Windows Firewall within the VM to open the ports that your application requires. It is recommended that you use fixed local ports.

- Open an administrator command prompt and run c:\windows\system32\sysprep\sysprep.exe

- Select "Enter System Out-of-Box Experience (OOBE)", check Generalize, and choose Shutdown.

This process removes any system-specific data (including the name and SID) from the image, in preparation for re-deployment on Azure. If your application is dependent on those data, you will have to take appropriate measures at startup on Azure (e.g. run a setup script for your application). The VHD is now ready to be uploaded. It is recommended to make a copy of it to keep as a template.

Note that every deployment to Azure starts from this VHD. No state is saved to the local disk if an Azure VM is recycled for any reason.
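The size limits in the first bullet of this step decide which instance sizes a given image can run on. This minimal Python sketch encodes them exactly as stated in the post (early-2011 limits, which may have changed since); the size labels are informal names, not verified configuration values.

```python
# Maximum system-VHD size per VM Role instance size, in GB, as listed in the post.
# "Medium" stands for medium or larger, which the post groups together.
VHD_LIMITS_GB = [("ExtraSmall", 15), ("Small", 35), ("Medium", 65)]


def smallest_size_for(vhd_max_gb: float) -> str:
    """Return the smallest instance size whose system-VHD limit fits the image."""
    for size, limit in VHD_LIMITS_GB:
        if vhd_max_gb <= limit:
            return size
    raise ValueError(f"{vhd_max_gb} GB exceeds the largest system-VHD limit "
                     f"of {VHD_LIMITS_GB[-1][1]} GB")


print(smallest_size_for(30))  # Small
```

Running the check before you create the VHD avoids building an image that is too large for the instance size you planned to use.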

Step 3: Upload the VM to Azure

For this you will need a command-line utility provided with the Azure SDK.

- Open a Windows Azure command prompt as administrator.

- Type:

```
csupload Add-VMImage -Connection "SubscriptionId=<YOUR-SUBSCRIPTION-ID>; CertificateThumbprint=<YOUR-CERTIFICATE-THUMBPRINT>" -Description "<IMAGE DESCRIPTION>" -LiteralPath "<PATH-TO-VHD-FILE>" -Name <IMAGENAME>.vhd -Location <HOSTED-SERVICE-LOCATION> -SkipVerify
```

The subscription ID can be retrieved from the Azure portal and the certificate thumbprint refers to the management certificate you created and uploaded before. The thumbprint can be retrieved from the portal as well. The description is an arbitrary string, the literal path is the full absolute path on the local disk where you stored your vhd. The image name is the name of the file once stored in Azure and the location is one of those available in the Azure portal. Note that the location must be specific, e.g. "North Central US". A region is not accepted (e.g. Anywhere US). SkipVerify will save you some time.

This command creates a blob in configuration storage and loads your VHD file into it for future use, but it does not create a service or start a VM for you. In the Azure portal, the stored virtual machine templates can be found under "VM Images".
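If you upload images repeatedly, it can help to assemble the command from its parts and reject a region before calling the tool. This minimal Python sketch uses only the switches shown in the command above; the "Anywhere" prefix test is an assumption based on the region example in the post, and the sketch does not check that the location actually exists.

```python
def csupload_args(subscription_id: str, thumbprint: str, description: str,
                  vhd_path: str, image_name: str, location: str) -> list[str]:
    """Assemble the csupload Add-VMImage argument list shown in the post."""
    # The post notes that a region such as "Anywhere US" is not accepted.
    if location.strip().lower().startswith("anywhere"):
        raise ValueError("use a specific location such as 'North Central US', not a region")
    connection = f"SubscriptionId={subscription_id}; CertificateThumbprint={thumbprint}"
    return ["csupload", "Add-VMImage",
            "-Connection", connection,
            "-Description", description,
            "-LiteralPath", vhd_path,
            "-Name", f"{image_name}.vhd",
            "-Location", location,
            "-SkipVerify"]
```

From an elevated Windows Azure command prompt, the list can be passed to subprocess.run(args, check=True); passing a list rather than one string avoids quoting problems with the Connection value.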

Step 4: Prepare the service model

Azure requires a service definition file and a service configuration file before deploying any role. These are XML files that are packaged and uploaded to the fabric controller for interpretation. You can generate both for the VM using Visual Studio 2010.

1. Open Visual Studio 2010 and create a new Windows Azure project.

2. Do NOT add any role to the project from the project setup wizard.

3. In the Solution Explorer panel, right-click the project name and select New Virtual Machine Role. Note that a service may be made of several roles, including multiple VMs.

4. In the VHD configuration dialog, specify your Azure account credentials and which of the stored virtual machine templates you'd like to use.

5. In the Configuration panel specify how many instances you'd like and what type. Remember the size constraints on the system VHDs.

6. In Endpoints, specify which ports and protocol must be open for your applications within the virtual machine (they should match those configured before).

7. Note that RDP connections are configured elsewhere.

8. Once the VM role configuration is done, right-click on the project name and select Publish. You have an option to create the service configuration package only, to be uploaded later via the portal, or to actually deploy the project. I am assuming that you have not got a service defined yet. It is advisable to configure RDP connections for debugging purposes at least during staging.

9. Select Enable connections, then specify a service certificate. This will contain a private key used to encrypt your credentials. If you have none, you can create one from this interface. If you do create a new certificate, click View, Details and Copy to File to export it. Make sure to include the private key.

10. Specify a user name and password to connect to this virtual machine. Change the account expiration date as necessary (but set it before the certificate expires).

11. Select "Create Service Package Only" and save the package file.

Step 5: Create the service in Azure

1. In the Azure Management Portal, select Hosted Services / New Service

2. Populate the form, specifying a name for your service and deployment options. Note that the location you select must be the same as the one specified at upload time for the virtual machine you want to use. Select the configuration package and file that you saved before. Add the certificate that you exported before for RDP.

3. Click OK to deploy. Start your deployed machines.

Step 6: Connect and enjoy.

From the machine where you generated the RDP certificate, connect to your virtual machines and test. Simply select the virtual machine in the Azure portal and click "Connect". An RDP file will be generated for you to save and open. Once debugging is finished, it is recommended that you disable RDP connections for production.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Gaston Hillar explained High Performance C++ Code in Windows Azure Roles in a 3/11/2011 article for Dr. Dobb's Journal:

If you have to migrate an existing high performance application to Windows Azure, you will probably have highly optimized C and C++ code that takes advantage of multicore hardware and SIMD instructions. A long time ago, the Windows Azure MIX 09 CTP introduced the ability to run Web and/or Worker Roles in full trust. The newest Windows Azure versions allow you to make P/Invoke (short for Platform Invoke) calls to invoke native code.

C++ code is very important in high performance computing. When you have to move compute-intensive algorithms to a Windows Azure cloud-based project, a Worker Role is usually an interesting option. However, there isn't a C++ Worker Role template in Windows Azure. You can choose between C#, Visual Basic and F#, and therefore, you have to work with managed code.

In "Multicore Programming Possibilities with Windows Azure SDK 1.3," I've already explained that you can use parallelized algorithms that exploit modern multicore microprocessors in Windows Azure. If you have single-threaded or multi-threaded C++ code, you can reuse it to create a C++ native assembly. Then you must enable full trust support in Windows Azure, after which you can invoke this native assembly by making a P/Invoke call. Remember that a P/Invoke call isn't free: it adds overhead, so you have to consider the impact of that overhead.
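Python's ctypes module plays the role for Python that P/Invoke plays for .NET: managed code declares a native function's signature and then calls into a native library. This minimal sketch is an analogy, not Azure-specific code; it calls the C runtime's abs function and assumes msvcrt on Windows and the system C library elsewhere.

```python
import ctypes
import ctypes.util
import sys

# Load the C runtime: msvcrt.dll on Windows, the system libc elsewhere.
# On POSIX, if find_library returns None, CDLL(None) loads the symbols
# already present in the running process, which include libc.
if sys.platform == "win32":
    libc = ctypes.CDLL("msvcrt")
else:
    libc = ctypes.CDLL(ctypes.util.find_library("c"))

# Declare the native signature, much as a [DllImport] declaration does in C#.
libc.abs.argtypes = [ctypes.c_int]
libc.abs.restype = ctypes.c_int

print(libc.abs(-42))  # 42
```

Every such call crosses the managed/native boundary, so the overhead the article warns about is paid per call; passing a whole batch of work to the native routine in one call amortizes it better than calling once per element.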

It is easy to run a Web Role or a Worker Role in full trust. You just have to set the enableNativeCodeExecution flag in the service definition file to true. If a Web Role calls native code, you have to run it in full trust. You can check the documentation for the basic format of a service definition file containing a Web Role here. You can find the same information for a Worker Role here.
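If you maintain several service definition files, setting the flag can be scripted. This minimal Python sketch, using only the standard library, adds enableNativeCodeExecution="true" to every WebRole and WorkerRole element. It assumes the 2008/10 ServiceDefinition schema namespace used by SDK-era .csdef files and that the flag is an attribute of the role element, as the article describes; the sample definition is hypothetical.

```python
import xml.etree.ElementTree as ET

CSDEF_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"


def enable_native_code(csdef_xml: str) -> str:
    """Set enableNativeCodeExecution="true" on every Web and Worker Role."""
    # Keep the schema namespace as the default one when serializing.
    ET.register_namespace("", CSDEF_NS)
    root = ET.fromstring(csdef_xml)
    for tag in ("WebRole", "WorkerRole"):
        for role in root.findall(f"{{{CSDEF_NS}}}{tag}"):
            role.set("enableNativeCodeExecution", "true")
    return ET.tostring(root, encoding="unicode")


# Hypothetical minimal service definition.
SAMPLE = ('<ServiceDefinition name="NativeDemo" xmlns="' + CSDEF_NS + '">'
          '<WebRole name="WebRole1" /><WorkerRole name="WorkerRole1" />'
          '</ServiceDefinition>')
print(enable_native_code(SAMPLE))
```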

You can include the C++ code that builds the native assembly within the Azure solution, and then you can deploy it. Microsoft provides a very simple MSDN Virtual Lab "Windows Azure Native Code." The virtual lab teaches you to include a simple C++ native assembly in a Windows Azure solution, enable full trust, and then create a simple ASP.NET Website that calls the native assembly. If you don't want to run the virtual lab, you can download the lab manual, which includes step-by-step instructions to perform the aforementioned tasks with Visual Studio 2008.

You can follow these instructions in Visual Studio 2010 with Windows Azure SDK 1.3 and you will be able to run C++ code in your Azure solutions. The lab manual is very useful because you have to perform some complex steps and configurations that might confuse even an expert developer. The lab manual includes screenshots and makes it really easy to build and deploy the Azure solution that calls native code. You can use this information to migrate C and C++ code to your cloud-based solutions that target Windows Azure.

TechNet Edge presented TechNet Radio: Developing Applications on Windows Azure on 3/1/2011 (missed when posted):

In today’s episode we hear from Abe Ray and Bart Robertson as they discuss how Microsoft IT is developing applications on the Windows Azure platform. Listen in as they go in depth into the three main business benefits for developing applications in the Cloud as well as how Windows Azure is optimizing the online social and digital media experience at Microsoft. [Emphasis added.]

Check out Windows Azure Subscription offers

Learn more about key deployment tools and essentials for Windows Azure by clicking on any of the free resources, videos or virtual labs below:

Resources:

Videos:

- TechNet Radio: Windows Azure – A Non-Microsoft Perspective

- TechNet Radio: Windows Azure in Education

- TechNet Radio: SQL Azure – Growing opportunities for Data in the Cloud

- TechNet Radio: Managing your systems in the Cloud

- TechNet Radio: Data Storage Solutions in the Cloud

- TechNet Radio: Business Intelligence in the Cloud

Virtual Labs:


Visual Studio LightSwitch

Tim Leung posted links to LightSwitch resources on 3/14/2011:

I’m currently interested in Microsoft LightSwitch, a rapid application development tool for creating Silverlight applications. LightSwitch makes it very easy to create data-centric applications for the desktop and web.

Here are some useful links related to LightSwitch:

01 – Getting Started

- Download the full beta 1 ISO from here (as opposed to the 3.6MB web installer):

http://www.microsoft.com/downloads/en/details.aspx?FamilyID=37551a54-bfd3-4af6-a513-676bbb2dfb69&displaylang=en

02 – Learning how to use LightSwitch

- The MSDN help is here:

http://msdn.microsoft.com/en-us/library/ff851953.aspx

- Watch the Beth Massi videos here:

http://msdn.microsoft.com/en-us/lightswitch/ff949858.aspx

03 – Getting Help

- Ask for help on the MSDN forums here:

http://social.msdn.microsoft.com/Forums/en-us/lightswitchgeneral/threads?page=1

- Report bugs on Connect here:

http://connect.microsoft.com/VisualStudio

04 – Other resources

- The official LightSwitch Blog:

http://blogs.msdn.com/b/lightswitch/

- Beyond the Basics Video on Channel 9:

http://channel9.msdn.com/Blogs/funkyonex/Visual-Studio-LightSwitch-Beyond-the-Basics

- Beth Massi on Dotnetrocks TV:

http://www.dnrtv.com/default.aspx?showNum=185


Windows Azure Infrastructure and DevOps

Benjamin Grudin asserted “It’s just smart business” in a deck for his Cloud Computing: A Clear SLA Now Can Save a Lot of Headaches Later post of 3/14/2011:

If you're like a lot of IT pros, you probably are tired of arguing with business units about service issues. The beauty of the private cloud approach is it lets you run IT like an external business. That means you set up a service-level agreement (SLA) with your business units just like any vendor in the public cloud, setting expectations and outlining responsibilities.

What this approach does is provide a process for defining the interface between IT and the business units in your company. The SLA should include several key components:

- It lists the components necessary for a given service to operate.

- It provides a service guarantee based on all of those pieces operating.

- It outlines the consequences for failing to meet the service guarantee.

From an IT perspective, you know that a given service requires certain components to operate. Since they are all interdependent, you also know the service is toast if one goes down. Therefore, the service-level agreement should list all of the required components with the understanding they all need to be operating.
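Because every listed component must be operating, the guarantee IT can offer is bounded by the product of the components' individual availabilities. The minimal Python sketch below shows that arithmetic, assuming independent failures; the three components and their figures are hypothetical.

```python
from math import prod


def composite_availability(availabilities) -> float:
    """Availability of a service that needs every component up at the same time.

    Assumes independent failures, so the result is the product of the parts.
    """
    return prod(availabilities)


components = {"web server": 0.999, "database": 0.999, "network": 0.9995}
print(f"{composite_availability(components.values()):.4f}")  # 0.9975
```

Three components at 99.9 percent or better combine to roughly 99.75 percent, so the service guarantee in the SLA has to be lower than what any single component delivers.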

From a business user's point of view, it's no different than buying a service outside of the company. If you buy storage on Amazon.com, you can expect to pay a certain amount per megabyte, and you can expect that you will get a certain level of uptime. You also know what will happen if the service goes down and you can't access it.

Some may look at this approach and think it's silly to operate this way in-house, but it's like any business deal. Everyone in the company needs to look at your cloud business services for what they are: a transaction-based service. If you set up an SLA with the terms of the agreement, you are setting up clear guidelines and expectations for both parties.

It's just smart business.

Benjamin is the Director of Data Center Management for Novell.


Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Ericka Chickowski asserted “Many larger organizations have opted for private cloud computing deployments, fearing that public clouds can't offer the proper level of security. But a new survey shows that private clouds may be just as much at risk” in a deck for her Private Cloud Computing No Safer than Public Cloud post of 3/14/2011 to the ChannelInsider blog:

Many IT departments have put off public cloud deployments in favor of private cloud virtualization projects under the guise of security concerns -- but new findings show that these private cloud deployments may be no more secure than their public counterparts. That's good news for managed service providers with the right security chops and infrastructure to support cloud deployments, some managed services experts say.

Conducted by Unisphere Research on behalf of security vendor AppSec, the survey asked 430 members of the Oracle Application Users Group about their database security and risk management practices. Among these respondents, 45 percent said they think there is still risk in private cloud computing and had reservations about sharing data and application services outside of their business units.

"One of the major show-stoppers so far with public cloud is fears about putting your data and your business out with an outside third-party provider," says Joe McKendrick, lead analyst for Unisphere and author of the survey report.

"There's a lot of discussion about private cloud where companies basically assemble their own clouds and provide their own online services across the enterprise as a remedy for this. They believe that because the data and applications stay within the bounds of the enterprise, there's a little bit more control. But the problem is then that there aren't enough controls within the enterprise to guard this data."

According to the survey, three out of four organizations did not have a defined strategy for cloud security. One of the biggest problems McKendrick believes hampers private cloud security is the rampant replication and scattering of database information with few controls that occurs when many businesses implement cloud solutions within the firewall.

"The foundation of private cloud is essentially having the enterprise make data and applications accessible to anybody across the enterprise who needs it and there are a lot of questions that raises," he explains. "What happens is a lot of data is replicated or taken out of the production environment, where it may be secure, to other environments where controls may not be as stringent."

These findings could be just the statistical foundation some managed service providers are looking for to strengthen their cloud and virtualization service pitches. For example, Miami, Fla.-based Terremark woos customers to use its cloud services with security, compliance and monitoring all leveraged as competitive differentiators.

"We have that same argument (about public versus private clouds) continuously," says Pete Nicoletti, vice president of Secure Information Services for Terremark. "We have the security skill sets and a lot of companies don't have skill sets, or 24 by seven security operation centers."

Not only do service providers have more resources, but they also handle cloud security daily for hundreds or even thousands of customers. That operational experience can give them definite security advantages over an in-house staff that is gung-ho about building out private clouds.

"We have what we refer to as collective intelligence, where we're doing it for a lot of other people. So what we learn here, we apply there," Nicoletti says. "I like to say that we're audited millions of times per year, because not only are we audited by the people showing up that do physical audits and PCI audits and NIST audits and HIPAA audits, but we're also hit by Qualys a gazillion times a day and hit by all these other scanning companies to make sure we're doing the right thing. Whereas a single company may only be audited once."

Herman Mehling asserted “Users will expect your private and hybrid clouds to deliver the kinds of infrastructure services that big public cloud players do. Here is how to build a cloud infrastructure that can do just that” in a deck for his 5 Essential Building Blocks for Your Private Cloud Infrastructure post of 3/14/2011 to the DevX.com blog:

While self-service private and hybrid clouds are creating a lot of interest, pressure is mounting on IT departments to deliver the kinds of simple, self-service, on-demand infrastructure services readily available from public clouds such as Rackspace and Amazon EC2.

However, most IT organizations lack the infrastructure to provide the dynamic elasticity found in the public cloud. At the same time, most public cloud services lack the governance, management and control required for enterprise IT.

"Cloud computing is a megatrend that is shaping the future of IT," says Julie Craig, research director at Enterprise Management Associates (EMA), in a report titled Cloud Coalition: rPath, newScale, and Eucalyptus Systems Partner on Self-Service Public and Private Cloud. "It is forcing companies to reevaluate their approach to delivering IT services, to staffing and budgeting, and to application governance and management."

rPath, newScale, and Eucalyptus Systems recently teamed up to create an integrated technology platform designed to provide an onramp to private and hybrid clouds in the enterprise. The three vendors have combined self-service, automation, and elasticity functionality in a solution to help IT departments create their own private clouds and manage access to public cloud resources. Another vendor worth checking out is Cloud.com.

"Cloud technologies are fast evolving, bringing with them opportunities to run IT more efficiently," says Michael Cote, industry analyst, RedMonk. To cope with that landscape, Cote thinks the best strategy now is to adopt an open and heterogeneous cloud infrastructure that can span private and public clouds.

Here are five essential building-blocks developers should consider when they create their cloud infrastructure:

1. Offer Comprehensive Support for New and Old Technologies

Whether your cloud is a private or hybrid model, it must be able to support the newest hardware and virtualization software as well as your data center's existing infrastructure, especially its data management tools. The name of the game is heterogeneous support.

Your data center should support open source and proprietary technologies from leading companies, such as Citrix, Cisco, EMC, IBM, Microsoft, Red Hat, and VMware.

2. Define and Meter the Cloud's Service Offerings

It's vital to define and meter service offerings -- whether the cloud is private or public. Such offerings should include resource guarantees, metering rules, resource management, and billing cycles.

Integrate these offerings into the overall portfolio of services, so they can be quickly and easily deployed and managed by users.
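A minimal sketch of metering and billing for such an offering might aggregate usage records per tenant within a billing cycle and price them against a rate card. The meter names, rates and tenants below are hypothetical, and a real system would also need to handle resource guarantees, proration and multiple billing cycles.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class UsageRecord:
    tenant: str      # business unit consuming the service
    meter: str       # hypothetical meter name, e.g. "vm_hours"
    quantity: float
    day: date


# Hypothetical rate card: price per unit for each metered resource.
RATES = {"vm_hours": 0.12, "storage_gb_month": 0.15}


def bill(records, rates, cycle_start: date, cycle_end: date) -> dict:
    """Price each tenant's metered usage inside the billing cycle [cycle_start, cycle_end)."""
    totals = defaultdict(float)
    for r in records:
        if not (cycle_start <= r.day < cycle_end):
            continue  # usage outside this cycle belongs to another invoice
        if r.meter not in rates:
            raise KeyError(f"no rate defined for meter {r.meter!r}")
        totals[r.tenant] += r.quantity * rates[r.meter]
    return dict(totals)


usage = [
    UsageRecord("finance", "vm_hours", 100, date(2011, 3, 1)),
    UsageRecord("finance", "storage_gb_month", 10, date(2011, 3, 15)),
    UsageRecord("hr", "vm_hours", 50, date(2011, 4, 2)),
]
march = bill(usage, RATES, date(2011, 3, 1), date(2011, 4, 1))
print(march)  # only "finance" has usage in March
```

Raising an error for an unknown meter, rather than silently billing zero, keeps the rate card and the metering rules in sync.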

3. Create Dynamic Workload and Resource Management

Every cloud must deliver on-demand, elastic performance while simultaneously meeting service level agreements (SLAs). To achieve that goal of optimum efficiency, IT and business folks need to create policies that will enable the various systems and resources to expand and contract on-the-fly based on the business priorities of the various workloads.
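One way to express such a policy, sketched here under the assumption of a fixed pool of identical instances, is to give every workload its guaranteed floor and then hand out spare capacity in business-priority order. The `Workload` fields and the allocation rule are hypothetical illustrations, not any vendor's scheduler.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    priority: int     # lower number = more important to the business
    demand: int       # instances the workload wants right now
    minimum: int = 0  # guaranteed floor, e.g. from an SLA (hypothetical)


def allocate(workloads, capacity: int) -> dict:
    """Give every workload its floor, then hand out spare capacity in priority order.

    Re-running this whenever demand or capacity changes makes each workload
    expand or contract according to the policy."""
    floors = sum(w.minimum for w in workloads)
    if floors > capacity:
        raise ValueError("capacity cannot cover the guaranteed minimums")
    grant = {w.name: w.minimum for w in workloads}
    spare = capacity - floors
    for w in sorted(workloads, key=lambda wl: wl.priority):
        extra = min(max(w.demand - w.minimum, 0), spare)
        grant[w.name] += extra
        spare -= extra
    return grant


demo = [
    Workload("orders", priority=1, demand=6, minimum=2),
    Workload("batch", priority=2, demand=4),
    Workload("reports", priority=3, demand=5, minimum=1),
]
print(allocate(demo, capacity=10))  # {'orders': 6, 'batch': 3, 'reports': 1}
print(allocate(demo, capacity=15))  # {'orders': 6, 'batch': 4, 'reports': 5}
```

When capacity is tight, the lowest-priority workload contracts to its floor; when capacity grows, it expands again without any change to the policy itself.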

4. Make Sure It's Reliable, Constantly Available and Secure

Constant availability, flawless reliability, and outstanding security are three of the must-haves of a cloud. Users expect a cloud to be fully operational even if a few components fail in one or more of the various shared resource pools of the cloud, external or internal.

Users also expect their cloud to be very secure. Security policies must cover every piece of your architecture, processes, and technologies.
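The expectation that a cloud keeps running when a few components fail usually rests on redundancy. A quick way to reason about it is the standard availability arithmetic for parallel (redundant) and serial (dependent) components, sketched below. It assumes failures are independent, which real shared resource pools do not always guarantee, and the 99% and 99.9% figures are illustrative only.

```python
def parallel(availability: float, replicas: int) -> float:
    """Availability of N redundant replicas, assuming independent failures:
    the tier is down only when every replica is down at the same time."""
    return 1.0 - (1.0 - availability) ** replicas


def serial(*availabilities: float) -> float:
    """Availability of components that must all be up for the service to work."""
    result = 1.0
    for a in availabilities:
        result *= a
    return result


web_tier = parallel(0.99, replicas=2)  # two 99% instances behind a load balancer
service = serial(web_tier, 0.999)      # that web tier depends on a 99.9% database
print(f"web tier {web_tier:.4%}, end-to-end {service:.4%}")
```

The serial term is the reason redundancy has to extend to every tier: the end-to-end figure can never exceed the weakest dependency in the chain.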

5. Implement Strong Visibility and Reporting Procedures

The success of all cloud services depends on strong visibility and reporting. Without those vital mechanisms, it can be very difficult to manage customer service levels, system performance, compliance, and billing.

All of the above areas also require high levels of granular visibility and reporting.
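As a small illustration of the reporting side, the sketch below turns raw health-check results into a per-service availability figure and compares it with an uptime target. The probe format and the targets are assumptions made for the example; a real deployment would feed this from its own monitoring system.

```python
from collections import defaultdict


def availability_report(probes, targets):
    """probes: iterable of (service, ok) health-check results.
    targets: service -> promised uptime percentage.
    Returns service -> (measured uptime %, whether the target was met)."""
    up = defaultdict(int)
    total = defaultdict(int)
    for service, ok in probes:
        total[service] += 1
        if ok:
            up[service] += 1
    report = {}
    for service, count in total.items():
        measured = 100.0 * up[service] / count
        report[service] = (measured, measured >= targets.get(service, 0.0))
    return report


# Hypothetical probe results: 2 failed checks out of 1,000 for storage.
samples = [("storage", True)] * 998 + [("storage", False)] * 2 + [("web", True)] * 1000
report = availability_report(samples, {"storage": 99.9, "web": 99.5})
print(report)
```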

<Return to section navigation list>

Cloud Security and Governance

Lori MacVittie (@lmacvittie) asserted Internal processes may be the best answer to mitigating risks associated with third-party virtual appliances in a preface to her Quarantine First to Mitigate Risk of VM App Stores post to F5’s DevCentral blog of 3/14/2011:

The enterprise data center is, in most cases, what aquarists would call a “closed system.” This is to say that from a systems and application perspective, the enterprise has control over what goes in.

The problem is, of course, those pesky parasites (viruses, trojans, worms) that find their way in. This is the result of allowing external data or systems to enter the data center without proper security measures. For web applications we talk about things like data scrubbing and web application firewalls, about proper input validation codified by developers, and even anti-virus scans of incoming e-mail.

But when we start looking at virtual appliances, at virtual machines, being hosted in “vm stores” much in the same manner as mobile applications are hosted in “app stores” today, the process becomes a little more complicated. Consider Stuxnet as a good example of the difficulty in completely removing some of these nasty contagions. Now imagine public AMIs or other virtual appliances downloaded from a “virtual appliance store”.

Hoff first raised this as a potential threat vector a while back, and reintroduced it when it was tangentially raised by Google’s announcement it had “pulled 21 popular free apps from the Android Market” because “the apps are malware aimed at getting root access to the user’s device.” Hoff continues to say:

This is going to be a big problem in the mobile space and potentially just as impacting in cloud/virtual datacenters as people routinely download and put into production virtual machines/virtual appliances, the provenance and integrity of which are questionable. Who’s going to police these stores?

-- Christofer Hoff, “App Stores: From Mobile Platforms To VMs – Ripe For Abuse”

Even if someone polices these stores, are you going to run the risk, ever so slight as it may be, that a dangerous pathogen may be lurking in that appliance? We had some similar scares back in the early days of open source, when a miscreant introduced a trojan into a popular open source daemon that was subsequently downloaded, compiled, and installed by a lot of people. It’s not a concept with which the enterprise is unfamiliar.

THE DATA CENTER QUARANTINE (TANK)

I cannot count the number of desperate pleas for professional advice and help with regard to “sick fish” that start with: I did not use a quarantine tank. A quarantine tank (QT) in the fish keeping hobby is a completely separate (isolated) tank maintained with the same water parameters as the display tank (DT).

The QT provides a transitory stop for fish destined for the display tank that offers a chance for the fish to become acclimated to the water and light parameters of the system while simultaneously allowing the hobbyist to observe the fish for possible signs of infection.

Interestingly, the QT is used before an infection is discovered, not just afterwards as is the case with people infected with highly contagious diseases. The reason fish are placed into quarantine even though they may be free of disease or parasites is because they will ultimately be placed into a closed system and it is nearly impossible to eradicate disease and parasites in a closed system without shutting it all down first. To avoid that catastrophic event, fish go into QT first and then, when it’s clear they are healthy, they can join their new friends in the display tank.

Now, the data center is very similar to a closed system. Once a contagion gets into its systems, it can be very difficult to eradicate it. While there are many solutions to preventing contagion, one of the best solutions is to use a quarantine “tank” to ensure health of any virtual appliance prior to deployment.

Virtualization affords organizations the ability to create a walled-garden, an isolated network environment, that is suitable for a variety of uses. Replicating production environments for testing and validation of topology and architecture is often proposed as the driver for such environments, but use as a quarantine facility is also an option. Quarantine is vital to evaluating the “health” of any virtual network appliance because you aren’t looking just for the obvious – worms and trojans that are detectable using vulnerability scans – but you’re looking for the stealth infection. The one that only shows itself at certain times of the day or week and which isn’t necessarily as interested in propagating itself throughout your network but is instead focused on “phoning home” for purposes of preparing for a future attack.

It’s necessary to fire up that appliance in a constrained environment and then watch it. Monitor its network and application activity over time to determine whether or not it’s been infected with some piece of malware that only rears its ugly head when it thinks you aren’t looking. Within the confines of a quarantined environment, within the ‘turn it off and start it over clean’ architecture comprised of virtual machines, you have the luxury of being able to better evaluate the health of any third-party virtual machine (or application for that matter) before turning it loose in your data center.
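The “watch it over time” step can be partly automated. A minimal sketch, assuming you can capture the quarantined appliance’s outbound connections as (timestamp, host, port) records, flags every destination outside an expected allowlist and records the hours of day it was contacted. That helps surface an appliance that only phones home at certain times. The host names and addresses are placeholders from documentation ranges, and this is no substitute for full traffic analysis.

```python
from collections import defaultdict
from datetime import datetime


def suspicious_egress(connections, allowlist):
    """connections: iterable of (timestamp, dest_host, dest_port) captured from the
    quarantined appliance's isolated network segment.
    allowlist: set of (host, port) pairs the appliance is expected to contact.
    Returns {(host, port): sorted hours of day contacted} for every destination
    outside the allowlist."""
    hours_seen = defaultdict(set)
    for ts, host, port in connections:
        if (host, port) not in allowlist:
            hours_seen[(host, port)].add(ts.hour)
    return {dest: sorted(hours) for dest, hours in hours_seen.items()}


allowed = {("updates.example.com", 443)}
log = [
    (datetime(2011, 3, 14, 9, 0), "updates.example.com", 443),
    (datetime(2011, 3, 14, 3, 17), "203.0.113.7", 8080),
    (datetime(2011, 3, 15, 3, 5), "203.0.113.7", 8080),
]
print(suspicious_egress(log, allowed))  # {('203.0.113.7', 8080): [3]}
```

A destination that shows up at the same odd hour on consecutive days, as in this made-up log, is exactly the kind of pattern that justifies keeping the appliance in quarantine longer.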

QUARANTINE in the DATA CENTER is not NEW

The idea of quarantine in the data center is not new. We’ve used it for some time as an assist in dealing with similar situations; particularly end-users infected with some malware detectable by end-user inspection solutions.

Generally we’ve used that information to quarantine the end-user on a specific network with limited access to data center resources – usually just enough to clean their environment or install the proper software necessary to protect them. We’ve used a style of quarantine to aid in the application lifecycle progression from development to deployment in production in the QA or ‘test’ phase wherein applications are deployed into an environment closely resembling the production environment as a means to ensure that configurations, dependencies and integrations are properly implemented and the application works as expected.

So the concept is not new; what is new is the need to recognize the benefits of a ‘quarantine first’ policy and then implement such a process in the data center to support the use of third-party virtual network appliances. As with many cloud and virtualization-related challenges, part of the solution almost always involves process. The answer lies in recognizing the challenges and applying the right mix of process, product and people to mitigate the operational risks associated with deploying new technology and architectures.

Patrick Butler Monterde posted Security Resources for Windows Azure on 3/13/2011:

The following lists of papers, articles, blogs, videos, and webcasts provide a multitude of resources for learning how to handle security when developing applications for the Windows Azure platform.

Security for Windows Azure

Single Sign-On from Active Directory to a Windows Azure Application

This paper contains step-by-step instructions for using Windows Identity Foundation, Windows Azure, and Active Directory Federation Services (AD FS) 2.0 for achieving single sign-on (SSO) across web applications that are deployed both on premises and in the cloud. Previous knowledge of these products is not required for completing the proof of concept (POC) configuration. This document is meant to be an introductory document, and it ties together examples from each component into a single, end-to-end example.

This document from the Patterns and Practices team and developed with help from customers, field engineers, product teams, and industry experts provides solutions for securing common application scenarios on Windows Azure based on common principles, patterns, and practices.

Windows Azure Security Overview

This paper provides a comprehensive look at the security available with Windows Azure. Written by Charlie Kaufman and Ramanathan Venkatapathy, the paper examines the security functionality available from the perspectives of the customer and Microsoft operations, discusses the people and processes that help make Windows Azure more secure, and provides a brief discussion about compliance.

Security Best Practices for Developing Windows Azure Applications

This paper, authored by the Microsoft Security Engineering Center (MSEC) and Microsoft’s Online Services Security & Compliance (OSSC) team along with the Windows Azure product group, focuses on the security challenges and recommended approaches to designing and developing more secure applications for Microsoft’s Windows Azure platform.

Cloud Security - Crypto Services and Data Security in Windows Azure

This MSDN Magazine article introduces some of the basic concepts of cryptography and related security within the Windows Azure platform. The article also reviews some of the cryptography services and providers in Windows Azure and discusses the security implications for any transition to Windows Azure.

Securing Microsoft's Cloud Infrastructure

This paper by the Online Services Security and Compliance (OSSC) team shows how the coordinated and strategic application of people, processes, technologies, and experience results in continuous improvements to the security of the Microsoft cloud environment.

J.D. Meier’s blog posts on Windows Azure security

J.D. Meier is a principal program manager for developer guidance at Microsoft and has contributed to several of Microsoft’s patterns and practices books. He uses his blog to share his latest research, which has recently focused on security for Windows Azure.

TechNet Webcast - Windows Azure Security - A Peek Under the Hood (Level 100)

In this Security Talk webcast, Charlie Kaufman, a software architect on the Windows Azure team at Microsoft, describes how the Windows Azure software is structured to accept software and configuration requests from customers, deploy the software within virtual machines, and allocate storage and database resources to hold a persistent state—all while maintaining a minimal attack surface and several layers of defense in depth. Charlie also demonstrates how Windows Azure security compares with systems operated on a customer's premises. (60:00)

MSDN Webcast - Security Talk - Using Windows Azure Storage Securely (Level 200)

In this Security Talk webcast, Jai Haridas, an engineer on the Windows Azure Storage team at Microsoft, covers how to store and access data securely, and how to share blobs with other users using container access control lists (ACLs) and the SAS feature. Jai also discusses some of the best practices for using Windows Azure Storage. (60:00)

MSDN Webcast - Security Talk - Azure Federated Identity Security Using ADFS 2.0 (Level 300)

In this Security Talk webcast, John Steer, a security architect for the Microsoft IT Information Security group, explains how to create a Windows Azure application that uses the Active Directory Federation Services (ADFS) 2.0 Security Token Service (STS), previously known as Geneva Server, for back-end authentication. (60:00)

Cloud Cover Episode 8 - Shared Access Signatures

In this episode of Cloud Cover, learn how to create and use Shared Access Signatures (SAS) in Windows Azure blob storage and discover how to easily create SAS signatures yourself. (41:50)

Cloud Cover Episode 15 - Certificates and SSL

In this episode of Cloud Cover, learn how certificates work in Windows Azure and how to enable SSL. Also, discover a tip on uploading public key certificates to Windows Azure. (29:08)

Security for SQL Azure

This MSDN library article describes the SQL Azure firewall and how to use it to protect data from unwanted access.

Security Guidelines and Limitations (SQL Azure Database)

This MSDN library article describes guidelines and limitations for the following security-related aspects of SQL Azure databases: firewall, encryption and certificate validation, authentication, login and users, and security best practices.

Getting Started with SQL Azure

This MSDN library article describes how to set the firewall settings using the SQL Azure portal. It also explains how to overcome firewall errors.

Security Guidelines for SQL Azure

This TechNet Wiki article provides an overview of security guidelines for customers connecting to SQL Azure Database, and building secure applications on SQL Azure.

Security-related posts on the SQL Azure team blog

These posts on the SQL Azure team blog help customers with a variety of security-related concerns that are top-of-mind for the community.

Microsoft SQL Azure Security Model

This IT Mentors training video covers authentication and authorization for SQL Azure. (30:00)

Security for Windows Azure AppFabric

Securing and Authenticating an AppFabric Service Bus Connection

This MSDN Library article discusses how to develop applications that use the Windows Azure AppFabric Service Bus to make secure connections.

Building Applications that Use AppFabric Access Control

This MSDN Library article discusses how to use the Windows Azure AppFabric Access Control service (AC) in your applications to build trust with Web services, request tokens, use the management service, and access control quot

<Return to section navigation list>

Cloud Computing Events

Shlomo Swidler (@ShlomoSwidler) offered a Roundup: CloudConnect 2011 Platforms and Ecosystems BOF on 3/14/2011:

The need for cloud provider price transparency. What is a workload and how to move it. “Open”ness and what it means for a cloud service. Various libraries, APIs, and SLAs. These are some of the engaging discussions that developed at the Platforms and Ecosystems “Birds of a Feather”/“Unconference”, held on Tuesday evening March 8th during the CloudConnect 2011 conference. What about the BOF worked? What didn’t? What should be done differently in the future? Below are some observations gathered from early feedback; please leave your comments, too.

Roundup

In true unconference form, the discussions reflected what was on the mind of the audience. Some were more focused than others, and some were more contentious than others. Each turn of the wheel brought a new combination of experts, topics, themes, and participants.

Provider transparency was a hot subject, both for IaaS services and for PaaS services. When you consume a utility, such as water, you have a means to independently verify your usage: you can look at the water meter. Or, if you don’t trust the supplier’s meter, you can install your own meter on your main intake line. But with cloud services there is no way to measure many kinds of usage that you pay for – you must trust the provider to bill you correctly. How many Machine Hours did it take to process my SimpleDB query, or CPU Usage for my Google App Engine request? Is that internal IP address I’m communicating with in my own availability zone (and therefore free to communicate with) or in a different zone (and therefore costs money)? Today, the user’s only option is to trust the provider. Furthermore, it would be useful if we had tools to help estimate the cost of a particular workload. We look forward to more transparency in the future.
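Until providers offer more transparency, you can at least reconcile the usage you are able to measure yourself against the bill. The sketch below, with hypothetical meter names and an assumed 2% tolerance, flags meters whose billed quantity diverges from your own measurement and meters that appear on only one side. It cannot check usage you have no way to measure, such as a provider's internal Machine Hours or CPU accounting.

```python
def reconcile(measured, billed, tolerance=0.02):
    """Compare your own readings with the provider's bill, meter by meter.

    Returns {meter: (measured, billed)} for meters whose billed quantity differs
    from the measurement by more than `tolerance` (a fraction), and for meters
    that appear on only one side."""
    flagged = {}
    for meter in sorted(set(measured) | set(billed)):
        ours, theirs = measured.get(meter), billed.get(meter)
        if ours is None or theirs is None:
            flagged[meter] = (ours, theirs)  # nothing to compare against
        elif ours == 0:
            if theirs != 0:
                flagged[meter] = (ours, theirs)  # billed for usage we never saw
        elif abs(theirs - ours) / ours > tolerance:
            flagged[meter] = (ours, theirs)
    return flagged


# Hypothetical monthly figures; meter names are placeholders.
my_meter = {"instance_hours": 720, "egress_gb": 50.0}
invoice = {"instance_hours": 720, "egress_gb": 58.0, "cross_zone_gb": 12.0}
print(reconcile(my_meter, invoice))  # {'cross_zone_gb': (None, 12.0), 'egress_gb': (50.0, 58.0)}
```

A charge such as the cross-zone traffic line, which your own instrumentation never recorded, is precisely the kind of item the discussion says users currently have to take on trust.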

As they rotated through the topics, two of the themes generated similar discussions: Workload Portability and Avoiding Vendor Lock-in. The themes are closely related, so this is not surprising. Lesson learned: next time use themes that are more orthogonal, to explore the ecosystem more thoroughly.

In total, nine planned discussions took place over the 90 minutes. A few interesting breakaway conversations spun off as well, as people opted to explore other aspects separately from the main discussions. I think that’s great: it means we got people thinking and engaged, which was the goal.

Some points for improvement: The room was definitely too small and the acoustics lacking. We had a great turnout – over 130 people, despite competing with the OpenStack party – but the undersized room was very noisy and some of the conversations were difficult to follow. Next time: a bigger room. And more pizza: the pizza ran out during the first round of discussions.

Participants who joined after the BOF had kicked off told me they were confused about the format. It is not easy to join in the middle of this kind of format and know what’s going on. In fact, I spent most of the time orienting newcomers as they arrived. Lesson learned: next time show a slide explaining the format, and have it displayed prominently throughout the entire event for easy reference.

Overall the BOF was very successful: lots of smart people having interesting discussions in every corner of the room. Would you participate in another event of this type? Please leave a comment with your feedback.

Thanks

Many thanks to the moderators who conducted each discussion, and the experts who contributed their experience and ideas. These people are: Dan Koffler, Ian Rae, Steve Wylie, David Bernstein, Adrian Cole, Ryan Dunn, Bernard Golden, Alex Heneveld, Sam Johnston, David Kavanagh, Ben Kepes, Tony Lucas, David Pallman, Jason Read, Steve Riley, Andrew Shafer, Jeroen Tjepkema, and James Urquhart. Thanks also to Alistair Croll not only for chairing a great CloudConnect conference overall, but also for inspiring the format of this BOF.

And thanks to all the participants – we couldn’t have done it without you.

Brenda Michelson and David Linthicum combined to present a Cloud Connect Roundup Podcast on 3/14/2011:

Saturday morning, I joined Dave Linthicum on his cloud computing podcast to discuss our impressions and findings from Cloud Connect. Check out the podcast. Learn what Kung Fu Panda is doing in the cloud.


<Return to section navigation list>

Other Cloud Computing Platforms and Services

Klint Finley (@klintron) reported Couchbase Releases Its First Product Since Merger: Couchbase Server in a 3/14/2011 post to the ReadWriteCloud blog:

Couchbase has released its first product since its formation: Couchbase Server. It's powered by Apache CouchDB and is available in both Enterprise and Community editions. Couchbase is the result of the merger between the companies CouchOne and Membase.

According to J. Chris Anderson, chief architect of mobile at Couchbase, the new product isn't a huge infusion of Membase into CouchDB. But it's not just CouchDB either. "Couchbase Server includes CouchDB, Geocouch, and a pinch of Couchbase magic," he says.

According to Couchbase's announcement, here are the differences between the two editions:

Couchbase Server Enterprise Edition is recommended for organizations that plan to use the product in production. It is subjected to a rigorous quality assurance process and delivered with a "long-tail" maintenance guarantee, including indemnification and SLA-backed support options to minimize system downtime and revenue uptime. The API is fully compatible with Couchbase Server Community Edition.

Couchbase Server Community Edition is for developers or hobbyists who want a compiled, easily installed binary distribution. Applications developed with the Community Edition can be deployed into production using the Enterprise Edition without modification.

Couchbase claims that CouchDB is the most widely deployed NoSQL database in the world. The database is used by organizations such as Apple, The BBC, CERN and Mozilla. You can read our coverage of CERN's use of CouchDB here. CouchDB also powers Canonical's Ubuntu One service.

The company also announced the members of its advisory board, including: SQLite creator Richard Hipp, Facebook Director of Infrastructure Software Engineering Robert Johnson, Zynga CTO Cadir Lee, Cloudera CEO Michael Olson and several other high profile business people and database experts. A full list of the advisors is here.

Couchbase also recently released a developer preview of its Mobile Couchbase package.

CouchOne was founded in Oakland, CA in 2009 as Relaxed. It changed its name first to Couchio and then to CouchOne in 2010. Membase was founded as NorthScale in 2010 in Mountain View, CA. It changed its name to Membase later that year.

Couchbase competes with other big data-oriented and NoSQL databases, especially the CouchDB-based BigCouch from Cloudant.

Oakland doesn’t have many hot technology startups.

Georg Singer pointed to a post by Daniel Berninger in his Comparing Cloud Computing Prices post of 3/14/2011 to his Cloud Computing Economics blog:

During our research we recently came across an interesting blog post titled “The Case for a Cloud Computing Price War”, in which the author, Daniel Berninger, argues that Amazon has kept prices for its EC2 offering stable for years without passing price-performance improvements on to its customers. The article further argues that cloud computing prices are extremely hard to compare, with up to a 10x difference between the least and the most expensive cloud computing offers.

To resolve this issue, the site offers a cloud price calculator that takes computing power (in ECU), memory (GB), storage (TB), bandwidth (TB) and price ($) as input and calculates a so-called cloud price normalization (CPN) index. This index is simply the arithmetic sum of memory, storage and bandwidth divided by price, and can be interpreted as “calculation power per dollar”. This simple approach certainly makes the offers somewhat comparable. The real open question is whether all those factors can be treated equally, and whether more memory can compensate for less storage. In our opinion such a calculation does have some meaning, but whether the input factors can be treated equally depends a lot on the business context. Also missing is the fact that this model is not applicable to other cloud computing offerings (Microsoft’s Azure and Google Apps) that simply cannot be broken down into memory, storage, bandwidth and price.
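The index as described above can be written in a few lines. This sketch follows the stated formula (memory + storage + bandwidth, divided by price) and gives compute (ECU) a weight of zero by default, because the formula as quoted leaves it out even though the calculator takes it as input. The `weights` parameter is an assumption added to show how the business context could be encoded, and the offers and prices are invented numbers, not real provider data.

```python
# Weights of 1.0 reproduce the plain sum; "ecu" is weighted 0.0 because the
# formula as quoted lists only memory, storage and bandwidth.
DEFAULT_WEIGHTS = {"ecu": 0.0, "memory_gb": 1.0, "storage_tb": 1.0, "bandwidth_tb": 1.0}


def cpn(offer, price_usd, weights=None):
    """Cloud price normalization index: weighted sum of resources per dollar."""
    if price_usd <= 0:
        raise ValueError("price must be positive")
    weights = DEFAULT_WEIGHTS if weights is None else weights
    return sum(w * offer[k] for k, w in weights.items()) / price_usd


# Hypothetical offers; the numbers are invented for illustration only.
offer_a = {"ecu": 4, "memory_gb": 8, "storage_tb": 1, "bandwidth_tb": 1}
offer_b = {"ecu": 2, "memory_gb": 4, "storage_tb": 2, "bandwidth_tb": 2}
print(cpn(offer_a, 250), cpn(offer_b, 100))  # 0.04 0.08
```

Note that the default weights add gigabytes of memory to terabytes of storage as if they were the same unit, which is exactly the weighting question raised above; choosing non-default weights is where the business context comes in.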

<Return to section navigation list>
