Windows Azure and Cloud Computing Posts for 1/27/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

![AzureArchitecture2H640px3](http://lh5.ggpht.com/_GdO7DQgAn3w/TUHVSBznE1I/AAAAAAAAIbM/KkfgG5pYN-4/AzureArchitecture2H640px3%5B3%5D%5B3%5D.png?imgmax=800)

• Updated 1/27/2011 at 3:30 PM PST with a few new articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

• Steve Yi delivered a brief Overview of SQL Azure DataSync to the SQL Azure Team blog on 1/27/2011:

SQL Azure Data Sync provides a cloud-based synchronization service built on top of the Microsoft Sync Framework. It provides bi-directional data synchronization and the capability to easily share data across SQL Azure instances and multiple data centers. Last week Russ Herman posted a step-by-step walkthrough of SQL Azure to SQL Server synchronization to the TechNet Wiki.

Typical usage scenarios for Data Sync

- On-Premises to Cloud

- Cloud to Cloud

- Cloud to Enterprise

- Bi-directional or sync-to-hub or sync-from-hub synchronization

Conclusion

SQL Azure Data Sync is a rapidly maturing synchronization framework meant to provide synchronization in cloud and hybrid cloud solutions that use SQL Azure. In a typical usage scenario, one SQL Azure instance is the "hub" database, which provides bi-directional synchronization with the member databases in the synchronization scheme.
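To make the hub-and-spoke idea concrete, here is a toy Python model of one sync pass. It is not the Data Sync service or the Sync Framework API: the `Database` class, the version numbers standing in for change tracking, and the last-writer-wins conflict rule are all assumptions made for illustration. Each member is configured as sync-to-hub, sync-from-hub or bi-directional, and the hub gathers changes before pushing them back out.

```python
from dataclasses import dataclass, field
from enum import Enum


class SyncDirection(Enum):
    """The three member directions listed in the usage scenarios."""
    TO_HUB = "sync-to-hub"          # member changes flow up to the hub only
    FROM_HUB = "sync-from-hub"      # hub changes flow down to the member only
    BIDIRECTIONAL = "bi-directional"


@dataclass
class Database:
    """Toy database: primary key -> (version, value); versions stand in for change tracking."""
    name: str
    rows: dict = field(default_factory=dict)

    def upsert(self, key, value, version):
        self.rows[key] = (version, value)


def copy_newer(source: Database, target: Database) -> None:
    """Copy every row that is missing or older in the target (last-writer-wins, an assumption)."""
    for key, (version, value) in source.rows.items():
        current = target.rows.get(key)
        if current is None or version > current[0]:
            target.rows[key] = (version, value)


def sync_group(hub: Database, members: list) -> None:
    """One pass over a sync group: gather changes into the hub, then distribute them."""
    # Upload phase: members that send changes update the hub.
    for member, direction in members:
        if direction in (SyncDirection.TO_HUB, SyncDirection.BIDIRECTIONAL):
            copy_newer(member, hub)
    # Download phase: members that receive changes pick up the hub's rows.
    for member, direction in members:
        if direction in (SyncDirection.FROM_HUB, SyncDirection.BIDIRECTIONAL):
            copy_newer(hub, member)


# Hypothetical group: an on-premises database syncs both ways, a branch only uploads.
hub = Database("hub")
on_premises = Database("on-premises")
branch = Database("branch")
on_premises.upsert(1, "Contoso", version=1)
branch.upsert(2, "Fabrikam", version=1)
sync_group(hub, [(on_premises, SyncDirection.BIDIRECTIONAL), (branch, SyncDirection.TO_HUB)])
print(sorted(hub.rows), sorted(on_premises.rows), sorted(branch.rows))
# prints [1, 2] [1, 2] [2]
```

Because the branch is sync-to-hub only, it contributes its row but never receives the on-premises row; a bi-directional member ends up with everything the hub holds.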

Preview Chapter 5: An Introduction to SQL Azure from O’Reilly Media’s Microsoft Azure: Enterprise Application Development by Richard J. Dudley and Nathan Duchene by signing up for a Safari Books Online trial.


Marketplace DataMarket and OData

My (@rogerjenn) Vote for Windows Azure and OData Open Call Sessions at MIX 2011 of 1/26/2011 lists these six potential MIX 2011 sessions:

Following are the … Open Call page’s session abstracts that contain OData in their text:

Optimizing Data Intensive Windows Phone 7 Applications: Shawn Wildermuth

As many of the Windows Phone 7 applications we are writing are using data, it becomes more and more important to understand the implications of that data. In this talk, Shawn Wildermuth will talk about how to monitor and optimize your data usage. Whether you’re using Web Services, JSON or OData, there are ways to improve the user experience and we’ll show you how!

Creating OData Services with WCF Data Services: Gil Fink

Data is a first-class element of every application. The Open Data Protocol (OData) applies web technologies such as HTTP, AtomPub and JSON to enable a wide range of data sources to be exposed over HTTP in a simple, secure and interoperable way. This session will cover WCF Data Services best practices so you can use it the right way.

REST, ROA, and Reach: Building Applications for Maximum Interoperability: Scott Seely

We build services so that someone else can use those services instead of rolling their own. Terms like Representational State Transfer, Resource Oriented Architectures, and Reach represent how we reduce the Not Invented Here syndrome within our organizations. That’s all well and good, but it doesn’t necessarily tell us what we should actually DO. In this talk, we’ll get a common understanding of what REST and ROA are and then take a look at how these things allow us to expose our services to the widest possible audience. We’ll even cover the hard part: resource structuring. Then, we’ll look at how to implement the hard bits with ROA’s savior: OData!

Using T4 templates for deep customization with Entity Framework 4: Rick Ratayczak

Using T4 templates and customizing them for your project is fairly straightforward if you know how. Rick will show you how to generate your code for OData, WPF, ASP.NET, and Silverlight. Using repositories and unit-of-work patterns will help to reduce time to market as well as coupling to the data store. You will also learn how to generate code for client and server validation and unit tests.

WCF Data Services, OData & jQuery. If you are an asp.net developer you should be embracing these technologies: James Coenen-Eyre

The session would cover the use of WCF Data Services, Entity Framework, OData, jQuery and jQuery Templates for building responsive, client-side web sites. Using these technologies combined provides a really flexible, fast and dynamic way to build public-facing web sites. By utilising jQuery Ajax calls and jQuery templating, we can build really responsive public-facing web sites and push a lot of the processing onto the client rather than depending on Server Controls for rendering dynamic content. I have used this technique with great success on the last 3 projects I have worked on, and combined with the use of MemoryCache on the server it provides a high-performance solution with reduced load on the server. The session would walk through a real-world example of a new project that will be delivered in early 2011: a musician and artist catalog site combined with an e-commerce site for selling merchandise as well as digital downloads.

On-Premise Data to Cloud to Phone - Connecting with OData: Colin Melia

You have corporate data to disseminate into the field, or service records that need to be updated in the field. How can you quickly make that data accessible from your on-premise system to Windows Phone users? Come take a look at OData with Microsoft MVP for Silverlight and leading WP7 trainer, Colin Melia, and see how you can expose data and services into the cloud and quickly connect to it from the phone, from scratch.
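Several of these abstracts rely on the fact that OData exposes data as plain HTTP resources queried through URL conventions. As a rough illustration, the Python helper below composes a query URL from the standard OData system query options `$filter`, `$select`, `$orderby`, `$top` and `$skip`. The service root `http://example.com/Catalog.svc` and the `Products` entity set are hypothetical; a real WCF Data Services endpoint defines its own.

```python
from urllib.parse import quote, urlencode


def odata_query_url(service_root, entity_set, *, filter_expr=None, select=None,
                    orderby=None, top=None, skip=None):
    """Compose an OData query URL from the standard system query options."""
    options = {}
    if filter_expr:
        options["$filter"] = filter_expr       # e.g. "Price gt 20"
    if select:
        options["$select"] = ",".join(select)  # project only the listed properties
    if orderby:
        options["$orderby"] = orderby          # e.g. "Name desc"
    if top is not None:
        options["$top"] = str(top)             # page size
    if skip is not None:
        options["$skip"] = str(skip)           # rows to skip, for paging
    url = service_root.rstrip("/") + "/" + entity_set
    if options:
        # quote (not quote_plus) encodes spaces as %20; keep $, commas and quotes readable.
        url += "?" + urlencode(options, quote_via=quote, safe="$,'")
    return url


# Hypothetical service root and entity set.
print(odata_query_url("http://example.com/Catalog.svc", "Products",
                      filter_expr="Price gt 20", select=["Name", "Price"], top=10))
# prints http://example.com/Catalog.svc/Products?$filter=Price%20gt%2020&$select=Name,Price&$top=10
```

Since every query is just a URL, the same request can be issued from a Windows Phone client, a jQuery Ajax call or a browser address bar, which is the interoperability argument these sessions make.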


Windows Azure AppFabric: Access Control and Service Bus

• Itai Raz published Introduction to Windows Azure AppFabric blog posts series – Part 1: What is Windows Azure AppFabric trying to solve? on 1/27/2011 to the Windows Azure AppFabric Team blog:

Recently, in October 2010, at our Professional Developers Conference (PDC) we made some exciting roadmap announcements regarding Windows Azure AppFabric, and we have already gotten very positive feedback regarding this roadmap from both customers and analysts (Gartner Names Windows Azure AppFabric "A Strategic Core of Microsoft's Cloud Platform").

As a result of these announcements, we wanted to publish a series of blog posts that gives a refreshed introduction to Windows Azure AppFabric, its vision and its roadmap.

Until the announcements at PDC we presented Windows Azure AppFabric as a set of technologies that enable customers to bridge applications in a secure manner across on-premises and the cloud. This is all still true, but with the recent announcements we now broaden this, and talk about Windows Azure AppFabric as being a comprehensive cloud middleware platform that raises the level of abstraction when developing applications on the Windows Azure Platform.

But first, let's begin by explaining what exactly it is we are trying to solve.

Businesses of all sizes experience tremendous cost and complexity when extending and customizing their applications today. Given the constraints of the economy, developers must find new ways to do more with less while simultaneously finding innovative ways to keep up with the changing needs of the business. This has led to the emergence of composite applications as a solution development approach. Instead of significantly modifying existing applications and systems, and relying solely on the packaged software vendor when there is a new business need, developers are finding it a lot cheaper and more flexible to build these composite applications on top of, and surrounding, existing applications and systems.

Developers are now also starting to evaluate newer cloud-based platforms, such as the Windows Azure Platform, as a way to gain greater efficiency and agility. The promised benefits of cloud development are impressive: it enables greater focus on the business rather than on running the infrastructure.

As noted earlier, customers already have a very large base of existing heterogeneous and distributed business applications spanning different platforms, vendors and technologies. The use of cloud adds complexity to this environment, since the services and components used in cloud applications are inherently distributed across organizational boundaries. Understanding all of the components of your application - and managing them across the full application lifecycle - is tremendously challenging.

Finally, building cloud applications often introduces new programming models, tools and runtimes, making it difficult for customers to enhance, or transition from, their existing server-based applications.

Windows Azure AppFabric is meant to address these challenges through three main concepts:

1. Middleware Services - pre-built higher-level services that developers can use when developing their applications, instead of the developers having to build these capabilities on their own. This reduces the complexity of building the application and saves a lot of time for the developer.

2. Building Composite Applications - capabilities that enable you to assemble, deploy and manage a composite application that is made up of several different components as a single logical entity.

3. Scale-out Application Infrastructure - capabilities that make it seamless to get the benefits of the cloud, such as elastic scale, high availability, density, multi-tenancy, etc.

So, with Windows Azure AppFabric you don't just get the common advantages of cloud computing, such as not having to own and manage the infrastructure; you also get pre-built services, a development model, tools, and management capabilities that help you build and run your application in the right way and enjoy more of the great benefits of cloud computing, such as elastic scale, high availability, multi-tenancy, high density, etc.

Tune in to the future blog posts in this series to learn more about these capabilities and how they help address the challenges noted above.

Other places to learn more on Windows Azure AppFabric are:

- Windows Azure AppFabric website and specifically the Popular Videos under the Featured Content section.

- Windows Azure AppFabric developers MSDN website

If you haven't already taken advantage of our free trial offer, make sure to start using Windows Azure AppFabric today!

A couple of detailed infographics would have contributed greatly to this post.

Sebastian W (@qmiswax) posted Windows Azure AppFabric and CRM 2011 online part 2 to his Mind the Cloud blog on 1/27/2011:

A while back I published a post about configuring the out-of-the-box integration between CRM 2011 Online and AppFabric, covering all of the configuration on the CRM side. Now it’s time to present the client application. I’d call it a listener app: its main role is to listen for and receive all messages sent from CRM Online via AppFabric. The application itself is hosted on premises (to be 100% honest, on my laptop).

The CRM SDK version from December 2010 contains a sample app, and I’m not going to reinvent the wheel. I’ll show what you need to configure in that app to receive messages from our CRM system. The example presented is the simplest one; as mentioned in the previous post, it is a one-way integration, so messages come from CRM Online via the AppFabric Service Bus to our app. Let’s get our hands dirty. I attached a ready-to-compile solution, which is a modified example from the SDK (I removed the Azure storage code to simplify it). All you need to do is change app.config.

Values to change

- IssuerSecret: Current Management Key from appfabric.azure.com

- ServiceNamespace: Service Namespace from appfabric.azure.com

- IssuerName: Management Key Name

- ServicePath: the path part of the Service Bus URL
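As a sketch of how those four values fit together, the Python class below checks that each one is filled in and composes a listener address from the namespace and path. The `<namespace>.servicebus.windows.net` host pattern is the Service Bus naming convention of the time, but the scheme depends on the binding the sample uses, so it is left as a parameter. The class, the `service_uri` helper and the example values (`owner`, `mynamespace`, `/crm/listener/`) are hypothetical and not part of the CRM SDK sample.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ListenerSettings:
    """The four app.config values from the post (this class is hypothetical)."""
    issuer_name: str        # IssuerName: management key name
    issuer_secret: str      # IssuerSecret: current management key
    service_namespace: str  # ServiceNamespace: namespace from appfabric.azure.com
    service_path: str       # ServicePath: path segment of the Service Bus URL

    def validate(self) -> None:
        """Fail fast if any value was left blank in app.config."""
        for f in fields(self):
            if not getattr(self, f.name).strip():
                raise ValueError(f"{f.name} must be set in app.config")

    def service_uri(self, scheme: str = "https") -> str:
        """Compose <scheme>://<namespace>.servicebus.windows.net/<path>."""
        self.validate()
        path = self.service_path.strip("/")
        return f"{scheme}://{self.service_namespace}.servicebus.windows.net/{path}"


# Hypothetical values; substitute the ones from your own AppFabric account.
settings = ListenerSettings("owner", "<management key>", "mynamespace", "/crm/listener/")
print(settings.service_uri())
# prints https://mynamespace.servicebus.windows.net/crm/listener
```

Validating up front turns a blank app.config entry into an immediate, named error instead of a failed connection later.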

The code of that application is very simple: the example implements the IServiceEndpointPlugin behavior, which has only one method, Execute. The functionality of the sample app is even simpler: it prints the contents of the Microsoft Dynamics CRM execution context sent from the plug-in to the on-premises app.
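The shape of that contract is easy to mirror. The Python sketch below is a hypothetical analogue, not the CRM SDK: a one-method `execute` protocol stands in for `IServiceEndpointPlugin.Execute`, a plug-in prints whatever context it receives, and a small `dispatch` loop hands each message to the plug-in. The context keys in the example are placeholders.

```python
from typing import Any, Callable, Iterable, Mapping, Protocol


class ServiceEndpointPlugin(Protocol):
    """Stand-in for the single-method IServiceEndpointPlugin contract."""
    def execute(self, context: Mapping[str, Any]) -> None: ...


class ContextPrinter:
    """Like the SDK sample, simply prints the execution context it is given."""
    def __init__(self, out: Callable[[str], None] = print):
        self.out = out  # injectable so the output can be captured

    def execute(self, context: Mapping[str, Any]) -> None:
        self.out("----- CRM execution context -----")
        for key in sorted(context):
            self.out(f"{key}: {context[key]}")


def dispatch(plugin: ServiceEndpointPlugin, messages: Iterable[Mapping[str, Any]]) -> None:
    """Hand each message received from the Service Bus to the plug-in, in arrival order."""
    for message in messages:
        plugin.execute(message)


# Placeholder context, loosely modelled on what a Create message might carry.
dispatch(ContextPrinter(), [{"MessageName": "Create", "PrimaryEntityName": "account"}])
```

Keeping the plug-in behind a one-method interface means the listener plumbing never changes; only the body of `execute` does.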

Despite the simplicity of the example, it is a very “powerful” solution from a business point of view: we integrated CRM 2011 Online with an on-premises app without much hassle. Happy coding/integrating! :)

You can download the source code from here.


Windows Azure Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Mary Jo Foley (@maryjofoley) reported Microsoft Research delivers cloud development kit for Windows Phone 7 in a 1/27/2011 post to ZDNet’s All About Microsoft blog:

Microsoft Research has made available for download a developer preview of its Windows Phone 7 + Cloud Services Software Development Kit (SDK).

The new SDK is related to Project Hawaii, a mobile research initiative which I’ve blogged about before. Hawaii is about using the cloud to enhance mobile devices. The “building blocks” for Hawaii applications/services include computation (Windows Azure); storage (Windows Azure); authentication (Windows Live ID); notification; client-back-up; client-code distribution and location (Orion).

The SDK is “for the creation of Windows Phone 7 (WP7) applications that leverage research services not yet available to the general public,” according to the download page.

The first two services that are part of the January 25 SDK are Relay and Rendezvous. The Relay Service is designed to enable mobile phones to communicate directly with each other, and to get around the limitation created by mobile service providers who don’t provide most mobile phones with consistent public IP addresses. The Rendezvous Service is a mapping service “from well-known human-readable names to endpoints in the Hawaii Relay Service.” These names may be used as rendezvous points that can be compiled into applications, according to the Hawaii Research page.
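A toy model shows why the two services pair up. In the Python sketch below, which is purely illustrative and not the Hawaii SDK's API, phones that lack a public IP address register with a relay and receive an endpoint id. The relay buffers messages for each endpoint until the phone polls for them, and the rendezvous service maps a well-known name to an endpoint so that the name, rather than the id, can be compiled into an app. All class and method names are hypothetical.

```python
import itertools
from collections import deque


class RelayService:
    """Toy relay: endpoints without public IPs poll the relay for buffered messages."""
    def __init__(self):
        self._next_id = itertools.count(1)
        self._mailboxes = {}

    def register(self) -> int:
        endpoint = next(self._next_id)
        self._mailboxes[endpoint] = deque()
        return endpoint

    def send(self, to_endpoint: int, payload: str) -> None:
        if to_endpoint not in self._mailboxes:
            raise KeyError(f"unknown endpoint {to_endpoint}")
        self._mailboxes[to_endpoint].append(payload)

    def receive(self, endpoint: int) -> list:
        """Drain and return everything queued for this endpoint."""
        mailbox = self._mailboxes[endpoint]
        messages = list(mailbox)
        mailbox.clear()
        return messages


class RendezvousService:
    """Toy rendezvous: well-known, human-readable names -> relay endpoints."""
    def __init__(self):
        self._names = {}

    def bind(self, name: str, endpoint: int) -> None:
        self._names[name] = endpoint

    def resolve(self, name: str) -> int:
        return self._names[name]


relay, rendezvous = RelayService(), RendezvousService()
alice, bob = relay.register(), relay.register()
rendezvous.bind("bobs-phone", bob)  # Bob publishes a well-known name
relay.send(rendezvous.resolve("bobs-phone"), "hello from Alice")
print(relay.receive(bob))  # prints ['hello from Alice']
```

Neither phone ever needs the other's IP address: both only talk outward to the relay, which is what works around carriers that do not hand out consistent public addresses.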

The Hawaii team is working on other services, which it plans to release in dev-preview form by the end of February 2011. These include a Speech-to-Text service that will take an English spoken phrase and return it as text, as well as an “OCR in the cloud” service that will take a photographic image that contains some text and return the text. “For example, given a JPEG image of a road sign, the service would return the text of the sign as a Unicode string,” the researchers explain.

Microsoft officials said earlier this week that the company sold 2 million Windows Phone 7 operating system licenses to OEMs last quarter, for them to put on phones and provide to the carriers. (This doesn’t mean 2 million Windows Phone 7s have been sold, just to reiterate.) Microsoft launched Windows Phone 7 in October in Europe. There are still no Windows Phone 7 phones available from Verizon or Sprint in the U.S. Microsoft and those carriers have said there will be CDMA Windows Phone 7s on those networks some time in 2011. …

Eric Nelson (@ericnel) pointed out A little gem from MPN: FREE online course on Architectural Guidance for Migrating Applications to Windows Azure Platform on 1/27/2011:

I know a lot of technical people who work in partners (ISVs, System Integrators etc).

I know that virtually none of them would think of going to the Microsoft Partner Network (MPN) learning portal to find some deep and high quality technical content. Instead they would head to MSDN, Channel 9, msdev.com etc.

I am one of those people :-)

Hence imagine my surprise when I stumbled upon this little gem, Architectural Guidance for Migrating Applications to Windows Azure Platform (your company, and hence your Live ID, needs to be a member of MPN, which is free to join).

This is first-class stuff, and it represents about 4 hours, which is really 8 if you stop and ponder :)

Course Structure

The course is divided into eight modules. Each module explores a different factor that needs to be considered as part of the migration process.

- Module 1: Introduction:

- This section provides an introduction to the training course, highlighting the value of the Windows Azure Platform for developers.

- Module 2: Dynamic Environment:

- This section goes into detail about the dynamic environment of the Windows Azure Platform. This session will explain the difference between current development states and the Windows Azure Platform environment, detail the functions of roles, and highlight development considerations to be aware of when working with the Windows Azure Platform.

- Module 3: Local State:

- This session details the local state of the Windows Azure Platform. This section details the different types of storage within the Windows Azure Platform (Blobs, Tables, Queues, and SQL Azure). The training will provide technical guidance on local storage usage, how to write to blobs, how to effectively use table storage, and other authorization methods.

- Module 4: Latency and Timeouts:

- This session goes into detail explaining the considerations surrounding latency, timeouts and how to assess an IT portfolio.

- Module 5: Transactions and Bandwidth:

- This session details the performance metrics surrounding transactions and bandwidth in the Windows Azure Platform environment. This session will detail the transactions and bandwidth costs involved with the Windows Azure Platform and mitigation techniques that can be used to properly manage those costs.

- Module 6: Authentication and Authorization:

- This session details authentication and authorization protocols within the Windows Azure Platform. This session will detail information around web methods of authorization, web identification, Access Control benefits, and a walkthrough of Windows Identity Foundation.

- Module 7: Data Sensitivity:

- This session details data considerations that users and developers will experience when placing data into the cloud. This section of the training highlights these concerns, and details the strategies that developers can take to increase the security of their data in the cloud.

- Module 8: Summary

- Provides an overall review of the course.

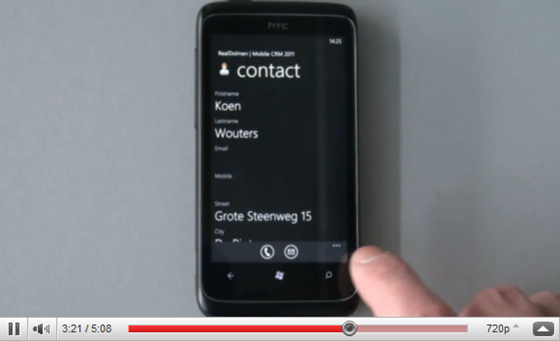

• Pradeep Viswav posted on 1/27/2011 a 00:05:08 RealDolmen Shows Dynamics CRM 2011 + Windows Phone 7 + Windows Azure demo video clip:

This is the video that played on the RealDolmen stand during the CRM 2011 Launch event in Belgium. In this demo application we integrate CRM Online with Windows Phone 7 using Windows Azure (the cloud).

Pradeep is a Microsoft Student Partner currently pursuing his Computer Science & Engineering degree.

• The Microsoft Case Studies Team posted Digital Marketing Startup [Kelly Street Digital] Switches Cloud Providers and Saves $4,200 Monthly on 1/21/2011 (missed when published):

Kelly Street Digital developed an application that tracks consumer interactions in digital marketing campaigns. For seven months, the company delivered the beta application in the cloud through Amazon Web Services and paid consultants to manage the environment and administer the database. However, when the service went down and no support was available, the company decided that it needed a faster, more reliable, and more cost-effective solution. After a successful pilot program, the company moved its application to the Windows Azure platform. Because its developers use Microsoft development tools that are integrated with Windows Azure, it was simple to migrate the database-centric application to Microsoft SQL Azure. The company pays only 16 percent of what it paid for Amazon Web Services, and it no longer has to pay consultants. Plus, its application runs much faster on Windows Azure.

Situation

Glen Knowles, the cofounder of Kelly Street Digital, started his company after working for many years directing marketing campaigns. While working at a major advertising firm in Australia, he was constantly frustrated because he was unable to track consumer movements when conducting campaigns for large customers. “All the tracking tools were focused on pages and clicks,” says Knowles. “I wanted to focus on tracking people.”

In 2008, Knowles founded Kelly Street Digital, a self-funded startup company with eight employees—six of whom are developers. The company created Campaign Taxi, an application available by subscription that helps customers track consumer interactions across multiple marketing campaigns. Designed for advertising and marketing agencies, Campaign Taxi features an application programming interface (API) that customers can use to easily set up digital campaigns, add functionality to their websites, store consumer information in a single database, and present the data in reports.

Kelly Street Digital formally launched Campaign Taxi on September 1, 2010, and the company updates the product every four weeks. It has eight customers in Australia, including one government customer who uses the application to track user-generated content on its website. Among its corporate customers is an online book reseller who uses the application to plot online registrations, responses to monthly email offers, and genre preferences of buyers.

“The goal of Campaign Taxi is to follow consumers from one campaign to the next with a cost-effective solution,” says Knowles. “We build a list of all the consumers’ interactions over time, and the information is aggregated, so you can get a single, cumulative view across all consumer activity, across multiple campaigns, and measure your effectiveness.” (See Figure 1.)

Figure 1. The Campaign Taxi application aggregates consumer interactions during marketing campaigns.

When the company began developing Campaign Taxi, it ran the application with a local hosting company. It then intended to run the application in an on-premises data center. It made significant infrastructure purchases, including two instances of Microsoft SQL Server 2008 R2 data management software and two web servers. Then Kelly Street Digital discovered Amazon Web Services and, after a few trials, it moved the application to the Amazon cloud. “We liked the idea of scalability so that as volume of customer activity grew, we could virtually add more servers,” says Knowles. “Plus, the cost of Amazon Web Services was about the same as the on-premises hardware infrastructure.”

The Campaign Taxi beta application resided on Amazon Web Services for seven months. Kelly Street Digital purchased third-party software to manage instances of Campaign Taxi in the Amazon cloud. The company secured the services of a consultant based in the United States to set up and manage the cloud environment. It also hired a consultant database administrator to help with the database servers. Says Knowles, “Not only was it expensive to hire consultants, but it was unreliable because they sometimes had conflicting priorities and they lived in different time zones.”


In December 2009, a few days before the Christmas holiday, the instance of Campaign Taxi in the Amazon cloud stopped running. The cloud consultant that Kelly Street Digital had hired to manage its environment was on vacation in Paris, France. “I couldn’t call Amazon, and they provided no support options,” says Knowles. “The best we could do was post on the developer forum. When you rely on the developer community for support, you can’t rely on them at Christmas time because they’re on holiday.”

In general, Kelly Street Digital felt it needed a faster and more reliable cloud solution. “If the Campaign Taxi API is down, our customers’ sites don’t work,” says Knowles. “If they’re relying on our application for competition entries, consumer registrations, or any other functionality, we’ve broken their site. I did not want Kelly Street Digital to be managing servers and patching software.”

Solution

In April 2010, when the Microsoft cloud-computing platform, Windows Azure, became available for technology preview, Kelly Street Digital decided to try it. It conducted a two-week pilot program with Campaign Taxi on the Windows Azure platform, which provides developers with on-demand compute and storage to host, scale, and manage web applications on the Internet through Microsoft data centers.

The first thing Kelly Street Digital noticed during the pilot program was that the response time of its application and API was significantly faster with Windows Azure than it was with Amazon Web Services. Based on this improved latency alone, the company decided to move Campaign Taxi to the Windows Azure platform. It estimated that the process would take six weeks, but it took one developer only three weeks to migrate the application. By June 2010, Kelly Street Digital was running the application, which was still in beta, in the Windows Azure cloud.

With the application on the Windows Azure platform, Kelly Street Digital can take advantage of familiar tools and well-established technologies, and it can deploy its application in minutes. It created Campaign Taxi by using the Microsoft .NET Framework, a software framework that supports several programming languages and is compatible with Windows Azure. The company’s developers write code in Microsoft Visual Studio 2010, an integrated development environment, and collaborate by using Microsoft Visual Studio Team Foundation Server 2010, an application lifecycle management solution that is used to manage development projects with tools for source control, data collection, reporting, and project tracking. “With Windows Azure, you press a button to test the application in the staging environment,” says Knowles. “Then you press another button to put the application into production in the cloud. It’s seamless.”

When Kelly Street Digital needed to rework Campaign Taxi to run on Microsoft SQL Azure, which provides database capabilities as a fully managed service, it sent its lead developer to a four-hour training session through Microsoft BizSpark, a program that provides software startup companies with developer resources. The developer then wrote a script that quickly ported the application’s relational database to SQL Azure. “The migration from Microsoft SQL Server to Microsoft SQL Azure was quite straightforward because they’re very similar,” says Knowles. “You have to code around a few things, but they are basically the same application.”

When Kelly Street Digital needed another instance of the application while it was using Amazon Web Services, it had to commission a second instance of Microsoft SQL Server and have a database consultant configure the mirroring of data. With SQL Azure, however, Kelly Street Digital can scale the application automatically in the cloud without adding more hardware. The company uses Blob storage in Windows Azure to import consumer data and temporarily store its customers’ uploaded data files. It also employs Blob storage to store backups of SQL Azure.


Although it still backs up its database locally each night to conform to best practices, Kelly Street Digital has yet to experience any data loss since migrating its application to Windows Azure. “One thing that Microsoft is really clear about is that you should always have two instances of your application in the cloud, but at first we had only one,” says Knowles. “When installing patches, we always have one instance running and available to customers in the cloud.”
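The two-instance advice comes down to never taking every instance out of service at once. The Python sketch below illustrates that rule with a hypothetical `rolling_patch` helper that patches instances one at a time. It is a simplification for illustration, not a description of how the Windows Azure fabric actually schedules updates, and the instance names are placeholders.

```python
from typing import Callable, Dict


def rolling_patch(instances: Dict[str, bool], patch: Callable[[str], None]) -> None:
    """Patch instances one at a time; `instances` maps instance name -> currently serving?"""
    if len(instances) < 2:
        raise ValueError("run at least two instances to patch without downtime")
    for name in list(instances):
        instances[name] = False          # take this instance out of rotation
        if not any(instances.values()):  # guard: someone must still serve customers
            instances[name] = True
            raise RuntimeError("no instance left serving customers")
        patch(name)
        instances[name] = True           # back in service before the next one goes down


# Hypothetical two-instance deployment; record how many instances serve during each patch.
instances = {"instance-0": True, "instance-1": True}
serving_during_patch = []
rolling_patch(instances, lambda name: serving_during_patch.append(sum(instances.values())))
print(serving_during_patch)  # prints [1, 1]
```

With a single instance there is no way to satisfy the guard, which is why the helper refuses to run at all rather than patching with downtime.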

Benefits

Since Kelly Street Digital moved its Campaign Taxi application to the Windows Azure platform, it benefits from significantly reduced costs, faster run time and increased speed of deployment, a familiar development environment, reliable service, and built-in scalability. “Windows Azure is an unbelievable product,” says Knowles. “I’m an evangelist for it in my network of startups. We’ve chosen this cloud platform and we’re sticking with it.”

Decreased Costs

Kelly Street Digital paid U.S.$4,970 each month for Amazon Web Services; the cost of subscribing to the Windows Azure platform is only 16 percent of that cost ($795 a month) for the same configuration. “The savings we achieved by switching to Windows Azure is just outstanding,” says Knowles. “I can use the annual cost savings to pay a developer’s salary.” Additionally, the company no longer has to pay contractors to maintain its database or manage the Amazon Web Services environment, for an average savings of $600 a month. Kelly Street Digital staff members can now focus on improving the application. “With the Windows Azure platform, we don’t have to manage anything,” says Knowles. “We don’t have to manage the hosting environment. We don’t have to manage the databases. We just don’t have those discussions anymore. That’s a huge cost savings.”
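The percentages quoted above follow directly from the monthly figures in the case study. A quick Python check, using only the numbers in this paragraph:

```python
AWS_MONTHLY = 4970          # U.S.$ per month on Amazon Web Services
AZURE_MONTHLY = 795         # U.S.$ per month on Windows Azure, same configuration
CONSULTANTS_MONTHLY = 600   # average monthly consultant fees no longer paid

azure_share = AZURE_MONTHLY / AWS_MONTHLY                  # fraction of the old bill
hosting_savings = AWS_MONTHLY - AZURE_MONTHLY              # monthly hosting savings
annual_hosting_savings = 12 * hosting_savings
annual_total_savings = 12 * (hosting_savings + CONSULTANTS_MONTHLY)

print(f"Azure costs {azure_share:.0%} of the AWS bill")  # prints Azure costs 16% of the AWS bill
print(hosting_savings, annual_hosting_savings, annual_total_savings)  # prints 4175 50100 57300
```

The $4,175 monthly hosting difference is the headline's "$4,200 monthly"; adding the $600 in consultant fees brings the yearly figure to $57,300.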

Kelly Street Digital takes advantage of the Microsoft account tools that provide up-to-date reports on how much an organization is spending on cloud computing each month. Unlike Amazon, Microsoft offers pricing for Windows Azure in Australian dollars, so the company avoids the uncertainty of currency fluctuations that it used to experience. “The Australian dollar goes up and down like a yo-yo,” says Knowles. “If you’re a startup—especially if you’re using a scalable resource—you need to accurately predict your cash flow requirements. I have to know what it’s going to cost.”

Table 1. Kelly Street Digital saves 68.1 percent with the Windows Azure platform.

Increased Speed

Everyone at Kelly Street Digital was surprised at how much faster the Campaign Taxi application worked on the Windows Azure platform compared to its running time on Amazon Web Services. Says Knowles, “For that kind of improved latency, we would have paid a premium to convert to the Windows Azure platform.”

The increased speed of deployment impressed the team even more. “When we used Amazon Web Services, it took a whole afternoon to deploy our application,” says Knowles. “Now we can deploy our application by using Visual Studio Team Foundation Server and it takes maybe 20 minutes.”

Tightly Integrated Technologies

Kelly Street Digital takes advantage of familiar and reliable Microsoft technologies that make it simple to sustain a rapid development cycle for Campaign Taxi. Because they’re using familiar development tools, the company’s developers can focus on enhancing the application. “The integration of Windows Azure with Microsoft development tools is brilliant,” says Knowles. “The .NET Framework development environment is remarkably mature. If you have Visual Studio, Team Foundation Server, and the cloud, you’re in business.”

Improved Reliability

Kelly Street Digital has confidence in the service level agreement and 24-hour support that comes with the Windows Azure platform. “Our business lives and dies on the reliability of our service,” says Knowles. “With the Windows Azure platform, all system administration issues are gone. We control the most important thing: our application. The cloud takes care of the rest.”

Enhanced Scalability

The company also makes use of the built-in scalability that comes with the Windows Azure platform to store ever-increasing quantities of consumer interaction data. Kelly Street Digital expects storage requirements to double each month for the next year. “With Windows Azure, we don’t have to worry about scalability because it’s automatic,” says Knowles. “Plus, I don’t have to worry about how fast the company grows. I can embrace growth because scalability is built in.”
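Doubling every month compounds quickly. The short Python sketch below works through what "double each month for the next year" implies; the starting size of 1 unit is an arbitrary assumption, since the case study gives no figure.

```python
def projected_storage(start_size, months):
    """Storage needed after `months` of doubling every month, starting from start_size."""
    return start_size * 2 ** months


# Hypothetical starting point of 1 unit (for example 1 GB); the case study gives no figure.
for month in (1, 6, 12):
    print(month, projected_storage(1, month))
```

After 12 months the requirement is 2^12 = 4,096 times the starting size, which illustrates why built-in scalability matters so much to the company.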

<Return to section navigation list>

Visual Studio LightSwitch

Beth Massi (@bethmassi) described How To Send HTML Email from a LightSwitch Application in a 1/27/2011 post:

A while back Paul Patterson wrote an awesome step-by-step blog post, based on a forum question, on how to send an automated email using System.Net.Mail from LightSwitch. If you missed it, here it is:

Microsoft LightSwitch – Send an Email from LightSwitch

This is a great solution if you want to send email in response to some event happening in the data update pipeline on the server side. In his example he shows how to send a simple text-based email using an SMTP server in response to a new appointment being inserted into the database. In this post I want to show you how you can create richer HTML mails from data and send them via SMTP. I also want to present a client-side solution that creates an email using the Outlook client which allows the user to see and modify the email before it is sent.

Sending Email via SMTP

As Paul explained, all you need to do to send an SMTP email from the LightSwitch middle-tier (server side) is switch to File View on the Solution Explorer and add a class to the Server project. Ideally you’ll want to put the class in the UserCode folder to keep things organized.

TIP: If you don’t see a UserCode folder that means you haven’t written any server rules yet. To generate this folder just go back and select any entity in the designer, drop down the “Write Code” button on the top right, and select one of the server methods like entity_Inserted.

The basic code to send an email is simple. You just need to specify the SMTP server, user id, password and port. TIP: If you only know the user ID and password then you can try using Outlook 2010 to get the rest of the info for you automatically.
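For readers outside .NET, the same pattern (configure the server, port and credentials, then flag the body as HTML if it contains an <html> tag) can be sketched with Python's standard smtplib and email packages. This is an illustrative analogue, not part of Beth's LightSwitch solution; the server name and credentials are hypothetical placeholders.

```python
import smtplib
from email.message import EmailMessage

# Hypothetical placeholder settings; replace with your own SMTP server details
SMTP_SERVER = "smtp.mydomain.com"
SMTP_USER = "myemail@mydomain.com"
SMTP_PASSWORD = "mypassword"
SMTP_PORT = 25


def build_message(send_from, send_to, subject, body):
    """Build a message, marking it as HTML when the body contains an <html> tag."""
    msg = EmailMessage()
    msg["From"] = send_from
    msg["To"] = send_to
    msg["Subject"] = subject
    if "<html>" in body.lower():
        msg.set_content(body, subtype="html")  # Content-Type: text/html
    else:
        msg.set_content(body)  # Content-Type: text/plain
    return msg


def send_mail(send_from, send_to, subject, body):
    """Send the message through the configured SMTP server using login credentials."""
    msg = build_message(send_from, send_to, subject, body)
    with smtplib.SMTP(SMTP_SERVER, SMTP_PORT) as smtp:
        smtp.login(SMTP_USER, SMTP_PASSWORD)
        smtp.send_message(msg)
```

Splitting message construction from sending keeps the HTML check easy to verify without a live server.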

Notice in my SMTPMailHelper class I’m doing a quick check to see whether the body parameter contains HTML and if so, I set the appropriate mail property.

```vb
Imports System.Net
Imports System.Net.Mail

Public Class SMTPMailHelper
    ' Replace these placeholder values with your own SMTP server settings
    Const SMTPServer As String = "smtp.mydomain.com"
    Const SMTPUserId As String = "myemail@mydomain.com"
    Const SMTPPassword As String = "mypassword"
    Const SMTPPort As Integer = 25

    Public Shared Sub SendMail(ByVal sendFrom As String, ByVal sendTo As String, ByVal subject As String, ByVal body As String)
        Dim fromAddress = New MailAddress(sendFrom)
        Dim toAddress = New MailAddress(sendTo)
        Dim mail As New MailMessage

        With mail
            .From = fromAddress
            .To.Add(toAddress)
            .Subject = subject
            ' Mark the message as HTML if the body contains an <html> tag
            If body.ToLower.Contains("<html>") Then
                .IsBodyHtml = True
            End If
            .Body = body
        End With

        Dim smtp As New SmtpClient(SMTPServer, SMTPPort)
        smtp.Credentials = New NetworkCredential(SMTPUserId, SMTPPassword)
        smtp.Send(mail)
    End Sub
End Class
```

Creating HTML from Entity Data

Now that we have the code to send an email I want to show you how we can quickly generate HTML from entity data using Visual Basic’s XML literals. (I love XML literals and have written about them many times before.) If you are new to XML literals I suggest starting with this article and this video. To use XML literals you need to make sure you have an assembly reference to System.Core, System.Xml, and System.Xml.Linq.

What I want to do is create an HTML email invoice for an Order entity that has child Order_Details. First I’ve made my life simpler by adding computed properties to the Order_Detail and Order entities that calculate line item and order totals, respectively. The code for these computed properties is as follows:

```vb
Public Class Order_Detail
    Private Sub LineTotal_Compute(ByRef result As Decimal)
        ' Calculate the line item total for each Order_Detail
        result = (Me.Quantity * Me.UnitPrice) * (1 - Me.Discount)
    End Sub
End Class

Public Class Order
    Private Sub OrderTotal_Compute(ByRef result As Decimal)
        ' Add up all the LineTotals on the Order_Details collection for this Order
        result = Aggregate d In Me.Order_Details Into Sum(d.LineTotal)
    End Sub
End Class
```

Next I want to send an automated email when the Order is inserted into the database. Open the Order entity in the designer, drop down the “Write Code” button on the top right, and select Orders_Inserted to generate the method stub. To generate HTML, all you need to do is type well-formed XHTML into the editor and use embedded expressions to pull the data out of the entities.

```vb
Public Class NorthwindDataService
    Private Sub Orders_Inserted(ByVal entity As Order)
        Dim toEmail = entity.Customer.Email

        If toEmail <> "" Then
            Dim fromEmail = entity.Employee.Email
            Dim subject = "Thank you for your order!"

            ' XML literal: well-formed XHTML with embedded expressions
            Dim body = <html>
                <body style="font-family: Arial, Helvetica, sans-serif;">
                    <p><%= entity.Customer.ContactName %>, thank you for your order!<br></br>
                    Order date: <%= FormatDateTime(entity.OrderDate, DateFormat.LongDate) %></p>
                    <table border="1" cellpadding="3" style="font-family: Arial, Helvetica, sans-serif;">
                        <tr>
                            <td><b>Product</b></td>
                            <td><b>Quantity</b></td>
                            <td><b>Price</b></td>
                            <td><b>Discount</b></td>
                            <td><b>Line Total</b></td>
                        </tr>
                        <%= From d In entity.Order_Details
                            Select <tr>
                                <td><%= d.Product.ProductName %></td>
                                <td align="right"><%= d.Quantity %></td>
                                <td align="right"><%= FormatCurrency(d.UnitPrice, 2) %></td>
                                <td align="right"><%= FormatPercent(d.Discount, 0) %></td>
                                <td align="right"><%= FormatCurrency(d.LineTotal, 2) %></td>
                            </tr> %>
                        <tr>
                            <td></td>
                            <td></td>
                            <td></td>
                            <td align="right"><b>Total:</b></td>
                            <td align="right"><b><%= FormatCurrency(entity.OrderTotal, 2) %></b></td>
                        </tr>
                    </table>
                </body>
            </html>

            SMTPMailHelper.SendMail(fromEmail, toEmail, subject, body.ToString)
        End If
    End Sub
End Class
```

The trick is to make sure your HTML looks like XML (i.e., well-formed begin/end tags); then you can use embedded expressions (the <%= syntax) to embed Visual Basic code into the HTML. I’m using LINQ to query the order details to populate the rows of the HTML table. (BTW, you can also query HTML with a couple of tricks, as I show here.)
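The same idea (compute line totals, then let a loop produce one table row per order detail inside well-formed markup) can be sketched in Python with the standard xml.etree.ElementTree module. This is an illustrative analogue of the XML-literal approach, not LightSwitch code; the tuple shape used for order details and the function names are assumptions.

```python
import xml.etree.ElementTree as ET
from decimal import Decimal


def line_total(quantity, unit_price, discount):
    # Same formula as the LineTotal computed property:
    # (Quantity * UnitPrice) * (1 - Discount)
    return quantity * unit_price * (1 - discount)


def invoice_html(contact_name, details):
    """Return (html, order_total) for (product, quantity, unit_price, discount) tuples."""
    html = ET.Element("html")
    body = ET.SubElement(html, "body")
    ET.SubElement(body, "p").text = f"{contact_name}, thank you for your order!"
    table = ET.SubElement(body, "table", border="1", cellpadding="3")

    header = ET.SubElement(table, "tr")
    for title in ("Product", "Quantity", "Price", "Discount", "Line Total"):
        ET.SubElement(header, "td").text = title

    order_total = Decimal("0")
    # One <tr> per order detail, like the LINQ query inside the XML literal
    for product, quantity, unit_price, discount in details:
        total = line_total(quantity, unit_price, discount)
        order_total += total
        row = ET.SubElement(table, "tr")
        cells = (product, str(quantity), f"{unit_price:.2f}",
                 f"{discount:.0%}", f"{total:.2f}")
        for text in cells:
            ET.SubElement(row, "td").text = text

    # Summary row: three empty cells, then the label and the order total
    total_row = ET.SubElement(table, "tr")
    for _ in range(3):
        ET.SubElement(total_row, "td")
    ET.SubElement(total_row, "td").text = "Total:"
    ET.SubElement(total_row, "td").text = f"{order_total:.2f}"

    return ET.tostring(html, encoding="unicode", method="html"), order_total
```

Building the tree element by element guarantees well-formed output, which is the same property the VB XML literal enforces at compile time.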

So now when a new order is entered into the system an auto-generated HTML email is sent to the customer with the order details.

Sending Email via an Outlook Client

The above solution works well for sending automated emails, but what if you want to allow the user to modify the email before it is sent? In this case we need a solution that can be called from the LightSwitch UI. One option is to automate Microsoft Outlook; most people seem to use that popular email client, especially at my company ;-). Out of the box, LightSwitch has a really nice feature on data grids that lets you export them to Excel if running in full trust. We can add a similar Office productivity feature to our screen that auto-generates an email for the user using Outlook. This will allow them to modify it before it is sent.

We need a helper class on the client this time. Just like in the SMTP example above, add a new class via the Solution Explorer file view but this time select the Client project. This class uses COM automation, a feature of Silverlight 4 and higher. First we need to check if we’re running out-of-browser on a Windows machine by checking the AutomationFactory.IsAvailable property. Next we need to get a reference to Outlook, opening the application if it’s not already open. The rest of the code just creates the email and displays it to the user.

```vb
Imports System.Runtime.InteropServices.Automation

Public Class OutlookMailHelper
    Const olMailItem As Integer = 0
    Const olFormatPlain As Integer = 1
    Const olFormatHTML As Integer = 2

    Public Shared Sub CreateOutlookEmail(ByVal toAddress As String, ByVal subject As String, ByVal body As String)
        Try
            Dim outlook As Object = Nothing

            If AutomationFactory.IsAvailable Then
                Try
                    'Get the reference to the open Outlook App
                    outlook = AutomationFactory.GetObject("Outlook.Application")
                Catch ex As Exception
                    'If Outlook isn't open, then an error will be thrown.
                    ' Try to open the application
                    outlook = AutomationFactory.CreateObject("Outlook.Application")
                End Try

                If outlook IsNot Nothing Then
                    'Create the email
                    ' Outlook object model (OM) reference:
                    ' http://msdn.microsoft.com/en-us/library/ff870566.aspx
                    Dim mail = outlook.CreateItem(olMailItem)
                    With mail
                        If body.ToLower.Contains("<html>") Then
                            .BodyFormat = olFormatHTML
                            .HTMLBody = body
                        Else
                            .BodyFormat = olFormatPlain
                            .Body = body
                        End If
                        .Recipients.Add(toAddress)
                        .Subject = subject
                        .Save()
                        .Display()
                        '.Send()
                    End With
                End If
            End If
        Catch ex As Exception
            Throw New InvalidOperationException("Failed to create email.", ex)
        End Try
    End Sub
End Class
```

The code to call this is almost identical to the previous example. We use XML literals to create the HTML the same way. The only difference is that we want to call this from a command button on our OrderDetail screen. (Here’s how you add a command button to a screen.) The Execute method for the command button is where we add the code to generate the HTML email. I also want the button to be disabled if AutomationFactory.IsAvailable is False, which you check in the CanExecute method.

Here’s the code we need in the screen:

```vb
Private Sub CreateEmail_CanExecute(ByRef result As Boolean)
    ' Enable the button only when COM automation is available (out-of-browser on Windows)
    result = System.Runtime.InteropServices.Automation.AutomationFactory.IsAvailable
End Sub

Private Sub CreateEmail_Execute()
    'Create the html email from the Order data on this screen
    Dim toAddress = Me.Order.Customer.Email

    If toAddress <> "" Then
        Dim entity = Me.Order
        Dim subject = "Thank you for your order!"
        Dim body = <html>
            <body style="font-family: Arial, Helvetica, sans-serif;">
                <p><%= entity.Customer.ContactName %>, thank you for your order!<br></br>
                Order date: <%= FormatDateTime(entity.OrderDate, DateFormat.LongDate) %></p>
                <table border="1" cellpadding="3" style="font-family: Arial, Helvetica, sans-serif;">
                    <tr>
                        <td><b>Product</b></td>
                        <td><b>Quantity</b></td>
                        <td><b>Price</b></td>
                        <td><b>Discount</b></td>
                        <td><b>Line Total</b></td>
                    </tr>
                    <%= From d In entity.Order_Details
                        Select <tr>
                            <td><%= d.Product.ProductName %></td>
                            <td align="right"><%= d.Quantity %></td>
                            <td align="right"><%= FormatCurrency(d.UnitPrice, 2) %></td>
                            <td align="right"><%= FormatPercent(d.Discount, 0) %></td>
                            <td align="right"><%= FormatCurrency(d.LineTotal, 2) %></td>
                        </tr> %>
                    <tr>
                        <td></td>
                        <td></td>
                        <td></td>
                        <td align="right"><b>Total:</b></td>
                        <td align="right"><b><%= FormatCurrency(entity.OrderTotal, 2) %></b></td>
                    </tr>
                </table>
            </body>
        </html>

        OutlookMailHelper.CreateOutlookEmail(toAddress, subject, body.ToString)
    Else
        Me.ShowMessageBox("This customer does not have an email address", "Missing Email Address", MessageBoxOption.Ok)
    End If
End Sub
```

Now when the user clicks the Create Email button on the ribbon, the HTML email is created and the Outlook mail message window opens, allowing the user to make changes before clicking Send.

I hope I’ve provided a couple of options for sending HTML emails in your LightSwitch applications. Use the first option (SMTP) when you want automated emails sent from the server side. Use the second option (the Outlook client) when you want to interact with users who have Outlook installed and LightSwitch is running out-of-browser.

Mauricio Rojas described Restoring simple lookup capabilities to Silverlight ListBox in a 1/27/2011 post:

The VB6 and WinForms ListBox controls have a built-in capability to provide a simple data lookup, but the Silverlight ListBox does not.

So if you have a list with items:

- Apple

- Airplane

- Blueberry

- Bee

- Car

- Zoo

- Animal Planet

If your current item is Apple and you press A, the next current item will be Airplane:

- Apple

- Airplane (selected)

- Blueberry

- Bee

- Car

- Zoo

- Animal Planet

The next time you press A, the next current item will be Animal Planet:

- Apple

- Airplane

- Blueberry

- Bee

- Car

- Zoo

- Animal Planet (selected)

The next time you press A, the selection wraps around and the current item will be Apple again.

To do this in Silverlight you need to add an event handler. You can either create a user control that includes this event handler and replace your ListBox with the custom one, or just add the event handler to the ListBoxes that need it. The code you need is the following:

```csharp
// Requires: using System; using System.Windows.Controls; using System.Windows.Input;
void listbox1_KeyDown(object sender, KeyEventArgs e)
{
    // Nothing to search from if no item is selected
    if (this.listbox1.SelectedItem == null)
        return;

    String selectedText = this.listbox1.SelectedItem.ToString();
    // Letter keys have single-character names such as "A"
    String keyAsString = e.Key.ToString();
    int maxItems = listbox1.Items.Count;

    if (!String.IsNullOrEmpty(selectedText) && !String.IsNullOrEmpty(keyAsString)
        && keyAsString.Length == 1 && maxItems > 1)
    {
        int currentIndex = this.listbox1.SelectedIndex;
        // Start with the item after the current one, wrapping around the end
        int nextIndex = (currentIndex + 1) % maxItems;
        while (currentIndex != nextIndex)
        {
            if (this.listbox1.Items[nextIndex].ToString().ToUpper().StartsWith(keyAsString))
            {
                this.listbox1.SelectedIndex = nextIndex;
                return;
            }
            nextIndex = (nextIndex + 1) % maxItems;
        }
        // NOTE: There is a slightly different behavior here. For example, in WinForms,
        // if only one item starts with A and you press A, the SelectedIndex does not
        // change but a SelectedIndexChanged event (the equivalent of SelectionChanged
        // in Silverlight) is still raised; this handler does not reproduce that.
    }
}
```
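The lookup rule itself is independent of Silverlight: starting just after the selected item, walk the list once (wrapping at the end) and stop at the first item whose text starts with the pressed letter. Here is a minimal Python sketch of that rule, with a hypothetical next_match function standing in for the event handler; it assumes the key arrives as a single letter, as the Length == 1 check in the C# handler does.

```python
def next_match(items, current_index, key):
    """Index of the next item after current_index whose text starts with key.

    Wraps around the end of the list; returns current_index unchanged when
    nothing is selected, the key is not a single character, or no other item matches.
    """
    n = len(items)
    if current_index < 0 or n < 2 or len(key) != 1:
        return current_index
    key = key.upper()
    i = (current_index + 1) % n
    while i != current_index:
        if items[i].upper().startswith(key):
            return i
        i = (i + 1) % n
    return current_index


items = ["Apple", "Airplane", "Blueberry", "Bee", "Car", "Zoo", "Animal Planet"]
index = 0  # "Apple" is selected
for _ in range(3):
    index = next_match(items, index, "A")
    print(items[index])  # prints Airplane, then Animal Planet, then Apple
```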

Mihail Mateev explained Using Visual Studio LightSwitch Applications with WCF RIA Services in a 1/27/2011 post to the Infragistics Community blog:

LightSwitch is primarily targeted at developers who need to rapidly produce business applications.

Visual Studio LightSwitch applications can connect to a variety of data sources. For now they can connect to database servers, SharePoint lists and WCF RIA Services.

Connecting to WCF RIA Services requires some settings that are not available "out of the box." Despite this, it is a very common scenario, and developers want a sample that shows, step by step, how to create a LightSwitch application that uses WCF RIA Services as a data source. That is the reason for this walkthrough.

Demo Application:

Requirements:

- Visual Studio LightSwitch Beta 1

- SQL Server 2008 Express or a higher edition

Steps to implement the application:

- Create a sample database (HYPO database)

- Create a WCF RIA Services Class Library

- Add an ADO.NET Entity Data Model

- Add a Domain Service Class

- Create a Visual Studio LightSwitch application

- Add a WCF RIA Services data source

- Create a screen, using a WCF RIA Services data source

Steps to Reproduce:

- Create a sample database

Create a database, named HYPO

Define the fields in the table “hippos”.

- Create a WCF RIA Services Class Library, named RIAServicesLibrary1

- Add an ADO.NET Entity Data Model

Delete generated Class1 and add an ADO.NET Entity Data Model using data from database “HYPO”.

- Add a Domain Service Class

Create a Domain Service Class, named HippoDomainService using the created ADO.NET Entity Data Model (HYPOEntityModel).

LightSwitch requires a WCF RIA Services data source to have a default query and a key for each entity.

Modify the HippoDomainService class in HippoDomainService.cs: add the attribute [Query(IsDefault=true)] to the HippoDomainService.GetHippos() method.

```csharp
[Query(IsDefault=true)]
public IQueryable<hippos> GetHippos()
{
    return this.ObjectContext.hippos;
}
```

Add the attribute [Key] to the ID property of the hippos metadata class (HippoDomainService.metadata.cs):

```csharp
internal sealed class hipposMetadata
{
    // Metadata classes are not meant to be instantiated.
    private hipposMetadata()
    {
    }

    public Nullable<int> Age { get; set; }

    [Key]
    public long ID { get; set; }

    public string NAME { get; set; }

    public string Region { get; set; }

    public Nullable<decimal> Weight { get; set; }
}
```

- Create a Visual Studio LightSwitch application

Add a new LightSwitch application (C#) to the solution that contains the WCF RIA Services Class Library:

- Add a WCF RIA Services data source

Add a reference to RIAServicesLibrary1.Web and add HippoDomainService as data source.

Add the entity “hippos” as a data source object:

Check the “hippos” properties:

Switch the view type for the LightSwitch application to “File View” and show hidden files.

Open the App.config file in RIAServicesLibrary1.Web and copy the connection string to Web.config in the LightSwitch ServerGenerated project.

Connection string:

```xml
<connectionStrings>
  <add name="HYPOEntities"
       connectionString="metadata=res://*/HYPOEntityModel.csdl|res://*/HYPOEntityModel.ssdl|res://*/HYPOEntityModel.msl;provider=System.Data.SqlClient;provider connection string=&quot;Data Source=.\SQLEXPRESS;Initial Catalog=HYPO;Integrated Security=True;MultipleActiveResultSets=True&quot;"
       providerName="System.Data.EntityClient" />
</connectionStrings>
```

- Create a screen, using a WCF RIA Services data source

Add a new Search Data Screen named Searchhippos, based on the hippos entity:

Check the screen properties:

Run the application: hippos data is displayed properly.

Modify hippos data.

You can download the source code of the demo application here: LSRIADemo.zip

Avkash Chauhan published a workaround for a System.ServiceModel.Channels.ServiceChannel exception during an ASP.NET application upgrade from Windows Azure SDK 1.2 to 1.3 on 1/27/2011:

When you upgrade your ASP.NET-based application from Windows Azure SDK 1.2 to 1.3, you may hit the following exception:

```
System.ServiceModel.CommunicationObjectFaultedException was unhandled
  Message=The communication object, System.ServiceModel.Channels.ServiceChannel, cannot be used for communication because it is in the Faulted state.
  Source=mscorlib
  StackTrace:
    Server stack trace:
       at System.ServiceModel.Channels.CommunicationObject.Close(TimeSpan timeout)
    Exception rethrown at [0]:
       at System.Runtime.Remoting.Proxies.RealProxy.HandleReturnMessage(IMessage reqMsg, IMessage retMsg)
       at System.Runtime.Remoting.Proxies.RealProxy.PrivateInvoke(MessageData& msgData, Int32 type)
       at System.ServiceModel.ICommunicationObject.Close(TimeSpan timeout)
       at System.ServiceModel.ClientBase`1.System.ServiceModel.ICommunicationObject.Close(TimeSpan timeout)
       at Microsoft.WindowsAzure.Hosts.WaIISHost.Program.Main(String[] args)
  InnerException:
```

This is a known issue with Windows Azure SDK 1.3 when an ASP.NET-based application is upgraded from SDK 1.2 to 1.3.

There are two potential causes for this problem:

1. The Web.config file is marked read-only in the compute emulator

2. Multiple role instances write to the same configuration file in the compute emulator

The problem is described in detail, along with potential workarounds, at:

http://msdn.microsoft.com/en-us/library/gg494981.aspx
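For the first cause, a quick local check is to see whether Web.config has lost its write permission and restore it. The sketch below is a hypothetical Python helper, not the workaround documented on MSDN; the function name and the path you pass in are assumptions about your project layout.

```python
import os
import stat


def clear_read_only(path):
    """Make path writable; return True if a change was needed, False if it already was."""
    mode = os.stat(path).st_mode
    if mode & stat.S_IWRITE:
        return False  # already writable, nothing to do
    # On Windows, adding S_IWRITE clears the read-only attribute;
    # on POSIX systems it adds the owner-write permission bit.
    os.chmod(path, mode | stat.S_IWRITE)
    return True
```

Running it against your web role's Web.config before starting the compute emulator rules out the read-only cause; the multiple-instance cause needs the changes described in the MSDN article.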

Please refer to the following link for another potential issue when upgrading from Windows Azure SDK 1.2 to 1.3:

Campbell Gunn answered What are LightSwitch Extensions? in a 1/21/2011 thread of the LightSwitch Extensibility forum:

Extensions provide custom content or data access that is not available in the LightSwitch product.

Extensions are a combination of code (in either VB or C#) and Entity Framework metadata (model data) that describes the custom content or data access you create and then add to LightSwitch.

You can get an understanding of what and how extensions are used, by downloading the LightSwitch Training kit at: http://www.microsoft.com/downloads/en/details.aspx?FamilyID=ac1d8eb5-ac8e-45d5-b1e3-efb8e4e3ebd1&displaylang=en

It currently shows two extension types: one is a custom control extension and the other is a custom data source.

In our next release we will have more extension types available for you to develop on.

Campbell is Program Manager - Microsoft Visual Studio LightSwitch - Extensibility

Adron Hall (@adronbh) posted Cuttin’ Teeth With NuGet on 1/26/2011:

If you’re in the .NET dev space you might have heard about the release of NuGet. This is an absolutely great tool for pulling dependencies into a Visual Studio 2010 project. (It can also help a lot in Visual Studio 2008, and maybe even earlier versions.)

Instead of writing up another tutorial I decided I’d put together specific links for putting together packages, installing packages, and other activities around using this tool. In order of importance in getting started, I’ve itemized the list below.

- The first site to check out is the NuGet CodePlex site.

- Check out the NuGet Gallery.

- Then check out the Getting Started page.

Once you’ve checked out all those sites and gotten rolling with NuGet, be sure to check out some of the blogs from the guys who have put time into the development and ongoing awesomeness of it!

- David Ebbo – Blog – @davidebbo

- Phil Haack – Haacked – @haack

That’s all I’ve got for now. Check out NuGet; if you code against the .NET stack, you owe it to yourself!

Also, some of my cohorts have put together a few pieces of information related to NuGet and tying it together a bit like Ruby on Rails gems:

<Return to section navigation list>

Windows Azure Infrastructure

• Dan Orlando posted Cloud Computing: Choosing a Provider for Platform as a Service (PaaS) on 1/27/2011:

I recently wrote a series of articles on cloud computing that will be published next month, and one thing really stood out about the current state of cloud computing. A few years ago, cloud computing was mostly an abstract concept with varying definitions depending on who you asked. Things have changed, though. It is evident that a large portion of the Information Technology and business sectors have gotten better at understanding cloud computing. However, when cloud computing is broken down into its three classifications, namely Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS), most people find it very hard to understand the differences.

The most prevalent confusion seems to be with Platform as a Service. I’ve seen IT professionals confuse Platform as a Service with Infrastructure as a Service so many times in the last few months that I lost track. As a result, the articles I wrote go into great detail on these classifications and their differences, and I will post links to them here when they are published. In the meantime, I thought I would post a brief description of Platform as a Service and the players involved to assist in our continued understanding of cloud computing and its three major classifications.

Platform as a Service is like a middle tier between infrastructure and software, consisting of what is known as a “solution stack.” The solution stack is what sets apart the companies that offer Platform as a Service. If you are a decision-maker for your company, this is something you will need to explore in greater depth before making a decision to jump on board the PaaS train. Let’s take a quick look at the top three contenders in the PaaS arena to give you an idea of the differences between them:

- Force.com. Force.com pages use a standard MVC design, HTML, and other Web technologies such as CSS, Ajax, Adobe Flash® and Adobe Flex®.

- Windows Azure™ platform. Microsoft’s cloud platform is built on the Microsoft .NET environment using the Windows Server® operating system and Microsoft SQL Server® as the database.

- Google App Engine. Google’s platform uses the Java™ and Python languages and the Google App Engine datastore.

In conducting research for this article, I took the opportunity to spend some time with Google App Engine, Force.com, and Windows Azure. With App Engine, you have a Java run time environment in which you can build your application using standard Java technologies, including the Java Virtual Machine (JVM), Java servlets, and the Java programming language—or any other language using a JVM-based interpreter or compiler, such as JavaScript or Ruby. In addition, App Engine includes a dedicated Python run time environment, with a Python interpreter and the Python standard library. The Java and Python run time environments support multi-tenant computing so that applications run without interference from other applications on the system.

With Force.com, you can use standard client-side technologies in an MVC design pattern. For example, if you’re using HTML with Ajax, your JavaScript behaviors and Ajax calls will be separate from your styling, which is held in CSS, and the HTML will hold your page layout structure. With Windows Azure, you’re using the Microsoft .NET Framework with a SQL Server database.

Hopefully this was helpful in providing you with a means for comparing PaaS competitors and understanding what is available to you. If you did find it useful, you will definitely want to read the articles I mentioned earlier. Although I cannot disclose any further information about these articles right now, I promise to post links the moment each one is published.

Dan missed the Windows Azure platform’s choice between Windows Azure NoSQL storage (tables, blobs and queues) and relational SQL Azure databases.

• Christian Weyer posted very important information about Network pipe capabilities of your Windows Azure Compute roles on 1/27/2011:

As this question shows up again and again:

here is some good data from a PDC10 session called Inside Windows Azure Virtual Machines about which network bandwidth is available to which kind of VMs:

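| VM Type | CPU Cores | Memory | Peak Bandwidth (Mbps) |
|---|---|---|---|
| Extra Small | 1 | 768 MB | 5 |
| Small | 1 | 1.75 GB | 100 |
| Medium | 2 | 3.50 GB | 200 |
| Large | 4 | 7.00 GB | 400 |
| XL | 8 | 14.0 GB | 800 |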
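To put the peak figures in perspective, the Python sketch below computes the best-case time to move 1 GB at each VM size's peak rate from the PDC10 figures. It assumes decimal units (1 GB = 8,000 megabits) and ignores protocol overhead and the speed of the far end, so real transfers will be slower.

```python
# Peak bandwidth per VM size in Mbps, from the PDC10 session figures
PEAK_MBPS = {
    "Extra Small": 5,
    "Small": 100,
    "Medium": 200,
    "Large": 400,
    "XL": 800,
}


def transfer_seconds(size_gb, vm_type):
    """Best-case seconds to move size_gb gigabytes (decimal GB) at the VM's peak rate."""
    megabits = size_gb * 1000 * 8  # 1 GB = 1,000 MB = 8,000 megabits
    return megabits / PEAK_MBPS[vm_type]


for vm in PEAK_MBPS:
    print(f"{vm}: {transfer_seconds(1, vm):.0f} s per GB")
```

At 1,600 seconds per gigabyte, the Extra Small instance is 20 times slower than a Small instance for bulk transfers.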

Hope this helps.

The Extra Small instance would be better named “Nano” when it comes to bandwidth.

Buck Woody (@buckwoody) explained Cloud Computing Pricing - It's like a Hotel in a 1/27/2011 post to his Carpe Data blog:

I normally don't go into the economics or pricing side of Distributed Computing, but I've had a few friends that have been surprised by a bill lately and I wanted to quickly address at least one aspect of it.

Most folks are used to buying software and owning it outright, like buying a car. We pay a lot for the car, and then we use it whenever we want. We think of the "cloud" services as a taxi: we'll just pay for the ride we take and no more. But it's not quite like that. It's actually more like a hotel.

When you subscribe to Azure using a free offering like the MSDN subscription, you don't have to pay anything for [a one-role] service. But when you create an instance of a Web or Compute Role, Storage, that sort of thing, you can think of it as checking into a hotel room. You get the key, you pay for the room. For Azure, bandwidth, CPU and so on are billed just as stated in the Azure Portal. So, in effect, there is a cost for the service and then a cost to use it, like water or power or any other utility.

Where this bit some folks is that they created an instance, played around with it, and then left it running. No one was using it, no one was on, so they thought they wouldn't be charged. But they were. It wasn't much, but it was a surprise. They had the hotel room key, but they weren't in the room, so to speak. To add to their frustration, they had to talk to someone on the phone to cancel the account.
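To make the hotel analogy concrete, here is a minimal Python sketch of the billing arithmetic: charges accrue for every hour an instance is deployed, regardless of traffic. The hourly rate below is a hypothetical figure chosen for illustration, not a quoted Windows Azure price, and the function name is an assumption.

```python
from decimal import Decimal


def compute_charge(instances, hours_deployed, hourly_rate):
    """Charge for deployed instances: billed per deployed hour, whether or not anyone uses them."""
    return instances * hours_deployed * hourly_rate


# Hypothetical rate for illustration only; check the Azure Portal for real pricing.
RATE = Decimal("0.12")

# One instance left running, idle, for a 30-day month
idle_month = compute_charge(1, 30 * 24, RATE)
print(idle_month)
```

Note that the number of requests never appears in the formula; only deleting the deployment stops the clock.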

I understand the frustration. Although we have all this spelled out in the sign up area, not everyone has the time to read through all that. I get that. So why not make this easier?

As an explanation, we bill for that time because the instance is still running, and we have to tie up resources to be available the second you want them, and that costs money. As far as being able to cancel from the portal, that's also something that needs to be clearer. You may not be aware that you can spin up instances using code - and so cancelling from the Portal would allow you to do the same thing. Since a mistake in code could erase all of your instances and the account, we make you call to make sure you're you and you really want to take it down.

Not a perfect system by any means, but we'll evolve this as time goes on. For now, I wanted to make sure you're aware of what you should do. By the way, you don't have to cancel your whole account to stop being billed. Just delete the instance from the portal and you won't be charged. You don't have to call anyone for that.

And just FYI - you can download the SDK for Azure and never even hit the online version at all for learning and playing around. No sign-up, no credit card, PO, nothing like that. In fact, that's how I demo Azure all the time. Everything runs right on your laptop in an emulated environment.

Tony Bailey (a.k.a. tbtechnet) posted Common Sense and Windows Azure Scale Up on 1/27/2011:

I enjoyed this [Common Sense] technical webinar given by Juan De Abreu. It covers using the Windows Azure platform for scale-up and burst-demand scenarios.

I like how this topic is covered from a real-world “we have really done this for clients” angle, and the deck is clear and concise, with some good background information too.

http://blog.getcs.com/2011/01/scalability-azure-webinar-success/

After you’ve watched Juan’s presentation, get a free Windows Azure platform account for 30-days. No credit card required: http://www.windowsazurepass.com/?campid=A8A1D8FB-E224-E011-9DDE-001F29C8E9A8

Promo code is TBBLIF

I’ve also come across more content on the scale-up scenario in this how-to lab: http://code.msdn.microsoft.com/azurescale

Kenneth van Sarksum reported RightScale will support Azure VMs in a 1/27/2011 post to the CloudComputing.info blog:

RightScale, which offers a management solution for Infrastructure-as-a-Service (IaaS), announced that it will support Azure VMs in its Cloud Management Platform product, The Register reports in an interview with CTO and founder Thorsten van Eicken.

RightScale currently supports a number of public IaaS clouds: Amazon EC2, GoGrid and FlexiScale. The company has already announced upcoming support for The Rackspace Cloud. It also supports private IaaS clouds that are managed by Eucalyptus, allowing customers to build and manage hybrid cloud architectures.

An early preview of the Azure VM role was released in December last year, and the full release is still expected in the first half of this year.

Robert McNeill discussed Dealing with Cloud Computing Sprawl in a 1/27/2011 post to the Saugatuck Technology blog:

Saugatuck research indicates that 65 percent or more of all NEW business application / solution deployments in the enterprise will be Cloud-based or Hybrid by 2015 (up from 15-20 percent in 2009) (SSR-834, Key SaaS, PaaS and IaaS Trends Through 2015 – Business Transformation via the Cloud, 13Jan2011). One implication is that by 2015, 25 percent or more of TOTAL enterprise IT workloads will be Cloud-based or Hybrid.

In order to more effectively manage Cloud procurement and reduce “Cloud and SaaS sprawl”, IT asset management practices must evolve to define, discover and manage cloud based assets. This becomes critical as Cloud Computing adoption moves from an opportunistic point solution strategy to one that is deeply embedded as part of an integrated hybrid infrastructure. Saugatuck hears of horror stories from unmanaged Cloud Computing procurement and poor operational disciplines. Consider the following:

SaaS projects are bypassing centralized procurement functions. Saugatuck believes that most organizations may be underestimating SaaS use by up to 25 percent (see Strategic Research Report, Enterprise Ready, or Not – SaaS Enters the Mainstream, SSR-460, 10July2008). We are aware of one client that has at least six salesforce.com instances, and our experience shows that this is not dissimilar to other large decentralized organizations. Do you have a process in place to standardize procurement in order to drive economies of procurement and support?

- Small department driven (silo’d) projects have grown into enterprise wide deployments enabling business processes and hosting corporate data in external data centers. Security, standards and management of cloud computing assets has not kept up the business adoption (see Strategic Perspective, Mitigating Risk in Cloud-Sourcing and SaaS: Certifications and Management Practices, MKT-660, 30Oct2009). Do you have visibility into the performance, reliability and security of your data and applications?

- Client and mobile management groups require improved security practices to deal with new smart devices, such as iPhones, that access not only email but also corporate Cloud-based applications. When users leave the organization, do you immediately cut off their access to critical information?

Saugatuck’s advice is to identify and implement repeatable practices for managing the delivery of IT and business processes enabled by both on-premises and Cloud IT. The IT management team must evolve and expand its role and responsibilities in response to the Cloud, providing information about the state of on-premises and Cloud IT to stakeholders such as risk, security, HR and vendor management.

New technology vendors will enter the market to focus on the technical challenges of managing Cloud IT. Conformity and Okta are two such vendors; both recently briefed us on how they address the challenges of identity and access management for Cloud-based solutions. But while IT organizations may add new tools to assist in discovering, provisioning and managing Cloud IT, the strategy and process for managing IT and business processes must remain coordinated across traditional on-premises and Cloud IT.

• Darryl K. Taft reported “As part of its Technical Computing initiative, Microsoft opens a new Technical Computing Labs project under its MSDN DevLabs banner” as a deck for his Microsoft Opens New Technical Computing Labs Project post of 1/26/2011 to eWeek.com’s Windows & Interoperability News blog:

As part of its Technical Computing initiative, Microsoft has launched a new effort known as Technical Computing Labs (TC Labs) for developers on Microsoft Developer Network’s (MSDN) DevLabs.

TC Labs provides developers with the opportunity to learn about Technical Computing technologies, get early versions of code and provide feedback to Microsoft. TC Labs is a new resource for developers to access early releases of Microsoft Technical Computing software.

According to the TC Labs page on DevLabs, Microsoft is bringing multiple technologies and services to bear with this initiative including parallel development tools in Visual Studio, distributed computing environments with Windows High Performance Computing (HPC) Server, cloud computing with Windows Azure, and a broad ecosystem of partner applications.

“Microsoft Technical Computing is focused on empowering a broader group of people in business, academia, and government to solve some of the world’s biggest challenges,” Microsoft said on the TC Labs site. “It delivers the tools to harness computing capacity to make better decisions, fuel product innovation, speed research and development, and accelerate time to market – including decoding genomes, rendering movies, analyzing financial risks, streamlining crash test simulations, modeling global climate solutions and other highly complex problems. Doing this efficiently, at scale, necessitates a comprehensive platform that integrates well with your existing IT environment.”

Microsoft TC Labs projects include Sho, which provides those who are working on Technical Computing-styled workloads an interactive environment for data analysis and scientific computing that lets you seamlessly connect scripts (in IronPython) with compiled code (in .NET) to enable fast and flexible prototyping.

In a Jan. 26 blog post, S. “Soma” Somasegar, senior vice president of Microsoft’s Developer Division, said of Sho, “The environment includes powerful and efficient libraries for linear algebra and data visualization, both of which can be used from any .NET language, as well as a feature-rich interactive shell for rapid development. Sho comes with packages for large-scale parallel computing (via Windows HPC Server and Windows Azure), statistics, and optimization, as well as an extensible package mechanism that makes it easy for you to create and share your own packages.” [Emphasis added.]

Another TC Labs project is TPL Dataflow, which builds on the Task Parallel Library (TPL) introduced in the .NET Framework 4; the TPL provides core building blocks and algorithms for parallel computation and asynchrony.

Regarding TPL, Somasegar said, “.NET 4 saw the introduction of the Task Parallel Library (TPL), parallel loops, concurrent data structures, Parallel LINQ (PLINQ), and more, all of which were collectively referred to as Parallel Extensions to the .NET Framework. TPL Dataflow is a new member of that family, layering on top of tasks, concurrent collections, and more to enable the development of powerful and efficient .NET-based concurrent systems built using dataflow concepts. The technology relies on techniques based on in-process message passing and asynchronous pipelines and is heavily inspired by the Visual C++ 2010 Asynchronous Agents Library and DevLab's Axum language. TPL Dataflow provides solutions for buffering and processing data, building systems that need high-throughput and low-latency processing of data, and building agent/actor-based systems. TPL Dataflow was also designed to smoothly integrate with the new asynchronous language functionality in C# and Visual Basic I previously blogged about.”
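To make the dataflow idea in Somasegar’s description more concrete, here is a minimal sketch in Python’s asyncio rather than .NET; it is not the TPL Dataflow API. It assumes two hypothetical stages (transform_stage and action_stage) linked by bounded queues, which loosely mirror how dataflow blocks buffer messages and apply back-pressure, plus a sentinel object that stands in for completing a block.

```python
import asyncio

# Hypothetical dataflow-style pipeline. NOT the TPL Dataflow API: it only
# mimics linked "blocks" that pass messages through bounded buffers.
SENTINEL = object()  # signals completion, loosely like completing a block


async def transform_stage(inbox: asyncio.Queue, outbox: asyncio.Queue, fn):
    """Read items from inbox, apply fn, and post the results to outbox."""
    while True:
        item = await inbox.get()
        if item is SENTINEL:
            await outbox.put(SENTINEL)  # propagate completion downstream
            return
        await outbox.put(fn(item))


async def action_stage(inbox: asyncio.Queue, results: list):
    """Consume items at the end of the pipeline."""
    while True:
        item = await inbox.get()
        if item is SENTINEL:
            return
        results.append(item)


async def run_pipeline(items, fn, capacity=4):
    # Bounded queues give back-pressure: put() waits while a buffer is full.
    source = asyncio.Queue(maxsize=capacity)
    linked = asyncio.Queue(maxsize=capacity)
    results = []
    stages = [
        asyncio.create_task(transform_stage(source, linked, fn)),
        asyncio.create_task(action_stage(linked, results)),
    ]
    for item in items:
        await source.put(item)
    await source.put(SENTINEL)
    await asyncio.gather(*stages)
    return results


if __name__ == "__main__":
    print(asyncio.run(run_pipeline(range(5), lambda x: x * x)))
```

Running the script prints [0, 1, 4, 9, 16]. With a single transform stage and FIFO queues, output order matches input order; a design with several parallel transform tasks would need extra work to preserve ordering.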

A third TC Labs project, Dryad, DSC and DryadLINQ, is a set of technologies that support data-intensive computing applications running on a Windows HPC Server 2008 R2 Service Pack 1 cluster. Microsoft said these technologies enable efficient processing of large volumes of data in many types of applications, including data-mining applications, image and stream processing, and some scientific computations. Dryad and DSC run on the cluster to support data-intensive computing and to manage data that is partitioned across the cluster. DryadLINQ allows developers to define data-intensive applications using the .NET LINQ model.
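The partition-then-merge pattern that Dryad and DryadLINQ apply to data spread across a cluster can be sketched on a single machine. The Python below is a hypothetical illustration, not the DryadLINQ API: a thread pool stands in for cluster nodes, partition and count_words are made-up helpers, and word counting is only a stand-in workload.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical sketch of partitioned, data-parallel processing in the spirit
# of Dryad/DryadLINQ. Plain Python, not the DryadLINQ API; threads stand in
# for cluster nodes.


def partition(records, n):
    """Split records round-robin into n partitions."""
    parts = [[] for _ in range(n)]
    for i, record in enumerate(records):
        parts[i % n].append(record)
    return parts


def count_words(lines):
    """Per-partition work: count the words in one partition's lines."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def word_count(records, n_partitions=3):
    parts = partition(records, n_partitions)
    total = Counter()
    with ThreadPoolExecutor(max_workers=n_partitions) as pool:
        # Each partition is processed independently...
        for partial in pool.map(count_words, parts):
            total.update(partial)  # ...then partial results are merged.
    return total
```

For example, word_count(["a b", "b c", "c c"]) yields counts of 1 for "a", 2 for "b" and 3 for "c". Because each partition's work is independent, the merge step is the only point where results are combined, which is what lets this kind of job scale out across nodes.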

Sho’ ‘nuff.

The Windows Azure Documentation team updated the Windows Azure SDK Schema Reference for ServiceConfiguration.cscfg and ServiceDefinition.csdef XML content on 1/24/2011 (missed when published):

A service requires two configuration files, which are XML files:

- The service definition file describes the service model. It defines the roles included with the service and their endpoints, and declares configuration settings for each role.

- The service configuration file specifies the number of instances to deploy for each role and provides values for any configuration settings declared in the service definition file.

This reference describes the schema for each file.
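As an illustration of how the two files relate, the following Python sketch parses a simplified ServiceDefinition.csdef and ServiceConfiguration.cscfg. It checks that every setting a role declares in the definition receives a value in the configuration, and it reports each role’s instance count. The namespace URIs are, as far as we know, those used by the Windows Azure SDK 1.x schemas; the service, role and setting names (MyService, WebRole1, GreetingText) and the check_configuration helper are hypothetical, and real files contain more elements than shown.

```python
import xml.etree.ElementTree as ET

# Simplified example files; names are hypothetical and real files have more elements.
CSDEF = """<ServiceDefinition name="MyService"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
  <WebRole name="WebRole1">
    <Endpoints>
      <InputEndpoint name="HttpIn" protocol="http" port="80" />
    </Endpoints>
    <ConfigurationSettings>
      <Setting name="GreetingText" />
    </ConfigurationSettings>
  </WebRole>
</ServiceDefinition>"""

CSCFG = """<ServiceConfiguration serviceName="MyService"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="WebRole1">
    <Instances count="2" />
    <ConfigurationSettings>
      <Setting name="GreetingText" value="Hello, cloud" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>"""

DEF_NS = "{http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition}"
CFG_NS = "{http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration}"
ROLE_TAGS = (DEF_NS + "WebRole", DEF_NS + "WorkerRole")


def declared_settings(csdef_xml):
    """Map each role in the definition file to the setting names it declares."""
    root = ET.fromstring(csdef_xml)
    roles = {}
    for role in root:
        if role.tag in ROLE_TAGS:  # skip non-role children of ServiceDefinition
            roles[role.get("name")] = {s.get("name") for s in role.iter(DEF_NS + "Setting")}
    return roles


def check_configuration(csdef_xml, cscfg_xml):
    """Return {role: (instance_count, sorted names of settings missing a value)}."""
    declared = declared_settings(csdef_xml)
    root = ET.fromstring(cscfg_xml)
    report = {}
    for role in root.iter(CFG_NS + "Role"):
        name = role.get("name")
        count = int(role.find(CFG_NS + "Instances").get("count"))
        supplied = {s.get("name") for s in role.iter(CFG_NS + "Setting")}
        report[name] = (count, sorted(declared.get(name, set()) - supplied))
    return report


if __name__ == "__main__":
    print(check_configuration(CSDEF, CSCFG))
```

Running it prints {'WebRole1': (2, [])}. Deleting the Setting element from CSCFG would instead report ['GreetingText'] as missing, which reflects the rule that the definition file declares settings while the configuration file supplies their values.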


<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

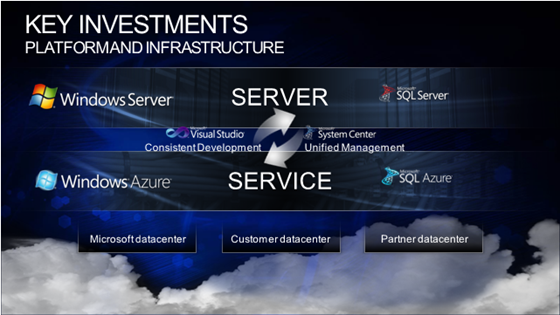

TechNet ON posted System Center in the Cloud to the TechNet Newsletter for 1/27/2011:

Bridging the Gaps

- Microsoft’s Cloud Computing Infrastructure Vision & Approach (white paper) [illustration below]
- Opalis IT Process Automation Overview

Manage the Private Cloud

Mitch Irsfeld asserted “The System Center product portfolio provides a unified management approach for applications and workloads across on-premises datacenters, private cloud and public cloud environments” in a preface to his Editor’s Note: Managing for the Future with System Center’s Datacenter-to-Cloud Approach for TechNet Magazine, published on 1/27/2011:

With any transition to the cloud, whether it’s a wholesale shift or a move of small pieces of your IT infrastructure to start with, and whether it’s delivered on-premises or through a provider, the ability to manage and control the environment is paramount. In fact, the trust factor is one of the perceived barriers to cloud computing, and trust grows out of visibility into infrastructure, applications and data protection policies.

Microsoft’s System Center suite can help bridge those visibility gaps between the traditional enterprise datacenter, the virtual datacenter and the cloud by taking the processes and management skills already in place for your current infrastructure and applying them to whatever you provision over time.

That datacenter-to-cloud approach makes System Center and Windows Server 2008 R2 a powerful combined solution for implementing the IT-as-a-service model, which promises gains in cost efficiency and business agility.

To learn about Microsoft’s vision for cloud computing from an IT perspective, and why it’s important to have a datacenter-to-cloud approach, download the whitepaper Microsoft’s Cloud Computing Infrastructure Vision & Approach. You’ll notice that a key component of that approach is unified management across premises and cloud environments. That’s where System Center comes in. This edition of TechNet ON looks at the System Center suite as the foundation for managing private cloud and public cloud infrastructures.

For an overview of the System Center modules that can help accelerate your migration to the cloud, read Joshua Hoffman’s new TechNet Magazine article The Power of System Center in the Cloud. In it, he covers the primary tools for managing a private cloud infrastructure, as well as the tools that provide operational insight into applications hosted on the Windows Azure platform in a public cloud or hybrid environment.

Virtual Machine Manager and the Private Cloud

System Center Virtual Machine Manager (VMM) 2008 R2 is the primary tool for managing a virtual private cloud infrastructure. It provides a unified view of an entire virtualized infrastructure across multiple host platforms and myriad guest operating systems, while delivering a powerful toolset to facilitate the onboarding of new workloads.

To make it easier to configure and allocate datacenter resources, customize virtual machine actions and provision self-service management for business units, Microsoft offers the Virtual Machine Manager Self-Service Portal 2.0 (VMMSSP). VMMSSP is a free, fully supported, partner-extensible solution that can be used to pool, allocate, and manage your compute, network and storage resources to deliver the foundation for a private cloud platform in your datacenter. For an overview of the VMMSSP, the Solution Accelerators team penned System Center Virtual Machine Manager Self Service Portal 2.0 -- Building a foundation for a dynamic datacenter with Microsoft System Center.

For more in-depth work with VMM, Microsoft Learning offers a free lesson, excerpted from Course 10215A: Implementing and Managing Microsoft Server Virtualization. The lesson, titled Configuring Performance and Resource Optimization, describes how to implement performance and resource optimization in System Center Virtual Machine Manager 2008 R2, an essential tool for managing your private cloud infrastructure.

While VMM simplifies the task of converting physical computers to virtual machines—an essential step in building your private cloud infrastructure—it’s not the only System Center component to consider for delivering IT services. As with a physical data infrastructure, you still need to monitor, manage and maintain the environment. You still need to ensure compliance with a good governance model. And you’ll want to streamline the delivery of services and gain efficiencies through process automation.

System Center Operations Manager can deliver operational insight across your entire infrastructure in a physical datacenter, in a private cloud, or deployed as public cloud services. If you are already assessing, deploying, updating and configuring resources using Operations Manager, you can provide the same degree of systems management and administration as workloads are migrated to a cloud environment. [I think we need to add/clarify that, even if you are using a hosted PaaS such as Azure, you’ll want to be informed about versioning and configuration in a dashboard, to track how Microsoft is evolving the platform you use.]

Beyond the Datacenter

As your operations become ready to take advantage of the expanded computing capacity and cost efficiencies of the public cloud, System Center migrates with you. In particular, System Center Service Manager 2010 and Opalis 6.3 extend process automation, compliance and SLA management to the cloud.

Service Manager can help provision services across the enterprise and cloud. It automatically connects knowledge and information from System Center Operations Manager, System Center Configuration Manager, and Active Directory Domain Services to provide built-in processes based on industry best practices for incident and problem resolution, change control, and asset lifecycle management.

By using Service Manager with Opalis, administrators can also automate IT processes for incident response, change and compliance, and service-lifecycle management. Opalis provides a wealth of interconnectivity and workflow capabilities, allowing administrators to standardize an automated process so that it can be delivered more quickly and more reliably because it executes the same way each time. And it can do that across the System Center portfolio and across third-party management tools and infrastructure.

Finally, if you are already running applications in the Windows Azure environment, the Windows Azure Application Monitoring Management Pack works with Operations Manager 2007 R2 to monitor the availability and performance of applications running on Windows Azure.