Windows Azure and Cloud Computing Posts for 12/16/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

MSDN updated the Web and Data Services for Windows Phone’s Storing Data in the Windows Azure Platform for Windows Phone topic on 12/15/2010:

The Windows Azure platform provides several data storage options for Windows Phone applications. This topic introduces components of the Windows Azure platform and describes how they relate to a general architecture for storing non-public data in the cloud. For more information about how Windows Phone applications can use the Windows Azure platform, see Windows Azure Platform Overview for Windows Phone.

This topic describes current Windows Azure platform features and a basic Windows Azure application architecture. For information about the latest Windows Azure features, see the Windows Azure home page and the Windows Azure AppFabric home page.

WCF services and WCF Data Services hosted on the Windows Azure platform can be consumed by Windows Phone applications just like other HTTP-based web services. For more information about consuming web services in your Windows Phone applications, see Connecting to Web and Data Services for Windows Phone.

Architectural Overview

This basic “client-server” architecture consists of three tiers. The Windows Phone application is the “client” application in the client tier; the Windows Azure Web role is the “server” application in the Web service tier; and Windows Azure storage services and SQL Azure provide data storage in the data storage tier. This architecture is shown in the following diagram:

Note: This architecture is designed for non-public data that requires authentication. For public data, blobs and blob containers can be directly exposed to the Web and read via anonymous requests. For more information, see Setting Access Control for Containers.

Client Tier

The client tier consists of the Windows Phone application and isolated storage. Isolated storage is used to store application data that is needed for subsequent launches of the application. Isolated storage can also be used to temporarily store data before it is saved to the data storage tier. For more information about isolated storage, see Isolated Storage Overview for Windows Phone.

Web Service Tier

The Web service tier consists of a Windows Azure Web role that hosts one or more web services based on Windows Communication Foundation (WCF) or WCF Data Services. WCF is a part of the .NET Framework that provides a unified programming model for rapidly building service-oriented applications. WCF Data Services (formerly known as ADO.NET Data Services) enables the creation and consumption of Open Data Protocol (OData) services from the Web. For more information, see the WCF Developer Center and the WCF Data Services Developer Center.

In this architecture, the Web service tier enables abstraction of the data storage tier. By using widely available public specifications to define the protocols and abstract data structures that the web service implements, a wide variety of clients can interact with the service, including Windows Phone applications. Abstraction of the data storage tier also allows the data storage implementation to adapt to changing business requirements without affecting the client tier.

For WCF services, abstract data structures are defined by a data contract. The data contract is an agreement between the client and service that describes the data to be exchanged. For more information, see Using Data Contracts.

For OData services, abstract data structures are defined by a data model. WCF Data Services supports a wide variety of data models. For more information, see Exposing Your Data as a Service (WCF Data Services).

In this architecture, the Windows Phone application communicates with the web service to authenticate users based on their username and password. Depending on the value of the data, user credentials for accessing the Web service tier may or may not be stored on the phone. The Windows Phone application does not directly connect to the data storage tier. Instead, the Web role accesses the data storage tier on behalf of the Windows Phone application.

Security Note: We recommend that Windows Phone applications do not connect to the data storage tier directly. This prevents keys and credentials for the data storage tier from being stored or entered on the phone. In this architecture, only the Web role is granted access to the data storage tier. For more information about web service security, see Web Service Security for Windows Phone.
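To make this trust boundary concrete, here is a minimal in-memory Python sketch of the three tiers. The phone-side caller supplies only a username and password, and only the Web-role stand-in holds the storage key. Every class and method name is hypothetical (this is not the Windows Azure SDK or WCF), and a real service would also need HTTPS and a durable user store.

```python
import hashlib
import hmac
import os

PBKDF2_ROUNDS = 100_000


class DataStorageTier:
    """Stand-in for Windows Azure storage or SQL Azure: every call needs the account key."""

    def __init__(self, account_key: str):
        self._account_key = account_key
        self._items: dict = {}

    def _check(self, key: str) -> None:
        if not hmac.compare_digest(key.encode(), self._account_key.encode()):
            raise PermissionError("invalid storage key")

    def write(self, key: str, owner: str, name: str, value: str) -> None:
        self._check(key)
        self._items[(owner, name)] = value

    def read(self, key: str, owner: str, name: str) -> str:
        self._check(key)
        return self._items[(owner, name)]


class WebServiceTier:
    """Stand-in for the Web role: authenticates users, then calls storage for them."""

    def __init__(self, storage: DataStorageTier, storage_key: str):
        self._storage = storage
        self._storage_key = storage_key  # held by the Web role only, never sent to the phone
        self._users: dict = {}           # username -> (salt, password hash)

    @staticmethod
    def _hash(password: str, salt: bytes) -> bytes:
        return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, PBKDF2_ROUNDS)

    def register(self, username: str, password: str) -> None:
        salt = os.urandom(16)
        self._users[username] = (salt, self._hash(password, salt))

    def _authenticate(self, username: str, password: str) -> None:
        record = self._users.get(username)
        if record is None or not hmac.compare_digest(self._hash(password, record[0]), record[1]):
            raise PermissionError("bad username or password")

    # The only operations the client tier can call: credentials in, data out.
    def save(self, username: str, password: str, name: str, value: str) -> None:
        self._authenticate(username, password)
        self._storage.write(self._storage_key, username, name, value)

    def load(self, username: str, password: str, name: str) -> str:
        self._authenticate(username, password)
        return self._storage.read(self._storage_key, username, name)


if __name__ == "__main__":
    storage = DataStorageTier(account_key="storage-key")
    service = WebServiceTier(storage, storage_key="storage-key")
    service.register("alice", "correct horse")
    service.save("alice", "correct horse", "note", "hello")
    print(service.load("alice", "correct horse", "note"))  # prints "hello"
```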

Data Storage Tier

The data storage tier consists of the Windows Azure storage services and SQL Azure. The Windows Azure storage services include the Blob, Queue, and Table services. SQL Azure provides a relational database service. A Windows Azure role can use any combination of these services to store and serve data to a Windows Phone application. For more information about these services, see Understanding Data Storage Offerings on the Windows Azure Platform.

Note: The Windows Azure platform also provides Windows Azure roles a temporary storage repository named local storage. A Windows Azure role can access local storage like a file system. Local storage is not recommended for long-term durable storage of your data.

Configuring a Windows Azure Storage Service

Before a Windows Azure storage service can be used, the respective endpoint must first be created and configured programmatically. For example, to store images in the Blob service for the first time, the Web role must first create and configure the blob container that will hold the images.
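The create-before-first-use rule is easiest to honor by making container creation idempotent, so the Web role can run it on every startup or before every write. The following Python sketch assumes a hypothetical in-memory BlobServiceStub rather than any real storage client library.

```python
class BlobServiceStub:
    """Hypothetical in-memory stand-in for the Blob service (not a real client library)."""

    def __init__(self) -> None:
        self.containers: dict = {}  # container name -> {blob name: bytes}
        self.create_calls = 0

    def create_container_if_not_exists(self, name: str) -> bool:
        """Create the container on first use; return True only if it was created now."""
        if name in self.containers:
            return False
        self.containers[name] = {}
        self.create_calls += 1
        return True

    def put_blob(self, container: str, blob: str, data: bytes) -> None:
        if container not in self.containers:
            raise LookupError(f"container {container!r} has not been created")
        self.containers[container][blob] = data


def store_image(service: BlobServiceStub, blob_name: str, data: bytes) -> None:
    # Idempotent configuration step, safe to repeat before every write.
    service.create_container_if_not_exists("images")
    service.put_blob("images", blob_name, data)
```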

Configuring a SQL Azure Database

There are several ways that you can create and configure a SQL Azure database:

- Windows Azure Platform Management Portal: Use the Windows Azure Platform Management Portal to create and manage databases.

- SQL Server Management Studio: Manage a SQL Azure database much as you would an on-premises instance of SQL Server, using SQL Server Management Studio from SQL Server 2008 R2.

- Transact-SQL: Use a Windows Azure role to programmatically issue Transact-SQL statements to SQL Azure.

Important Note: You must first configure the SQL Azure firewall to connect to the database from inside or outside of the Windows Azure platform. For more information, see How to: Configure the SQL Azure Firewall.
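SQL Azure firewall rules are inclusive start/end IP address ranges. As an illustration only, the following Python sketch checks whether a client address would fall inside any configured rule; the FirewallRule type and is_allowed function are hypothetical helpers, and the addresses come from the reserved documentation ranges.

```python
import ipaddress
from typing import List, NamedTuple


class FirewallRule(NamedTuple):
    name: str
    start_ip: str
    end_ip: str


def is_allowed(client_ip: str, rules: List[FirewallRule]) -> bool:
    """True if client_ip lies inside any rule's inclusive start..end range."""
    ip = ipaddress.IPv4Address(client_ip)
    return any(
        ipaddress.IPv4Address(rule.start_ip) <= ip <= ipaddress.IPv4Address(rule.end_ip)
        for rule in rules
    )


rules = [FirewallRule("Office", "203.0.113.0", "203.0.113.255")]
print(is_allowed("203.0.113.42", rules))   # True
print(is_allowed("198.51.100.7", rules))   # False
```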

Getting Started with the Windows Azure Platform

Perform the following steps to get started building web services like the one described in this topic:

| Description | Reference |
| --- | --- |
| Learn: Review the developer centers for the latest information about the products and educational references. | Windows Communication Foundation (WCF) Developer Center |
| Install: Install the development tools that emulate Windows Azure on your computer. Install SQL Server 2008 R2 Express to develop local or SQL Azure relational databases. | |
| Join: To use Windows Azure or SQL Azure, you will need an account. Note: An account is not required for developing applications and databases locally. | Windows Azure Getting Started - Get a Paid Account |
| Create: Create your first Windows Azure local application. Create a local or SQL Azure database. | Walkthrough: Create Your First Windows Azure Local Application; Video: Use SQL Azure to build a cloud application with data access |
| Develop: Develop a web role that hosts a WCF service or WCF data service. For storage, the web role can use development storage (a local simulation of Windows Azure storage services) or a relational database. If using a database, the local web service can use SQL Azure or a local database that is hosted with SQL Express. | Building Windows Azure Services; Windows Azure Storage Services - Using Development Storage; Development Considerations in SQL Azure |
| Deploy: Deploy your web role application and database to the cloud. | |


SQL Azure Database and Reporting

LarenC posted Clarifying Sync Framework and SQL Server Compact Compatibility to the Sync Framework Team blog on 12/16/2010:

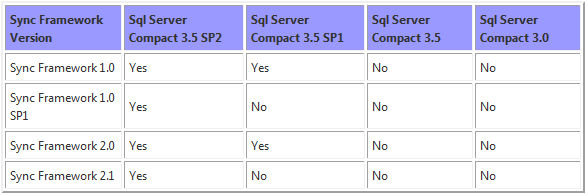

Sync Framework and SQL Server Compact install with several versions of Visual Studio and SQL Server, and each version of Sync Framework is compatible with different versions of SQL Server Compact. This can be pretty confusing!

This article on TechNet Wiki clarifies which versions of Sync Framework are installed with Visual Studio and SQL Server, lays out a matrix that shows which versions of SQL Server Compact are compatible with each version of Sync Framework, and walks you through the process of upgrading a SQL Server Compact 3.5 SP1 database to SQL Server Compact 3.5 SP2.


Sync Framework and SQL Server Compact Versions that Install with Visual Studio and SQL Server

The following table lists the versions of Sync Framework and SQL Server Compact that are installed with Visual Studio or SQL Server.

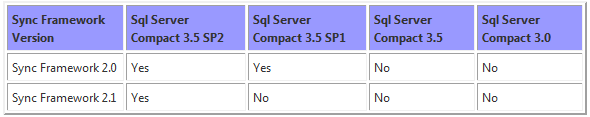

Compatibility between Sync Framework and SQL Server Compact

Early versions of Sync Framework were built to work with SQL Server Compact 3.5 SP1. When SQL Server Compact 3.5 SP2 was released, Sync Framework was redesigned to work with the new public change tracking API provided as part of the SQL Server Compact 3.5 SP2 release. Sync Framework components that use this new API are not compatible with earlier releases of SQL Server Compact, which is why later versions of Sync Framework are no longer compatible with SQL Server Compact 3.5 SP1.

The following table lists the versions of SQL Server Compact that are compatible with the SqlCeSyncProvider class on a desktop computer.

The following table lists the versions of SQL Server Compact that are compatible with the offline-only provider that is represented by the SqlCeClientSyncProvider class on a desktop computer.

Note that the SqlCeClientSyncProvider class should be used for existing applications only and has been superseded by the SqlCeSyncProvider class.

The following table lists the versions of SQL Server Compact that are compatible with the offline-only provider that is represented by the SqlCeClientSyncProvider class on a Windows Mobile 6.1 or 6.5 device.

Upgrading from SQL Server Compact 3.5 SP1 to SQL Server Compact 3.5 SP2

If you have a SQL Server Compact 3.5 SP1 database that participates in a synchronization community and you want to upgrade to a version of Sync Framework that is not compatible with SQL Server Compact 3.5 SP1, you can upgrade both Sync Framework and SQL Server Compact and continue to synchronize your database by following these steps:

- You have a working application that uses Sync Framework 1.0 or Sync Framework 2.0 to synchronize a SQL Server Compact 3.5 SP1 database.

- Install Sync Framework 1.0 SP1 or Sync Framework 2.1.

- Install SQL Server Compact 3.5 SP2.

- Rebuild your application to use Sync Framework 2.1 or use assembly redirection to load the 2.1 assemblies. For more information, see Sync Framework Backwards Compatibility and Interoperability.

- Synchronize your SQL Server Compact database. Sync Framework detects that the version of SQL Server Compact has changed and automatically upgrades the format of your database metadata to work correctly with Sync Framework 2.1. For more information, see Upgrading SQL Server Compact.

- If you prefer, you can also explicitly upgrade the database by using the SqlCeSyncStoreMetadataUpgrade class.
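The automatic path in the steps above amounts to a detect-then-upgrade check that runs once. The following Python sketch models that behavior under stated assumptions: SyncStore, synchronize, and upgrade_metadata are hypothetical names, and this is not how Sync Framework implements the upgrade (the explicit route remains the SqlCeSyncStoreMetadataUpgrade class).

```python
from dataclasses import dataclass


@dataclass
class SyncStore:
    """Hypothetical view of a client database taking part in synchronization."""
    engine_version: str        # SQL Server Compact version now installed, e.g. "3.5 SP2"
    metadata_format: str       # version the sync metadata was last written for
    upgrades_performed: int = 0


def upgrade_metadata(store: SyncStore) -> None:
    # Placeholder for rewriting change-tracking metadata into the new format.
    store.metadata_format = store.engine_version
    store.upgrades_performed += 1


def synchronize(store: SyncStore) -> None:
    """Detect an engine change and upgrade metadata once before syncing."""
    if store.metadata_format != store.engine_version:
        upgrade_metadata(store)
    # ...enumerate and apply changes here (omitted)...


store = SyncStore(engine_version="3.5 SP1", metadata_format="3.5 SP1")
store.engine_version = "3.5 SP2"   # after installing SQL Server Compact 3.5 SP2
synchronize(store)
synchronize(store)
print(store.upgrades_performed)    # 1
```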

LarenC is a technical writer on the Microsoft Sync Framework team.

Michael Otey listed “4 reasons that prove Microsoft is serious about SQL Azure” as a preface to his SQL Azure Enhancements article of 12/15/2010 for SQL Server Magazine:

At its recent Professional Developers Conference (PDC) in Redmond, Microsoft reaffirmed its commitment to SQL Azure, the new cloud-based version of SQL Server, by announcing several important enhancements for SQL Azure. Microsoft is serious about addressing customers’ needs with the SQL Azure platform and bringing it more on par with the capabilities of an on-premises SQL Server installation. Four of the most important recent announcements for SQL Azure follow:

4. Database Backup

Announced before PDC as part of SQL Azure Service Update 4, the ability to back up SQL Azure databases has been added to SQL Azure. I’m not really sure what Microsoft was thinking when it released the earlier versions without the ability to perform backups; perhaps it assumed that SQL Azure’s built-in availability obviated the need to perform backups.

However, that overlooked the need to protect against end-user error. Backing up with bcp or SQL Server Integration Services (SSIS) wasn’t a suitable replacement for database backup.

With SQL Azure Service Update 4, you can use the new copy feature to make SQL Azure-based database backups. Being copies of the database, they do count toward the SQL Azure limit of 150 databases. SQL Azure database backup is available for SQL Azure now. Learn more about it at Microsoft's MSDN site.
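SQL Azure's copy feature is driven by the CREATE DATABASE ... AS COPY OF Transact-SQL statement, typically executed while connected to the master database. The following Python sketch only builds that statement with a date-stamped target name and bracket-quoted identifiers; the naming scheme and helper names are assumptions, and each copy still counts toward the database limit mentioned above.

```python
from datetime import date
from typing import Optional


def quote_identifier(name: str) -> str:
    """Bracket-quote a T-SQL identifier, escaping ']' by doubling it."""
    return "[" + name.replace("]", "]]") + "]"


def copy_database_statement(source_db: str, on: date,
                            source_server: Optional[str] = None) -> str:
    """Build a database-copy statement whose target name carries the copy date."""
    target = f"{source_db}_copy_{on:%Y%m%d}"
    source = quote_identifier(source_db)
    if source_server is not None:
        # Copying from another server uses a server-qualified source name.
        source = f"{quote_identifier(source_server)}.{source}"
    return f"CREATE DATABASE {quote_identifier(target)} AS COPY OF {source}"


print(copy_database_statement("Orders", date(2010, 12, 16)))
# CREATE DATABASE [Orders_copy_20101216] AS COPY OF [Orders]
```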

3. Database Manager for SQL Azure

In the past, managing SQL Azure databases was more difficult than managing on-premises systems, mainly because of the lack of management tools. As part of SQL Server 2008 R2, SQL Server Management Studio was modified to be able to connect to SQL Azure. You can find the free SQL Azure compatible version of SQL Server 2008 R2 Management Studio Express at Microsoft's download site.

However, this still means using an on-premises tool to manage your cloud database. Database Manager for SQL Azure is a free web-based management tool that can be used to create schemas and run queries against SQL Azure databases. Watch a video demo at MSDN's blog about SQL Azure.

2. SQL Azure Data Sync

Tacitly acknowledging that SQL Azure will need to work in conjunction with one or more on-premises SQL Server systems, Microsoft announced the SQL Azure Data Sync feature. SQL Azure Data Sync is a cloud-based data synchronization service that’s built using the Microsoft Sync Framework. It will be able to synchronize data between on-premises SQL Server systems and SQL Azure in the cloud.

It can also replicate data to remote offices, and it will support scheduled synchronization and conflict handling for duplicate data. A second SQL Azure Data Sync CTP should appear by the end of 2010, and the service is expected in the first half of 2011.

Learn more about SQL Azure Data Sync and download the first CTP at Microsoft's SQL Azure site.

1. SQL Azure Reporting

Without a doubt, the most important new announcement at PDC was support for SQL Azure Reporting Services. Reporting Services is one of the most important features of an on-premises SQL Server installation, and it was definitely needed to drive adoption of SQL Azure.

With SQL Azure Reporting Services, reports can be created using BIDS (Business Intelligence Development Studio), published to SQL Azure, and managed using the cloud-based Windows Azure Developer Portal. SQL Azure Reporting is expected to be available in a CTP by the end of 2010 and will be generally available in the first half of 2011.

See the video demoing the new service at the Microsoft website.

Related Reading:

- 3 Month Free Trial of SQL Azure, by Michael Otey

- Azure: Data Mining in the Cloud, by Derek Comingore

- Considering SQL Azure, by Caroline Marwitz

Pawel Kadluczka described EF Feature CTP5: Validation in a 12/15/2010 post to the ADO.NET Team Blog:

Validation is a new feature introduced in Entity Framework Feature Community Technology Preview 5 (CTP5) that makes it possible to validate entities automatically before trying to save them to the database, as well as to validate entities or their properties "on demand".

Why?

Validating entities before trying to save changes can save trips to the database, which are considered costly operations. Not only can they make the application look sluggish due to latency, but they can also cost real money if the application uses SQL Azure, where each transaction costs. Also, using the database as a tool for validating entities is not really a good idea. The database will throw an exception only in the most severe cases, where the value violates the database schema or constraints. Therefore the validation that the database can perform is not very rich, unless you start using advanced mechanisms such as triggers. But then, is this kind of validation really the responsibility of the database? Shouldn't it be part of the business logic layer, which, with Code First, may already have all the information needed to perform validation?

In addition, figuring out the real cause of the failure may not be easy. While the application developer can unwrap all the nested exceptions and get to the actual exception message thrown by the database to see what went wrong, the application user usually will not be able to (and should not be expected to) do so. Ideally, the user would see a meaningful error message and a pointer to the value that caused the failure, so it is easy to fix the value and retry saving the data. [Emphasis added.]

How?

Automatically! In CTP5, validation is turned on by default. It uses validation attributes (i.e., attributes derived from the System.ComponentModel.DataAnnotations.ValidationAttribute class) to validate entities. “Accidentally”, one of the ways to configure a model when working with Code First is to use validation attributes. As a result, when the model is configured with validation attributes, validation can kick in and check whether entities are valid according to the model. But there is more to the story.

CodeFirst uses just a subset of validation attributes to configure the model, while validation can use any attribute derived from the ValidationAttribute class (this includes CustomValidationAttribute) giving even more control over what is really saved to the database.

Validation will also respect validation attributes put on types and will drill into complex properties to validate their child properties. In addition, if an entity or a complex type implements the IValidatableObject interface, the IValidatableObject.Validate method will be invoked when validating the given entity or complex property.

Finally, it is also possible to decorate a navigation or collection property with validation attributes. In this case, only the property itself will be validated, not the related entity or entities.
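The following Python sketch imitates the drilling rules just described, with dataclass field metadata standing in for validation attributes: complex-type properties are validated recursively, while a navigation property is skipped. The rule helpers and the validate function are hypothetical and are not Entity Framework's implementation.

```python
from dataclasses import dataclass, field, fields, is_dataclass
from typing import Callable, List, Optional, Tuple

Rule = Callable[[object], Optional[str]]  # returns an error message or None


def required(value) -> Optional[str]:
    return "is required" if value in (None, "") else None


def max_length(limit: int) -> Rule:
    def rule(value) -> Optional[str]:
        if value is not None and len(value) > limit:
            return f"exceeds {limit} characters"
        return None
    return rule


@dataclass
class Address:                      # complex type: its properties get validated
    city: str = field(default="", metadata={"rules": [required]})


@dataclass
class Order:                        # related entity, reached via a navigation property
    code: str = field(default="", metadata={"rules": [required]})


@dataclass
class Customer:
    name: str = field(default="", metadata={"rules": [required, max_length(10)]})
    address: Address = field(default_factory=Address)
    orders: list = field(default_factory=list, metadata={"navigation": True})


def validate(obj, path: str = "") -> List[Tuple[str, str]]:
    """Return (property path, message) pairs for every failed rule."""
    errors = []
    for f in fields(obj):
        value = getattr(obj, f.name)
        prop = path + f.name
        for rule in f.metadata.get("rules", []):
            message = rule(value)
            if message:
                errors.append((prop, message))
        if f.metadata.get("navigation"):
            continue                # validate the property itself, not related entities
        if is_dataclass(value):
            errors.extend(validate(value, prop + "."))  # drill into the complex type
    return errors


customer = Customer(name="A very long name", orders=[Order(code="")])
print(validate(customer))
# [('name', 'exceeds 10 characters'), ('address.city', 'is required')]
```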

By default, entities will be validated automatically when saving changes. It is also possible to validate all entities, a single entity, or a single property (complex or primitive) “on demand”. Each of these scenarios is described in more detail below.

One important thing to mention is that in some cases validation will force change detection. This is especially visible with automatic validation invoked from DbContext.SaveChanges(). In this case DetectChanges() will actually be called twice: once by validation and once by the “real” SaveChanges(). The reason for detecting changes before validation happens is obvious: the latest data should be validated, because this is what will be sent to the database. The reason for detecting changes after validation is that the entities could have been changed either during validation (CustomValidationAttributes, IValidatableObject.Validate(), and validation attributes created by the user have full access to entities and/or properties) or by the user in one of the validation customization points.
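A toy context can make that ordering concrete. This Python sketch assumes a hypothetical ToyContext whose save_changes detects changes, validates modified entities (the validator may edit them), detects changes again, and only then persists; it mirrors the sequence described above, not EF's actual code.

```python
import copy
from typing import Callable, Dict, List, Optional


class ToyContext:
    """Hypothetical context that follows the SaveChanges ordering described above."""

    def __init__(self, validator: Optional[Callable[[dict], None]] = None):
        self._entities: Dict[int, dict] = {}   # key -> current values (mutable)
        self._snapshots: Dict[int, dict] = {}  # key -> values as last saved
        self._validator = validator
        self.calls: List[str] = []

    def attach(self, key: int, entity: dict) -> dict:
        self._entities[key] = entity
        self._snapshots[key] = copy.deepcopy(entity)
        return entity

    def snapshot(self, key: int) -> dict:
        return self._snapshots[key]

    def detect_changes(self) -> List[int]:
        self.calls.append("detect_changes")
        return sorted(k for k, e in self._entities.items() if e != self._snapshots[k])

    def save_changes(self) -> List[int]:
        # 1) Detect first so validation sees the latest values.
        for key in self.detect_changes():
            if self._validator is not None:
                self.calls.append("validate")
                self._validator(self._entities[key])  # may raise, or edit the entity
        # 2) Detect again: validation code may have changed entities.
        modified = self.detect_changes()
        for key in modified:
            self._snapshots[key] = copy.deepcopy(self._entities[key])  # "persist"
        return modified


def trim_name(entity: dict) -> None:
    if not entity["name"].strip():
        raise ValueError("name is required")
    entity["name"] = entity["name"].strip()


ctx = ToyContext(validator=trim_name)
customer = ctx.attach(1, {"name": "Ann"})
customer["name"] = "  Bob "
print(ctx.save_changes(), ctx.snapshot(1))   # [1] {'name': 'Bob'}
```

If the validator raises, save_changes stops before anything is persisted, which is the point of validating first.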

Another thing worth noting is that validation currently works only when doing Code First development. We are considering extending it to also work for Model First and Database First.

What’s the big deal here?

Since Code First uses validation attributes, you could potentially have used Validator from System.ComponentModel.DataAnnotations to validate entities even before CTP5 was released. Unfortunately, Validator can validate only the direct child properties of the validated object. This means that you need to do extra work to validate complex types that wouldn’t be validated otherwise. Even if you do, you need to make sure that you can actually access the invalid value and still know which entity it belongs to, which looks like a few more lines. By the way, is your solution generic enough to work with different models and databases? Hopefully, but making it generic must have cost another dozen lines. Now the custom validation is probably pretty big. Hey, someone has added a few lines in the OnModelCreating method, and that property that was attributed with the [Required] validation attribute is no longer required. Now what? Validation prevents a valid value from being saved to the database, because the Validator is using an attribute that no longer applies. This is kind of a problem…

Fortunately the built-in validation is able to solve all the above problems without having you add any additional code.

First, validation leverages the model so it knows which properties are complex properties and should be drilled into and which are navigation properties that should not be drilled into. Second, since it uses the model it is not specific for any model or database. Third, it respects configuration overrides made in OnModelCreating method. And you don’t really have to do the whole lot to use it. …
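The configuration-override point can be shown with a small Python sketch: facets declared on the class (standing in for [Required]) are merged with OnModelCreating-style overrides, and validation reads the merged model instead of the raw attributes. DECLARED, build_model, and validate_required are hypothetical names.

```python
from typing import Dict, List

# Facets declared on the entity class (stand-in for attributes such as [Required]).
DECLARED: Dict[str, Dict[str, bool]] = {"Customer.Name": {"required": True}}


def build_model(declared: Dict[str, Dict[str, bool]],
                overrides: Dict[str, Dict[str, bool]]) -> Dict[str, Dict[str, bool]]:
    """Merge declared facets with OnModelCreating-style overrides; overrides win."""
    model = {prop: dict(facets) for prop, facets in declared.items()}
    for prop, facets in overrides.items():
        model.setdefault(prop, {}).update(facets)
    return model


def validate_required(model: Dict[str, Dict[str, bool]], prop: str, value) -> List[str]:
    if model.get(prop, {}).get("required") and value in (None, ""):
        return [f"{prop} is required"]
    return []


# Attribute-only check: still treats Name as required.
print(validate_required(DECLARED, "Customer.Name", None))   # ['Customer.Name is required']

# Model-aware check after an override made Name optional.
model = build_model(DECLARED, {"Customer.Name": {"required": False}})
print(validate_required(model, "Customer.Name", None))      # []
```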

Pawel continues with validation source code for several scenarios.

Here are links to previous posts about EF v4 CTPs:


Marketplace, DataMarket and OData

MSDN updated the Web and Data Services for Windows Phone’s Open Data Protocol (OData) Overview for Windows Phone topic on 12/15/2010:

The Open Data Protocol (OData) is based on an entity and relationship model that enables you to access data in the style of representational state transfer (REST) resources. By using the OData client library for Windows Phone, Windows Phone applications can use the standard HTTP protocol to execute queries, and even to create, update, and delete data from a data service. This functionality is available as a separate library that you can download and install from the OData client libraries download page on CodePlex. The client library generates HTTP requests to any service that supports the OData protocol and transforms the data in the response feed into objects on the client. For more information about OData and existing data services that can be accessed by using the OData client library for Windows Phone, see the OData Web site.

The two main classes of the client library are the DataServiceContext class and the DataServiceCollection class. The DataServiceContext class encapsulates operations that are executed against a specific data service. OData-based services are stateless. However, the DataServiceContext maintains the state of entities on the client between interactions with the data service and in different execution phases of the application. This enables the client to support features such as change tracking and identity management.
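Identity management means that one entity, identified by its URI, maps to one client object no matter how many queries return it. The following Python sketch assumes a hypothetical IdentityMap and follows the client's default AppendOnly merge behavior, under which an already-tracked object keeps its client-side values.

```python
from typing import Dict


class IdentityMap:
    """One client object per entity URI (AppendOnly-style: keep tracked values)."""

    def __init__(self) -> None:
        self._by_uri: Dict[str, dict] = {}

    def materialize(self, uri: str, payload: dict) -> dict:
        if uri not in self._by_uri:
            self._by_uri[uri] = dict(payload)   # first time: create and track
        return self._by_uri[uri]                # later: reuse the tracked object


identity = IdentityMap()
uri = "http://services.odata.org/Northwind/Northwind.svc/Customers('ALFKI')"
first = identity.materialize(uri, {"CompanyName": "Alfreds Futterkiste"})
first["CompanyName"] = "Edited locally"
second = identity.materialize(uri, {"CompanyName": "Alfreds Futterkiste"})
print(first is second, second["CompanyName"])   # True Edited locally
```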

Tip: We recommend employing a Model-View-ViewModel (MVVM) design pattern for your data applications where the model is generated based on the model returned by the data service. By using this approach, you can create the DataServiceContext in the ViewModel class along with any needed DataServiceCollection instances. For more general information about the MVVM pattern, see Implementing the Model-View-ViewModel Pattern in a Windows Phone Application.

When using the OData client library for Windows Phone, all requests to an OData service are executed asynchronously by using a uniform resource identifier (URI). Accessing resources by URIs is a limitation of the OData client library for Windows Phone when compared to other .NET Framework client libraries that support OData.

Generating Client Proxy Classes

You can use the DataSvcUtil.exe tool to generate the data classes in your application that represent the data model of an OData service. This tool, which is included with the OData client libraries on CodePlex, connects to the data service and generates the data classes and the data container, which inherits from the DataServiceContext class. For example, the following command generates a client data model based on the Northwind sample data service:

datasvcutil /uri:http://services.odata.org/Northwind/Northwind.svc/ /out:.\NorthwindModel.cs /Version:2.0 /DataServiceCollection

By using the /DataServiceCollection parameter in the command, the DataServiceCollection classes are generated for each collection in the model. These collections are used for binding data to UI elements in the application.

Binding Data to Controls

The DataServiceCollection class, which inherits from the ObservableCollection class, represents a dynamic data collection that provides notifications when items get added to or removed from the collection. These notifications enable the DataServiceContext to track changes automatically without your having to explicitly call the change tracking methods.
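The notification mechanism can be sketched in Python with a hypothetical ObservableCollection that raises add/remove events and a ContextTracker that subscribes to them, roughly the way a bound collection reports changes to its context. Names and event shapes are assumptions, not the WCF Data Services API.

```python
from typing import Callable, Iterator, List, Tuple

Handler = Callable[[str, object], None]


class ObservableCollection:
    """Hypothetical collection that raises an event on every add or remove."""

    def __init__(self) -> None:
        self._items: List[object] = []
        self._handlers: List[Handler] = []

    def subscribe(self, handler: Handler) -> None:
        self._handlers.append(handler)

    def add(self, item: object) -> None:
        self._items.append(item)
        self._notify("add", item)

    def remove(self, item: object) -> None:
        self._items.remove(item)
        self._notify("remove", item)

    def _notify(self, action: str, item: object) -> None:
        for handler in self._handlers:
            handler(action, item)

    def __len__(self) -> int:
        return len(self._items)

    def __iter__(self) -> Iterator[object]:
        return iter(self._items)


class ContextTracker:
    """Records the context calls a binding collection would make on your behalf."""

    def __init__(self) -> None:
        self.pending: List[Tuple[str, object]] = []

    def on_collection_changed(self, action: str, item: object) -> None:
        self.pending.append(("AddObject" if action == "add" else "DeleteObject", item))


tracker = ContextTracker()
customers = ObservableCollection()
customers.subscribe(tracker.on_collection_changed)
customers.add("ALFKI")
customers.add("ANATR")
customers.remove("ALFKI")
print(tracker.pending)
# [('AddObject', 'ALFKI'), ('AddObject', 'ANATR'), ('DeleteObject', 'ALFKI')]
```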

A URI-based query determines which data objects the DataServiceCollection class will contain. This URI is specified as a parameter in the LoadAsync method of the DataServiceCollection class. When executed, this method returns an OData feed that is materialized into data objects in the collection.

The LoadAsync method of the DataServiceCollection class ensures that the results are marshaled to the correct thread, so you do not need to use a Dispatcher object. When you use an instance of DataServiceCollection for data binding, the client ensures that objects tracked by the DataServiceContext remain synchronized with the data in the bound UI element. You do not need to manually report changes in entities in a binding collection to the DataServiceContext object.

Accessing and Changing Resources

In a Windows Phone application, all operations against a data service are asynchronous, and entity resources are accessed by URI. You perform asynchronous operations by using pairs of methods on the DataServiceContext class that start with Begin and End, respectively. The Begin methods register a delegate that is called when the operation completes. The End methods should be called in the delegate that is registered to handle the callback from the completed operation.

Note: When using the DataServiceCollection class, the asynchronous operations and marshaling are handled automatically. When using asynchronous operations directly, you must use the BeginInvoke method of the System.Windows.Threading.Dispatcher class to correctly marshal the response operation back to the main application thread (the UI thread) of your application.

When you call the End method to complete an asynchronous operation, you must do so from the same DataServiceContext instance that was used to begin the operation. Each Begin method takes a state parameter that can pass a state object to the callback. This state object is retrieved using the IAsyncResult interface that is supplied with the callback and is used to call the corresponding End method to complete the asynchronous operation.

For example, when you supply the DataServiceContext instance as the state parameter when you call the DataServiceContext.BeginExecute method on that instance, the same DataServiceContext instance is returned in the AsyncState property of the IAsyncResult parameter. This instance of the DataServiceContext is then used to call the DataServiceContext.EndExecute method to complete the query operation. For more information, see Asynchronous Operations (WCF Data Services).
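The Begin/End pairing with a state object can be simulated in Python. In this sketch, FakeContext, AsyncResult, begin_execute, and end_execute are hypothetical stand-ins for DataServiceContext, IAsyncResult, BeginExecute, and EndExecute, and a background thread plays the role of the HTTP request. On Windows Phone the callback would additionally need Dispatcher.BeginInvoke before touching the UI.

```python
import threading
from typing import Callable, Dict, List


class AsyncResult:
    """Minimal IAsyncResult analogue that carries the caller's state object."""

    def __init__(self, async_state: object) -> None:
        self.async_state = async_state
        self._done = threading.Event()
        self._value: List[dict] = []

    def _complete(self, value: List[dict]) -> None:
        self._value = value
        self._done.set()


class FakeContext:
    """Hypothetical context with a Begin/End pair over canned responses."""

    def __init__(self, responses: Dict[str, List[dict]]) -> None:
        self._responses = responses

    def begin_execute(self, uri: str, callback: Callable[[AsyncResult], None],
                      state: object) -> AsyncResult:
        result = AsyncResult(state)

        def work() -> None:
            result._complete(self._responses[uri])  # stands in for the HTTP GET
            callback(result)

        threading.Thread(target=work).start()
        return result

    def end_execute(self, result: AsyncResult) -> List[dict]:
        result._done.wait()
        return result._value


received: List[dict] = []
finished = threading.Event()


def on_query_completed(result: AsyncResult) -> None:
    context = result.async_state        # the same instance that began the call
    received.extend(context.end_execute(result))
    finished.set()


ctx = FakeContext({"/Customers": [{"CustomerID": "ALFKI"}]})
ctx.begin_execute("/Customers", on_query_completed, ctx)
finished.wait(timeout=5)
print(received)   # [{'CustomerID': 'ALFKI'}]
```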

Querying Resources

The OData client library for Windows Phone enables you to execute URI-based queries against an OData service. When the BeginExecute method on the DataServiceContext class is called, the client library generates an HTTP GET request message to the specified URI. When the corresponding EndExecute method is called, the client library receives the response message and translates it into instances of client data service classes. These classes are tracked by the DataServiceContext class.

Note: OData queries are URI-based. For more information about the URI conventions defined by the OData protocol, see OData: URI Conventions.
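Because every query is just a URI, a small helper can assemble system query options. The following Python sketch is a hypothetical odata_query function (not part of any OData client library) that percent-encodes each option value while leaving quotes, parentheses, commas, and slashes readable.

```python
from urllib.parse import quote

SAFE_CHARS = "'(),/"  # left unescaped so the URI stays readable


def odata_query(service_root: str, resource_path: str, **options: str) -> str:
    """Append system query options such as $filter, $top, or $expand to a resource URI."""
    uri = service_root.rstrip("/") + "/" + resource_path
    if options:
        uri += "?" + "&".join(
            f"${name}={quote(value, safe=SAFE_CHARS)}" for name, value in options.items()
        )
    return uri


root = "http://services.odata.org/Northwind/Northwind.svc/"
print(odata_query(root, "Customers", filter="Country eq 'Germany'", top="5"))
# http://services.odata.org/Northwind/Northwind.svc/Customers?$filter=Country%20eq%20'Germany'&$top=5
print(odata_query(root, "Customers('ALFKI')", expand="Orders/Order_Details"))
# http://services.odata.org/Northwind/Northwind.svc/Customers('ALFKI')?$expand=Orders/Order_Details
```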

Loading Deferred Content

By default, OData limits the amount of data that a query returns. However, you can explicitly load additional data, including related entities, paged response data, and binary data streams, from the data service when it is needed. When you execute a query, only entities in the addressed entity set are returned.

For example, when a query against the Northwind data service returns Customers entities, by default the related Orders entities are not returned, even though there is a relationship between Customers and Orders. Related entities can be loaded with the original query (eager loading) or on a per-entity basis (explicit loading).

To explicitly load related entities, you must call the BeginLoadProperty and EndLoadProperty methods on the DataServiceContext class. Do this once for each entity for which you want to load related entities. Each call to the LoadProperty methods results in a new request to the data service. To eagerly load related entities, you must include the $expand system query option in the query URI. This loads all related data in a single request, but returns a much larger payload.

Important Note: When deciding on a pattern for loading related entities, consider the performance tradeoff between message size and the number of requests to the data service.
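To make the tradeoff concrete, the JavaScript sketch below lists the request URIs each pattern implies. Suppose an initial query returned three customers: explicit loading then needs one further request per customer, each addressing the `Orders` navigation property, while eager loading adds `$expand` to the original query so everything arrives in one larger response. The helper names are hypothetical, and the sketch only builds URIs; it sends nothing.

```javascript
// Hypothetical helpers contrasting the two loading patterns.
// They only build request URIs; nothing is sent to a service.

// Explicit loading: after the initial query, one extra request per parent entity,
// each addressing that entity's navigation property.
function explicitLoadUris(serviceRoot, entitySet, keys, navProperty) {
  return keys.map(key => `${serviceRoot}${entitySet}('${key}')/${navProperty}`);
}

// Eager loading: the related entities are inlined in the original query by $expand.
function eagerLoadUri(queryUri, expandPath) {
  const separator = queryUri.includes("?") ? "&" : "?";
  return `${queryUri}${separator}$expand=${expandPath}`;
}

const serviceRoot = "http://services.odata.org/Northwind/Northwind.svc/";
const query = serviceRoot + "Customers?$top=3";
const customerKeys = ["ALFKI", "ANATR", "ANTON"]; // assume the query returned these customers

const explicitUris = explicitLoadUris(serviceRoot, "Customers", customerKeys, "Orders");
const eagerUri = eagerLoadUri(query, "Orders");

// Explicit: 1 query + 3 follow-up requests; eager: 1 larger request.
console.log(`explicit loading: ${1 + explicitUris.length} requests`);
console.log(`eager loading: 1 request (${eagerUri})`);
```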

The following query URI shows an example of eager loading the Orders and Order_Details entities that belong to the selected customer:

http://services.odata.org/Northwind/Northwind.svc/Customers('ALFKI')?$expand=Orders/Order_Details

When paging is enabled in the data service, you must explicitly load subsequent data pages from the data service when the number of returned entries exceeds the paging limit. Because it is not possible to determine in advance when paging can occur, we recommend that you enable your application to properly handle a paged OData feed. To load a paged response, you must call the BeginLoadProperty method with the current DataServiceQueryContinuation token. When using a DataServiceCollection class, you can instead call the LoadNextPartialSetAsync method in the same way that you call the LoadAsync method. For an example of this loading pattern, see How to: Consume an OData Service for Windows Phone.
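At the protocol level, a paged feed carries a "next" link that the client follows until the service stops sending one; the Windows Phone client surfaces that link as a DataServiceQueryContinuation (or the Continuation property of a DataServiceCollection). The JavaScript sketch below shows the loop under stated assumptions: `fetchPage` is a hypothetical, caller-supplied async function that resolves to `{ results, next }`, and the in-memory pages, including the `$skiptoken` value, stand in for a real service so that nothing goes over the network.

```javascript
// Hypothetical sketch of client-side handling for server-driven paging.
// fetchPage(uri) is supplied by the caller and must resolve to
// { results: [...], next: "URI of the next page" | undefined }.
async function loadAllPages(firstUri, fetchPage, maxPages = 100) {
  const items = [];
  let uri = firstUri;
  // Stop when no next link is returned, or after maxPages as a guard
  // against a service that never stops paging.
  for (let page = 0; uri && page < maxPages; page++) {
    const { results, next } = await fetchPage(uri);
    items.push(...results);
    uri = next;
  }
  return items;
}

// Usage with an in-memory stand-in for the data service (no network access).
const fakePages = {
  "Customers": { results: ["ALFKI", "ANATR"], next: "Customers?$skiptoken='ANATR'" },
  "Customers?$skiptoken='ANATR'": { results: ["ANTON"] },
};
loadAllPages("Customers", async uri => fakePages[uri]).then(ids => console.log(ids));
```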

Modifying Resources and Saving Changes

Use the AddObject, UpdateObject, and DeleteObject methods on the DataServiceContext class to manually track changes on the OData client. These methods enable the client to track added and deleted entities, as well as changes that you make to property values or to relationships between entity instances.

When the proxy classes are generated, an AddTo method is created for each entity set in the DataServiceContext class. Use these methods to add a new entity instance to an entity set and report the addition to the context. The tracked changes are sent back to the data service asynchronously when you call the BeginSaveChanges and EndSaveChanges methods of the DataServiceContext class.

Note: When you use the DataServiceCollection object, changes are automatically reported to the DataServiceContext instance.
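The JavaScript sketch below is a deliberately simplified, hypothetical change tracker, not the DataServiceContext API. It records added, updated, and deleted entities and turns them into the HTTP requests that an OData version 2 client typically sends: POST to the entity set for an insert, MERGE (a partial update) to the entity's URI for an update, and DELETE for a delete. The service root is a placeholder for your own writable Northwind service, and the order keys are sample values.

```javascript
// Hypothetical, simplified change tracker; it is not the DataServiceContext API.
class ChangeTracker {
  constructor(serviceRoot) {
    this.serviceRoot = serviceRoot;
    this.changes = [];
  }
  addObject(entitySet, entity) { this.changes.push({ kind: "add", entitySet, entity }); }
  updateObject(entitySet, key, entity) { this.changes.push({ kind: "update", entitySet, key, entity }); }
  deleteObject(entitySet, key) { this.changes.push({ kind: "delete", entitySet, key }); }

  // Translate tracked changes into OData v2-style requests.
  // Keys are assumed numeric (such as OrderID); string keys would need quoting.
  pendingRequests() {
    return this.changes.map(c => {
      const setUri = this.serviceRoot + c.entitySet;
      switch (c.kind) {
        case "add": return { method: "POST", uri: setUri, body: c.entity };
        case "update": return { method: "MERGE", uri: `${setUri}(${c.key})`, body: c.entity };
        case "delete": return { method: "DELETE", uri: `${setUri}(${c.key})` };
        default: throw new Error(`Unknown change kind: ${c.kind}`);
      }
    });
  }
}

// Placeholder root for a writable copy of the Northwind service.
const tracker = new ChangeTracker("http://localhost/Northwind.svc/");
tracker.addObject("Orders", { CustomerID: "ALFKI" });
tracker.updateObject("Orders", 10643, { ShipCity: "Berlin" });
tracker.deleteObject("Orders", 10692);
console.log(tracker.pendingRequests().map(r => `${r.method} ${r.uri}`));
```

With SaveChangesOptions.Batch, the client wraps operations like these in a single $batch request instead of sending them one at a time.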

The following example shows how to call the BeginSaveChanges and EndSaveChanges methods to asynchronously send updates to the Northwind data service:

```csharp
// WriteOperationResponse, messageTextBlock, ordersGrid, and currentOrder
// are members of the page that are defined elsewhere in the sample.
private void saveChanges_Click(object sender, RoutedEventArgs e)
{
    // Start the saving changes operation.
    svcContext.BeginSaveChanges(SaveChangesOptions.Batch, OnChangesSaved, svcContext);
}

private void OnChangesSaved(IAsyncResult result)
{
    // Use the Dispatcher to ensure that the
    // asynchronous call returns in the correct thread.
    Dispatcher.BeginInvoke(() =>
    {
        svcContext = result.AsyncState as NorthwindEntities;

        try
        {
            // Complete the save changes operation and display the response.
            WriteOperationResponse(svcContext.EndSaveChanges(result));
        }
        catch (DataServiceRequestException ex)
        {
            // Display the error from the response.
            WriteOperationResponse(ex.Response);
        }
        catch (InvalidOperationException ex)
        {
            messageTextBlock.Text = ex.Message;
        }
        finally
        {
            // Set the order in the grid.
            ordersGrid.SelectedItem = currentOrder;
        }
    });
}
```

Note: The Northwind sample data service that is published on the OData Web site is read-only; attempting to save changes returns an error. To successfully execute this code example, you must create your own Northwind sample data service. To do this, complete the steps in the topic How to: Create the Northwind Data Service (WCF Data Services/Silverlight).

Maintaining State during Application Execution

Because Windows Phone runs only one application at a time, it activates and deactivates applications dynamically to enable seamless navigation, raising events that applications can respond to when their state changes. By implementing handlers for these events, you can save and restore the state of the DataServiceContext instance, as well as any DataServiceCollection instances, when your application transitions between active and inactive states. This creates an experience in which the application appears to the user to have continued running in the background.

The OData client for Windows Phone includes a DataServiceState class that is used to help manage these state transitions. This state management is typically implemented in the code-behind page of the main application. The following table shows Windows Phone state changes and how to use the DataServiceState class for each change in state. …

Table with source code omitted for brevity.

… For an example of this pattern, see the sample application that is posted on the OData client libraries download page on CodePlex.

MSDN updated the Web and Data Services for Windows Phone’s How to: Consume an OData Service for Windows Phone on 12/15/2010:

This topic describes how to consume an Open Data Protocol (OData) feed in a Windows Phone application using the OData client library for Windows Phone. This library is not part of the Windows Phone Application Platform; it must be downloaded separately from the Open Data Protocol - Client Libraries download page on CodePlex.

The OData client library for Windows Phone generates HTTP requests to a data service that supports OData and transforms the entries in the response feed into objects on the client. Using this client, you can bind Windows Phone controls, such as ListBox or TextBox, to an instance of a DataServiceCollection class that contains an OData data feed. This class handles the events raised by the controls to keep the DataServiceContext class synchronized with changes that are made to data in the controls. For more information about using the OData protocol with Windows Phone applications, see Open Data Protocol (OData) Overview for Windows Phone.

A uniform resource identifier (URI)-based query determines which data objects the DataServiceCollection class will contain. This URI is specified as a parameter in the LoadAsync method of the DataServiceCollection class. When executed, this method returns an OData feed that is materialized into data objects in the collection. Data from the collection is displayed by controls when this collection is the binding source for the controls. For more information about querying an OData service by using URIs, see the OData: URI Conventions page at the OData Web site.
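To make "materialized into data objects" concrete, here is a JavaScript sketch that turns a JSON (verbose) OData feed into plain objects. It assumes the OData version 2 shape `{ d: { results: [...] } }` (version 1 responses put the array directly under `d`), drops each entry's `__metadata` object and any unexpanded `__deferred` navigation property, and reports a `__next` link if one is present. The function is hypothetical and the payload is hand-written and shortened for illustration; the client library's own materializer works differently internally.

```javascript
// Hypothetical sketch: materialize a verbose-JSON OData feed into plain objects.
function materializeFeed(json) {
  const payload = typeof json === "string" ? JSON.parse(json) : json;
  const d = payload.d;
  // Version 2 responses wrap entries in "results"; version 1 returns an array.
  const entries = Array.isArray(d) ? d : d.results;
  const objects = entries.map(entry => {
    const obj = {};
    for (const [name, value] of Object.entries(entry)) {
      if (name === "__metadata") continue; // type and URI information
      if (value !== null && typeof value === "object" && "__deferred" in value) continue; // unexpanded navigation property
      obj[name] = value;
    }
    return obj;
  });
  // A next link, if present, points at the following page of the feed.
  return { objects, next: Array.isArray(d) ? undefined : d.__next };
}

// Hand-written, shortened sample payload in the version 2 verbose JSON shape.
const samplePayload = {
  d: {
    results: [
      {
        __metadata: { type: "NorthwindModel.Customer" },
        CustomerID: "ALFKI",
        CompanyName: "Alfreds Futterkiste",
        ContactName: "Maria Anders",
        Orders: { __deferred: { uri: "Customers('ALFKI')/Orders" } },
      },
    ],
  },
};

const feed = materializeFeed(JSON.stringify(samplePayload));
console.log(feed.objects); // one object with CustomerID, CompanyName, and ContactName
```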

The procedures in this topic show how to perform the following tasks:

- Create a new Windows Phone application

- Download and install the OData client for Windows Phone

- Generate client data service classes that support accessing an OData service

- Query the OData service and bind the results to controls in the application

Note: This example demonstrates basic data binding to a single page in a Windows Phone application by using a DataServiceCollection binding collection. For an example of a multi-page Windows Phone application that uses the Model-View-ViewModel (MVVM) design pattern and the DataServiceState object to maintain state during execution, see the ODataClient_WinPhone7SampleApp.zip project on the Open Data Protocol - Client Libraries download page on CodePlex.

This example uses the Northwind sample data service that is published on the OData Web site. This sample data service is read-only; attempting to save changes will return an error.

To create the Windows Phone application

In Solution Explorer, right-click the Solution, point to Add, and then select New Project.

In the Add New Project dialog box, select Silverlight for Windows Phone from the Installed Templates pane, and then select the Windows Phone Application template. Name the project ODataNorthwindPhone.

Click OK. This creates the application for Silverlight.

Download the ODataClient_BinariesAndCodeGenToolForWinPhone.zip file from the Open Data Protocol - Client Libraries download page on CodePlex and extract the contents of the compressed file to your development computer. This library is permitted for use in production applications, which means that it can be used to build applications that qualify for submission to the Windows Phone marketplace.

Navigate to the directory where you extracted the library files and execute the following command at the command prompt (without line breaks):

datasvcutil /uri:http://services.odata.org/Northwind/Northwind.svc/ /out:.\NorthwindModel.cs /Version:2.0 /DataServiceCollection

This generates the client proxy classes that are required by the Windows Phone application to access the Northwind sample data service. These data classes are created in the NorthwindModel namespace.

In Solution Explorer, right-click the project and select Add Reference. In the Add Reference dialog box, click the Browse tab, navigate to the directory where you extracted the library files, select System.Data.Services.Client.dll, and then click OK. This adds a reference to the OData client library assembly to the project.

In Solution Explorer, right-click the project, select Add and then Existing Item. In the Add Existing Item dialog box, navigate to the directory where you extracted the library files and select the NorthwindModel.cs file. This adds the generated client data classes to the project.

To define the Windows Phone application user interface

In the project, double-click the MainPage.xaml file. This opens the XAML markup for the MainPage class, which is the user interface for the Windows Phone application. Replace the existing XAML markup with the following markup, which defines the user interface for the main page that displays customer information. The x:Class value must match the namespace and class name in MainPage.xaml.cs; for a project named ODataNorthwindPhone, that is ODataNorthwindPhone.MainPage.

```xml
<phone:PhoneApplicationPage
    x:Class="ODataNorthwindPhone.MainPage"
    xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
    xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
    xmlns:phone="clr-namespace:Microsoft.Phone.Controls;assembly=Microsoft.Phone"
    xmlns:shell="clr-namespace:Microsoft.Phone.Shell;assembly=Microsoft.Phone"
    xmlns:d="http://schemas.microsoft.com/expression/blend/2008"
    xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"
    xmlns:my="clr-namespace:NorthwindModel"
    mc:Ignorable="d" d:DesignWidth="480" d:DesignHeight="768"
    d:DataContext="{d:DesignInstance Type=my:Customer, CreateList=True}"
    FontFamily="{StaticResource PhoneFontFamilyNormal}"
    FontSize="{StaticResource PhoneFontSizeNormal}"
    Foreground="{StaticResource PhoneForegroundBrush}"
    SupportedOrientations="Portrait" Orientation="Portrait"
    shell:SystemTray.IsVisible="True"
    Loaded="PhoneApplicationPage_Loaded">

    <Grid x:Name="LayoutRoot" Background="Transparent">
        <Grid.RowDefinitions>
            <RowDefinition Height="Auto"/>
            <RowDefinition Height="*"/>
        </Grid.RowDefinitions>

        <!-- Application and page titles. -->
        <StackPanel x:Name="TitlePanel" Grid.Row="0" Margin="12,17,0,28">
            <TextBlock x:Name="ApplicationTitle" Text="Northwind Sales" Style="{StaticResource PhoneTextNormalStyle}"/>
            <TextBlock x:Name="PageTitle" Text="Customers" Margin="9,-7,0,0" Style="{StaticResource PhoneTextTitle1Style}"/>
        </StackPanel>

        <!-- The ListBox binds to the inherited DataContext (the customers collection).
             MainListBox_SelectionChanged must be defined in MainPage.xaml.cs. -->
        <Grid x:Name="ContentPanel" Grid.Row="1" Margin="12,0,12,0">
            <ListBox x:Name="MainListBox" Margin="0,0,-12,0" ItemsSource="{Binding}" SelectionChanged="MainListBox_SelectionChanged">
                <ListBox.ItemTemplate>
                    <DataTemplate>
                        <StackPanel Margin="0,0,0,17" Width="432">
                            <TextBlock Text="{Binding Path=CompanyName}" TextWrapping="NoWrap" Style="{StaticResource PhoneTextExtraLargeStyle}"/>
                            <TextBlock Text="{Binding Path=ContactName}" TextWrapping="NoWrap" Margin="12,-6,12,0" Style="{StaticResource PhoneTextSubtleStyle}"/>
                            <TextBlock Text="{Binding Path=Phone}" TextWrapping="NoWrap" Margin="12,-6,12,0" Style="{StaticResource PhoneTextSubtleStyle}"/>
                        </StackPanel>
                    </DataTemplate>
                </ListBox.ItemTemplate>
            </ListBox>
        </Grid>
    </Grid>
</phone:PhoneApplicationPage>
```

To add the code that binds data service data to controls in the Windows Phone application

In the project, open the code page for the MainPage.xaml file, and add the following using statements:

```csharp
using System.Data.Services.Client;
using NorthwindModel;
```

Add the following declarations to the MainPage class:

```csharp
private DataServiceContext northwind;
private readonly Uri northwindUri =
    new Uri("http://services.odata.org/Northwind/Northwind.svc/");
private DataServiceCollection<Customer> customers;
private readonly Uri customersFeed = new Uri("/Customers", UriKind.Relative);
```

This includes the URIs of both the data service and the Customers feed.

Add the following PhoneApplicationPage_Loaded method to the MainPage class:

```csharp
private void PhoneApplicationPage_Loaded(object sender, RoutedEventArgs e)
{
    // Initialize the context and the binding collection.
    northwind = new DataServiceContext(northwindUri);
    customers = new DataServiceCollection<Customer>(northwind);

    // Register for the LoadCompleted event.
    customers.LoadCompleted +=
        new EventHandler<LoadCompletedEventArgs>(customers_LoadCompleted);

    // Load the customers feed by using the URI.
    customers.LoadAsync(customersFeed);
}
```

When the page is loaded, this code initializes the context and the binding collection, and registers the customers_LoadCompleted method to handle the LoadCompleted event raised by the binding collection.

Insert the following code into the MainPage class:

```csharp
void customers_LoadCompleted(object sender, LoadCompletedEventArgs e)
{
    if (e.Error == null)
    {
        // Handling for a paged data feed.
        if (customers.Continuation != null)
        {
            // Automatically load the next page.
            customers.LoadNextPartialSetAsync();
        }
        else
        {
            // Bind the loaded customers to the page; the ListBox inherits this DataContext.
            this.LayoutRoot.DataContext = customers;
        }
    }
    else
    {
        MessageBox.Show(string.Format("An error has occurred: {0}", e.Error.Message));
    }
}
```

When the LoadCompleted event is handled, the following operations are performed if the request returns successfully:

The LoadNextPartialSetAsync method of the DataServiceCollection object is called to load subsequent results pages, as long as the Continuation property of the DataServiceCollection object returns a value.

The collection of loaded Customer objects is bound to the DataContext property of the element that is the master binding object for all controls in the page.

Atanas Korchev described Binding Telerik Grid for ASP.NET MVC to OData on 12/16/2010:

We have just made a nice demo application showing how to bind Telerik Grid for ASP.NET MVC to OData using Telerik TV as OData producer. The grid supports paging, sorting and filtering using OData’s query options.

To do that, we implemented a helper JavaScript routine (defined in an external JavaScript file that is included in the sample project), which is used to bind the grid. Here is what the code looks like:

```cshtml
@(Html.Telerik().Grid<TelerikTVODataBinding.Models.Video>()
    .Name("Grid")
    .Columns(columns =>
    {
        columns.Bound(v => v.ImageUrl).Sortable(false).Filterable(false).Width(200)
               .HtmlAttributes(new { style = "text-align:center" });
        columns.Bound(v => v.Description);
        columns.Bound(v => v.DatePublish).Format("{0:d}").Width(200);
    })
    .Sortable()
    .Scrollable(scrolling => scrolling.Height(600))
    .Pageable()
    .Filterable()
    .ClientEvents(events => events
        .OnDataBinding("Grid_onDataBinding")
        .OnRowDataBound("Grid_onRowDataBound"))
)

<script type="text/javascript">
    // Render the first cell as a thumbnail image that links to the video.
    function Grid_onRowDataBound(e) {
        e.row.cells[0].innerHTML = '<a href="' + e.dataItem.Url + '"><img src="' + e.dataItem.ImageUrl + '" /></a>';
    }

    function Grid_onDataBinding(e) {
        var grid = $(this).data('tGrid');
        // the bindGrid function is defined in telerik.grid.odata.js which is located in the ~/Scripts folder
        $.odata.bindGrid(grid, 'http://tv.telerik.com/services/odata.svc/Videos');
    }
</script>

@{
    // Include the helper JavaScript file
    Html.Telerik().ScriptRegistrar().DefaultGroup(g => g.Add("telerik.grid.odata.js"));
}
```

We will provide native (read ‘codeless’) OData binding support in a future release.
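The post does not show the helper's source. As a hypothetical illustration of the general idea only (not the contents of telerik.grid.odata.js), the JavaScript sketch below maps a grid's paging, sorting, and filtering state onto OData system query options: the page number becomes `$skip` and `$top`, the sort column becomes `$orderby`, and `$inlinecount=allpages` asks a service that supports OData version 2 for the total row count a pager needs. The function name and the shape of its state object are assumptions; the column names come from the grid above.

```javascript
// Hypothetical sketch: translate grid paging/sorting/filtering state into an OData URI.
// This is not the implementation in telerik.grid.odata.js.
function gridStateToODataUri(feedUri, { page = 1, pageSize = 10, orderBy, descending = false, filter } = {}) {
  const params = [
    "$skip=" + (page - 1) * pageSize, // rows on the preceding pages
    "$top=" + pageSize,               // rows on the current page
    "$inlinecount=allpages",          // total row count for the pager (OData v2)
  ];
  if (orderBy) {
    params.push("$orderby=" + encodeURIComponent(orderBy + (descending ? " desc" : "")));
  }
  if (filter) {
    params.push("$filter=" + encodeURIComponent(filter));
  }
  return feedUri + "?" + params.join("&");
}

// Third page of 10 videos, newest first.
console.log(gridStateToODataUri("http://tv.telerik.com/services/odata.svc/Videos",
  { page: 3, pageSize: 10, orderBy: "DatePublish", descending: true }));
// -> http://tv.telerik.com/services/odata.svc/Videos?$skip=20&$top=10&$inlinecount=allpages&$orderby=DatePublish%20desc
```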

Windows Azure AppFabric: Access Control and Service Bus

Alik Levin reported Windows Azure AppFabric CTP December 2010 – New Features in Access Control Service on 12/16/2010:

The original announcement is available on the Windows Azure AppFabric team’s blog here.

Here is the list of the new features/changes as per the post:

- Improved error messages by adding sub-codes and more detailed descriptions.

- Added a primary/secondary flag to certificates to allow an administrator to control their lifecycle.

- Added support for importing the Relying Party from the Federation Metadata.

- Updated the Management Portal to improve usability and support the new features.

- Added support for custom error handling when signing in to a Relying Party application.

Details on each change and new feature are available at Release Notes - December Labs Release.

See PRNewswire reported Novell Joins Microsoft Windows Azure Technology Adoption Program to Test and Validate Novell Cloud Security Service on 12/16/2010 in the Cloud Security and Governance section.

Windows Azure Virtual Network, Connect, and CDN

Shaun Xu described Communication Between Your PC and Azure VM via Windows Azure Connect in a detailed tutorial of 12/16/2010:

With the new release of the Windows Azure platform, a lot of new features are available. In my previous post I introduced one of them, Remote Desktop access to Azure virtual machines. Now I would like to talk about another cool feature: Windows Azure Connect.

What’s Windows Azure Connect

I would like to quote the definition of Windows Azure Connect from MSDN:

With Windows Azure Connect, you can use a simple user interface to configure IPsec protected connections between computers or virtual machines (VMs) in your organization’s network, and roles running in Windows Azure. IPsec protects communications over Internet Protocol (IP) networks through the use of cryptographic security services.

MSDN also provides a diagram that I would like to reproduce here.

As we can see, Windows Azure Connect connects Worker Role 1 and Web Role 1 with development machines and database servers, some of which are inside the organization and some of which are not.

With Windows Azure Connect, roles deployed in the cloud can consume resources located inside our intranet or anywhere in the world. That means the roles can connect to a local database and access local shared resources such as files, folders and printers.

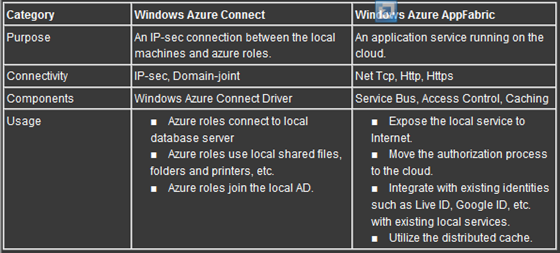

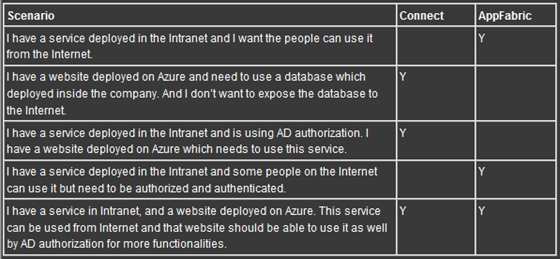

Difference between Windows Azure Connect and AppFabric

Windows Azure Connect may seem to duplicate Windows Azure AppFabric, because both aim to solve the problem of communication between resources in the cloud and resources inside the local network. The table below lists the differences as I understand them.

I have also listed some scenarios that show which of them should be used.

How to Enable Windows Azure Connect

OK, we have covered a lot of information about Windows Azure Connect and how it differs from Windows Azure AppFabric. Now let’s see how to enable and use Windows Azure Connect. First of all, because this feature is in the CTP stage, we must apply before we can use it. On the Windows Azure Portal we can see the status of our CTP features on the Home, Beta Program page.

On this page you can send Microsoft a request to join the beta programs. After a few days Microsoft will send you an email (at the address of your Live ID) when the feature is available.

In my case, Windows Azure Connect has been activated by Microsoft, so we can click the Connect button at the top, or click the Virtual Network item in the left navigation bar.

If this is our first visit to the Connect page, the first thing we need to do is enable Windows Azure Connect.

After that, we can see our Windows Azure Connect information on this page.

Add a Local Machine to Azure Connect

As explained above, Windows Azure Connect creates an IPsec connection between local machines and Azure role instances, so first we add a local machine to our Azure Connect configuration. To do this, click the Install Local Endpoint button at the top, and the portal gives us a URL. Copy this URL to the machine we want to add, and it will download the software.

This software must be installed on each local machine that we want to join to Connect. After installation, a tray icon appears to indicate that the machine has joined our Connect configuration.

The local application synchronizes with the Windows Azure Platform every 5 minutes, but we can click the Refresh button to retrieve the latest status immediately. My local machine is now ready to connect, and if we switch back to the portal and select the Activated Endpoints node, we can see my machine listed.

Add a Windows Azure Role to Azure Connect

Let’s create a very simple Azure project with a basic ASP.NET web role. To make it available on Windows Azure Connect, open the role's properties from Solution Explorer in Visual Studio, select the Virtual Network tab, and check Activate Windows Azure Connect.

The next step is to get the activation token from the Windows Azure Portal. On the same page there is a button named Get Activation Token. Click it, and the portal displays the token.

We copy this token and paste it into the box on the Visual Studio tab.

Then we deploy this application to Azure. After the deployment completes, we can see the role instance listed in the Virtual Connect section of the Windows Azure Portal.

Establish the Connect Group

The final task is to create a connect group that contains the machines and role instances that need to be connected to each other. This is easy to do in the portal.

The machines and instances will NOT be connected until we create a group for them. A machine or instance can belong to one or more groups.

In the Virtual Connect section, click the Groups and Roles node in the left navigation bar, and then click the Create Group button at the top. This brings up a dialog in which we specify a group name and description, and then select the local computers and Azure role instances to include in the group.

After the Windows Azure fabric updates the group settings, we can see the groups and the endpoints on the page.

If we switch back to the local machine, we can see that the tray icon has changed and the status is now Connected.

Windows Azure Connect updates the group information every 5 minutes. If the status is still Disconnected, right-click the tray icon and select Refresh to retrieve the latest group policy and connect.

Test the Azure Connect between the Local Machine and the Azure Role Instance

Now our local machine and Azure role instance are connected, which means each can communicate with the other at the IP level. For example, we can open the SQL Server port so that our Azure role can connect to it by using the machine name or the IP address.

Windows Azure Connect uses IPv6 for connections between local machines and role instances. You can get an endpoint’s IP address by selecting it in the Virtual Network section of the Windows Azure Portal.

I won’t walk through a full example of using Connect, but I would like to show two very simple tests. The first is PING.

When a local machine and a role instance are connected through Windows Azure Connect, each can PING the other, provided ICMPv6 is allowed through the firewall. To allow it, open a command window on both the local machine and the role instance and execute the following command:

netsh advfirewall firewall add rule name="ICMPv6" dir=in action=allow enable=yes protocol=icmpv6

Thanks to Jason Chen, Patriek van Dorp, Anton Staykov and Steve Marx, who helped me enable the ICMPv6 setting. For the full discussion, please visit here.

You can use the Remote Desktop Access feature to log on to the Azure role instance. Please refer to my previous blog post to learn how to use Remote Desktop Access in Windows Azure.

Then we can PING the machine or the role instance by specifying its name. Below is the screen where I PING my local machine from my Azure instance.

We can also use the IPv6 address to PING each other. As in the following image, I PING my role instance from my local machine through its IPv6 address.

Another example I would like to demonstrate is folder sharing. I shared a folder on my local machine, and when we log on to the role instance we can see the folder’s contents in an Explorer window.

Summary

In this blog post I introduced another new feature, Windows Azure Connect. With this feature, our local resources and role instances (virtual machines) can be connected to each other. This way, our Azure applications can use local resources such as database servers and printers without exposing them to the Internet.

So Xiyan transliterates to Shaun?

Live Windows Azure Apps, APIs, Tools and Test Harnesses

MSDN updated the Web and Data Services for Windows Phone’s Windows Azure Platform Overview for Windows Phone topic on 12/15/2010:

The Windows Azure platform is an internet-scale cloud services platform hosted through Microsoft data centers. It provides highly-scalable processing and storage capabilities, a relational database service, and premium data subscriptions that you can use to build compelling Windows Phone applications.

This topic provides an overview of the Windows Azure platform features that you can use with the Windows Phone Application Platform. For information about how to use the Windows Azure platform for data storage, see Storing Data in the Windows Azure Platform for Windows Phone. For information about using web services with your Windows Phone applications, see Connecting to Web and Data Services for Windows Phone.

Windows Azure Compute Service

The Windows Azure Compute service is a runtime execution environment for managed and native code. An application built on the Windows Azure Compute service is structured as one or more roles. When it executes, the application typically runs two or more instances of each role, with each instance running as its own virtual machine (VM).

You can use Windows Azure roles to offload work from your Windows Phone applications and perform tasks that are difficult or not possible with the Windows Phone Application Platform. For example, a web role could directly query a SQL Azure relational database and expose the data via a Windows Communication Foundation (WCF) service. For more information about writing Windows Phone applications that consume web services, see Connecting to Web and Data Services for Windows Phone.

There are several benefits to using a Windows Azure Compute service in conjunction with your Windows Phone application:

Programming options: When writing managed code for a Windows Azure role, developers can use many of the .NET Framework 4 libraries common to server and desktop applications. Although a substantial number of Silverlight and XNA components are available for developing a Windows Phone application, there are limits to what can be done with those components.

Availability: Windows Azure roles run in a highly-available internet-scale hosting environment built on geographically distributed data centers. Considering that the phone can be turned off, a Windows Azure role may be a better choice for long-running tasks or code that needs to be running all the time.

Processing capabilities: The processing capabilities of a Windows Azure role can scale elastically across servers to meet increasing or decreasing demand. In contrast, on a Windows Phone, a single processor with finite capabilities is shared by all applications on the phone.

A Windows Azure web role can give Windows Phone applications access to data by hosting web services, including Windows Communication Foundation (WCF) services and WCF Data Services. WCF is a part of the .NET Framework that provides a unified programming model for rapidly building service-oriented applications. WCF Data Services (formerly known as ADO.NET Data Services) enables the creation and consumption of Open Data Protocol (OData) services for the Web. For more information, see the WCF Developer Center and the WCF Data Services Developer Center.

Windows Azure Storage Services

Storage resources on the phone are limited. To optimize the user experience, Windows Phone applications should minimize the use of isolated storage and only store what is necessary for subsequent launches of the application. One way to minimize the use of isolated storage is to use Windows Azure storage services instead. For more information about isolated storage best practices, see Isolated Storage Best Practices for Windows Phone.

The Windows Azure storage services provide persistent, durable storage in the cloud. As with the Windows Azure Compute service, Windows Azure storage services can scale elastically to meet increasing or decreasing demand. There are three types of storage services available:

Blob service: Use this service for storing files, such as binary and text data. For more information, see Blob Service Concepts.

Queue service: Use this service for storing and delivering messages that may be accessed by another client (another Windows Phone application or any other application that can access the Queue service). For more information, see Queue Service Concepts.

Table service: Use this service for structured storage of non-relational data. A Table is a set of entities, which contain a set of properties. For more information, see Table Service Concepts.

Note: To access Windows Azure storage services, you must have a storage account, which is provided through the Windows Azure Platform Management Portal. For more information, see How to Create a Storage Account.

We do not recommend that Windows Phone applications store the storage account credentials on the phone. Rather than accessing the Windows Azure storage services directly, we recommend that Windows Phone applications use a web service to store and retrieve data. The exception to this recommendation is for public blob data that is intended for anonymous access. For more information about using Windows Azure storage services, see Storing Data in the Windows Azure Platform for Windows Phone.

SQL Azure

Microsoft SQL Azure Database is a cloud-based relational database service built on SQL Server technologies. It is a highly available, scalable, multi-tenant database service hosted by Microsoft in the cloud. SQL Azure Database helps to ease provisioning and deployment of multiple databases. Developers do not have to install, set up, update, or manage any software. High availability and fault tolerance are built-in and no physical administration is required.

Similar to an on-premises instance of SQL Server, SQL Azure exposes a tabular data stream (TDS) interface for Transact-SQL-based database access. Because the Windows Phone Application Platform does not support the TDS protocol, a Windows Phone application must use a web service to store and retrieve data in a SQL Azure database. For more information about using SQL Azure with Windows Phone, see Storing Data in the Windows Azure Platform for Windows Phone.

SQL Azure enables a familiar development environment. Developers can connect to SQL Azure with SQL Server Management Studio (SQL Server 2008 R2) and create database tables, indexes, views, stored procedures, and triggers. For more information about SQL Azure, see SQL Azure Database Concepts.

Windows Azure Marketplace DataMarket

Windows Azure Marketplace DataMarket is an information marketplace that simplifies publishing and consuming data of all types. The DataMarket enables developers to discover, preview, purchase, and manage premium data subscriptions. For more information, see the Windows Azure Marketplace DataMarket home page.

The DataMarket exposes data using OData feeds. The Open Data Protocol (OData) is a Web protocol for querying and updating data. The DataMarket OData feeds provide a consistent Representational State Transfer (REST)-based API across all datasets to help simplify development. Because DataMarket feeds are based on OData, your Windows Phone application can consume them with the OData Client Library for Windows Phone or use the HttpWebRequest class. For more information, see Connecting to Web and Data Services for Windows Phone.
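If the application uses HttpWebRequest rather than the client library, it has to parse the feed itself. The sketch below shows only the parsing half, in Python; on the phone, this would be C# code built on HttpWebRequest. It reads an OData Atom feed and turns each entry's m:properties element into a dictionary. The sketch assumes the Atom format with the OData v1/v2 namespace URIs shown. It returns every value as a string and treats m:null="true" as None. Type conversion based on m:type is left out.

```python
import xml.etree.ElementTree as ET

# Namespaces used by OData v1/v2 Atom feeds.
NS = {
    "atom": "http://www.w3.org/2005/Atom",
    "m": "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata",
    "d": "http://schemas.microsoft.com/ado/2007/08/dataservices",
}


def parse_odata_feed(xml_text):
    """Return each <entry>'s properties as a dict of name -> text (values stay strings)."""
    root = ET.fromstring(xml_text)
    rows = []
    for entry in root.findall("atom:entry", NS):
        props = entry.find("atom:content/m:properties", NS)
        if props is None:
            # Media-link entries put m:properties directly under <entry>.
            props = entry.find("m:properties", NS)
        if props is None:
            continue
        row = {}
        for prop in props:
            name = prop.tag.split("}", 1)[1]  # strip the "{namespace}" prefix
            # m:null="true" marks a null value in the feed.
            is_null = prop.get("{%s}null" % NS["m"]) == "true"
            row[name] = None if is_null else prop.text
        rows.append(row)
    return rows
```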

Note: In this release of the Windows Phone Application Platform, the Visual Studio Add Service Reference feature is not supported for OData data services. To generate a proxy class for your application, use the DataSvcUtil.exe utility that is part of the OData Client Library for Windows Phone. For more information, see How to: Consume an OData Service for Windows Phone.

There are two types of DataMarket datasets: those that support flexible queries and those that support fixed queries. Flexible query datasets support a wider range of REST-based queries. Fixed query datasets support only a fixed number of queries and supply a C# client library to help client applications work with data. For more information about these query types, see Fixed and Flexible Query Types.
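The difference between the two query types shows up in the request URL. This sketch assumes a hypothetical service root, entity set, and operation name. It builds a flexible query from standard OData system query options ($filter, $orderby, $top). It also builds a fixed query that accepts only the parameters the dataset publisher defined. Check each dataset's documentation for its actual URLs and parameter names.

```python
from urllib.parse import quote, urlencode


def flexible_query_url(service_root, entity_set, filter_expr=None, top=None, orderby=None):
    """Flexible-query style: OData system query options applied to an entity set."""
    options = {}
    if filter_expr:
        options["$filter"] = filter_expr
    if orderby:
        options["$orderby"] = orderby
    if top is not None:
        options["$top"] = str(top)
    url = f"{service_root.rstrip('/')}/{entity_set}"
    # Keep "$" and "'" readable; spaces become %20.
    return url + ("?" + urlencode(options, safe="$'", quote_via=quote) if options else "")


def fixed_query_url(service_root, operation, allowed_params, **params):
    """Fixed-query style: only parameters defined by the dataset publisher are accepted."""
    unknown = set(params) - set(allowed_params)
    if unknown:
        raise ValueError(f"unsupported parameters: {sorted(unknown)}")
    url = f"{service_root.rstrip('/')}/{operation}"
    return url + ("?" + urlencode(params, safe="$'", quote_via=quote) if params else "")
```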

See Also: Other Resources

- Connecting to Web and Data Services for Windows Phone

- How to: Consume an OData Service for Windows Phone

- Web Service Security for Windows Phone

- Storing Data in the Windows Azure Platform for Windows Phone

- Windows Azure Documentation

- SQL Azure Documentation

- Windows Azure Marketplace DataMarket Documentation

- WCF Developer Center

- WCF Data Services Developer Center

Cory Fowler (@SyntaxC4) published Export & Upload a Certificate to an Azure Hosted Service on 12/16/2010:

Last night I started doing some research into the new features of the Windows Azure SDK 1.3 for a future blog series which I’ve been thinking about lately. The first step was to figure out what was installed on the default Windows Azure image, in order to determine what would need to be installed for my Proof of Concept.

There are two ways to set up the RDP connection into an Azure instance: a developer-centric approach, which is configured in Visual Studio, and an IT-centric approach, which is configured through the [new] Windows Azure Platform Portal. I had thought it might be cool if this functionality were available through the Service Management API; however, it is not publicly exposed [which is probably a good thing].

To minimize content repetition, I decided to split the export and upload process into this separate blog post.

Exporting a Certificate

1. Open the Certificate (from the Visual Studio dialog, IIS, or the Certificates snap-in in MMC).

2. Navigate to the Details Tab. Click on Copy to File…

3. Start the Export Process.

4. Select “Yes, export the private key”.

5. Click Next.

6. Provide a password to protect the private key.

7. Browse to a path to save the .pfx file.

8. Save the file.

9. Finish the Wizard.

Setting up a Windows Azure Hosted Service

If you’d like to see a more detailed explanation of this, I released some videos with Barry Gervin in my last entry, “Post #AzureFest Follow-up Videos”.

1. Create New Hosted Service.

2. Fill out the Creation Form.

Setting up a Windows Azure Storage Service

The Visual Studio Tools will not allow you to deploy a project without setting up a Storage Service.

1. Create a New Storage Service.

2. Fill out the Creation Form.

Upload the Certificate

1. Select the Certificates folder under the Hosted Service to RDP into. Click Add Certificate.

2. Browse to the Certificate (saved in last section).

3. Enter the Password for the Certificate.

4. Ensure the Certificate is Uploaded.

Moving Forward

This entry covered the setup steps common to both setting up RDP using Visual Studio and manual configuration. In the Manual Configuration post I will explain how to use the Service Management API to install the Certificate to the server (instead of using the Portal as described above).
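The Service Management API route mentioned above can be sketched as follows. This sketch is based on my reading of the API reference for the Add Service Certificate operation. That operation is a POST to /<subscription-id>/services/hostedservices/<service-name>/certificates. Its CertificateFile element carries the base64-encoded .pfx, its format, and its password. The function name is hypothetical, and the x-ms-version value is an assumption to check against the reference. The code only builds the request. Sending it requires TLS client authentication with a management certificate uploaded to the subscription.

```python
import base64
from xml.sax.saxutils import escape

MANAGEMENT_HOST = "https://management.core.windows.net"


def build_add_certificate_request(subscription_id, service_name, pfx_bytes, password,
                                  api_version="2009-10-01"):
    """Return (url, headers, body) for an Add Service Certificate call; nothing is sent."""
    url = (f"{MANAGEMENT_HOST}/{subscription_id}/services/hostedservices/"
           f"{service_name}/certificates")
    # api_version is an assumed x-ms-version value; confirm it in the API reference.
    headers = {"x-ms-version": api_version, "Content-Type": "application/xml"}
    body = (
        '<?xml version="1.0" encoding="utf-8"?>'
        '<CertificateFile xmlns="http://schemas.microsoft.com/windowsazure">'
        f"<Data>{base64.b64encode(pfx_bytes).decode('ascii')}</Data>"
        "<CertificateFormat>pfx</CertificateFormat>"
        f"<Password>{escape(password)}</Password>"  # escape &, < and > for XML
        "</CertificateFile>"
    )
    return url, headers, body
```

For example, pass the bytes of the .pfx saved in the export steps, read with `open("mycert.pfx", "rb").read()`, where the file name, subscription ID, and service name are placeholders.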

Happy Clouding!

Wade Wegner recommended that you Use the WAPTK to help setup your Windows Azure development environment in a 12/16/2010 post:

As awesome as it is to have a lot of great local development tools, it can also be difficult to set up new development environments. Downloading and installing the Windows Azure SDK is really only one step – you also have to ensure that local services (e.g. IIS) are configured correctly, you may need additional SDKs (e.g. the Windows Identity Foundation SDK), you have to set up additional tools (e.g. SSMS), and so on. Not only does this take time, but it also takes organizational skills.

So, is there anything that can help manage this process?

Yes. As part of the Windows Azure Platform Training Kit (WAPTK), we ship a Dependency Checker tool along with scripts that check your system for all the required software to complete the hands-on labs in the kit. I routinely use this tool to ensure that I have all the software required in order to build great applications for Windows Azure.

Try it out. First, grab the latest version of the WAPTK here. Then follow these steps.

- Click the Prerequisites tab.

- Click the Check dependencies link.

- If you are prompted to install the Dependency Checker tool, click OK to start the installation.

- Once the Dependency Checker tool is installed, hit F5 to refresh the page (this allows the script to launch the Dependency Checker tool).

- When prompted to allow the ConfigurationWizard to run, click the Allow button.

- Now that the Configuration Wizard has launched, click Next to begin.

- The first (and only) step is to check prerequisites for the Training Kit. Click Next to continue.

- The tool will scan your system and look for required software. When it finds that your system is missing required software, you are both notified and provided with a link to Install the software.

- Clicking the Install link will generally launch a process to install the feature.

- In some cases you will have the option to download the missing feature or software. Click the Download links to launch a download. You will then have to walk through the installation process for that feature.

- At any point you can click the Rescan button to scan your system again. Any updates you’ve made will be reflected on the scan.

- Once you have all of the required software, you’ll be able to complete the wizard. However, if there is software you do not need or want to install, you can cancel at any time to finish.
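The post doesn't describe the Dependency Checker's internals. However, the scan, report, and rescan loop in the steps above can be modeled in a few lines. This is a toy Python sketch, not the WAPTK tool. Each prerequisite is a hypothetical probe function plus an install hint, and a rescan is simply another call to scan().

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Prerequisite:
    name: str
    is_installed: Callable[[], bool]  # probe that reports whether the software is present
    install_hint: str                 # where the user can get it (hypothetical here)


def scan(prereqs):
    """Run every probe once and return the prerequisites that are missing."""
    return [p for p in prereqs if not p.is_installed()]


def report(prereqs):
    """Print one line per missing prerequisite; return the missing list."""
    missing = scan(prereqs)
    for p in missing:
        print(f"MISSING: {p.name} -> {p.install_hint}")
    if not missing:
        print("All prerequisites found.")
    return missing
```

Real probes would check things like registry keys, installed Windows features, or the presence of an executable on the PATH.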

I hope you find this useful! Please let me know if you have any feedback.


I’m checking to see if the December WAPTK has the problems I reported for the November issue in my Strange Behavior of Windows Azure Platform Training Kit with Windows Azure SDK v1.3 under 64-bit Windows 7 post of 12/8/2010.


Update 12/16/2010 1:45 PM PST: WAPTK’s December 2010 Update solved most problems reported in my post. However, a runtime exception still occurs in the GuestBook_WorkerRole.WorkerRole.vb’s OnStart() function. Check updated post here.

Microsoft Showcase interviewed Chris Kabat in a 00:06:10 MPS Partners Windows Azure Channel 9 Video segment on 12/15/2010:

MPS Partners, a Microsoft Gold Certified Partner, implements [I]nternet scale applications for their customers on a regular basis. The company has a go-to-market solution that defines a set of services around ‘Cloud Composite Applications’, and this solution involves the ability to pull data from both on premise and off premise applications and deliver in a single portal hosted in the cloud. The company has made an on-premise custom content management framework available on the Cloud in two days. MPS Partners sees value in how on-premise applications can be exposed to the cloud using the Service Bus.

Check the other 70 Azure-related videos, too.

Microsoft PressPass reported “Payment solution provided by NVoicePay is based on Windows Azure platform with Silverlight interface across the Web, PC and phone” as a preface to its Customer Spotlight: ADP Enables Plug-and-Play Payment Processing for Thousands of Car Dealers Throughout North America With Windows Azure press release of 12/16/2010:

The Dealer Services Group of Automatic Data Processing Inc. has added NVoicePay as a participant to its Third Party Access Program. NVoicePay, a Portland, Ore.-based software provider, helps mutual clients eliminate paper invoices and checks with an integrated electronic payments solution powered by the Windows Azure cloud platform, Microsoft Corp. reported today. [Link added.]

NVoicePay’s AP Assist e-payment solution offers substantial savings for dealerships that opt into the program. NVoicePay estimates that paying invoices manually ends up costing several dollars per check; however, by reducing that transaction cost, each dealership stands to save tens of thousands of dollars per year depending on its size.

“Like most midsize companies, many dealerships are using manual processes for their accounts payables, which is fraught with errors and inefficiency,” said Clifton E. Mason, vice president of product marketing for ADP Dealer Services. “NVoicePay’s hosted solution is integrated to ADP’s existing dealer management system, which allows our clients to easily process payables electronically for less than the cost of a postage stamp.”

The NVoicePay solution relies heavily on Microsoft Silverlight to enable a great user experience across multiple platforms, including PC, phone and Web. For example, as part of the solution, a suite of Windows Phone 7 applications allows financial controllers to quickly perform functions such as approving pending payments and checking payment status while on the go.

On the back end, the solution is implemented on the Windows Azure platform. This gives it the ability to work easily with a range of existing systems, and it also provides the massive scalability essential for a growth-stage business. According to NVoicePay, leveraging Windows Azure to enable its payment network allowed the NVoicePay solution to go from zero to nearly $50 million in payment traffic in a single year.

“The Windows Azure model of paying only for the resources you need has been key for us as an early stage company because the costs associated with provisioning and maintaining an infrastructure that could support the scalability we require would have been prohibitive,” said Karla Friede, chief executive officer, NVoicePay. “Using the Windows Azure platform, we’ve been able to deliver enterprise-class services at a small-business price, and that’s a requirement to crack the midmarket.”

About ADP

Automatic Data Processing, Inc. (Nasdaq: ADP), with nearly $9 billion in revenues and about 560,000 clients, is one of the world’s largest providers of business outsourcing solutions. Leveraging 60 years of experience, ADP offers the widest range of HR, payroll, tax and benefits administration solutions from a single source. ADP’s easy-to-use solutions for employers provide superior value to companies of all types and sizes. ADP is also a leading provider of integrated computing solutions to auto, truck, motorcycle, marine, recreational, heavy vehicle and agricultural vehicle dealers throughout the world. For more information about ADP or to contact a local ADP sales office, reach us at 1-800-CALL-ADP ext. 411 (1-800-225-5237 ext. 411).

About NVoicePay

NVoicePay is a B2B Payment Network addressing the opportunity of moving invoice payments from paper checks to electronic networks for mid-market businesses. NVoicePay’s simple efficient electronic payments have made the company the fastest growing payment network for business.

About Microsoft

Founded in 1975, Microsoft (Nasdaq “MSFT”) is the worldwide leader in software, services and solutions that help people and businesses realize their full potential.

Mark Kovalcson posted MS CRM 2011, the “Cloud”, Azure and the future to his CRM Scape blog on 12/15/2010:

Something really important is happening right now, and if you are involved in MS CRM take notice or fall by the wayside! This is a real game changer!

Almost all of my MS CRM installations were on premises installations until just this Fall. Sure there were IFD configurations and web portals with Silverlight etc., but the customer was always in tight control of the servers, even if the servers were hosted.