Windows Azure and Cloud Computing Posts for 7/21/2010+

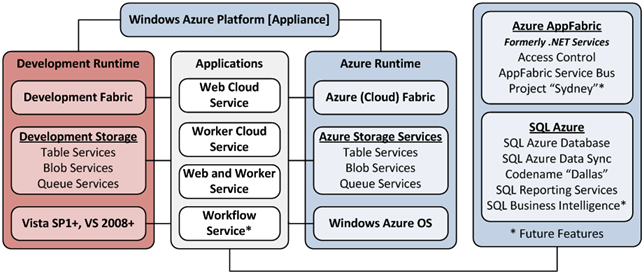

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure, Codenames “Dallas” & “Houston” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

On 9/29/2009, Wrox’s Web site manager posted a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage,” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

The two chapters are also available as free HTTP downloads from the book's Code Download page.

Azure Blob, Drive, Table and Queue Services

Brian Swan explains Accessing Windows Azure Queues from PHP in this 7/19/2010 post:

I recently wrote posts on how to access Windows Azure table storage from PHP and how to access Windows Azure Blob storage from PHP. To round out my look at the storage options that are available in Windows Azure, I’ll look at accessing Windows Azure queues from PHP in this post. As in the first two posts, I’ll rely on the Windows Azure SDK for PHP to do the heavy lifting. In fact, I found the Microsoft_WindowsAzure_Storage_Queue class to be so intuitive and easy to use that I don’t think it’s worth walking you through my PHP code in this post. Instead, I’ve attached a single file that can serve as a PHP-based utility for interacting with Windows Azure queues. The PHP code in the utility should be enough to give you an understanding of how to use the Microsoft_WindowsAzure_Storage_Queue class.

Disclaimer: The attached code isn’t necessarily pretty and is meant only to give you an idea of how Windows Azure queues work and how to use the Microsoft_WindowsAzure_Storage_Queue class. It is certainly not meant to be used as a utility for managing queues that are being used in production applications.

What I found interesting when building the utility (and what I will focus on in this post) was understanding how Windows Azure queues (and queues in general) work.

What is Windows Azure Queue?

Very simply, Windows Azure Queue is an asynchronous delivery mechanism for messages (up to 8 KB in size) that can be used to connect different components of a cloud application. If you are familiar with a queue data structure, then you already understand the basics of Windows Azure Queue. A classic example of queue usage is in order processing. Consider a web application that accepts orders for widgets. To make order processing efficient and scalable, orders can be placed on a queue to be processed by workers that are running in the background.

However, as with any distributed messaging system in the cloud, Azure Queue differs from the familiar queue data structure in some important ways. Windows Azure Queue…

Guarantees at-least-once message delivery, which is implemented as two-step consumption:

- A worker dequeues a message and marks it as invisible

- A worker deletes the message when finished processing it

If a worker crashes before it finishes processing a message, the message becomes visible again (once its visibility timeout expires) for another worker to process.

Doesn’t guarantee “only once” delivery. You have to build your application so that it tolerates a message being delivered multiple times.

Doesn’t guarantee ordering of messages. Azure Queue will make a best effort at FIFO (first in, first out) message delivery, but doesn’t guarantee it. You have to build an application with this in mind.
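The two-step consumption described above can be modeled in a few lines. The sketch below is a toy in-memory queue, not the Windows Azure API or the PHP SDK; the class and method names (`FakeQueue`, `get`, `peek`, `delete`) are hypothetical. It shows why a crashed worker's message is redelivered and why a stale pop receipt can no longer delete it.

```python
import uuid
from dataclasses import dataclass, replace
from typing import List, Optional


@dataclass
class Message:
    message_id: str
    body: str
    visible_at: float = 0.0            # earliest time the message can be dequeued
    pop_receipt: Optional[str] = None  # issued by get(); peek() never issues one
    dequeue_count: int = 0


class FakeQueue:
    """In-memory model of two-step consumption (hypothetical, not the Azure API).

    Callers pass the current time explicitly so the behavior is deterministic.
    """

    def __init__(self, default_visibility: float = 30.0) -> None:
        self.default_visibility = default_visibility
        self._messages: List[Message] = []

    def put(self, body: str) -> None:
        self._messages.append(Message(str(uuid.uuid4()), body))

    def get(self, now: float, visibility: Optional[float] = None) -> Optional[Message]:
        """Step 1: hide the first visible message and hand out a fresh receipt."""
        timeout = self.default_visibility if visibility is None else visibility
        for msg in self._messages:
            if msg.visible_at <= now:
                msg.visible_at = now + timeout
                msg.pop_receipt = str(uuid.uuid4())
                msg.dequeue_count += 1
                return replace(msg)  # a snapshot, so later receipts don't leak into it
        return None

    def peek(self, now: float) -> Optional[str]:
        """Return a visible body without hiding it; there is no receipt to delete with."""
        for msg in self._messages:
            if msg.visible_at <= now:
                return msg.body
        return None

    def delete(self, msg: Message, now: float) -> bool:
        """Step 2: succeeds only with the latest receipt, inside the timeout."""
        for stored in self._messages:
            if (stored.message_id == msg.message_id
                    and stored.pop_receipt == msg.pop_receipt
                    and now < stored.visible_at):
                self._messages.remove(stored)
                return True
        return False


# Worker A dequeues at t=0 and crashes; the message reappears after 30 s.
q = FakeQueue(default_visibility=30)
q.put("order-1001")
first = q.get(now=0)
second = q.get(now=31)             # worker B receives the same order again
print(q.delete(first, now=32))     # stale receipt: False
print(q.delete(second, now=32))    # current receipt: True
```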

Note that for the “order processing” example that I mentioned, all of these issues can easily be addressed (which may not be the case for other types of applications).
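For the order-processing example, duplicate and out-of-order delivery are usually handled by making the worker idempotent. A minimal sketch, assuming each message carries an order ID and that a durable record of completed orders exists; here a Python set stands in for that record (in a real application it might be a table keyed by order ID), and `process_orders` is a hypothetical name:

```python
from typing import Callable, Iterable, Set


def process_orders(deliveries: Iterable[str], handle: Callable[[str], None],
                   processed: Set[str]) -> int:
    """Handle each order ID at most once, in whatever order it arrives.

    `processed` stands in for a durable record of completed orders.
    Returns how many deliveries were actually handled.
    """
    handled = 0
    for order_id in deliveries:
        if order_id in processed:
            continue  # duplicate delivery: the work is done, just delete the message
        handle(order_id)
        processed.add(order_id)  # record completion only after handling succeeds
        handled += 1
    return handled
```

If a worker crashes between `handle()` and recording the ID, the order is handled again on redelivery, so `handle()` itself should tolerate repeats (for example, by upserting rather than inserting).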

For a more detailed look at Azure queues, see the Windows Azure Queue: Programming Queue Storage whitepaper available here: http://www.microsoft.com/windowsazure/whitepapers/.

How do I get access to a Windows Azure Queue?

To get access to a Windows Azure Queue, you just need a Windows Azure storage account. Instructions for creating a storage account are in this earlier post (in the How do I create a storage account? section).

How do I access a Windows Azure Queue from PHP?

In the attached script, I used the Windows Azure SDK for PHP to create and manage Windows Azure queues. I found the API to be intuitive and easy to use. Here’s a list of the methods available on the Microsoft_WindowsAzure_Storage_Queue class (note that API examples are available here, and complete documentation is available as part of the SDK download):

__construct([string $host = Microsoft_WindowsAzure_Storage::URL_DEV_QUEUE], [string $accountName = Microsoft_WindowsAzure_SharedKeyCredentials::DEVSTORE_ACCOUNT], [string $accountKey = Microsoft_WindowsAzure_SharedKeyCredentials::DEVSTORE_KEY], [boolean $usePathStyleUri = false], [Microsoft_WindowsAzure_RetryPolicy $retryPolicy = null])

clearMessages([string $queueName = ''])

createQueue([string $queueName = ''], [array $metadata = array()])

deleteMessage([string $queueName = ''], Microsoft_WindowsAzure_Storage_QueueMessage $message)

deleteQueue([string $queueName = ''])

getErrorMessage($response, [string $alternativeError = 'Unknown error.'])

getMessages([string $queueName = ''], [string $numOfMessages = 1], [int $visibilityTimeout = null], [string $peek = false])

getQueue([string $queueName = ''])

getQueueMetadata([string $queueName = ''])

isValidQueueName([string $queueName = ''])

listQueues([string $prefix = null], [int $maxResults = null], [string $marker = null], [int $currentResultCount = 0])

peekMessages([string $queueName = ''], [string $numOfMessages = 1])

putMessage([string $queueName = ''], [string $message = ''], [int $ttl = null])

queueExists([string $queueName = ''])

setQueueMetadata([string $queueName = ''], [array $metadata = array()])

See the attached script for examples of using almost all of these methods.

If you have used a cloud-based queue before, I don’t think you will find any “gotchas” in this API, but here are the things that stood out for me:

Queue names must be valid DNS names: http://msdn.microsoft.com/en-us/library/dd179349.aspx
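Queue names can be checked client-side before calling createQueue(). The sketch below encodes the naming rules as I understand them from the linked MSDN page (3 to 63 characters; lowercase letters, digits, and hyphens only; must start and end with a letter or digit; no consecutive hyphens). It is an illustration, and the SDK's own isValidQueueName() may differ in detail:

```python
import re

# Letter/digit first and last; hyphens allowed only when not followed by another hyphen.
_QUEUE_NAME = re.compile(r"[a-z0-9](?:[a-z0-9]|-(?!-))*[a-z0-9]")


def is_valid_queue_name(name: str) -> bool:
    """Return True if `name` satisfies the queue naming rules sketched above."""
    return 3 <= len(name) <= 63 and _QUEUE_NAME.fullmatch(name) is not None
```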

The default time-to-live (TTL) for a message is 7 days.

The default visibility timeout for a message is 30 seconds. The maximum is 2 hours.
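When wrapping getMessages() and putMessage(), these limits can be captured as constants so that bad values fail before the request is sent. A hedged sketch using the values stated in this post (7-day default TTL, 30-second default and 2-hour maximum visibility timeout); the function name is hypothetical, and rejecting a zero or negative visibility timeout is an assumption of this sketch:

```python
from typing import Optional, Tuple

DEFAULT_TTL_SECONDS = 7 * 24 * 60 * 60   # 7 days
DEFAULT_VISIBILITY_SECONDS = 30
MAX_VISIBILITY_SECONDS = 2 * 60 * 60     # 2 hours


def resolve_message_options(ttl: Optional[int] = None,
                            visibility: Optional[int] = None) -> Tuple[int, int]:
    """Fill in the service defaults and reject out-of-range values."""
    ttl = DEFAULT_TTL_SECONDS if ttl is None else ttl
    visibility = DEFAULT_VISIBILITY_SECONDS if visibility is None else visibility
    if ttl <= 0:
        raise ValueError("TTL must be positive")
    # Assumption: the timeout must be at least 1 second.
    if not 0 < visibility <= MAX_VISIBILITY_SECONDS:
        raise ValueError(f"visibility timeout must be 1..{MAX_VISIBILITY_SECONDS} seconds")
    return ttl, visibility
```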

In order to delete a message, you must GET it first by calling getMessages() (and it must be deleted within its visibility timeout). If you retrieve a message with peekMessages(), the message will not be available for deletion.

The getQueue() method returns both queue metadata AND the approximate message count for the queue. (The getQueueMetadata() method only returns the queue metadata.)

There is no way to update individual queue metadata values; the setQueueMetadata() method overwrites all existing queue metadata.
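Because the metadata call replaces everything, adding one key without losing the others means reading first and writing back the merged result. A sketch against a hypothetical in-memory store that mimics the overwrite-only behavior of getQueueMetadata()/setQueueMetadata(); `InMemoryStore` and `update_metadata` are illustrative names, not SDK APIs:

```python
from typing import Dict


class InMemoryStore:
    """Hypothetical stand-in for the queue metadata calls."""

    def __init__(self) -> None:
        self._meta: Dict[str, Dict[str, str]] = {}

    def get_metadata(self, queue: str) -> Dict[str, str]:
        return dict(self._meta.get(queue, {}))

    def set_metadata(self, queue: str, metadata: Dict[str, str]) -> None:
        # Like setQueueMetadata(): replaces all existing metadata.
        self._meta[queue] = dict(metadata)


def update_metadata(store: InMemoryStore, queue: str,
                    changes: Dict[str, str]) -> Dict[str, str]:
    """Read-modify-write so existing keys survive an overwrite-only API."""
    merged = store.get_metadata(queue)
    merged.update(changes)
    store.set_metadata(queue, merged)
    return merged
```

This read-modify-write isn't atomic: two clients updating the same queue's metadata at the same time can still overwrite each other's changes.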

That’s it. If you create a storage account, download the attached script, and modify it by adding your storage account name and primary access key, you can get a better feel for how Windows Azure queues work by actually interacting with them.

Let me know what I missed and/or what else you’d like to know.

Attachment:

queuestorage.zip

SQL Azure, Codenames “Dallas” & “Houston” and OData

My (@rogerjenn) Test Drive Project “Houston” CTP1 with SQL Azure post of 7/21/2010 is a fully-illustrated tutorial for using the Project “Houston” UI with an existing SQL Azure database, such as Northwind. Following is a preview:

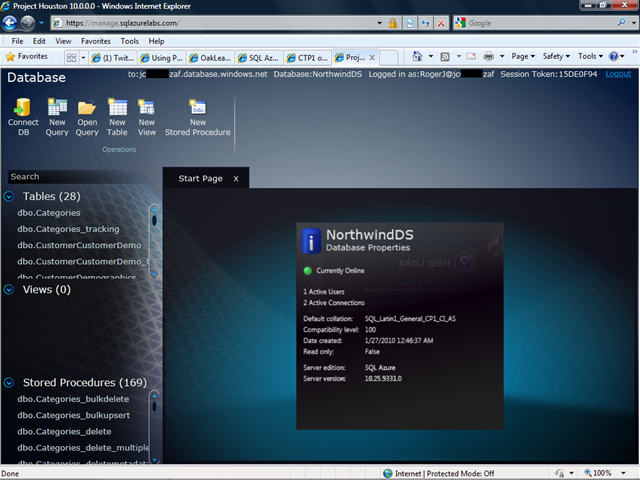

David Robinson announced CTP1 of Microsoft® Project Code-Named “Houston” now available in a post to the SQL Azure Team blog of 7/21/2010. Following is a quick test drive of the app with a NorthwindDS database generated by SQL Azure Data Sync.

1. Open the Project “Houston” connect dialog at https://manage.sqlazurelabs.com/.

2. Type the full server name plus the database.windows.net suffix, the database name, the login name in AdminName@servername format, and the password.

3. Click Connect to open the main Project “Houston” Start Page:

You create new database objects with the Start Page active or by closing other open page(s) to activate Database mode and display the Operations group.

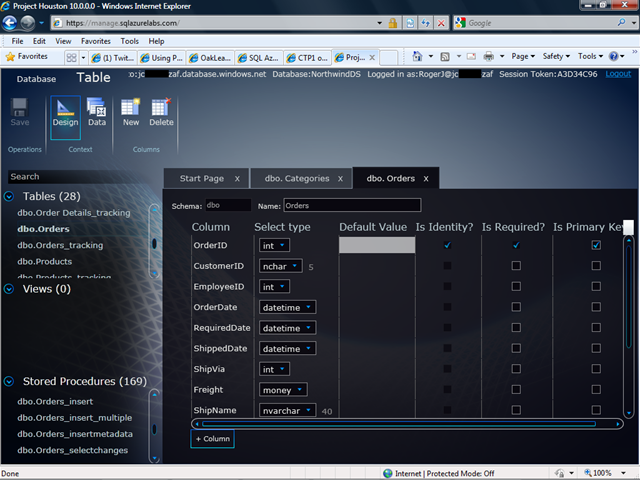

4. Scroll to and select a table to change to table design mode:

The selected dbo.Orders table was created by the SQL Azure Data Sync application, which SQL Azure Labs also hosts.

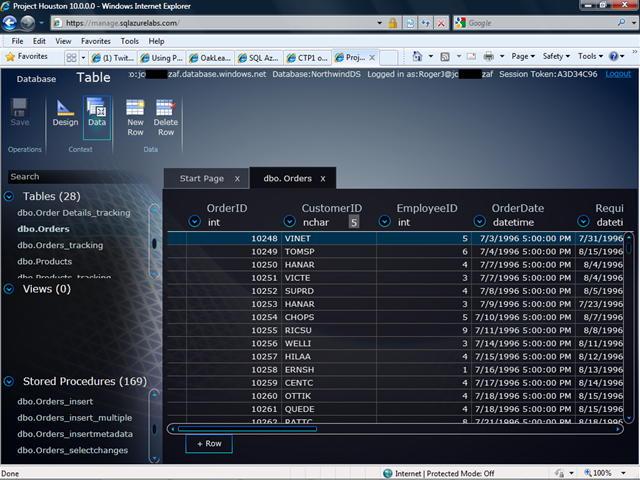

5. Click the Context group’s Data button to display a data grid:

Double-click a cell to edit it or select a row and click the Data group’s Delete Row button to delete it. Click + Row or the New Row button to insert a row. …
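The connect dialog's fields in step 2 follow a fixed pattern: the fully qualified server name ends in database.windows.net, and the login takes the AdminName@servername form. A tiny hypothetical helper for preparing those values, accepting either the short or the fully qualified server name (the server and login names in any example are placeholders):

```python
from typing import Dict

SUFFIX = ".database.windows.net"


def houston_connect_fields(server: str, database: str, admin: str) -> Dict[str, str]:
    """Values for the connect dialog; `server` may be short or fully qualified."""
    short = server[: -len(SUFFIX)] if server.lower().endswith(SUFFIX) else server
    return {
        "server": short + SUFFIX,      # full server name with the required suffix
        "database": database,
        "login": f"{admin}@{short}",   # AdminName@servername format
    }
```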

The post continues with descriptions of editing stored procedures and creating a new view.

David Robinson announced CTP1 of Microsoft® Project Code-Named “Houston” now available in a post to the SQL Azure Team blog of 7/21/2010:

At PDC ’09, I showed a glimpse of a new Silverlight tool we were working on to allow you to develop and manage your SQL Azure databases. We showed updated versions of this at MIX’10 and TechEd North America 2010 and promised a CTP this summer. Looking out the window, the sun is shining in Redmond, summer is here and so is the first CTP of Microsoft® Project Code-Named “Houston”.

What exactly is “Houston”?

Microsoft® Project Code-Named “Houston” is a lightweight and easy to use database management tool for SQL Azure databases. It is designed specifically for Web developers and other technology professionals seeking a straightforward solution to quickly develop, deploy, and manage their data-driven applications in the cloud. Project “Houston” provides a web-based database management tool for basic database management tasks like authoring and executing queries, designing and editing a database schema, and editing table data.

How do I get started?

For this initial CTP, you can access “Houston” from SQL Azure Labs. You also need to make sure that you check the “Allow Microsoft Services” checkbox in your firewall to allow “Houston” to communicate with your databases. You can learn more on the SQL Azure firewall here.

We have also put together some starter videos for you. Those videos are as follows:

- Video – Tables - This video demonstrates how to use Project “Houston” to create and modify a table in an existing SQL Server database.

- Video – Queries - This video describes how to use Project “Houston” to create, modify, execute, save, and open a Transact-SQL query.

- Video – Views - This video shows how to use Project “Houston” to create, select, and modify views.

- Video – Stored procedures - This video shows how to use Project “Houston” to create, select, and modify stored procedures. …

Dave continues with a list of known issues.

Liam Cavanagh (@liamca) offers a slightly different scenario in his Using Project "Houston" to help with SQL Azure Data Sync Management post of 7/21/2010 to the Microsoft Sync Framework blog:

Today the SQL Azure team released CTP1 of Microsoft® Project Code-Named “Houston” on SQL Azure Labs. This is a new web based tool hosted in Windows Azure that can be used to manage your SQL Azure database.

In the past, the primary mechanism for managing SQL Azure has been SQL Server Management Studio. For me, one of the biggest challenges of using SQL Server Management Studio was when I wanted to work with a new customer on the Data Sync Service for SQL Azure. If this customer wanted to try the Data Sync Service, they would have to install a copy of SQL Server Management Studio to get started. Now with Microsoft® Project Code-Named “Houston”, people can go directly to this web-based management tool and start creating their SQL Azure databases without installing anything. Once they have created their databases, they can launch the Data Sync Service to start synchronizing that database to one or more SQL Azure databases around the world.

The other big benefit I find with Microsoft® Project Code-Named “Houston” is performance. Since it is hosted in the Windows Azure data centers, the latency (the time to communicate back and forth) with my SQL Azure database is much lower than with SQL Server Management Studio, which in many cases is much farther away from my SQL Azure database.

If you would like to learn more about Microsoft® Project Code-Named “Houston”, please check out the SQL Azure blog.

Congratulations to the team on this CTP release! Definitely a very useful management tool.

Shayne Burgess describes Deploying an OData Service in Windows Azure in a 7/20/2010 post to the Astoria Team blog:

Windows Azure and SQL Azure are the new Cloud service products from Microsoft. In this blog post, I am going to show you how you can take a database that is hosted in SQL Azure and expose it as OData in a rich way using WCF Data Services and Windows Azure.

This walk-through requires that you have Visual Studio 2010 and both a Windows Azure and SQL Azure account.

Step 1: Configure the Database in SQL Azure

SQL Azure provides a great way to host your database in the cloud. I won’t spend a lot of time explaining how to use SQL Azure, as a number of other blogs have covered this in great detail. I have used the SQL Azure developer portal along with SQL Server Management Studio to create a Northwind database on my SQL Azure account that I will later expose via OData. The key to this step is that you must have created your database in SQL Azure, and you need to select the “Allow Microsoft Services access to this server” check box in the firewall settings on the SQL Azure developer portal, as shown in the screen capture below; this allows your service in Windows Azure to access your database.

When creating your SQL Azure server, carefully consider the server location you choose. In my case, I have selected the North Central US location for my SQL Azure server. When you later choose a Windows Azure location to deploy your Data Service to, you will probably want to choose the same location to reduce the latency between the Data Service and the database.

Shayne continues with the following detailed, illustrated steps:

- Step 2: Create the Data Service

- Step 3: Deploy the Data Service

- Step 4: Move the Service to a Production Server

and concludes

This is a fully functioning WCF Data Service that fully supports the OData protocol. Check out the Consumers page of the odata.org website to see a list of all the client applications and client libraries that you can now target your web service with.

AppFabric: Access Control and Service Bus

Alex James (@adjames) continues his OData authentication series with Authentication – Part 7 – Forms Authentication of 7/21/2010:

Our goal in this post is to re-use the Forms Authentication already in a website to secure a new Data Service.

To bootstrap this we need a website that uses Forms Auth.

Turns out the MVC Music Store Sample is perfect for our purposes because:

- It uses Forms Authentication, for example when you purchase an album.

- It has an Entity Framework model that is clearly separated into two types of entities:

  - Those that anyone should be able to browse (Albums, Artists, Genres).

  - Those that are more sensitive (Orders, OrderDetails, Carts).

The rest of this post assumes you’ve downloaded and installed the MVC Music Store sample.

Enabling Forms Authentication:

The MVC Music Store sample already has Forms Authentication enabled in the web.config like this:

```xml
<authentication mode="Forms">
  <forms loginUrl="~/Account/LogOn" timeout="2880" />
</authentication>
```

With this in place any services we add to this application will also be protected.

Adding a Music Data Service:

If you double click the StoreDB.edmx file inside the Models folder you’ll see something like this:

This is what we want to expose, so the first step is to click ‘Add New Item’ and then select WCF Data Service:

Next modify your MusicStoreService to look like this:

```csharp
using System.Data.Services;          // DataService<T>, EntitySetRights
using System.Data.Services.Common;   // DataServiceProtocolVersion
using MvcMusicStore.Models;          // MusicStoreEntities in the MVC Music Store sample

public class MusicStoreService : DataService<MusicStoreEntities>
{
    // This method is called only once to initialize service-wide policies.
    public static void InitializeService(DataServiceConfiguration config)
    {
        config.SetEntitySetAccessRule("Carts", EntitySetRights.None);
        config.SetEntitySetAccessRule("OrderDetails", EntitySetRights.ReadSingle);
        config.SetEntitySetAccessRule("*", EntitySetRights.AllRead);
        config.SetEntitySetPageSize("*", 50);
        config.DataServiceBehavior.MaxProtocolVersion =
            DataServiceProtocolVersion.V2;
    }
}
```

The page size is there to enforce Server-Driven Paging, which is an OData best practice; we don’t like to show samples that skip this… :)

Then the three EntitySetAccessRules in turn:

- Hide the Carts entity set – our service shouldn’t expose it.

- Allow OrderDetails to be retrieved by key, but not queried arbitrarily.

- Allow all other sets to be queried but not modified; in this case we want the service to be read-only.
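Because of the page size of 50, a query against a large set returns at most 50 entries plus a “next” link (carrying a $skiptoken) that points at the following page, so clients have to follow those links to read the whole set. A sketch of that client loop, assuming a hypothetical `fetch(url)` function that returns one page's entries and its next link (or None on the last page); parsing the Atom or JSON response is left out:

```python
from typing import Callable, Dict, Iterator, List, Optional, Tuple

# One page of a feed: its entries plus the URL of the next page (None on the last page).
Page = Tuple[List[Dict], Optional[str]]


def iterate_feed(first_url: str, fetch: Callable[[str], Page]) -> Iterator[Dict]:
    """Yield every entry in a server-paged feed by following "next" links."""
    url: Optional[str] = first_url
    while url is not None:
        entries, url = fetch(url)
        yield from entries
```

Following the link the server returns, rather than computing $skip values on the client, keeps the client correct even if the server changes its page size.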

Next we need to secure our ‘sensitive data’, which means making sure only appropriate people can see Orders and OrderDetails, by adding two QueryInterceptors to our MusicStoreService:

```csharp
// Add these members to the MusicStoreService class. They need:
// using System; using System.Data.Services; using System.Linq.Expressions; using System.Web;

[QueryInterceptor("Orders")]
public Expression<Func<Order, bool>> OrdersFilter()
{
    if (!HttpContext.Current.Request.IsAuthenticated)
        return (Order o) => false;

    var username = HttpContext.Current.User.Identity.Name;
    if (username == "Administrator")
        return (Order o) => true;
    else
        return (Order o) => o.Username == username;
}

// Needs a distinct name: C# methods can't differ only by return type.
[QueryInterceptor("OrderDetails")]
public Expression<Func<OrderDetail, bool>> OrderDetailsFilter()
{
    if (!HttpContext.Current.Request.IsAuthenticated)
        return (OrderDetail od) => false;

    var username = HttpContext.Current.User.Identity.Name;
    if (username == "Administrator")
        return (OrderDetail od) => true;
    else
        return (OrderDetail od) => od.Order.Username == username;
}
```

These interceptors filter out all Orders and OrderDetails if the request is unauthenticated.

They allow the administrator to see all Orders and OrderDetails, but everyone else can only see Orders / OrderDetails that they created.

That’s it - our service is ready to go.

NOTE: if you have a read-write service and you want to authorize updates you need ChangeInterceptors.
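The interceptor logic reduces to choosing one predicate per request, which the service then composes into every query against that set. As a language-neutral illustration (Python, not the WCF Data Services API; `Order`, `orders_filter`, and `visible_orders` are stand-ins), the same three-way choice and its effect on a list of orders:

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Order:
    order_id: int
    username: str


def orders_filter(user: Optional[str]) -> Callable[[Order], bool]:
    """Same three cases as OrdersFilter; `user` is None for unauthenticated requests."""
    if user is None:
        return lambda o: False            # anonymous: see nothing
    if user == "Administrator":
        return lambda o: True             # administrator: see everything
    return lambda o: o.username == user   # everyone else: only their own orders


def visible_orders(orders: List[Order], user: Optional[str]) -> List[Order]:
    keep = orders_filter(user)
    return [o for o in orders if keep(o)]
```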

Trying it out in the Browser:

The easiest way to log on is to add something to your cart and buy it:

This prompts you to log on or register:

The first time through you’ll need to register, which will also log you on, and then once you are logged on you’ll need to retry checking out.

This has the added advantage of testing our security: at the end of the checkout process you will be logged on as the user you just registered, so if you browse to your Data Service’s Orders feed you should see the order you just created:

If, however, you log off or restart the browser and try again, you’ll see an empty feed like this:

Perfect. Our query interceptors are working as intended.

This all works because Forms Authentication is essentially just an HttpModule, which sits under our Data Service and relies on the browser (or client) passing around a cookie once it has logged on.

By the time the request gets to the DataService, HttpContext.Current.User is set, which in turn means our query interceptors can enforce our custom authorization logic.

Enabling Active Clients:

In authentication terms, a browser is a passive client, because it basically does what it is told: a server can redirect it to a logon page, which can redirect it back again if logon succeeds; it can tell it to include a cookie in each request; and so on...

Often however it is active clients – things like custom applications and generic data browsers – that want to access the OData Service.

How do they authenticate?

They could mimic the browser by responding to redirects and programmatically posting the logon form to acquire the cookie. But no one wants to re-implement HTML form handling just to log on.

Thankfully there is a much easier way.

You can enable a standard authentication endpoint by adding this to your web.config:

```xml
<system.web.extensions>
  <scripting>
    <webServices>
      <authenticationService enabled="true" requireSSL="false"/>
    </webServices>
  </scripting>
</system.web.extensions>
```

The endpoint (Authentication_JSON_AppService.axd) makes it much easier to log on programmatically. …
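Alex’s own client code isn’t reproduced in this excerpt. As a rough, hypothetical illustration of the idea: an active client POSTs JSON credentials to the endpoint’s Login method and keeps the forms-authentication cookie it receives for its later OData requests. The site URL, port, and credentials are placeholders; the userName/password/createPersistentCookie fields are those of the ASP.NET authentication service’s Login method. Production use should set requireSSL="true" so the password isn’t sent in the clear.

```python
import http.cookiejar
import json
import urllib.request


def build_login_request(site: str, user: str, password: str) -> urllib.request.Request:
    """POST JSON credentials to the ASP.NET authentication service's Login method."""
    body = json.dumps({
        "userName": user,
        "password": password,
        "createPersistentCookie": False,
    }).encode("utf-8")
    return urllib.request.Request(
        site.rstrip("/") + "/Authentication_JSON_AppService.axd/Login",
        data=body,
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


def make_opener() -> tuple:
    """An opener whose cookie jar keeps the forms-auth cookie between requests."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


# Usage against a running site (placeholder URL, not executed here):
# opener, jar = make_opener()
# opener.open(build_login_request("http://localhost:1397", "bob", "secret"))
# orders = opener.open("http://localhost:1397/MusicStoreService.svc/Orders").read()
```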

Alex continues with detailed code for “Connecting from an Active Client.”

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Nicole Hemsoth’s GIS Applications Take to the Clouds post of 7/20/2010 to the HPC in the Clouds blog begins:

Geographic Information Systems (GIS) applications have been moving into the cloud with increased momentum but like other fields where software drives the business model, the move from complex software to the software as a service cloud model was slow to catch on due to the business of delivering software—not the technological constraints of doing so. This presents a new market for those previously locked out of GIS due to high startup costs and a potential paradigm shift for how this niche segment of the software industry does business from now on. The GIS example is representative not only of how large-scale application areas are tentatively approaching the cloud from a technological and business model standpoint, but how such shifts can begin to have an instant impact on the new user groups enabled by the delivery model.

The diverse field of Geographic Information Systems (GIS) has seen greater demand for its wide array of geospatial technologies, not only because the mainstream internet has allowed for a much richer, more inclusive way to map just about anything that is a noun via a sort of unconscious crowd-sourcing of metadata and geotagging, but because the applications for such data are growing. GIS technologies have traditionally been used in predictable ways in the forestry, civil engineering and development areas, as well as in natural resource exploration and the culling of general population data for use by agencies and organizations of all sizes and purposes. As mapping has become more detailed and the underlying technology behind it more powerful, GIS has been increasingly used in marketing and consumer trend identification—and you can let your imagination take you from there.

Technologically speaking, GIS is continually evolving at the same rapid pace that the internet and satellite imaging technologies are, although the business model for GIS has, until very recently, remained the same. Over the last few months there has been an increasing amount of news from the GIS community as it begins to adapt to the arrival of software as a service (SaaS) model. Major players in the industry like Esri are taking a proactive approach by making highly publicized partnerships with cloud vendors and cloud-enabled supercomputing sites like Rocky Mountain Supercomputing Centers.

Unlike some other areas, GIS has not taken off to the clouds until recently because of what appears to be business concerns versus those revolving around application functionality. Many common GIS applications will function in public cloud environments like EC2 and Microsoft Azure but there were a relatively small handful of GIS software companies, all of whom had been carrying along just fine on their traditional mode of software delivery. It was not until Esri, who just happens to be one of the biggest players in the GIS software industry, took the first highly publicized step to the cloud to make their technology available via an SaaS model. While this is not to say that some of the large-scale GIS applications won’t experience the typical performance hitch so common for other HPC-type applications in a cloud like EC2 or Azure, this is a tight software industry that has hitherto been resistant to change—and who can blame them, at least from a business standpoint?

As one might imagine, this has traditional GIS software companies up in arms—the model of GIS software delivery is changing and it is becoming clear to many that they either need to catch up with the times and make bold moves like Esri did (they are the big newsmakers on the GIS front in terms of shifting to the SaaS model)

On the user side, however, the move for GIS applications in the cloud and delivered via an SaaS model holds great potential, especially for those who found the barriers for entry to GIS too high in terms of costs to license and then to actually have the compute thrust to run such applications.

But, when it comes to the traditional delivery model of GIS software, the times they are a' changin'...

GIS on Microsoft’s Azure Cloud

Microsoft Azure seems to be among the favored platforms when it comes to GIS applications in the cloud, particularly for applications with less hefty requirements than what is being crunched at Rocky Mountain Supercomputing Centers in the course of their partnership with GIS software maker Esri. Take for example Microsoft’s case study of GIS software company Esri and its MapIt software that they thought might best empower users if delivered as a service. [Emphasis added.]

Esri has been providing software for GIS since 1969 and is among the leaders in the space, providing its handiwork for government, industry and academia across roughly 300,000 organizations. It recently partnered with Microsoft to expand “the reach of its GIS technology by offering a lightweight solution called MapIt that combines the software plus services to provide spatial analysis and visualization tools to users unfamiliar with GIS. Esri began offering MapIt as a cloud service with the Windows Azure platform” and customers are now able to deploy the software on Azure to store their information in the Microsoft SQL database. As Microsoft reports in its detailed case study of taking GIS to the cloud on Azure, “By lowering the cost and complexity of deploying GIS, Esri is reaching new markets and providing new and enhanced services to its existing customers.” …

Windows Azure Infrastructure

Cory Fowler’s (@SyntaxC4) Clearing the Skies around Windows Azure post of 7/21/2010 answers questions Cory encountered during his Windows Azure presentations at recent Canadian conferences and user group meetings:

As I tour around Canada doing talks on Windows Azure at conferences and user groups, I found that the biggest concern around the cloud computing paradigm shift is cost. Slightly confused as to how a key offering of a platform is a sticking point against its adoption, I asked for some feedback. Here are some findings:

- If I invest in developing against a particular platform, how simple is it to migrate to a different platform?

- If the project that is deployed to the cloud doesn’t require the grade of redundancy and scalability in the cloud, is it possible to host the project in house in order to save money?

- How is cost accumulated for billing?

There are a few things that I’ve taken away from some of the feedback that I’ve gathered at the talks that I’ve done thus far. There is definitely a lack in understanding of the billing model of Windows Azure, but there is also a misunderstanding of the advanced infrastructure that Microsoft has unveiled. In this post, I plan to clarify some of the misunderstandings about the Windows Azure Platform.

Will I get a Migration Migraine?

To speak towards the first point, there are always many things to consider when choosing one platform over another. Each platform will normally have different offerings to attract a market from their competition. It’s ultimately up to the Developer or Systems Architect to make the decision to leverage those offerings, which could potentially “lock” an application into a particular platform.

If you are worried about your application being “locked” into the Windows Azure platform, you can choose not to use the Storage Services and leverage a [potentially larger] database instead. It’s the cost that will ultimately steer you toward one platform or another, and furthermore toward the features of said platform. On the plus side, leveraging Storage Services over a database while using Windows Azure gives you the ability to limit the cost of your application, while increasing the response speed of data transactions (Storage Services can be partitioned and scaled across multiple nodes, and also have a ).

If you feel the need to pull your application off of the Azure Platform, you can export the data in the Storage Service into a CSV or Excel-formatted file using the Azure Storage Explorer. The only extra cost of migration would be writing a script to migrate the data into your next storage solution, which is slightly more work than migrating directly from one database to another, assuming there is a clear migration path between the two.
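The migration script mentioned above can be short. A sketch, assuming the export is a CSV file whose header row includes PartitionKey and RowKey, and using SQLite purely as a stand-in for whatever database comes next; `load_export` is a hypothetical name, and every column is stored as TEXT because CSV drops the original property types:

```python
import csv
import io
import sqlite3


def load_export(csv_text: str, conn: sqlite3.Connection, table: str = "entities") -> int:
    """Load a CSV export of table entities into a relational table; returns the row count."""
    reader = csv.DictReader(io.StringIO(csv_text))
    columns = reader.fieldnames or []
    if "PartitionKey" not in columns or "RowKey" not in columns:
        raise ValueError("export must include PartitionKey and RowKey columns")
    # PartitionKey + RowKey together identify an entity, so they become the primary key.
    col_sql = ", ".join(f'"{c}" TEXT' for c in columns)
    conn.execute(f'CREATE TABLE IF NOT EXISTS "{table}" ({col_sql}, '
                 'PRIMARY KEY ("PartitionKey", "RowKey"))')
    placeholders = ", ".join("?" for _ in columns)
    rows = [[row[c] for c in columns] for row in reader]
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', rows)
    conn.commit()
    return len(rows)
```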

Windows Azure Billing Overview

Considering that the billing model for Windows Azure is a little complex, I thought I’d take some time to break it down into sections and outline some common misconceptions in each section, so it can be better understood. When deploying to Windows Azure, you will be billed for the following categories: Compute Time, Storage Services, SQL Azure, AppFabric, and Data Transfers.
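These categories can be combined into a rough monthly estimate. Every rate below is a parameter to fill in from the current price list, not Microsoft’s actual pricing; the size multipliers assume, as in the 2010 price list, that larger instances are billed as multiples of a Small instance hour. Deployed instances accrue compute hours whether they are in production or staging, so staging instances belong in the instance count too. `Rates` and `monthly_estimate` are hypothetical names:

```python
from dataclasses import dataclass

# Assumption: each VM size is billed as a multiple of a Small compute hour.
SIZE_MULTIPLIER = {"Small": 1, "Medium": 2, "Large": 4, "ExtraLarge": 8}


@dataclass
class Rates:
    """Placeholder rates; fill these in from the current price list."""
    compute_hour: float           # per Small-instance hour
    storage_gb_month: float       # per GB stored for a month
    per_10k_transactions: float   # per 10,000 storage transactions
    egress_gb: float              # per GB transferred out
    sql_db_month: float           # per SQL Azure database per month
    appfabric_month: float = 0.0  # lump sum; AppFabric metering isn't modeled here


def monthly_estimate(r: Rates, size: str, instances: int, hours: float,
                     storage_gb: float, transactions: int, egress_gb: float,
                     sql_dbs: int = 0) -> float:
    """Rough monthly cost; include staging instances in `instances`."""
    compute = SIZE_MULTIPLIER[size] * instances * hours * r.compute_hour
    storage = storage_gb * r.storage_gb_month
    txns = transactions / 10_000 * r.per_10k_transactions
    transfer = egress_gb * r.egress_gb
    sql = sql_dbs * r.sql_db_month
    return round(compute + storage + txns + transfer + sql + r.appfabric_month, 2)
```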

Cory continues with the following topics:

- Calculating Costs on Azure Compute Hours: Compute Sizes

- Deployment and Staging Environment

- Calculating Azure Storage Space

- Calculating Azure Data Transaction Costs

- How to Forecast SQL Azure Charges

- Understanding AppFabric Billing

and concludes with:

Bringing it all together

Hopefully, you now have a better understanding of Windows Azure billing. I’ve covered all of the current items that would be billable in Windows Azure. There are however a couple of pricing models that are available as part of Introductory offers Microsoft has put forth to get people to try Windows Azure. If you are thinking of moving to this platform and would like to calculate some estimates of what your applications may cost to run in the cloud consult the TCO and ROI Calculator. To find out more information, check out the following resources:

Lori MacVittie (@lmacvittie) analyzes the similarities between SOA and PaaS in her Let’s Face It: PaaS is Just SOA for Platforms Without the Baggage post of 7/21/2010 to F5’s DevCentral blog:

At some point in the past few years SOA apparently became a four-letter word (as opposed to just a TLA that leaves a bad taste in your mouth), or folks are simply unwilling (or unable) to recognize the parallels between SOA and cloud computing.

This is mildly amusing given the heavy emphasis of services in all things now under the “cloud computing” moniker. Simeon Simeonov was compelled to pen an article for GigaOM on the evolution/migration of cloud computing toward PaaS after an experience playing around with some data from CrunchBase. He came to the conclusion that if only there were REST-based web services (note the use of the term “web services” here for later in the discussion) for both MongoDB and CrunchBase his life would have been a whole lot easier.

For an application developer, as opposed to an infrastructure developer, all these vestiges of decades-old operating system architecture add little value. In fact, they cause deployment and operational headaches—lots of them. If I had taken almost any other approach to the problem using the tools I’m familiar with, I would have performed HTTP operations against the REST-based web services interface for CrunchBase and then used HTTP to send the data to MongoDB. My code would have never operated against a file or any other OS-level construct directly.

[…]

Most assume that server virtualization as we know it today is a fundamental enabler of the cloud, but it is only a crutch we need until cloud-based application platforms mature to the point where applications are built and deployed without any reference to current notions of servers and operating systems.

-- Simeon Simeonov “The next reincarnation of cloud computing”

Now I’m certainly not going to disagree with Simeon on his point that REST-based web services for data sources would make life a whole lot easier for a whole lot of people. I’m not even going to disagree with his assertion that PaaS is where cloud is headed. What needs to be pointed out is that what he (and a lot of other people) are describing is essentially SOA minus the standards baggage. You’ve got the notion of abstraction in the maturation of platforms removing the need for developers to reference servers or operating systems (and thus files). You’ve got ubiquity in a standards-based transport protocol (HTTP) through which such services are consumed. You’ve got everything except the standards baggage. You know them, the real four-letter words of SOA: SOAP, WSDL, WSIL and, of course, the stars of the “we hate SOA show”, WS-everything.

But the underlying principles that were the foundation and the vision of SOA – abstraction of interface from implementation, standards-based communication channels, discrete chunks of reusable logic – are all present in Simeon’s description. If they are not spelled out they are certainly implied by his frustration with a required interaction with file system constructs, desiring instead some higher level abstracted interface through which the underlying implementation is obscured from view.

CLOUDS AREN’T CALLED “as a SERVICE” for NOTHING

Whether we’re talking about compute, storage, platform, or infrastructure as a service the operative word is service.

It’s a services-based model, a service-oriented model. It’s a service-oriented architecture that’s merely moved down the stack a bit, into the underlying and foundational technologies upon which applications are built. Instead of building business services we’re talking about building developer services – messaging services, data services, provisioning services. Services, services, and more services.

Move down the stack again and when we talk about devops and automation or cloud and orchestration we’re talking about leveraging services – whether RESTful or SOAPy – to codify operational and datacenter level processes as a means to shift the burden of managing infrastructure from people to technology. Infrastructure services that can be provisioned on-demand, that can be managed on-demand, that can apply policies on-demand. PaaS is no different. It’s about leveraging services instead of libraries or adapters or connectors. It’s about platforms – data, application, messaging – as a service.

And here’s where I’ll diverge from agreeing with Simeon, because it shouldn’t matter to PaaS how the underlying infrastructure is provisioned or managed, either. I agree that virtualization isn’t necessary to build a highly scalable, elastic and on-demand cloud computing environment. But whether that data service is running on bare metal, on a physical server supported by an operating system, or on a virtual server should not be the concern of the platform services. Whether elastic scalability of a RabbitMQ service is enabled via virtualization or not is irrelevant. It is exactly that level of abstraction that makes it possible to innovate at the next layer, for PaaS offerings to focus on platform services and not the underlying infrastructure, for developers to focus on application services and not the underlying platforms. Thus his musings on the migration of IaaS into PaaS ignore that for most people, “cloud” is essentially a step pyramid, with each “level” in that pyramid being founded upon a firm underlying layer that exposes itself as services.

SOA IS ALIVE and LIVING UNDER an ASSUMED NAME for ITS OWN PROTECTION

If we return to the early days of SOA you’ll find this is exactly the same prophetic message offered by proponents riding high on the “game changing” technology of that time.

SOA promised agility through abstraction, reuse through a services-oriented approach to composition, and relieving developers of the need to be concerned with how and where a service was implemented so they could focus instead on innovating new solutions. That’s the same thing that all the *aaS are trying to provide, and with many of the same promises. The “cloud” plays into the paradigm by introducing elastic scalability, multi-tenancy, and the notion of self-service for provisioning that brings the financial incentives to the table.

The only thing missing from the “as a service” paradigm is a plethora of standards and the bad taste they left in many a developer’s mouth. And it is that facet of SOA that is likely the impetus for refusing to say the “S” word in close proximity to cloud and *aaS. The conflict, the disagreement, the confusion, the difficulties, the lack of interoperability that nearly destroyed the interoperability designed in the first place: all the negatives associated with SOA come to the fore upon hearing that TLA instead of its underlying concepts and architectural premises. Premises which, if you look around hard enough, you’ll find still very much in use and successfully doing exactly what they promised to do.

Simeon himself does not appear to disagree with the SOA-aaS connection. In a Twitter conversation he said, “I still have scars from the early #SOA days. Shouldn't we start with something simpler for PaaS?”

To which I would now say “but we are.” After all, it wasn’t (and isn’t) SOA that was so darn complex; it was its myriad complex and often competing standards. A rose by any other name, and all that. We can refuse to use the acronym, but that doesn’t change the fact that the core principles we’re applying (successfully, I might add) are, in fact, service-oriented.

The CloudTweaks blog posted Cloud Computing Confidence Expected to Drive Economic Growth, According to Global Savvis Study on 7/21/2010:

More Than Two-Thirds of IT Decision Makers Say Cloud Computing Could Help Businesses Recover from Economic Downturn

ST. LOUIS, July 21 /PRNewswire-FirstCall/ — The flexibility of cloud computing could help organizations recover from the current global economic downturn, according to 68 percent of IT and business decision makers who participated in an annual study commissioned by Savvis, Inc. (Nasdaq: SVVS), a global leader in cloud infrastructure and hosted IT solutions for enterprises.

Vanson Bourne, an international research firm, surveyed more than 600 IT and business decision makers across the United States, United Kingdom and Singapore, revealing an underlying pressure to do more with less budget (the biggest issue facing organizations, cited by 54 percent of respondents) and demand for lower cost, more flexible IT provisioning.

The study, in its second year, reveals that IT decision makers are confident in cloud computing’s ability to deliver budget savings. Commercial and public sector respondents predict cloud use will decrease IT budgets by an average of 15 percent, with some respondents expecting savings of more than 40 percent.

“Flexibility and pay-as-you-go elasticity are driving many of our clients toward cloud computing,” said Bryan Doerr, chief technology officer at Savvis. “However, it’s important, especially for our large enterprise clients, to work with an IT provider that not only delivers cost savings, but also tightly integrates technologies, applications and infrastructure on a global scale.”

A lack of access to IT capacity is clearly identified as a barrier to business progress, with 76 percent of business decision makers reporting they have been prevented from developing or piloting projects due to the cost or constraints within IT. For 55 percent of respondents, this remains an issue.

Global research highlights indicate that:

- Confidence in cloud continues to grow – 96 percent of IT decision makers are as confident or more confident in cloud computing being enterprise ready now than they were in 2009.

- 70 percent of IT decision makers are using or plan to be using enterprise-class cloud within two years.

- Singapore is leading the shift to cloud, with 76 percent of responding organizations using cloud computing. The U.S. follows with 66 percent, with the U.K. at 57 percent.

- The ability to scale resources up and down in order to manage fluctuating business demand was the most cited benefit influencing cloud adoption in the U.S. (30 percent) and Singapore (42 percent). The top factor driving U.K. adoption is lower cost of total ownership (41 percent).

- Security concerns remain a key barrier to cloud adoption, with 52 percent of respondents who do not use cloud citing security of sensitive data as a concern. Yet 73 percent of all respondents want cloud providers to fully manage security or to fully manage security while allowing configuration change requests from the client.

- Seventy-nine percent of IT decision makers see cloud as a straightforward way to integrate with corporate systems.

A copy of the Rising to the Challenge IT decision maker report can be found at http://savvis.itleadership.info/. Details about the Savvis Symphony suite of cloud services can be found at http://www.savvisknowscloud.com. …

Windows Azure Platform Appliance

CXO Media announced on 7/21/2010 that CIO Perspectives San Francisco will be back in town on Wednesday, 9/22/2010 at the Grand Hyatt Downtown hotel:

Co-hosted by CIO magazine and its sister organization, the CIO Executive Council, this exclusive one-day executive event taps into a deep network of accomplished CIOs and business value experts to deliver timely, relevant and actionable ideas.

Register and reference promo code: GUEST

With an agenda planned by our CIO Perspectives Advisory Board of San Francisco area CIOs, this unique event packs maximum value into a day that features speakers and sessions such as:

- CIO Randy Spratt of McKesson Corp., on innovation and the collaborative IT organization

- CEO Patty Azzarello of Azzarello Group, on how CIOs can boost IT credibility with their CEOs and boards

- CIO magazine columnist Martha Heller, leading a workshop on the “CIO Paradox” and ways for senior IT leaders to resolve it

Cloud Security and Governance

Information Week::Analytics offered a new Cloud Security: When Perception Becomes Reality Cloud Computing Brief on 7/21/2010:

Cloud Security: When Perception Becomes Reality

Is the cloud insecure? Maybe, maybe not. But either way, that's not the first question IT should ask when deciding whether to host customer- or partner-facing systems on an IaaS or PaaS provider's network. Just the perception of insecurity, whether grounded in fact or not, can have a significant effect on a company’s decision whether to do business with a firm whose application or service runs on public cloud infrastructure. The fact is, security tops the list of cloud worries, according to every InformationWeek Analytics cloud survey we’ve deployed.

In our 2010 Cloud GRC Survey of 518 business technology professionals, for example, respondents who use or plan to use these services are more worried about the cloud leaking their information than they are about performance, maturity, vendor lock-in, provider viability or any other concern. Fears of losing their own or customers’ proprietary data also top the list of reasons cited by the 208 respondents who say they are not using these services.

Cloud Computing Events

No significant articles today.

Other Cloud Computing Platforms and Services

Audrey Watters’ Cassandra: Predicting the Future of NoSQL post of 7/21/2010 to the ReadWriteCloud blog reflects on the current status of relational and Entity-Attribute-Value databases:

When Twitter announced a few weeks ago that it would not be using Cassandra for tweet storage, there was a flurry of "I told you so's" from NoSQL skeptics. The folklorist in me found that rather amusing, as Cassandra in Greek mythology was cursed with the ability to see the future. But poor Cassandra could convince no one to believe her predictions, including a rather grim one about a Trojan Horse. The tech blogger in me figured, however, she should probably have a better grasp of Cassandra and NoSQL than just my knowledge of Homer.

SQL RDBMS BBQ

SQL and relational databases have long been the solution standard for data storage and retrieval. But new web applications that are being built today don't necessarily fit into this older schema. There are new demands on databases, not simply in terms of scalability, but also in terms of availability and unpredictability. In response, a number of new databases have been developed, loosely categorized as NoSQL.

Although the name sounds like a repudiation of SQL, it doesn't mean "no SQL never ever." It means "not only SQL," and offers a far more flexible and targeted response to database management.

NoSQL OMG

In a great summary of the NoSQL movement on Heroku's blog, Adam Wiggins gives the following examples of NoSQL usage:

- Frequently written, rarely read statistical data (for example, a web hit counter) should use an in-memory key/value store like Redis, or an update-in-place document store like MongoDB.

- Big Data (like weather stats or business analytics) will work best in a freeform, distributed db system like Hadoop.

- Binary assets (such as MP3s and PDFs) find a good home in a datastore that can serve directly to the user's browser, like Amazon S3.

- Transient data (like web sessions, locks, or short-term stats) should be kept in a transient datastore like Memcache.

- If you need to be able to replicate your data set to multiple locations (such as syncing a music database between a web app and a mobile device), you'll want the replication features of CouchDB.

- High availability apps, where minimizing downtime is critical, will find great utility in the automatically clustered, redundant setup of datastores like Cassandra and Riak.
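The "transient data" case is easy to picture in code. The following is a toy, in-process Python sketch of the Memcache-style behavior described above: each key carries an expiry time and silently disappears once it passes. The `TransientStore` class is hypothetical and single-process; an actual deployment would use Memcache or a similar networked store shared by every web server.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TransientStore:
    """Toy key/value store whose entries expire after a TTL, Memcache-style."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        # The clock is injectable so expiry can be tested without sleeping.
        self._clock = clock
        self._data: Dict[str, Tuple[Any, float]] = {}

    def set(self, key: str, value: Any, ttl_seconds: float) -> None:
        # Store the value alongside its absolute expiry time.
        self._data[key] = (value, self._clock() + ttl_seconds)

    def get(self, key: str) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            # Expired: evict lazily on read and behave as a cache miss.
            del self._data[key]
            return None
        return value

    def delete(self, key: str) -> None:
        # Explicit removal, e.g. when a user logs out or a lock is released.
        self._data.pop(key, None)
```

The design point is that losing an entry is normal behavior rather than a failure, which is exactly why sessions and short-lived locks fit here while production records do not.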

Oh, Grow Up

In a recent article on NoSQL in SD Times, Forrester analyst Mike Gualtieri says that NoSQL is "not a substitute for a database; it can augment a database. For transaction types of processing, you still need a database. You need integrity for those transactions. For storing other data, we don't need that consistency. NoSQL is a great way to store all that extra data." This cautious sort of approach - use NoSQL for "extra data" but use SQL for the real stuff - is pretty common.

It does allow people to take small steps towards NoSQL implementation. "Don't migrate your existing production data," suggests Wiggins, "instead, use one of these new datastores as a supplementary tool."

This hesitation is understandable; the legacy of relational databases is substantial. In an interview with ReadWriteWeb, Nati Shalom, CTO of GigaSpaces, spoke of the history of databases, with the financial sector being among the first to hit a wall, so to speak, with scalability. The rise of social networking and the read/write web, alongside cloud technologies, has vastly reshaped our needs for and demands on database architecture as well as information retrieval.

Shalom argues that the technology behind NoSQL is sound and will provide the solutions for addressing some of these issues. Nevertheless, he says, NoSQL still requires two things: better implementation and more maturity.

What the future holds for NoSQL and for database management remains to be seen. There's a Cassandra joke to be made there, I'm sure.

Lydia Leong analyzes Rackspace and OpenStack in a 7/21/2010 post to her Cloud Pundit blog:

Rackspace is open-sourcing its cloud software — Cloud Files and Cloud Servers — and merging its codebase and roadmap with NASA’s Nebula project (not to be confused with OpenNebula), in order to form a broader community project called OpenStack. This will be hypervisor-neutral, and initially supports Xen (which Rackspace uses) and KVM (which NASA uses), and there’s a fairly broad set of vendors who have committed to contributing to the stack or integrating with it.

While my colleagues and I intend to write a full-fledged research note on this, I feel like shooting from the hip on my blog, since the research note will take a while to get done.

I’ve said before that hosters have traditionally been integrators, not developers of technology, yet the cloud, with its strong emphasis on automation, and its status as an emerging technology without true turnkey solutions at this stage, has forced hosters into becoming developers.

I think the decision to open-source its cloud stack reinforces Rackspace’s market positioning as a services company, and not a software company — whereas many of its cloud competitors have defined themselves as software companies (Amazon, GoGrid, and Joyent, notably).

At the same time, open sourcing is not necessarily a way to software success. Rackspace has a whole host of new challenges that it will have to meet. First, it must ensure that the roadmap of the new project aligns sufficiently with its own needs, since it has decided that it will use the project’s public codebase for its own service. Second, it now has to manage and, just as importantly, lead an open-source community, getting useful commits from outside contributors and managing the commit process. (Rackspace and NASA have formed a board for governance of the project, on which they have multiple seats but are in the minority.) Third, as with all such things, there are potential code-quality issues, the impact of which becomes significantly magnified when running operations at massive scale.

In general, though, this move is indicative of the struggle that the hosting industry is going through right now. VMware’s price point is too high, it’ll become even higher for those who want to adopt “Redwood” (vCloud), and the initial vCloud release is not a true turnkey service provider solution. This is forcing everyone into looking at alternatives, which will potentially threaten VMware’s ability to dominate the future of cloud IaaS. The compelling value proposition of single pane of glass management for hybrid clouds is the key argument for having VMware both in the enterprise and in outsourced clouds; if the service providers don’t enthusiastically embrace this technology (something which is increasingly threatening), the single pane of glass management will go to a vendor other than VMware, probably someone hypervisor-neutral. Citrix, with its recent moves to be much more service provider friendly, is in a good position to benefit from this. So are hypervisor-neutral cloud management software vendors, like Cloud.com.

Lydia is an analyst at Gartner, where she covers Web hosting, colocation, content delivery networks, cloud computing, and other Internet infrastructure services.

Randy Bias (@randybias) asks Does OpenStack Change the Cloud Game? in this 7/20/2010 post to the Cloudscaling blog:

This week Rackspace Cloud, in conjunction with the NASA Nebula project, open sourced some of their Infrastructure-as-a-Service (IaaS) cloud software. This initiative, dubbed ‘OpenStack’, should have a dramatic impact on the current dynamics for building cloud computing infrastructure. Previously there have been two major camps: Amazon API and architecture compatible and VMware’s vCloud. Now there is a third alternative that could not only be a viable alternative to these two approaches, but more importantly, a fantastic option for service providers and telecommunications companies that face unique challenges.

Let’s dive in and I’ll explain.

Cloud Stack Evolution & ‘Camps’

Amazon Web Services (AWS) spawned a huge ecosystem of knock-offs, management systems, tools, and vendors. They include, but aren’t limited to:

- AWS API compatible ‘cloud stacks’ including Eucalyptus, OpenNebula, and others

- Cloud management systems for the AWS APIs and services such as RightScale and enStratus

- Cloud services layered on top of AWS services such as Jungle Disk (S3), Heroku (S3, EBS, EC2), and more

Previously, I wouldn’t have called the AWS ecosystem a ‘camp’ per se, but if you read our most recent article on Google’s foray into cloud storage, you know that it seems likely they will provide a 100% compatible version of S3 and EC2 this year. Imagine the impact of Google Compute & Storage with Amazon Web Services compatible APIs. Already the Google Storage API is nearly 100% compatible with S3.

Together, as a block, Amazon and Google could create a de facto duopoly for infrastructure clouds, which isn’t good for anyone. We need competition and more than two major players.

Up against the Amazon camp is VMware. In my article on Amazon vs. VMware last year I highlighted how these two businesses were on a collision course. Nothing has changed and competition is mounting between them. The reason is that telcos and service providers are under increasing threat from Amazon and soon Google. They need viable solutions and VMware is attempting to provide a competitive ecosystem.

The VMware cloud initiative, vCloud, is designed to arm enterprises and service providers to be competitive, but has not quite delivered yet. VMware has had a number of problems providing a full cloud stack. The software, now in beta and codenamed ‘Redwood’, has had significant delays in getting to market. Their strategy for cloud infrastructure does not appear unified outside of delivering compute virtualization.

VMware, as a business, understands they need to make their customers competitive. They have made a number of strategic open source acquisitions such as SpringSource, RabbitMQ, and Redis. There are also murmurings that they have some special projects inside that are ‘up the stack’ from their virtualization offerings. In total this shows that VMware ‘gets it’ in that they want to create a competitive ecosystem. While each of these is currently a point solution, there is yet to be a coherent story here. Can VMW build a consistent story and strategy around these disparate pieces? Only time will tell…

Besides these two camps, there is a long tail of clouds running various frameworks vying to establish themselves, such as Cloud.com’s CloudStack, 3Tera, Hexagrid, Abiquo, OpenNebula, etc. John Treadway recently posted a roundup describing all of the various cloud stacks out there.

OpenStack is stepping into the ring as a viable third camp. In particular, the OpenStack Storage solution is a clear contender to Amazon S3 & Google Storage. Many service providers and telcos have struggled to find a viable solution using commodity hardware that was price competitive. Suddenly, there is a viable proven solution.

Yet this is only storage. How can it create an effective ‘third camp’ alternative to Amazon and VMware for an entire cloud?

Lock-in, Architecture, Standards and The Truth about Interoperability

Interoperability for infrastructure clouds is poorly understood. Most believe that the problem lies in the on-disk image format (e.g. VMDK vs. VHD vs. qcow) or in the ‘hypervisor’ (although people don’t really understand what this means). The truth is that lock-in has little or nothing to do with disk formats or the hypervisor. Most on-disk image formats are simply representations of block storage (i.e. disk drives). That means converting between a VMware VMDK and a Citrix XenServer/Hyper-V VHD is relatively trivial. What about booting the converted disk image up on a new hypervisor? Guess what: since most hypervisors now rely on hardware virtualization (HVM) [1] using Intel-VT/AMD-V, most will run unmodified operating systems out of the box by default. No changes needed. The only downside is that the resulting performance is usually poor. Fixing that requires paravirtualization (PV) drivers in the converted image. What does that mean? After converting the image from one format to another, you simply have to install the PV drivers for the correct OS, a process that requires being methodical but is in no way technically challenging.
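The claim that image formats are mostly wrappers around block storage shows up in their signatures. This hypothetical Python helper guesses a disk image's container format from well-known magic bytes: qcow/qcow2 files begin with `QFI\xfb`, VMDK sparse extents begin with `KDMV`, and VHD files carry a 512-byte footer whose cookie is `conectix`. A fixed-size VHD is just the raw disk blocks followed by that footer. It is a sketch, not a complete format parser; for example, a VMDK text descriptor file would be reported as raw.

```python
import os

VHD_FOOTER_SIZE = 512


def detect_image_format(path: str) -> str:
    """Guess a virtual disk's container format from its magic bytes."""
    size = os.path.getsize(path)
    with open(path, "rb") as f:
        head = f.read(8)
        if head.startswith(b"QFI\xfb"):
            return "qcow"  # qcow and qcow2 share this magic
        if head.startswith(b"KDMV"):
            return "vmdk"  # VMDK sparse extent header
        if head.startswith(b"conectix"):
            return "vhd"   # dynamic VHDs keep a copy of the footer at offset 0
        if size >= VHD_FOOTER_SIZE:
            f.seek(size - VHD_FOOTER_SIZE)
            if f.read(8) == b"conectix":
                return "vhd"  # fixed VHD: raw blocks followed by a footer
    # No known signature: treat it as a plain dump of the block device.
    return "raw"
```

For the conversion itself, a tool such as `qemu-img convert` handles these formats (qemu-img names the VHD format `vpc`); the PV drivers for the guest OS still have to be installed afterward.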

Where is the lock-in then? If it’s not the hypervisor, what makes moving from one cloud to another so difficult? Simply put, it’s architectural differences. Every cloud chooses to do storage and networking differently.

For example, if you wanted to move a virtual machine from GoGrid to Amazon, converting the GoGrid image to an AMI is not difficult. Unfortunately, GoGrid uses two networks, a ‘frontend’ and a ‘backend’, and your cloud storage system is connected via the backend network. Every Amazon virtual server has only a single network interface. If your application assumes a separate backend network, then what happens when it moves to a cloud without one? Or vice versa? Similar architectural incompatibilities exist between Rackspace Cloud, Savvis, Terremark, Hosting.com, Joyent, and all of the others.
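One way to keep an application from hard-coding that assumption is to decide at startup which interface carries storage traffic. The Python sketch below is hypothetical: the interface names `eth0` and `eth1` and the `NetworkLayout` type are illustrative, and a real application would also have to point its storage endpoints at the chosen network. It simply falls back to the single public interface when no backend interface exists.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class NetworkLayout:
    public_iface: str
    storage_iface: str       # equals public_iface on single-NIC clouds
    dedicated_backend: bool


def resolve_layout(interfaces: Sequence[str], public: str = "eth0",
                   backend: str = "eth1") -> NetworkLayout:
    """Pick the NIC for storage traffic without assuming a backend NIC exists."""
    if public not in interfaces:
        raise ValueError(f"public interface {public!r} not found")
    if backend in interfaces:
        # Two-network cloud (GoGrid-style): keep storage traffic on the backend.
        return NetworkLayout(public, backend, True)
    # Single-NIC cloud (EC2-style): storage traffic shares the public interface.
    return NetworkLayout(public, public, False)
```

On Linux, the list of interface names could come from `socket.if_nameindex()`, which returns (index, name) pairs. The point is architectural rather than the code itself: the dependency on a backend network becomes an explicit, testable decision instead of an implicit assumption.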

The problem here, to be a bit more succinct, is that we need reference architectures for how infrastructure clouds are built. Amazon is one such reference. VMware’s vCloud is potentially another. Now there could be a truly open option with the gravity to gather community support.

More on The Third Camp

OpenStack’s potential to build a real community and a set of reference architectures drives towards greater standardization and interoperability. Perhaps more important than a cloud storage alternative is the possibility that a true OpenStack community reaches critical mass, with a level of developer contribution similar to that of Amazon or VMware. Then commercial and alternative offerings, such as Cloud.com, Hexagrid, and OpenNebula, can match their APIs and architectures to this set of reference architectures. Will it happen? It’s hard to say, but the opportunity is there. Rackspace and others are putting serious weight behind this initiative.

What This Means for Telcos and Service Providers

For telcos and SPs this means an alternative to VMware’s vCloud for commodity service offerings: a way to compete and operate at scale like Amazon and at a similar price point. Standardization through a similar reference architecture means greater compatibility between service provider clouds, which means greater benefit for customers and less lock-in, making them more desirable than the walled gardens. You don’t want to differentiate on the basic compute, storage, and network offering. You want this to be as standard and interoperable as possible, just like 3G networks, TCP/IP, and similar service provider technologies. By creating a common open platform that everyone uses, there is a better opportunity to facilitate wider adoption and create a competitive infrastructure service marketplace where providers differentiate in areas where they have an inherent advantage:

- Service and support

- Network & datacenter tie-ins (e.g. MPLS, hosting/co-lo)

- Bundled service offerings

- Differentiated value-added cloud services (VACS)

This is a game that all telcos and service providers understand. They have been playing it for the past 15+ years.

Conclusion

OpenStack, with a strong community behind it, should be an important tool for service providers and large telcos to compete at scale with the Amazons and Googles of this world. We believe OpenStack and the reference architecture(s) associated with it will allow service providers (SPs) to get their undifferentiated cloud offerings up and running early. For this reason, Cloudscaling will put real resources into supporting this effort. Getting basic cloud offerings up early means providers can then focus on support, services, bundling, and differentiated services as soon as possible, while embracing as large a customer base as possible, just as they compete on top of basic TCP/IP services today.

[1] Clearly, the market leader, Amazon, does not use HVM. They use PVM, a fully paravirtualized mode of Xen. However, even they seem to understand that HVM is the future. Their latest offering, designed for performance-sensitive HPC, uses HVM and supports unmodified operating systems. The reality is that the Intel-VT and AMD-V capabilities on the latest round of processors are incredibly fast and will only get faster. The battle is over. HVM and silicon won in this case.
