Windows Azure and Cloud Computing Posts for 6/22/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this daily series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in June 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

Dinesh Haridas recommends all Azure Drive users Update to Windows Azure Drive (Beta) in OS 1.4 in this 6/22/2010 post:

During internal testing we discovered an issue that might impact Windows Azure Drive (Beta) users under heavy load causing I/O errors. All users need to upgrade to OS 1.4 immediately, which has a fix for the problem.

Note, this issue does not apply to Windows Azure Blobs, Tables or Queues.

To ensure that your role is always upgraded to the most recent OS version, you can set the value of the osVersion attribute to “*” in the service configuration element. Here’s an example of how to specify the most recent OS version for your role.

<ServiceConfiguration serviceName="<service-name>" osVersion="*">
  <Role name="<role-name>">
    ….
  </Role>
</ServiceConfiguration>

If you would like to upgrade to OS 1.4 without being auto-updated beyond that, you must replace the “*” above and set the value of the osVersion attribute to “WA-GUEST-OS-1.4_201005-01”.

Additional details on configuring operating system versions and the service configuration are available here.
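As a rough illustration of the osVersion setting Dinesh describes, the following Python sketch rewrites the attribute on the root ServiceConfiguration element. The service and role names, the `SAMPLE` string and the `pin_os_version` helper are placeholders invented for this example, not part of any Azure tooling. A real .cscfg file may also declare an XML namespace that the excerpt above omits.

```python
import xml.etree.ElementTree as ET

# Hypothetical stand-in for a service configuration file; names are placeholders.
SAMPLE = """<ServiceConfiguration serviceName="my-service" osVersion="*">
  <Role name="my-role" />
</ServiceConfiguration>"""


def pin_os_version(config_xml, os_version):
    """Return the configuration XML with osVersion set to the given value."""
    root = ET.fromstring(config_xml)
    # osVersion is an attribute of the root ServiceConfiguration element.
    root.set("osVersion", os_version)
    return ET.tostring(root, encoding="unicode")


# Pin the guest OS to the 1.4 release named in the post instead of "*".
pinned = pin_os_version(SAMPLE, "WA-GUEST-OS-1.4_201005-01")
print(pinned)
```

Passing "*" instead of the version string would restore the auto-upgrade behavior.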

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

Gilberto J. Perera recommends that you Use Microsoft’s Codename Dallas to Power Your Next Big Idea in this 6/22/2010 post:

Do you have an idea for an application that uses census data to suggest ideal locations based on your tastes? How about an application that uses criminal reports to suggest the safest places to live? All of these applications would require access to datasets that are scattered throughout the world in proprietary databases that are not accessible to everyone. The end result is a service or application that only uses a fraction of the information available…not a very good service after all.

This is where Microsoft’s Codename “Dallas” service steps in.

“Microsoft Codename “Dallas” is a new service allowing developers and information workers to easily discover, purchase, and manage premium data subscriptions in the Windows Azure platform. Dallas is an information marketplace that brings data, imagery, and real-time web services from leading commercial data providers and authoritative public data sources together into a single location, under a unified provisioning and billing framework. Additionally, Dallas APIs allow developers and information workers to consume this premium content with virtually any platform, application or business workflow.”

Windows Azure is Microsoft’s cloud framework that allows developers to create and manage applications that run on the cloud. The combination of data that resides in the cloud and the ability for developers to create and manage applications that live on the cloud makes for scalable, fast, and cheaper deployments of applications like those that I mentioned at the start of this article. The Dallas Marketplace will bring together data providers (government, businesses, and other entities) with the developer/end-user. These entities will now be able to monetize their data and developers and end-users will have access to information that was not readily available in the past.

You will be able to use Dallas to:

- Find premium content to power apps for consumers and businesses

- Discover and license valuable data to improve existing applications or reports

- Bring disparate data sets together in innovative ways to gain new insight into business performance and processes

- Instantly and visually explore APIs across all content providers for blob, structured, and real-time web services

- Easily consume third party data inside Microsoft Office and SQL Server for rich reporting and analytics

Developers will be able to build applications that access multi-million dollar databases with subscription-based payment systems that make it affordable. However, trial subscriptions will allow developers to test content and develop applications without paying data royalties, so there would be no cost to develop and test. Developers would only pay should the application be made available to users who end up consuming the data. One of the benefits of using Dallas for application development revolves around the extensibility and availability of data regardless of platform.

Some examples of existing applications using the Azure platform are listed below:

Windows Experience Index Share Site

3M Case Study on Microsoft.com

For more case studies, visit the Windows Azure Case Study page.

Information workers will benefit the most when it comes to the use of Dallas. Users will now be able to work with data sets from disparate systems and will have access to information instantly within familiar applications like Excel and the new PowerPivot extension. Users will also be able to access data from SQL Servers, SQL Azure Databases, and other Microsoft Office assets.

Content partners, those actually providing the information, will greatly benefit from this tool. Not only will they be able to bring data from disparate systems into one comprehensive system, but they will also be able to monetize this content.

Gil continues with a descriptive list of “Databases Available Now.”

Wayne Walter Berry posts a description and link to a 00:08:35 TechNet Video: How Do I: Configure SQL Azure Security? in this 6/21/2010 post:

In this video, Max Adams introduces security within SQL Azure. The creation of Logins, Databases and Users is discussed and demonstrated. The views sys.sql_logins and sys.databases which allow the display of logins and databases from the master database are also discussed.

View the video here.

ElijahG posted the source code for OData For Umbraco Preview 0.92 to CodePlex on 6/17/2010:

OData for Umbraco is an OData provider built on WCF Data Services 4. It allows the content XML tree inside of Umbraco to be queried using LINQ. (Essentially it’s using LINQ to XML and LINQ to Objects.)

Service metadata is created at runtime, so no code generation is required. By default it’s secured using Basic HTTP and only has SELECT support.

- New Schema/Legacy schemas are supported. http://blog.leekelleher.com/2010/04/02/working-with-xslt-using-new-xml-schema-in-umbraco-4-1/

- Support For Document Inheritance

What’s Possible With OData and Umbraco

- PowerPivot for Excel 2010 (for business analysis)

Import and visualize data inside of Excel, and combine with other data sources. http://powerpivot.com/

- Service Contract Inside Of Visual Studio

Interact with data programmatically, using service references. This is commonly used when creating Silverlight/WPF applications.

- JQuery

WCF Data Services supports JSON/JSONP, so you can fetch data from the Umbraco tree using JQuery. Stephen Walther has an excellent tutorial: http://stephenwalther.com/blog/archive/2010/04/01/netflix-jquery-jsonp-and-odata.aspx

Due to the current preview the service only supports basic HTTP authentication. (Looking for feedback on how to implement this, either using .config files or compiled code).

Umbraco is an open source content management system (CMS) framework for ASP.NET. There’s an Umbraco Conference from 6/23 to 6/25/2010 going on in Copenhagen, Denmark.

MSDN Library gained a new Consuming OData Feeds from a Workflow topic recently:

This topic applies to Windows Workflow Foundation 4.

WCF Data Services is a component of the .NET Framework that enables you to create services that use the Open Data Protocol (OData) to expose and consume data over the Web or intranet by using the semantics of representational state transfer (REST). OData exposes data as resources that are addressable by URIs. Any application can interact with an OData-based data service if it can send an HTTP request and process the OData feed that a data service returns. In addition, WCF Data Services includes client libraries that provide a richer programming experience when you consume OData feeds from .NET Framework applications. This topic provides an overview of consuming an OData feed in a workflow with and without using the client libraries.

Using the Sample Northwind OData Service

The examples in this topic use the sample Northwind data service located at http://services.odata.org/Northwind/Northwind.svc/. This service is provided as part of the OData SDK and provides read-only access to the sample Northwind database. If write access is desired, or if a local WCF Data Service is desired, you can follow the steps of the WCF Data Services Quickstart to create a local OData service that provides access to the Northwind database. If you follow the quickstart, substitute the local URI for the one provided in the example code in this topic.

The topic continues with detailed instructions for Consuming an OData Feed Using the Client Libraries and Consuming an OData Feed Without Using the Client Libraries.
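Because OData exposes data as resources addressable by URIs, a client that skips the client libraries mostly needs to compose the right URI and send an HTTP GET. The Python sketch below only builds such a URI against the sample Northwind service named above and does not send a request. The `build_odata_url` helper is a hypothetical name for this illustration. `$filter` and `$top` are standard OData system query options, and the `Customers` entity set and `Country` property are assumed from the Northwind sample database.

```python
from urllib.parse import quote

# Sample service root quoted in the MSDN topic above.
NORTHWIND = "http://services.odata.org/Northwind/Northwind.svc/"


def build_odata_url(service_root, entity_set, **options):
    """Compose an OData resource URI; keyword names become $-prefixed query options."""
    base = service_root.rstrip("/") + "/" + entity_set
    if not options:
        return base
    # Percent-encode spaces but leave the quote and grouping characters OData uses.
    query = "&".join(
        "$" + name + "=" + quote(str(value), safe="'(),")
        for name, value in sorted(options.items())
    )
    return base + "?" + query


# Ask for the first five customers located in Germany.
url = build_odata_url(NORTHWIND, "Customers", filter="Country eq 'Germany'", top=5)
print(url)
```

Any HTTP client can then issue a GET for the resulting URI and process the feed that comes back. If you follow the quickstart and host the service locally, substitute the local URI for `NORTHWIND`.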

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Brian Loesgen wonders if you’re Missing your Azure AppFabric relay bindings? and helps you if you are in this 6/22/2010 post:

I’ve had a couple of people ask me about this in the past week, so I’m thinking this may be a common problem some of you are seeing. I also see two of my colleagues have blogged about it, so I thought I’d help spread the word.

The problem is you install the Azure AppFabric SDK, but the Service Bus bindings we all know and love are not visible. The cause is .NET 4.0 has its own machine.config and the SDK installer does not make the appropriate entries. This will be fixed in the next coming-soon release of the AppFabric SDK.

In the interim, you can read Kent Weare’s post here, and Wade Wegner’s post here to get past this.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

PRNewswire asserts “Mandarin Oriental embraces Microsoft's approach to cloud computing and the ‘connected traveler’” in its Mandarin Oriental Hotel Group Standardizes Architecture on Microsoft Platform From Desktop to Datacenter press release of 6/22/2010:

Mandarin Oriental Hotel Group today announced that it has standardized its IT architecture exclusively on the Microsoft platform for competitive advantage and to deliver a highly responsive "connected journey" experience for its guests. The announcement was made at the HITEC 2010 conference in Orlando, Fla.

Microsoft Corp.'s approach to cloud computing in hospitality was instrumental to Mandarin Oriental's decision to fully transition to the Microsoft platform. This is part of Mandarin Oriental's overarching strategy to build a highly responsive IT infrastructure with the applications and tools needed to provide personalized guest experiences worldwide.

"We are confident that Microsoft's 'connected travel' approach is the right one for Mandarin Oriental Hotel Group as we move to the cloud," said Nick Price, CIO/vice president, Information Systems, Mandarin Oriental Hotel Group. "After evaluating leading competitive offerings, we have standardized exclusively on Microsoft technologies, which provides us with a single, highly streamlined IT platform that spans the entire organization, from the mobile and digital consumer touch points up through mid- and back-office operations and beyond. We are working with Microsoft and our core ISVs as they enhance their applications to take advantage of Windows Azure so that we can further cost-cutting and consolidation." [Emphasis added.]

Mandarin Oriental has standardized exclusively on Microsoft technologies for more than 10 years and has evolved a single, highly streamlined IT platform that spans the entire organization, from the mobile and digital consumer touch points up through mid- and back-office operations and beyond.

Mandarin Oriental is virtualizing its hotel systems as a key step toward Windows Azure and the public cloud, with plans to implement its first fully virtualized hotel later this year. It has completed the server virtualization stage using Windows Server 2008 R2 with Hyper-V technology and has already realized significant savings, increased systems availability and a reduction of carbon emissions.

Further, Mandarin Oriental is working with PAR Springer-Miller Systems to deploy its next generation hospitality platform on Windows Azure. [Emphasis added.] …

The press release goes on with a description of “Microsoft's Hospitality in the Cloud Strategy.”

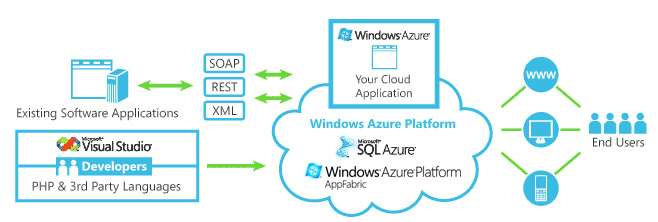

tbtechnet’s Developers: Why and How - All In the Cloud post of 6/22/2010 is:

I get asked many times by developers about Microsoft’s “cloud” efforts and technologies and where they might “plug in”.

I’ve found this rather simplistic response helps steer developers in the right direction. Developer options in the era of the “cloud”:

If you develop on-premise applications – no change here – you might be building super high power CAD/CAM, video, audio processing applications that need dedicated processors, lots of memory and very, very fast data read/write. The cloud might not be for you.

If you develop software-as-a-service in the cloud that needs lots of custom configurations and maybe specific hardware specs, consider:

- Working with a hosting company that has lots of experience on-boarding applications on to their hosting infrastructure

- Possibly a Microsoft Software+Services Incubation Center partner can help you launch your product as a service: http://www.microsoft.com/hosting/en/us/programs/incubationcenter.aspx

If you develop applications using familiar tools like Visual Studio and technologies like .NET and the applications might require storing relational and other types of data or the ability to connect to other applications running in the cloud or on premises.

- Windows Azure might be for you; see: http://go.microsoft.com/fwlink/?LinkId=158011

- Why Azure: http://www.msdev.com/Directory/SeriesDescription.aspx?CourseId=153

If you customize hosted collaboration solutions like Microsoft SharePoint 2010 then the Business Productivity Online Suite (BPOS) http://www.microsoft.com/online/business-productivity.mspx might be an opportunity for you to offer developmental services to customers.

I’m not sure if my reformatting of tb’s post makes more sense than the original.

<Return to section navigation list>

Windows Azure Infrastructure

John Treadway claims Gartner’s Cloud Numbers Don’t Add Up (again!) in this 6/22/2010 post:

Once again, Gartner has publicized entirely useless and (worse) misleading numbers on the global market for cloud computing services. Their numbers from last year were disputed by me (here, and here) and several others, yet they kept to their fatally flawed methodology for the 2010 update. This is despite at least one of the analysts whose name appears on the report privately agreeing with me that last year’s numbers were “rubbish” and that they were pressured into using this methodology.

The press release cited above indicates that “cloud services revenue is forecast to reach $68.3 billion in 2010…” My primary question is “Hey Gartner, what color is the sky in your world?” Or perhaps it should be “What have you been smoking?”

I should point out at this point that someone totally unrelated to this blog or my job recently handed me a copy of the complete Dataquest report on which the press release was based.

Here is a distribution of the 2010 numbers from their report:

Source: Gartner (May 2010)

Breaking It Down – Google AdWords Ain’t Cloud!!

As was the case last time, the most ludicrous inclusion in these numbers is what they include under the heading “Business Process Services.” Out of $68.3 billion total cloud services forecasted for 2010, Business Process Services is $57.9 billion, or 85%. The biggest chunk of this is $32 billion in online advertising. That’s right, Gartner thinks that the billions that advertisers spend with Google, Yahoo, and others for advertising their products and services should be counted as cloud spending.
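To make the proportions in John's argument concrete, here is a small Python sketch that reruns the arithmetic using only the three figures quoted above. The variable names are mine, and the "everything else" line is simply the total minus Business Process Services, not a number taken from the Gartner report.

```python
# Figures quoted in the post above, in billions of US dollars (2010 forecast).
total_cloud = 68.3
business_process_services = 57.9
online_advertising = 32.0

# Share of the headline total attributed to each bucket.
bps_share = business_process_services / total_cloud
ads_share = online_advertising / total_cloud

# What is left of the forecast once Business Process Services is removed.
remaining = total_cloud - business_process_services

print(f"Business Process Services share: {bps_share:.0%}")
print(f"Online advertising share: {ads_share:.0%}")
print(f"Everything else: ${remaining:.1f} billion")
```

By this reckoning online advertising alone is close to half of the headline number, and only about $10.4 billion sits outside the Business Process Services bucket.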

Okay, so I’m marketing mortgages using AdWords, and the money coming out of my marketing budget counts as cloud? Really? Is it just me, or is that totally insane?!?

Other things that count as cloud in the “Business Process Services” bucket?

- Cloud-based E-Commerce: this is a bit of a toss-up. The fees that eBay collects from merchants and the Amazon marketplace fees are included here. Is that really a cloud service, or just distribution??? I’d say it should be out. Though if you want to put it anywhere, count it as SaaS revenue.

- Payments: this includes the fees that PayPal charges, mostly for merchant card processing. It ain’t cloud!

- HR Services: the fees that ADP charges your company to process your checks and provide online access to your payroll records?? Yup, it’s cloud according to Gartner.

- Supply Management: they are not clear about this, but I imagine fees charged to manufacturers for inclusion in supplier search engines (e.g. Alibaba) might count here.

- Demand Management: could be advertising analytics apps and programs for managing online search spending, but again not clear

- Finance, Accounting, Admin: again, they don’t provide good examples here. This could include NetSuite, but wouldn’t that be in SaaS?

- Operations: no idea, but most business operations I can think of would not be “cloud.”

Frankly, I wish I knew what was going on over there (Gartner), but to me this report is so nonsensical that I just can’t wrap my head around it. …

John continues with his own analyses for 2010 and 2014 Cloud Services based on Gartner data (revised by CloudBzz.)

Jonathan Feldman offers Cloud ROI: A Grounded View, a June 2010 Cloud Computing Brief for InformationWeek::Analytics:

Any CEO can look good during an 18-month stint with a successful company in a hot sector. The real test comes over the long haul and during market fluctuations. That same extended perspective can be a sticking point for most return on investment and total cost of ownership calculations around newer technologies, including public cloud services. For example, those skeptical of the public cloud aren’t convinced that swapping capital investments for ongoing operating costs will benefit IT long term. How much of an operating cost? How long of an operating period?

Toddy Mladenov explains Affinity Groups in Windows Azure–How Those Work? in this 6/21/2010 post:

Affinity Groups is an interesting concept that Windows Azure introduced a while ago but it seems that people are confused about how it works. Here is a short explanation of how you can benefit from the Affinity Groups, and things that you need to be aware of when you use them.

Benefits of Using Affinity Group

As the name suggests, Affinity Groups are intended to group dependent Windows Azure services, and deploy those in one place if possible. This can have several benefits, among which are:

- Performance – If your services are dependent on each other it is good if those are co-located, so that transactions between them are executed faster. The best option is if all your services are deployed in the same physical cluster in the data center

- Lowering your bill – As you may already know bandwidth within the data center is free of charge (you are still charged for transactions though). Currently it is possible to co-locate your services if you choose the same sub-region and using Affinity Group is just another way to do that

How Affinity Groups Work?

Although the Affinity Groups give you the benefits outlined above, here are a few things you need to be aware of when using them:

- Specifying the same Affinity Group for two or more hosted services tells Windows Azure that those services should be co-located in the same Fabric stamp in the data center. Note that I used “should” instead of “must” because this is a best effort action, and cannot be guaranteed. If there is no space in the stamp where your first hosted service is deployed, the subsequent ones will be deployed in a different stamp in the same data center

- Specifying the same Affinity Group for hosted service and storage account means that those two will be co-located in the same data center. Right now this is equivalent to selecting the same sub-region for both

In essence, Affinity Groups give you a small advantage over the use of sub-regions: they instruct Windows Azure to make an effort to deploy your hosted services in the same Fabric stamp.
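The best-effort behavior Toddy describes can be pictured with a toy model. The Python sketch below is purely illustrative and is not how the Windows Azure Fabric is implemented. The `Stamp` class, the `place` function, the stamp capacities and the service names are all assumptions made up for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Stamp:
    """A hypothetical Fabric stamp with room for a fixed number of services."""
    name: str
    capacity: int
    services: list = field(default_factory=list)

    def has_room(self):
        return len(self.services) < self.capacity


def place(service, affinity_group, stamps, group_home):
    """Prefer the stamp already used by the affinity group, else fall back."""
    preferred = group_home.get(affinity_group)
    candidates = [] if preferred is None else [preferred]
    candidates += [s for s in stamps if s is not preferred]
    for stamp in candidates:
        if stamp.has_room():
            stamp.services.append(service)
            # The first placement decides which stamp the group prefers.
            group_home.setdefault(affinity_group, stamp)
            return stamp
    raise RuntimeError("no capacity left in this data center")


# One data center with two small stamps; all services share one affinity group.
stamps = [Stamp("stamp-1", capacity=2), Stamp("stamp-2", capacity=2)]
group_home = {}
placements = [
    place(name, "my-group", stamps, group_home).name
    for name in ("web", "worker", "reports")
]
print(placements)
```

In this model the first two services share a stamp, and the third spills over to another stamp in the same data center once the preferred one is full, which mirrors the "should, not must" wording above.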

Note (June 22nd 2010): I’ve done some edits to this post to unify the terminology with Windows Azure one, and clarify the billing benefit:

- Initially I wrote that transactions within the data center are free of charge, which is not completely true. What I meant is that bandwidth is free of charge; however, you are still charged for transactions

- Although I used “region” throughout the post I meant “sub-region” (for example US-North Central). In this context you should also consider “sub-region” and “data center” as interchangeable terms

- Choosing sub-region guarantees you that your service will be deployed in the selected sub-region. Choosing Affinity Group instructs Windows Azure Fabric to make a best effort to deploy your service in the same stamp as other services in the same Affinity Group but does not guarantee that

Hope those clarifications are not distracting you from the main purpose of the post – to explain what the Affinity Groups can be used for.

<Return to section navigation list>

Cloud Security and Governance

Salvatore Genovese claims “Concerns about Security are Often Cited as the No. 1 Barrier to Widespread Adoption of Cloud Solutions” in a preface to his Verizon Business Offers Tips on How Enterprises Can Secure the Cloud post of 6/21/2010 about a Verizon press release:

Although there is a growing awareness of the many benefits of cloud computing -- including on-demand provisioning that enables businesses to increase efficiency and control IT costs -- concerns about security are often cited as the No. 1 barrier to widespread adoption of cloud solutions.

According to industry experts, many enterprises are still grappling with concerns about data integrity, recovery and privacy, as well as regulatory compliance in a cloud environment.

At the Gartner Security & Risk Management Summit 2010 this week, Verizon Business will address security in the cloud by leaving the audience with some key tips to keep their data and networks safe:

-- Evaluate your goals. Before deciding to move your IT services to the cloud, understand the business benefits you want to achieve. Typical benefits include: reducing the time and effort to launch new applications, enabling IT to become more responsive to the needs of the business, and lowering capital expenditures.

After defining the business goals, perform a risk-benefit analysis to determine if a move into the cloud is appropriate for the business. A few questions to consider: What are the possible scenarios should the data be compromised? What processes would be jeopardized if the cloud service failed?

-- Perform due diligence. Once the business is committed to the cloud model, determine which deployment model - public, private or hybrid - best meets the business' requirements.

-- Choose wisely. When selecting a partner to deliver services via the cloud, select a partner with a heritage in both IT and security services. Verify that risk mitigation is part of the provider's security practice. Pick a service provider that can integrate IT, security and network services, as well as provide robust service-performance assurances.

Neutral third parties can provide guidance on picking a partner. The Cloud Security Alliance, which promotes the use of best practices for securing the cloud, offers a list of corporate members. For a blog entry on a list of questions to ask a prospective partner, click here.

-- To protect your data, take a good look at your provider. According to the Cloud Security Alliance, the top security threat associated with the cloud is data loss and leakage. As such, how well the provider protects sensitive data is paramount.

When evaluating a provider, analyze the company's ability to deliver the same types of controls that would usually be done in-house - physical security, logical security, encryption, change management and business continuity and disaster recovery. Also, verify that the provider engages in safe data handling with documented backup, availability and destruction procedures.

-- Consider a hybrid security model. Incorporate a mix of services delivered in-the-cloud and on premises. This can help allay data security and privacy concerns as well as leverage legacy investments.

-- Remember to comply! Investing in the cloud and focusing on security can all be for naught if compliance initiatives are not met. Additionally, many regulations, such as the PCI Data Security Standard, include best practices that enhance a company's security posture. Communicate relevant regulations to your cloud provider, and work with the provider to facilitate compliance.

"Cloud computing offers businesses so many tangible benefits that it's a shame to see security fears inhibiting adoption of the technology," said Peter Tippett, vice president of technology and innovation at Verizon Business. "While the security concerns are valid, the prescription to alleviate them exists. Simple security actions, such as performing due diligence and embracing risk management, have an enormous impact when done consistently."

<Return to section navigation list>

Cloud Computing Events

The Voices for Innovation blog claims Microsoft Shines at Gov 2.0 Expo in this 6/22/2010 e-mail message:

Microsoft, partners, and customers took full advantage of this year’s Gov 2.0 Expo to solidify Microsoft’s place as a key player in Government across areas such as cloud computing, interoperability, open data, geospatial tools, security, and privacy. With representation at more than 10 sessions, including keynotes by Microsoft General Counsel and Senior V.P. Brad Smith and Microsoft Researcher Danah Boyd, Microsoft and partners showcased their investments in public sector solutions and services that meet the mandate of transparency, efficiency, and cost containment.

The Gov 2.0 Expo was the second of three events making up the Gov 2.0 series that culminates in the Gov 2.0 Summit, September 7-8 in Washington, D.C.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Joe Panettieri explains Small Business: How Red Hat Will Attack Microsoft Stronghold in this 6/22/2010 post to the MSPMentor blog:

At first glance, Microsoft’s software portfolio — Windows, Office, Small Business Server and Exchange — still dominates the small business market. But Red Hat CEO Jim Whitehurst says his company has found a back door into the small business market. Perhaps surprisingly, it doesn’t really involve desktop Linux. Here’s how Whitehurst sees Red Hat gaining a foothold in the small business IT market.

I sat down with Whitehurst at the Red Hat Partner Summit in Boston on June 21. While most of our conversation focused on larger customer bases, Whitehurst certainly sees an opportunity for Red Hat to push far deeper into the small business market.

So, is Red Hat preparing a big desktop Linux push? Nope. Instead, Whitehurst sees cloud computing — everything from Google Apps to Amazon Web Services — as Red Hat’s doorway into small business. Whitehurst asserts that 90 percent of today’s clouds leverage Red Hat’s software. Moreover, he adds: Cloud computing can’t exist without open source.

Instead of building and hosting its own cloud platform, Red Hat will depend on partners and service providers to leverage Red Hat Enterprise Linux (RHEL), JBoss middleware and Red Hat Enterprise Virtualization (RHEV) for their cloud efforts. Plus, Red Hat will continue to promote an open architecture, allowing ISVs to mix and match their software with Red Hat’s own offerings, Whitehurst adds.

Of course, Microsoft has its own SMB cloud push — involving both Windows Azure and BPOS (Business Productivity Online Suite). And much of the recent Microsoft cloud effort involves a SaaS showdown with Google Apps.

I remain unconvinced that “Cloud computing can’t exist without open source.”

The VAR Guy plows the same ground in his Red Hat CEO: Cloud Can’t Exist Without Open Source post of 6/22/2010:

Red Hat CEO Jim Whitehurst (pictured) says open source can exist without cloud computing, but cloud computing can’t exist without open source. Whitehurst shared that nugget — and about a dozen other thoughts — during an interview with The VAR Guy at Red Hat Partner Summit in Boston. Here’s a recap of the conversation and a look at where Red Hat is heading next.

For the sake of fast blogging, all of the nuggets below are paraphrased. But they capture the essence of Whitehurst’s thoughts. Here we go…

1. Old IT models don’t scale: Twenty years ago, your best IT experience was at work. Now, the best IT experience occurs in the home and it’s often absolutely free, thanks to services like Google, Facebook and Twitter, Whitehurst says. As a result, CIOs and partners need to adjust their mindsets. Which brings us to point two…

2. Don’t sell functionality: CIOs don’t want features and functions. They’ve got enough of those. Instead, they want employees to “use what they want, when they want to” with subscription type payment models, Whitehurst says.

3. More Than Linux: Red Hat has the benefit of being the Kleenex brand of Linux, Whitehurst asserts. But Whitehurst wants partners and customers to remember that Red Hat has a broader product portfolio that features JBoss middleware and virtualization. He views Red Hat as an open architecture company, allowing customers and partners to mix and match Red Hat components with third-party software.

4. Red Hat vs. VMware: Some critics allege that Red Hat’s virtualization strategy is purely based on price benefits vs. VMware. But Whitehurst sees the strategy differently. On a performance front, Whitehurst says Red Hat Enterprise Virtualization (RHEV) beats VMware. But when it comes to management tools, Whitehurst concedes Red Hat is in catch-up mode.

Still, Whitehurst compares today’s Red Hat vs. VMware to the old Red Hat vs. Sun Solaris war. Initially, Red Hat beat Solaris on price. But over time, Red Hat’s Linux story gradually gained more and more management capabilities. The same trend will repeat itself with RHEV vs. VMware, Whitehurst asserts.

5. On Acquisition Rumors: Two sources tell The VAR Guy that Red Hat may be looking to acquire Groundwork Open Source. Whitehurst says Red Hat doesn’t comment on rumors. But he says Red Hat has ongoing business relationships with Groundwork and other systems management companies.

6. On A Rumored Business Intelligence Strategy: Reports in 2009 suggested that Red Hat was going to make deeper moves into the BI market. Whitehurst confirmed that Red Hat still has an investment in Jaspersoft, though Whitehurst says Red Hat also maintains close relationships with Pentaho and SAP, among other BI specialists.

7. On Red Hat in Small Businesses: Cloud computing represents Red Hat’s doorway into small businesses, Whitehurst says. He added that 90 percent of today’s clouds leverage Red Hat’s software.

8. On the Cloud Potentially Stealing Open Source’s Thunder: Whitehurst said that wasn’t the case. Pointing to Amazon Web Services and Google, Whitehurst asserted that the cloud can’t exist without open source. Of the major cloud strategies, only Windows Azure seems to be closed source, Whitehurst added.

9. On the database market: Red Hat will continue to work closely with a range of database partners rather than betting on one database, Whitehurst said. The reason: Databases are not commodities. No single database, he added, does everything great. In terms of raw numbers, Red Hat’s biggest database partner is Oracle. But Red Hat will continue to work closely with IBM DB2, Enterprise DB, Ingres, MySQL (now owned by Oracle) and other options, he added.

10. On emerging market opportunities: In recent quarters, oil and gas customers have increasingly landed on Red Hat’s top customer lists.

11. On Red Hat and the desktop Linux market: Red Hat will make “some” but “not a lot” of effort in the desktop market. Whitehurst says he wishes “Ubuntu all the luck in the world but I don’t know why anyone would pay for desktop Linux. The demand is there but how do you monetize it?”

Red Hat will continue to develop and promote its desktop Linux offering, but the far greater priority for Red Hat is a Virtual Desktop Infrastructure (VDI) push, Whitehurst said.

Next Moves

Red Hat is set to announce quarterly results tonight (June 23). The VAR Guy will be listening for more clues about the company’s business performance and strategy.

Still don’t buy it.

Michael Coté gives Automation as a Service from Opscode – Quick Analysis in this 6/22/2010 post to the Enterprise Irregulars blog:

This Monday, Opscode announced a second round of funding and the beta launch of their long-awaited IT Management as a service offering. There are two companies to watch in the automation space: Puppet Labs and Opscode. Both are doing innovative work to advance automation and configuration management; indeed, they tend to blur the distinction between those two categories so much that it’s easy to lump them together into simply “automation.”

We’ve covered Puppet Labs and their eponymous offering many times over the years here at RedMonk (coverage). Meanwhile, after a community forking, the competing Chef team has been quietly building their open source project and company, Opscode.

Model-driven automation

I’m not sure this space has a name, but I often refer to it as “next generation automation.” These systems start from the desire to make provisioning and ongoing configuration of IT easier and end up creating a model-driven, declarative system for managing the state of any given “service” in IT. One method of using this is to start with one small component and build up to larger services. (See the podcast and transcript of how Google uses Puppet for a more detailed explanation.)

The subtle but important distinction in all this is that the admin is focusing on building models rather than scripting the exact wing-dings to flip around on each server. Then, the wider utility is applied when the system applies those configurations at scale, is used to enforce the desired configuration (monitoring for rogue changes), and is integrated with other systems to provision or de-provision services as needed. It’s little wonder that the cloud-crowd and the dev/ops sub-set like these systems.

The conceit of doing things this way is that when you’re operating at scale and especially dynamically (whether virtualized or “cloud-ized”), other methods of automation are more tedious.
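To make the model-versus-script distinction concrete, here is a minimal Python sketch of the declarative idea. It is not Chef or Puppet code; the resource names, the state layout and the `plan` and `converge` helpers are all hypothetical, chosen only to show a model being compared with a server's actual state and the difference being applied.

```python
# Hypothetical illustration of model-driven (declarative) configuration.
# Not Chef or Puppet code: the resource names and state layout are invented.

def plan(desired, actual):
    """Return (resource, current, wanted) for every resource that has drifted."""
    actions = []
    for name, want in sorted(desired.items()):
        have = actual.get(name)
        if have != want:
            actions.append((name, have, want))
    return actions


def converge(desired, actual):
    """Apply the plan to a copy of `actual`; return (new_state, actions)."""
    actions = plan(desired, actual)
    new_state = dict(actual)
    for name, _have, want in actions:
        new_state[name] = want
    return new_state, actions


# The model: what the service should look like, not how to get there.
desired = {"package:nginx": "installed", "service:nginx": "running"}

# A server that has drifted: the package is present but the service is stopped.
actual = {"package:nginx": "installed", "service:nginx": "stopped"}

state, actions = converge(desired, actual)
for name, have, want in actions:
    print(f"{name}: {have} -> {want}")

# Converging a second time finds nothing left to change.
_, second_pass = converge(desired, state)
```

Because the model only states the end result, the same `converge` call works for a fresh server and for one that has drifted, and running it again on a compliant server is a no-op. That property is what makes enforcing a desired configuration at scale practical.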

Opscode’s Platform

I’ve long wanted to see as much of IT Management as possible be offered as a service, and I love seeing companies try it – the ill-fated klir, Paglo (now part of Citrix Online), Tivoli Live, Manage Engine (still in beta?), AccelOps, Service-now.com, just to name a few. At scale, IT Management offerings become a beast to manage themselves (just sit in a room full of enterprise-level service desk users to hear their complaints and feature requests) and the mechanics of SaaS delivery seem to offer hope to (a.) remove the problems of managing the management stack, (b.) allow for faster delivery of features, and (c.) lower the cost, if not make it more transparent.

While model-driven automation has proven to be exciting and useful in its own right, Opscode’s service offering is the net-new interesting thing. In short, the central hub of Opscode’s automation service is hosted “in the cloud,” local agents are installed on your network (or in your own cloud, as it were), and the various configuration models (Chef calls them “recipes”) are centrally managed. Splitting up a tiered management system between cloud and on-premise is somewhat interesting, but the more interesting parts come from figuring out how users can collaborate around the various configuration models and take advantage of the aggregate analytics.

The Collaborative IT Management Vision

There are several interesting tangents for the Opscode Platform and similar offerings – managing cloud-deployed applications, helping treat “infrastructure as code” to mend relations and (hopefully) boost productivity between development and operations, and the pure cost savings that next generation automation offers. For me, the most innovative potential is around what I like to call “collaborative IT management.”

When it comes to collaborative IT management the Opscode Platform has attractive potential…

Alex Williams reported Salesforce.com Heralds The Activity Stream - Chatter Comes out of Beta in this 6/22/2010 post to the ReadWriteCloud blog:

Salesforce.com is launching Chatter to its entire customer base today. The adoption means that Chatter will now be part of the entire Salesforce.com stack, available as a stand alone application and as a platform for application development.

Chatter is now integrated into the entire Salesforce.com product line, including its sales and support offerings, Force.com, and AppExchange, its marketplace for enterprise cloud computing offerings.

We hear a lot these days about Facebook and its connection to the enterprise. Salesforce.com seems to have imprinted Facebook into its DNA. That may infuriate many who see Facebook as something entirely different from an enterprise service. But for Salesforce.com, it's Facebook that we hear about over and over again in its briefings.

It's the activity stream that matters here. An activity stream that is also an application stream. That is the fast-moving trend. The ability for people to communicate effectively in the deep stream of data. We are in a time where machine communication is a must in order to organize and share information in the flow of a dynamic supply chain.

Sugar CRM has an activity stream. Success Factors recently acquired CubeTree for its contact-centered service built with an activity stream environment. The Enterprise 2.0 world has its share of companies with activity streams. Socialtext is moving its concept of the activity stream forward with its adoption of the Twitter Annotations spec. Socialcast, one of the earliest adopters of real-time activity streams, has been making headway with its service that combines real-time conversations with integration into back-end legacy applications.

Chatter has had its fair share of skeptics. But customers do speak for themselves. Going into the launch, Salesforce.com has 5,000 customers in private beta and more than 50 applications that have built Chatter into their product infrastructure. FedEx is using Chatter to manage logistics.

BMC is using the Chatter platform for a real-time feed designed for IT departments and with internal users.

For the launch, Salesforce.com has implemented the capability for anyone to create groups inside the Chatter environment. That's already a primary feature with services like Status.net and Yammer. People need to break out streams into smaller trickles that apply to their own group.

Salesforce.com has come to dominate the CRM category. But it has not created its own category in the market like a company such as Success Factors, which has a place on every employee's desktop. Success Factors has done that by positioning itself as an employee production service.

The company has a host of competitors, including Microsoft SharePoint and IBM Lotus collaboration services. But with Chatter, Salesforce.com is increasing its odds of being more universal, providing a real-time feed to employees across the organization.

David Linthicum asserts “Recent tests from the Energy Department highlight some of the areas where cloud computing is a poor fit” in his Where the cloud isn't the right tool for the job post of 6/22/2010 to InfoWorld’s Cloud Computing blog:

The U.S. Energy Department tested cloud providers on their ability to perform specific operations. "Early results from the Energy Department's Magellan cloud computing testbed suggest that commercially available clouds suffer in performance when operating Message Passing Interface (MPI) applications such as weather calculations, an official has said," according to this Federal Computer Week article.

MPI facilitates communications between processes and synchronizations between parallel processes. MPIs promote a discipline as well as a communication mechanism, making sure these programs remain in their specific domain and, thus, are easier to manage within a massively parallel environment. The Energy Department uses MPI-enabled applications for weather forecasting and for some chemistry research. Those doing the test stated that, while many commercial clouds give the "illusion of elasticity," there are logical and physical limits within cloud providers. …

This brings to light points I've been stressing for the last couple of years, including the fact that not all applications are right for the cloud. To that end, cloud providers are not optimized for specific types of applications, including MPI. However, this does not mean that clouds are slow and not scalable; they are just slow and not scalable when leveraged in a particular way.

Indeed, the Energy Department did find for computations that can be performed serially, such as genomics calculations, "there was little or no deterioration in performance." I suspect that with a bit of tuning on the provider's side, the MPI performance issues could be reduced as well. However, I doubt it could be eliminated completely, considering how public clouds are architected.
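One way to picture why tightly coupled MPI jobs and independent jobs behave so differently is to count the messages each one needs. The Python sketch below is a toy model, not the Magellan benchmark. It assumes a one-dimensional domain split across workers that swap boundary values with each neighbour on every time step; the function names and that workload shape are hypothetical, and the excerpt above does not say that message volume was the specific bottleneck.

```python
# Toy model, not the Magellan benchmark: counts the messages exchanged between
# workers for two workload shapes. All function names are hypothetical.

def halo_exchange_messages(workers, steps):
    """Messages for a 1-D domain split across `workers`, where each worker
    swaps boundary values with each neighbour on every time step."""
    neighbour_pairs = max(workers - 1, 0)
    return 2 * neighbour_pairs * steps  # one message each way per pair per step


def independent_task_messages(workers, steps):
    """Independent tasks never talk to each other while computing."""
    return 0


for workers in (2, 8, 64):
    coupled = halo_exchange_messages(workers, steps=1000)
    independent = independent_task_messages(workers, steps=1000)
    print(workers, coupled, independent)
```

In this model, every message in the coupled case sits on the critical path of a time step, so any extra network latency between virtual machines is paid on each step. The independent case has no messages to delay, which is consistent with the "little or no deterioration" reported for the genomics-style calculations.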

Alex Williams’ Hadoop and Oracle Hook Up Through Cloudera and Quest Software post of 6/22/2010 to the ReadWriteCloud explains:

Cloudera is working with Quest Software to provide a connector into Oracle for Hadoop, the open-source, distributed data management platform.

The connector, called "Ora-Oop," provides a way to transfer data between Oracle and Hadoop. The service will be available in the fourth quarter for download through Cloudera and Quest.

Quest is a tools provider for Oracle while Cloudera is known as the leading commercial Hadoop provider.

Hadoop is increasingly used by companies to keep data efficiently managed over an extended, distributed environment. It is known for its robust analytics. Yahoo! uses Hadoop to manage much of its infrastructure, optimize content and place advertisements. Facebook and many of the largest technology companies use Hadoop to manage the vast amounts of data in their networks.

The enterprise faces its own issues as the social Web is making it vital for companies to deal with information such as blogs, video and other media such as audio and images. This data is not easily fitted into a relational database. That's where Hadoop comes into the picture. By allowing for the bi-directional transfer of information, the connector lets users move data between Hadoop and Oracle.

Ora-Oop will complement Sqoop, an open source tool designed to import data from relational databases into Hadoop.

tbtechnet points to two hosting company case studies in The New Money: Windows Server 2008 R2 Hyper-V and System Center Virtual Machine Manager, a 6/17/2010 TechNet post:

A couple of hosting solutions case studies went live recently and they discuss the virtues of leveraging Windows Server 2008 R2 Hyper-V and System Center Virtual Machine Manager.

The hosting companies, Decision Logic and Adhost are delivering cloud-based solutions to their customers. These hosters wanted to make sure the technologies used in the foundation of their cloud approach would keep hardware and operating costs low.

Read on here:

<Return to section navigation list>
